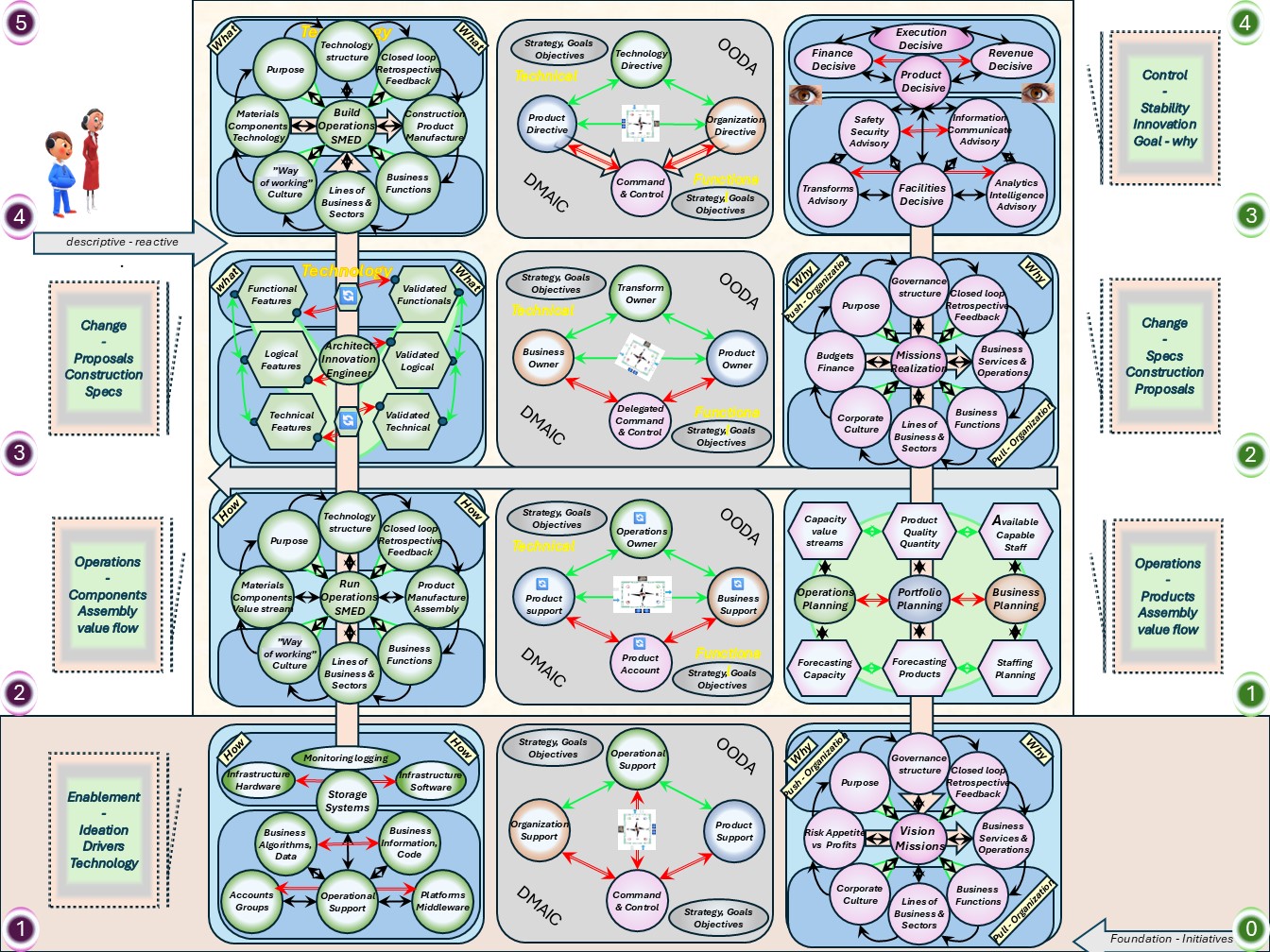

Command & Control Realisations

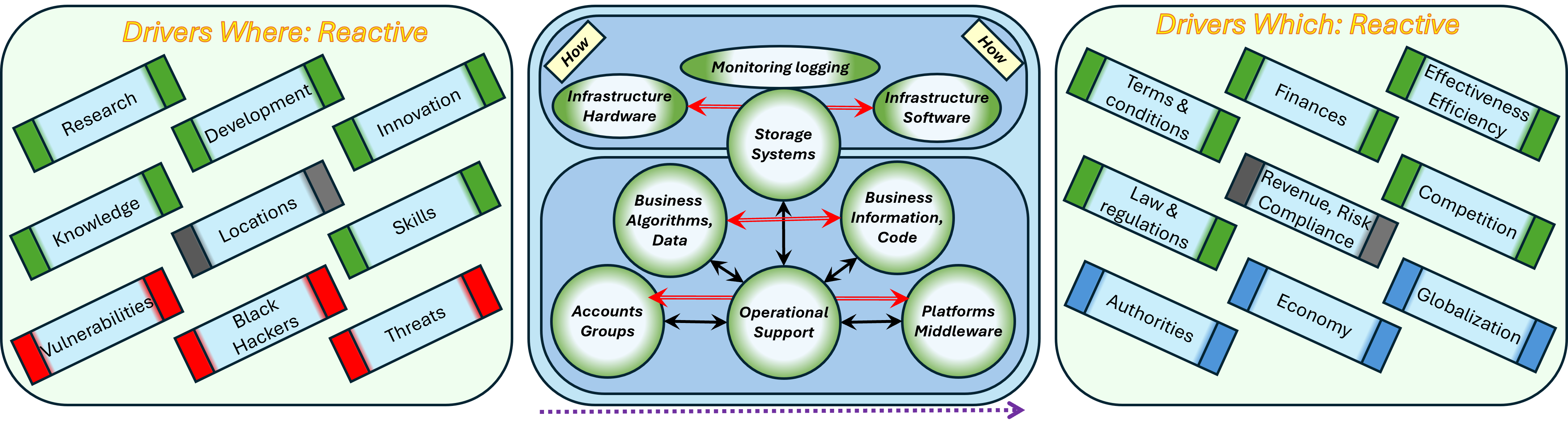

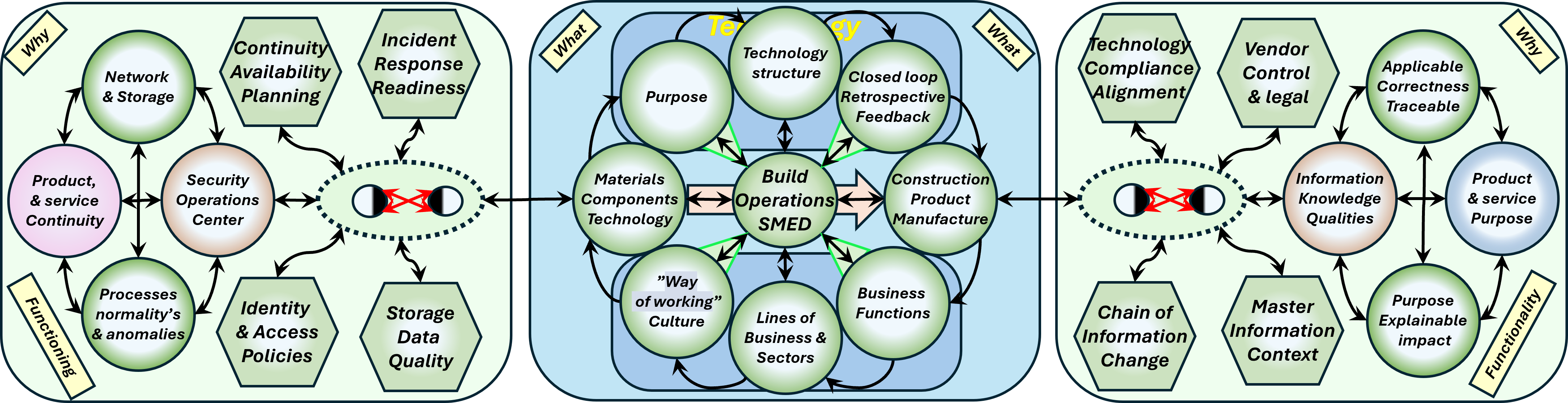

W-1 Command & Control - acting on situations

W-1.1 Contents

⚙ W-1.1.1 Global content

The data explosion. The change is the ammount we are collecting measuring processes as new information (edge).

📚 Information requests.

⚙ measurements monitoring.

🎭 Agility for changes?

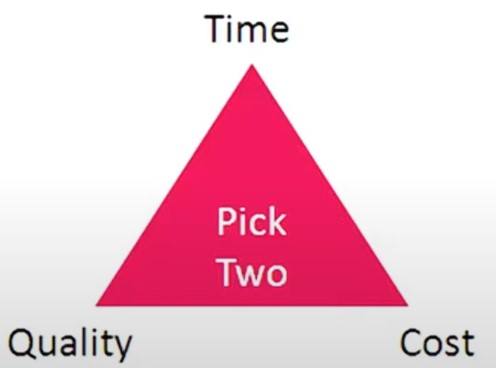

⚖ solution & performance acceptable?

🔰 Too fast ..

previous.

⚙ W-1.1.2 Local content

| Reference | Squad | Abbrevation |

| W-1 Command & Control - acting on situations | |

| W-1.1 Contents | contents | Contents |

| W-1.1.1 Global content | |

| W-1.1.2 Local content | |

| W-1.1.3 Guide reading this page | |

| W-1.1.4 Progress | |

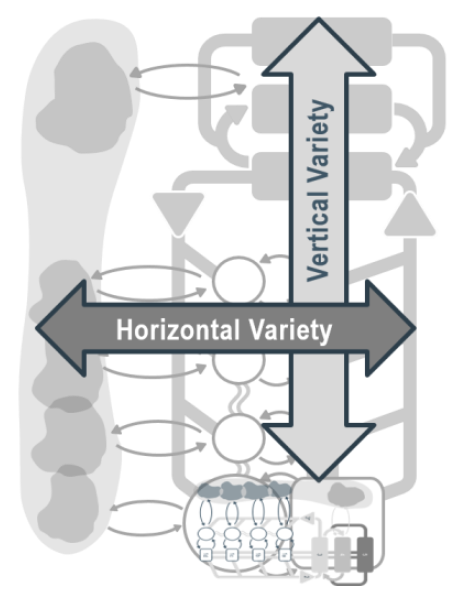

| W-1.2 Floor plans, ordered dimensions | C6autist_02 | I_Floor |

| W-1.2.1 Context information technology: master data | |

| W-1.2.2 Definitions products, goods, services: master data | |

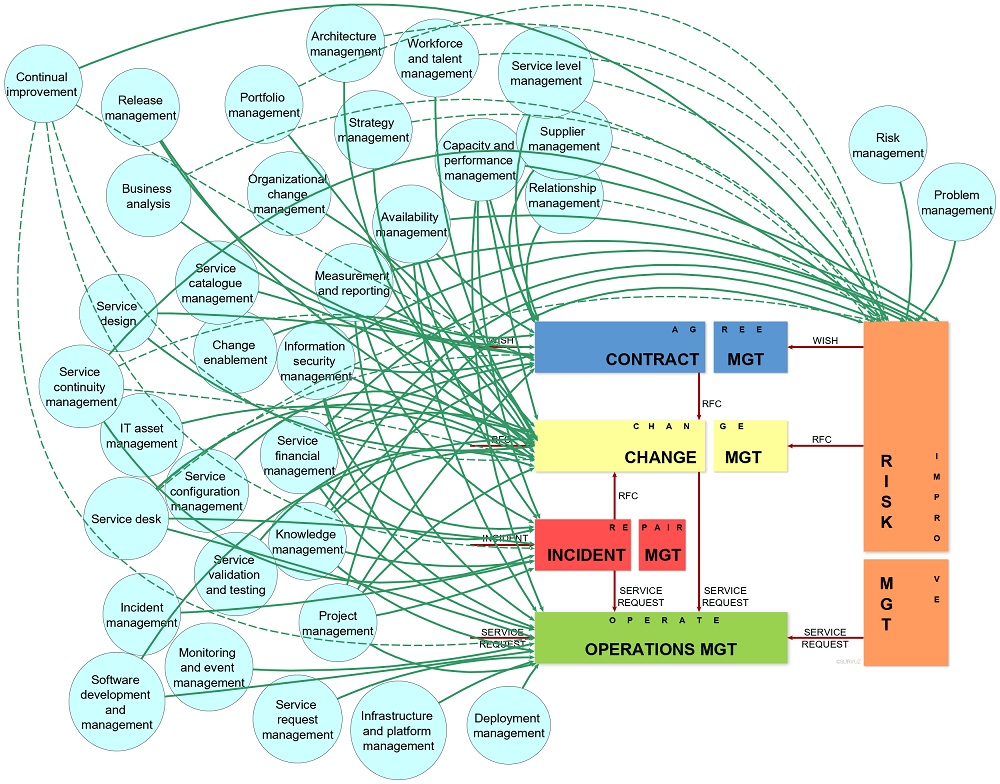

| W-1.2.3 Services orientation at information technology | |

| W-1.2.4 Roles tasks levels in Information Services | |

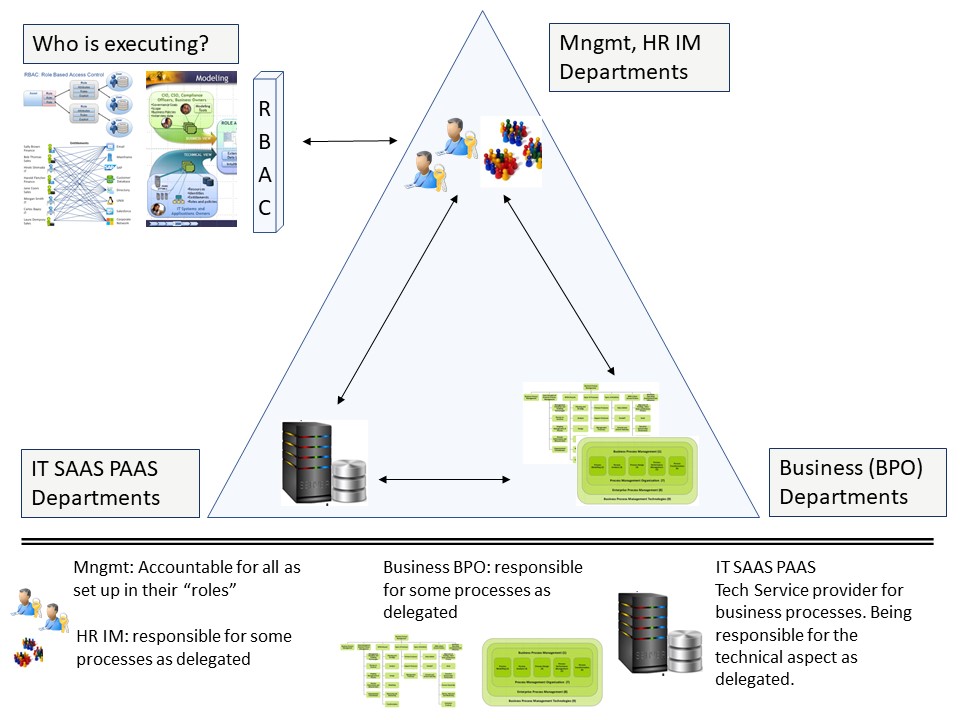

| W-1.3 Steering roles tasks | C6autist_03 | C_Tasks |

| W-1.3.1 Technical "how to" for understanding steering | |

| W-1.3.2 The what to start operational steering | |

| W-1.3.3 Understanding the data flow, data lineage | |

| W-1.3.4 Roles tasks levels supporting Information Services | |

| W-1.4 Culture building people | C6autist_04 | H_Culture |

| W-1.4.1 Project management, the change challenges | |

| W-1.4.2 Project into Programme into Portfolio (3P) | |

| W-1.4.3 Managing flow in activities, portfolios | |

| W-1.4.4 CPO, CPE, Managing living viable systems | |

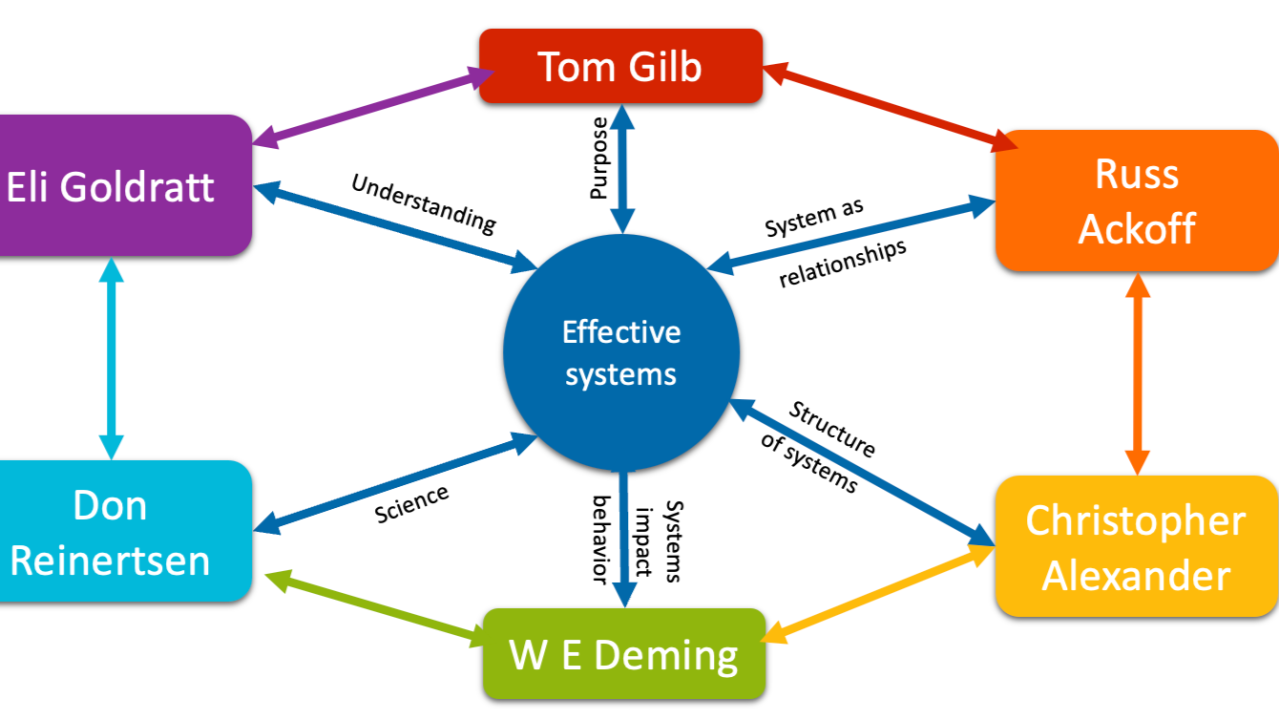

| W-1.5 Sound underpinned theory, foundation | C6autist_05 | C_Theory |

| W-1.5.1 Chosen colours and shapes for the floor plans | |

| W-1.5.2 The viable system, conscious decisions | |

| W-1.5.3 The viable system, autonomic technology | |

| W-1.5.4 Mediation: Technical autonomy vs organisational control | |

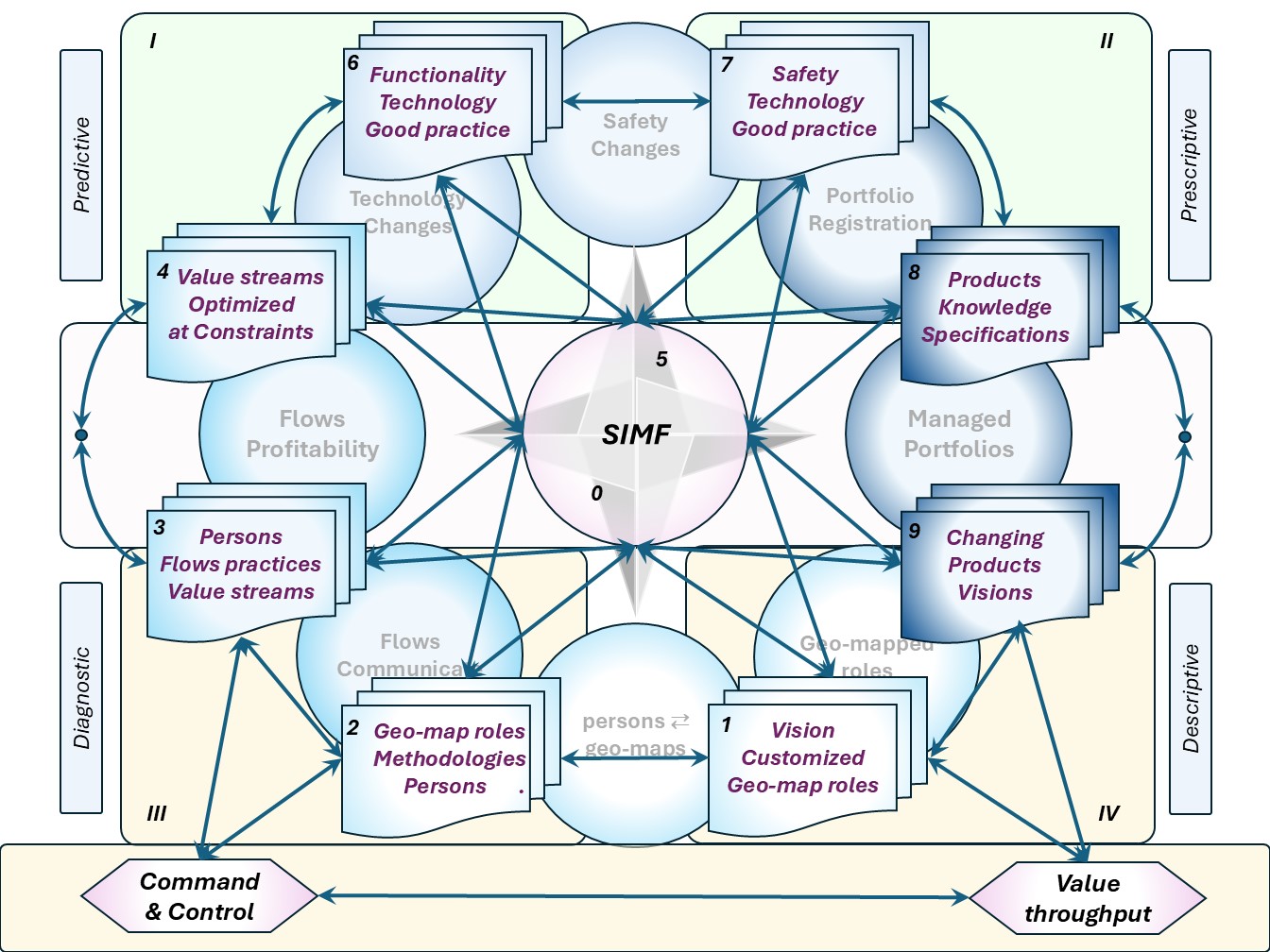

| W-1.6 Maturity 0: Strategy impact understood | C6autist_06 | CMM0-SIM |

| W-1.6.1 Determining the position, the situation | |

| W-1.6.2 Individual logical irrational together | |

| W-1.6.3 Value stream VaSM vs Viable system ViSM | |

| W-1.6.4 Flexibility in architecture, engineering, design | |

| W-2 Command & Control working on gaps for solutions | |

| W-2.1 Understanding ICT Service Gap types | C6authow_01 | PT_GAP |

| W-2.2.1 Understanding the technical design processes | |

| W-2.2.2 Understanding technical performance choices | |

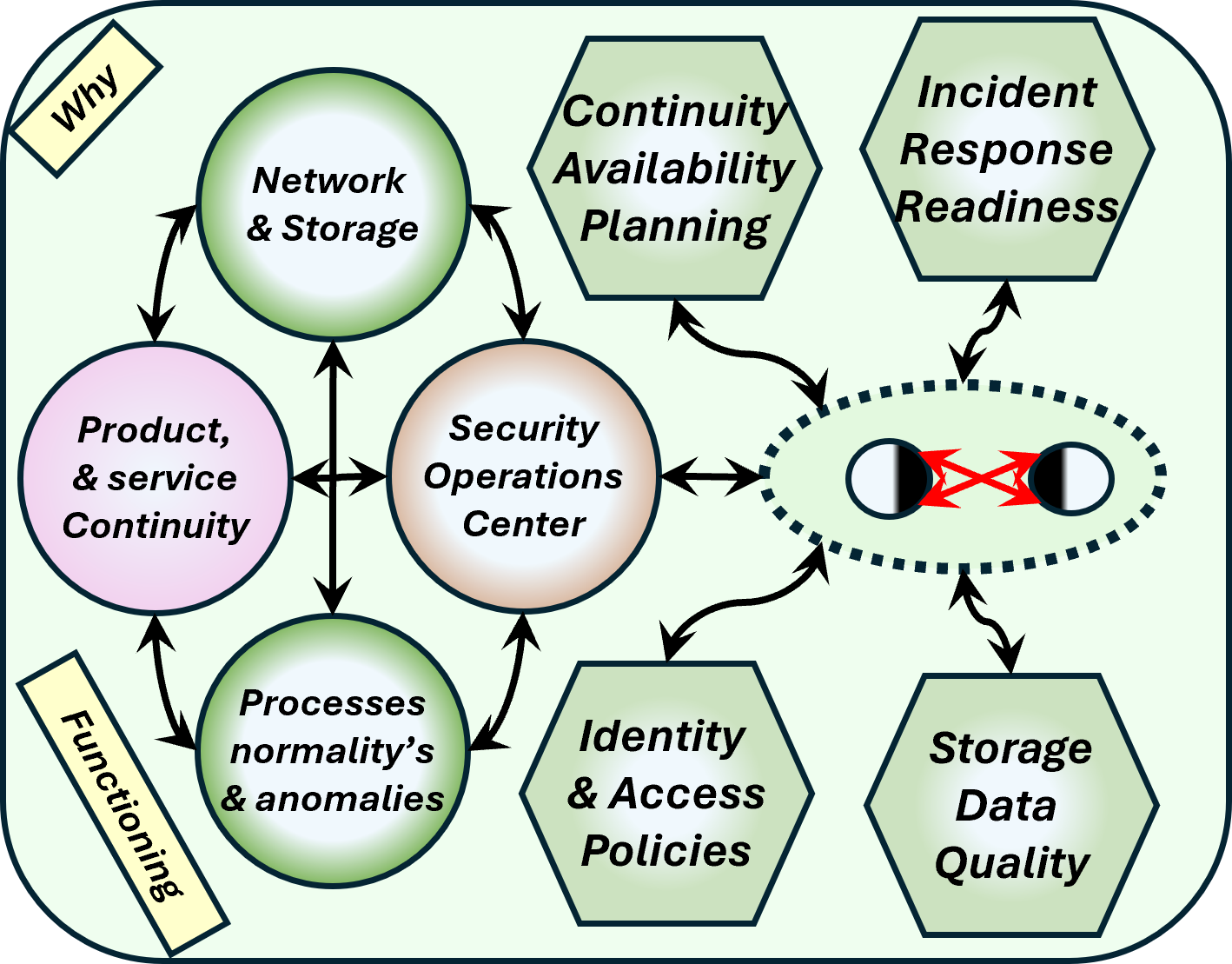

| W-2.2.3 Data governance, knowing wat is going on | |

| W-2.2.4 Data governance, knowing who is acting at what | |

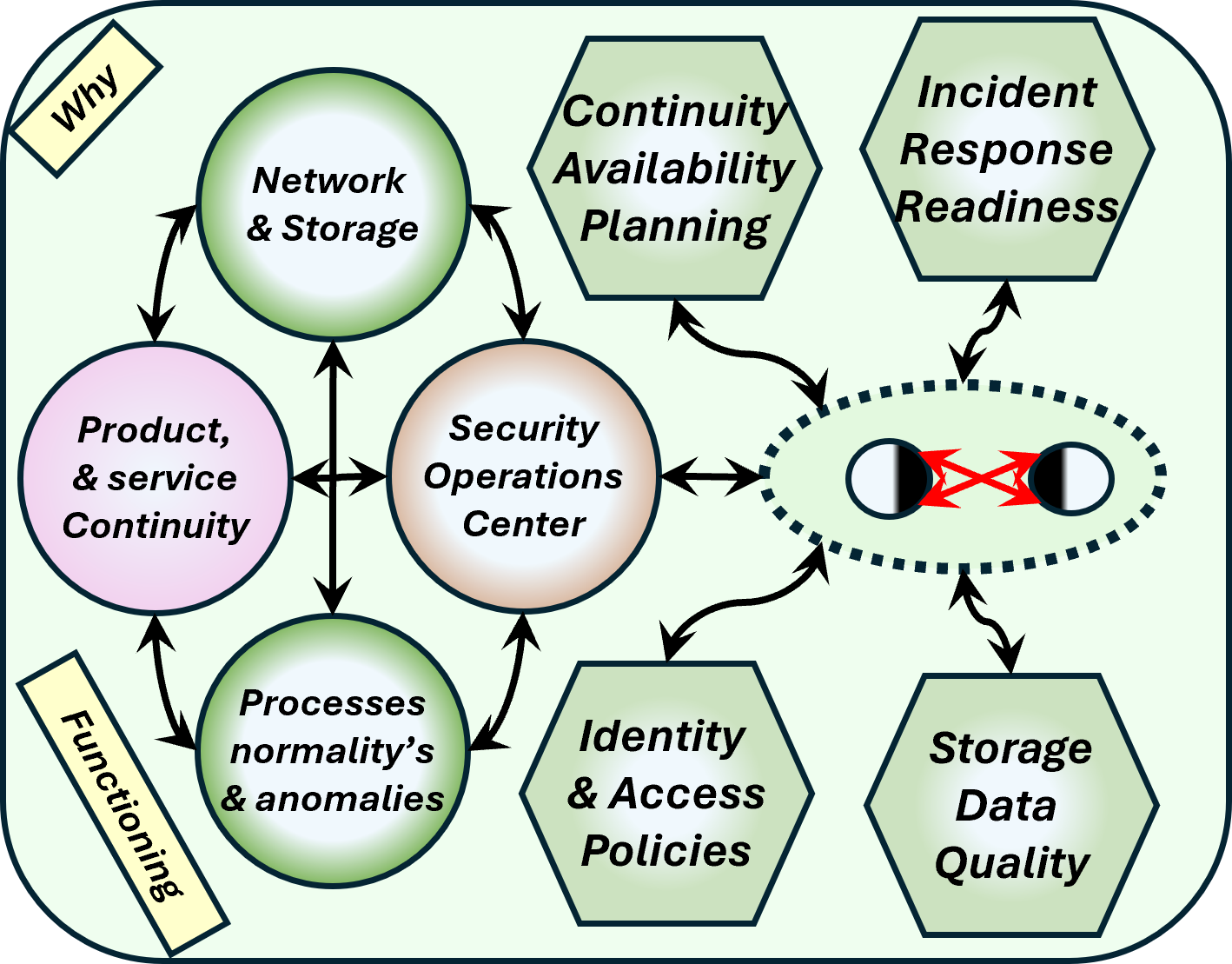

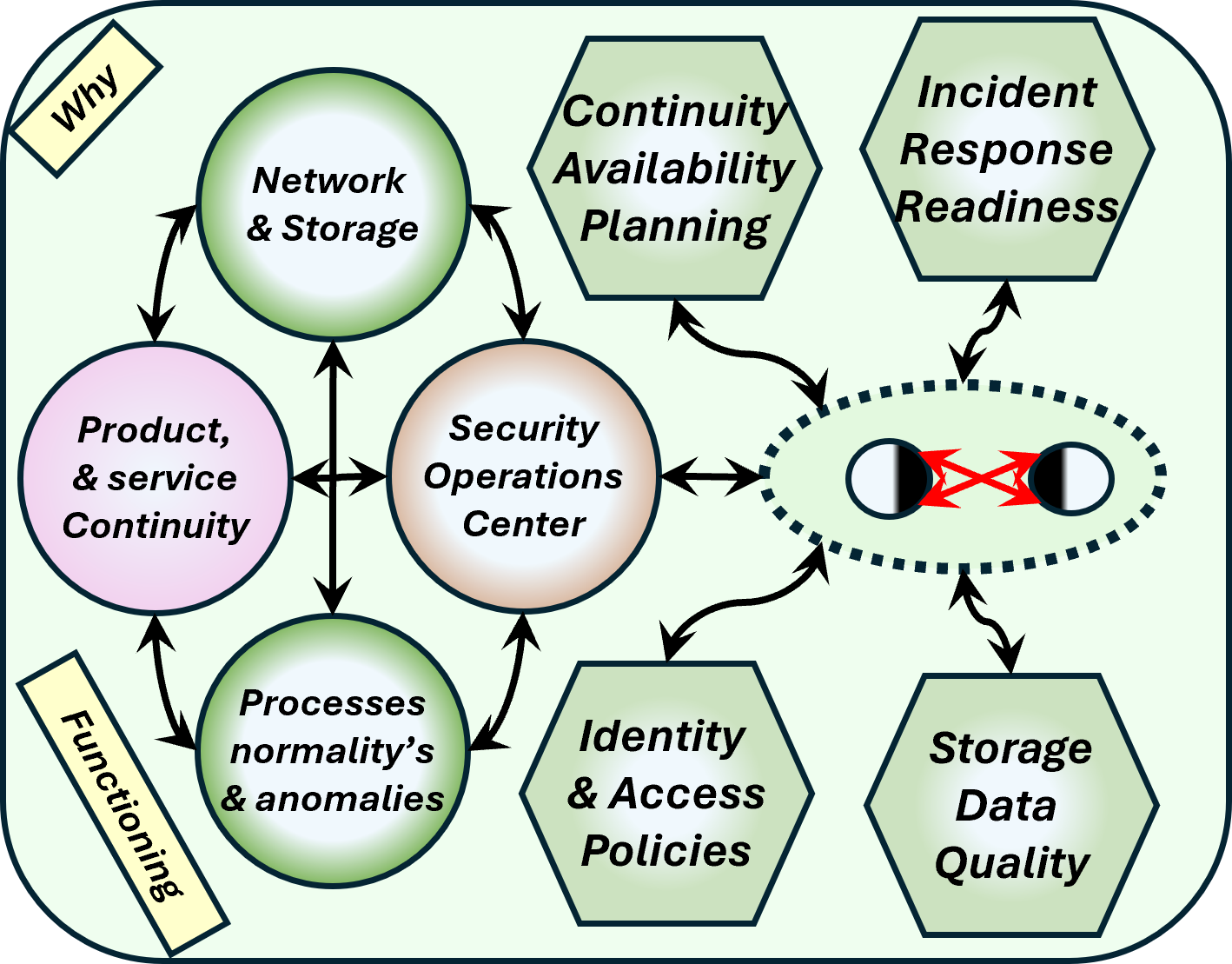

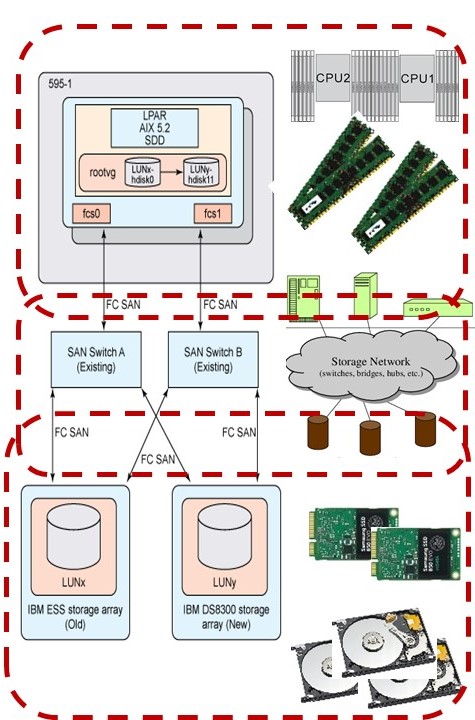

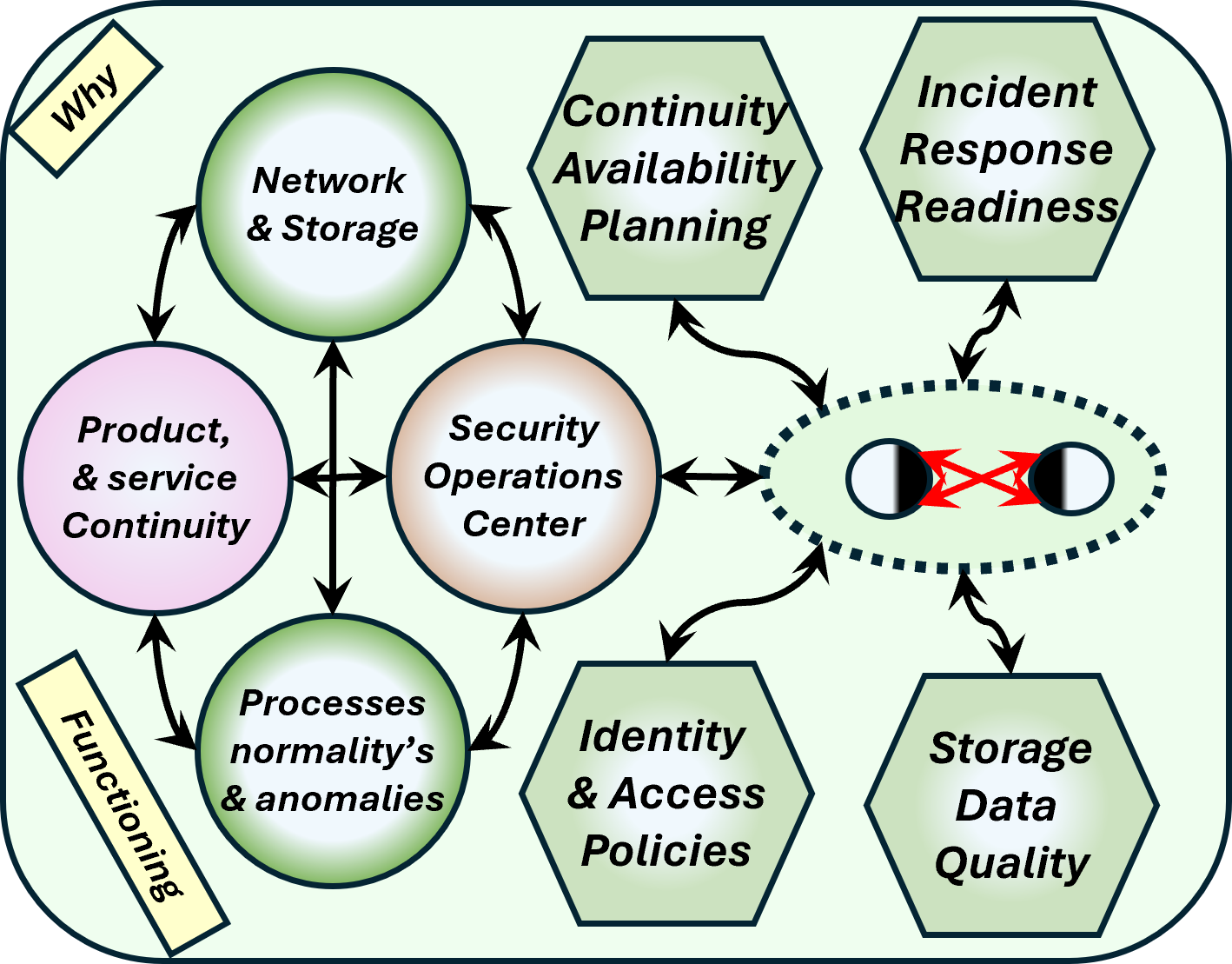

| W-2.2 Floor plans, understanding ICT operating value streams | C6authow_02 | ✅-TechSec |

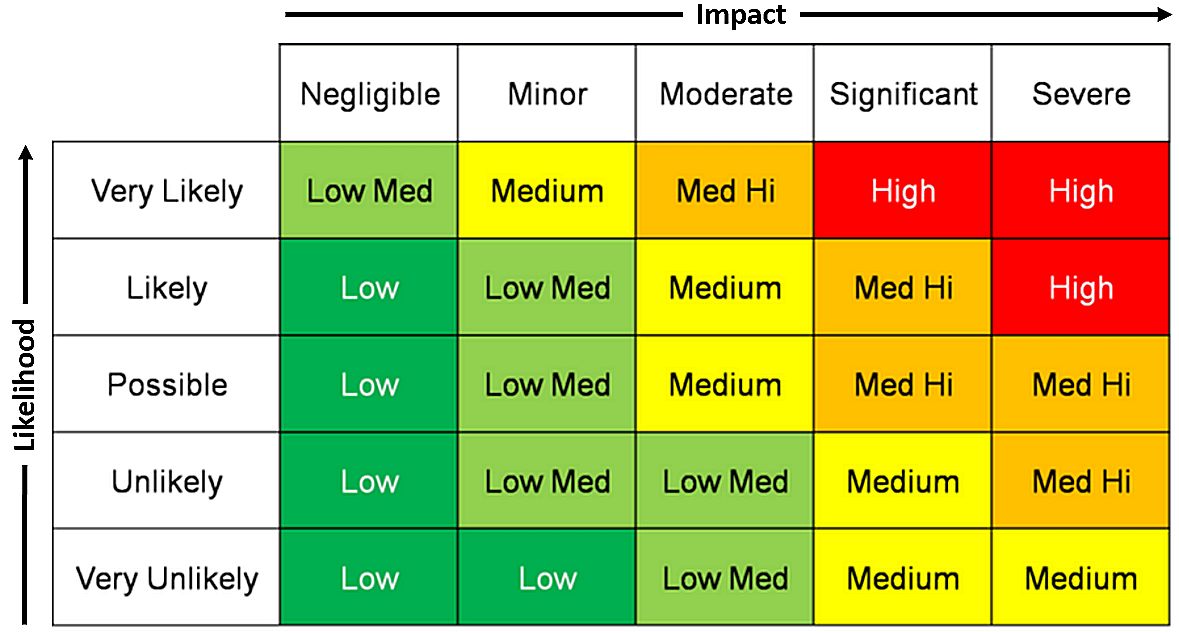

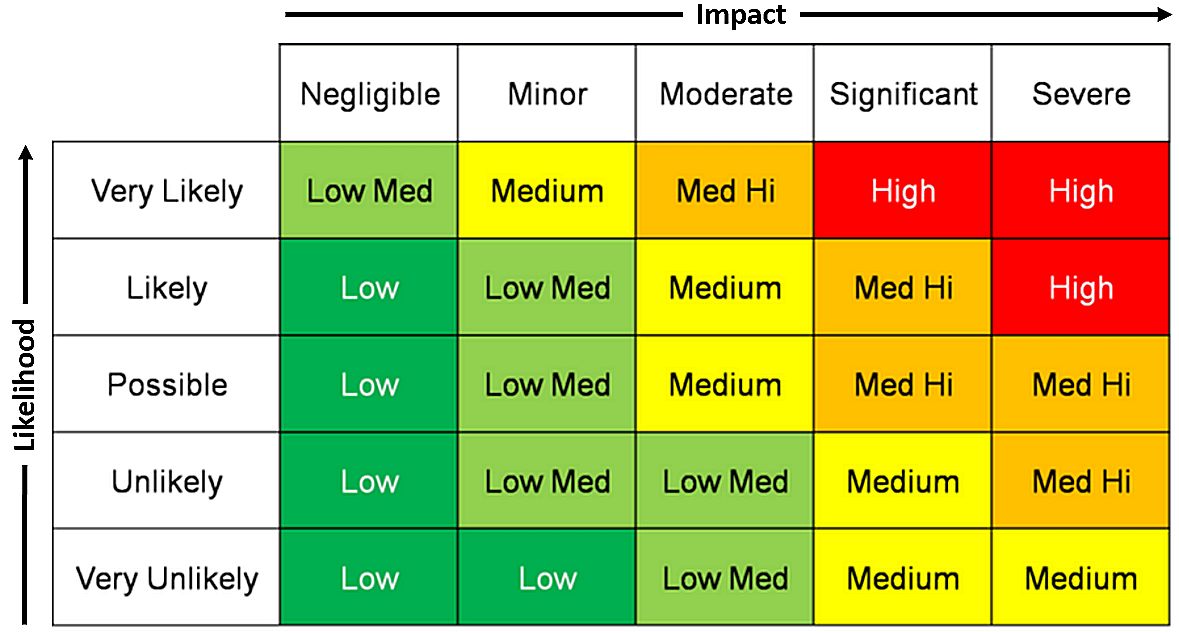

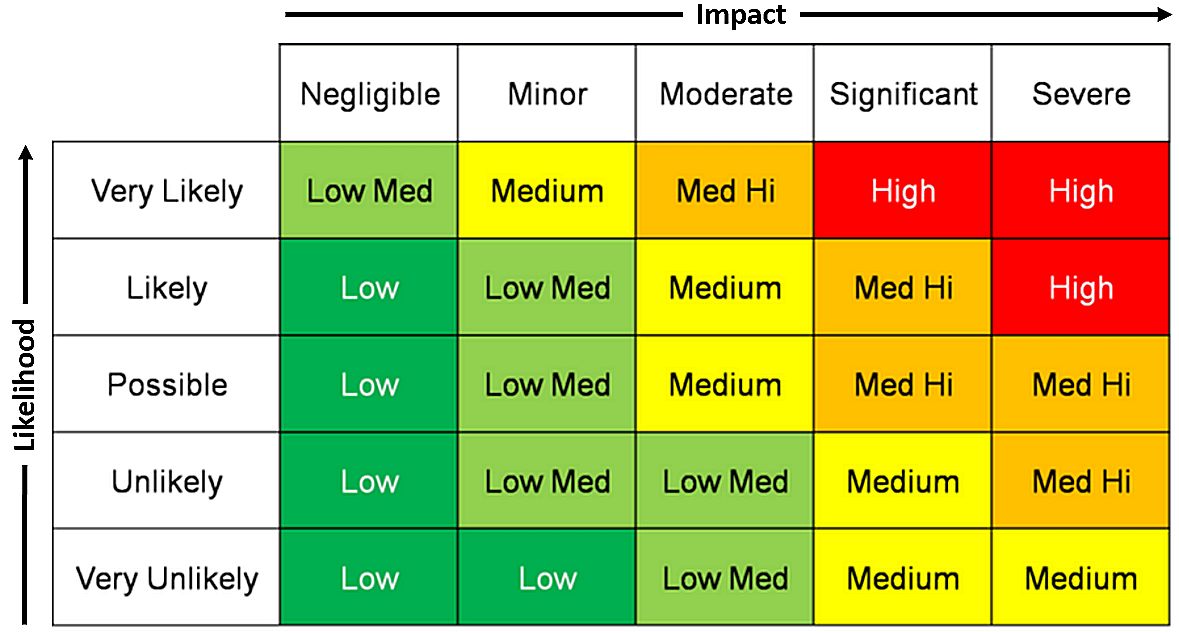

| W-2.2.1 Technology quality & risk rating | |

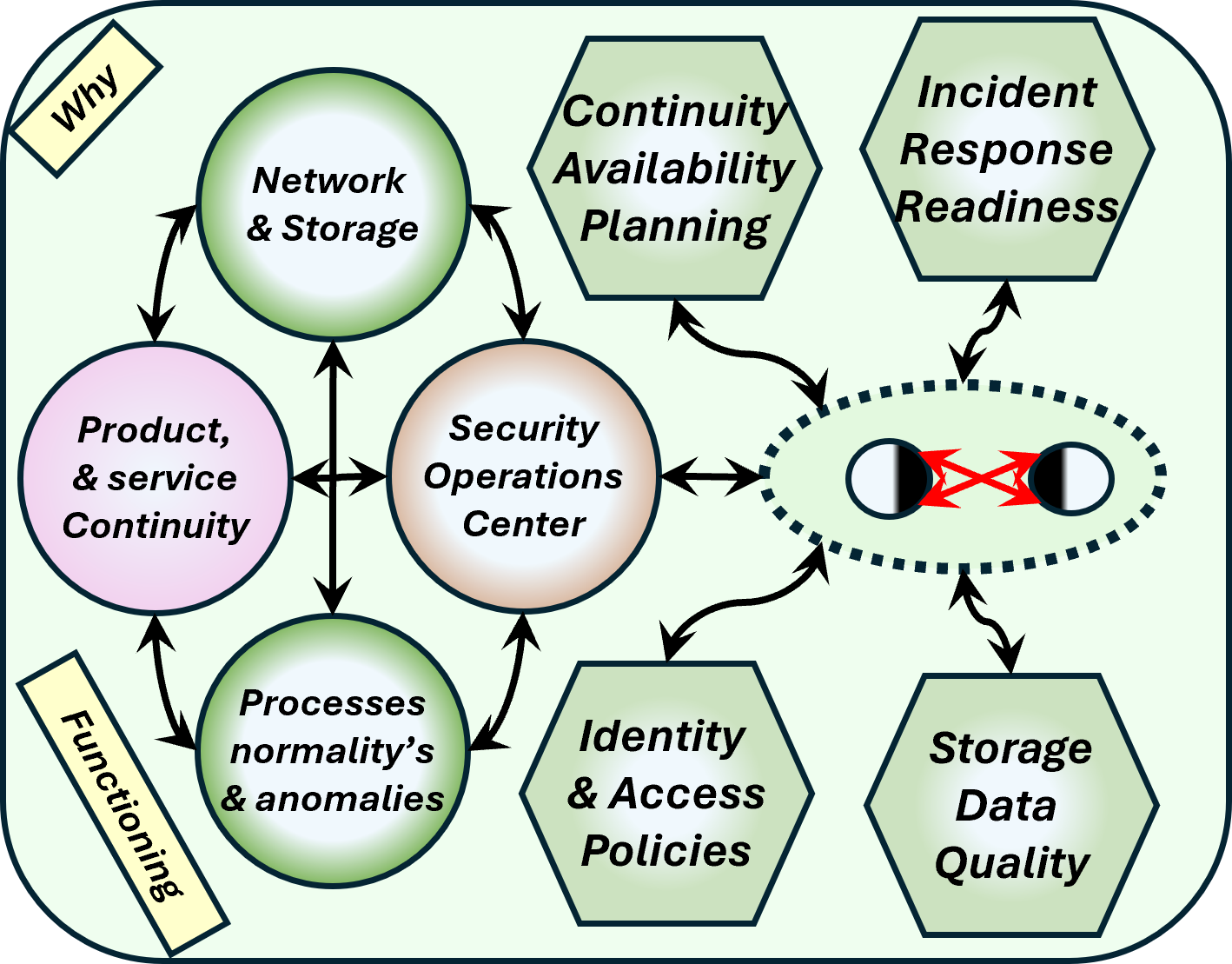

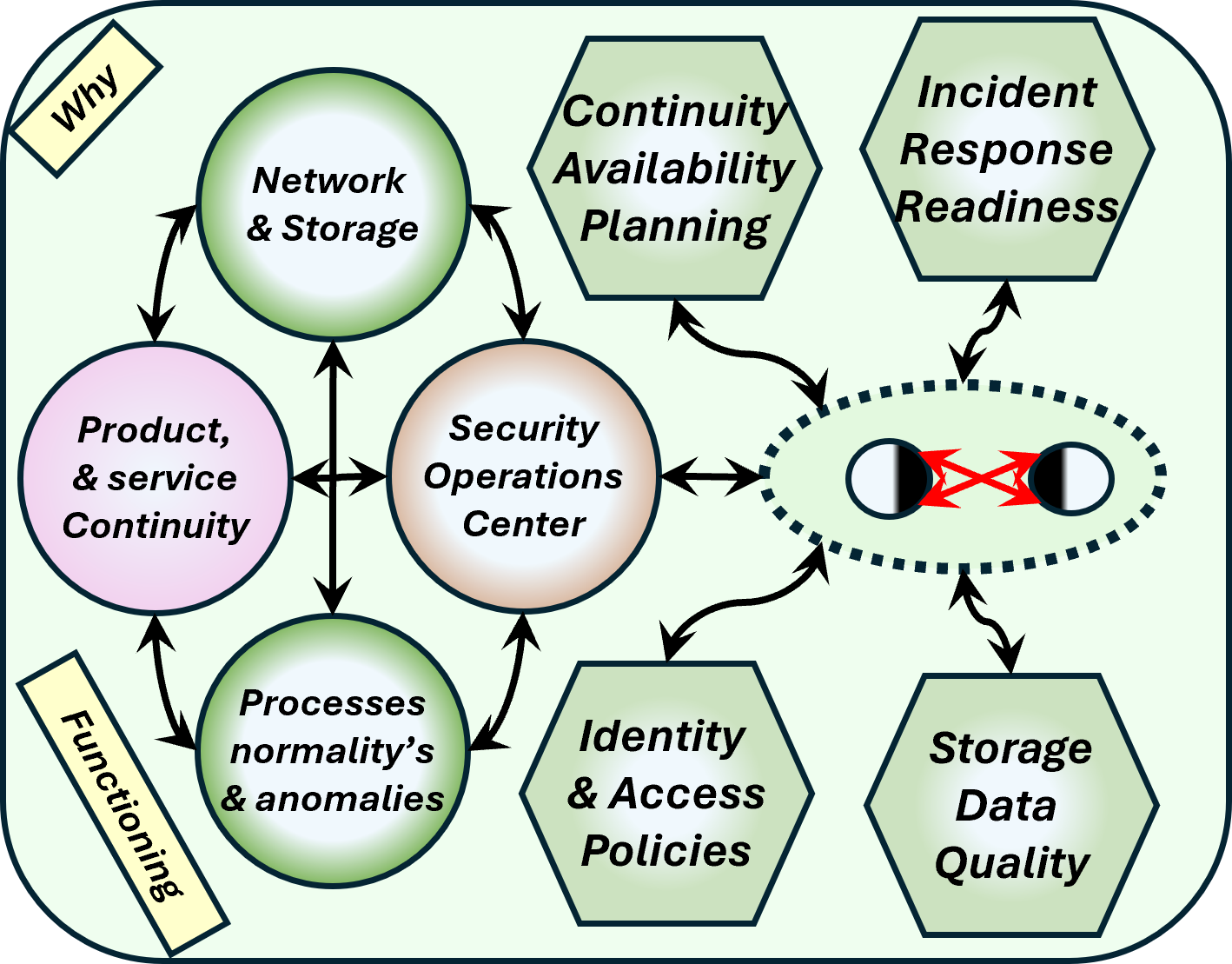

| W-2.2.2 Information process: Identities, Access, incident response | |

| W-2.2.3 Safety Monitoring for anamolies by open source issues | |

| W-2.2.4 Safety Monitoring for anamolies known internal processes | |

| W-2.3 Information systems: Actuators - Steers | C6authow_03 | C_Steer |

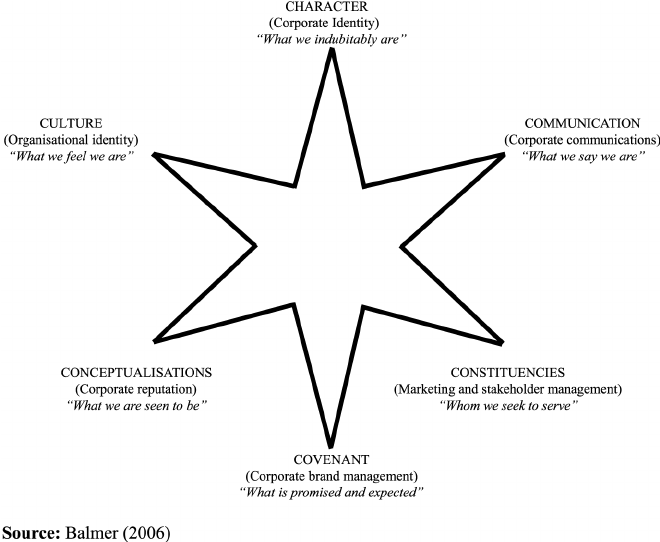

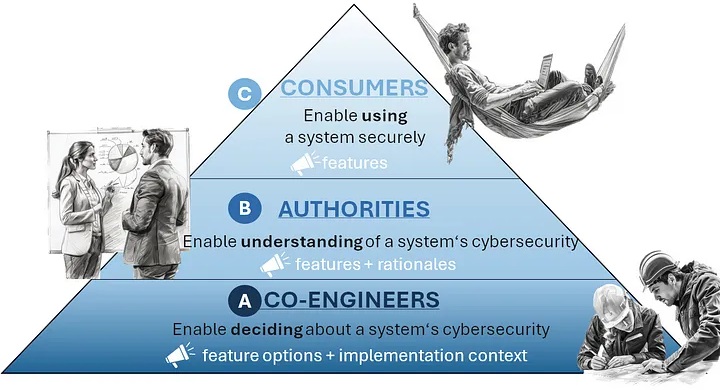

| W-2.3.1 Communicating a shared value, mission for understanding | |

| W-2.3.2 Communications, variety & velocity and regulators | |

| W-2.3.3 Communications, variety & velocity within systems | |

| W-2.3.4 Product vs Service provider & Top-down vs Bottom-up | |

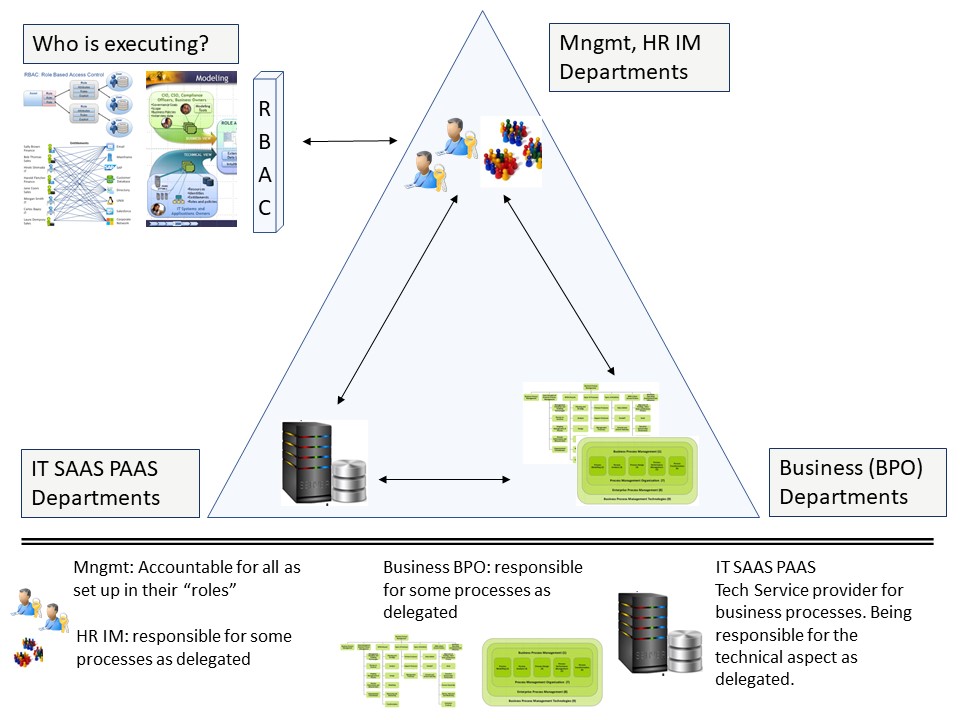

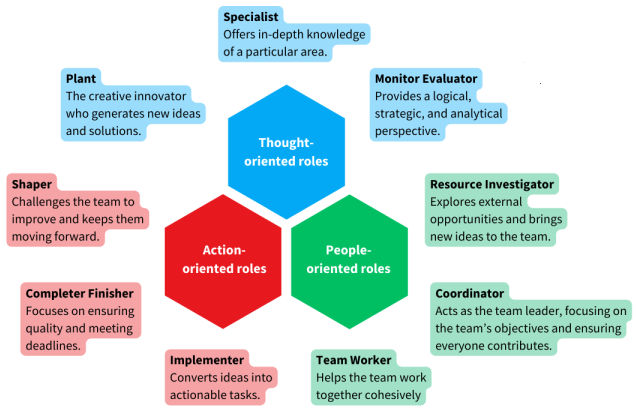

| W-2.4 Roles tasks in the organisation | C6authow_04 | C&C_PT |

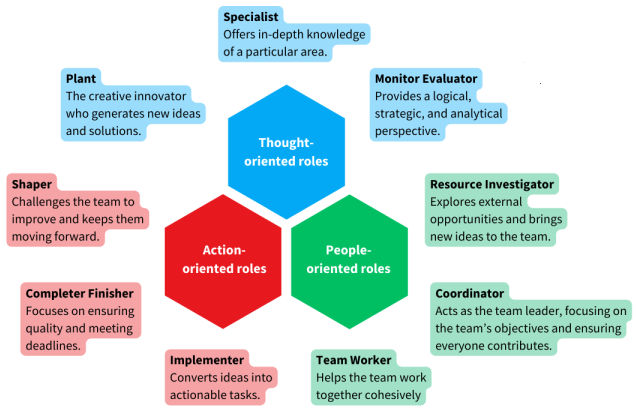

| W-2.4.1 Team member and organic system roles | |

| W-2.4.2 The ignored Engineer executing everything | |

| W-2.4.3 Mediation technology: functionality - functioning | |

| W-2.4.4 Interactions in the organic viable system | |

| W-2.5 Sound underpinned anatomy of a viable system | C6authow_05 | ✅-Morphing |

| W-2.5.1 Fundaments of activities processes (0-1-2, 4-5) | |

| W-2.5.2 Operational deliveries, functioning portfolio (1-2) | |

| W-2.5.3 Changing products, services, functionality portfolio (2-3) | |

| W-2.5.4 Autonomic compliancy control & conscious decisions (3-4) | |

| W-2.6 Maturity 3: Enable strategy to operations | C6authow_06 | CMM3-SIM |

| W-2.6.1 SIMF-VSM Safety with Technology at Technology | |

| W-2.6.2 SIMF-VSM Uncertainties imperfections at processes, persons | |

| W-2.6.3 Dichotomy: generic approaches vs local in house | |

| W-2.6.4 SIMF-VSM Multidemensional perspectives & revised context | |

| W-3 Command & Control planning for innovations | |

| W-3.1 Information processing in the information age | C6autsll_01 | I_Vuca |

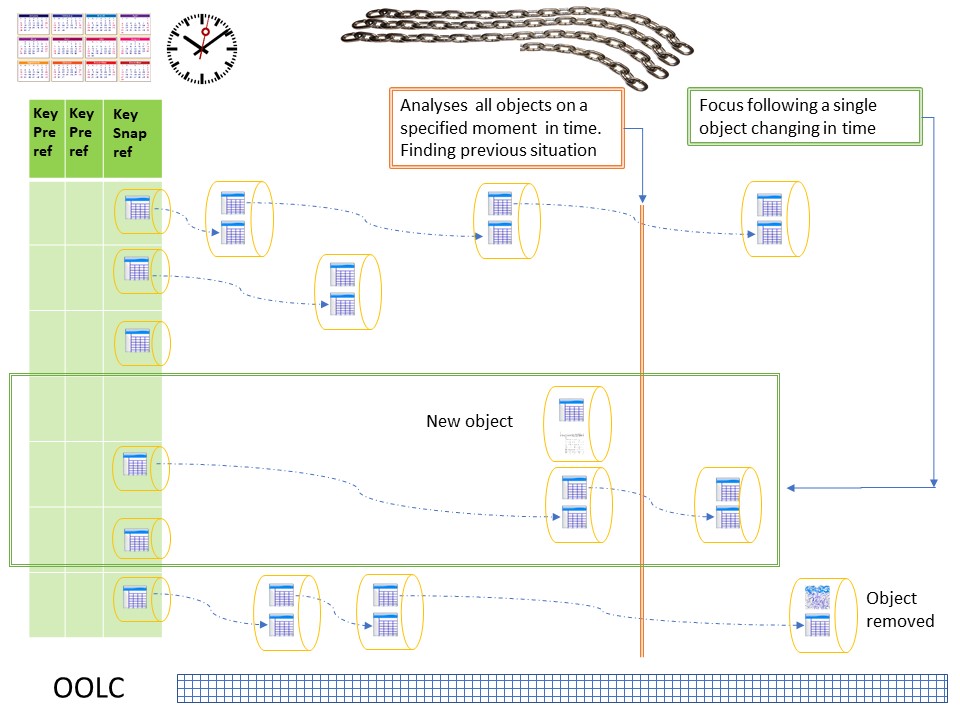

| W-3.1.1 Master data, understanding information | |

| W-3.1.2 Volatile master metadata and information chains | |

| W-3.1.3 Strategy conflicts: safe platforms, business applications | |

| W-3.1.4 Strategy conflicts solution: change to systems thinking | |

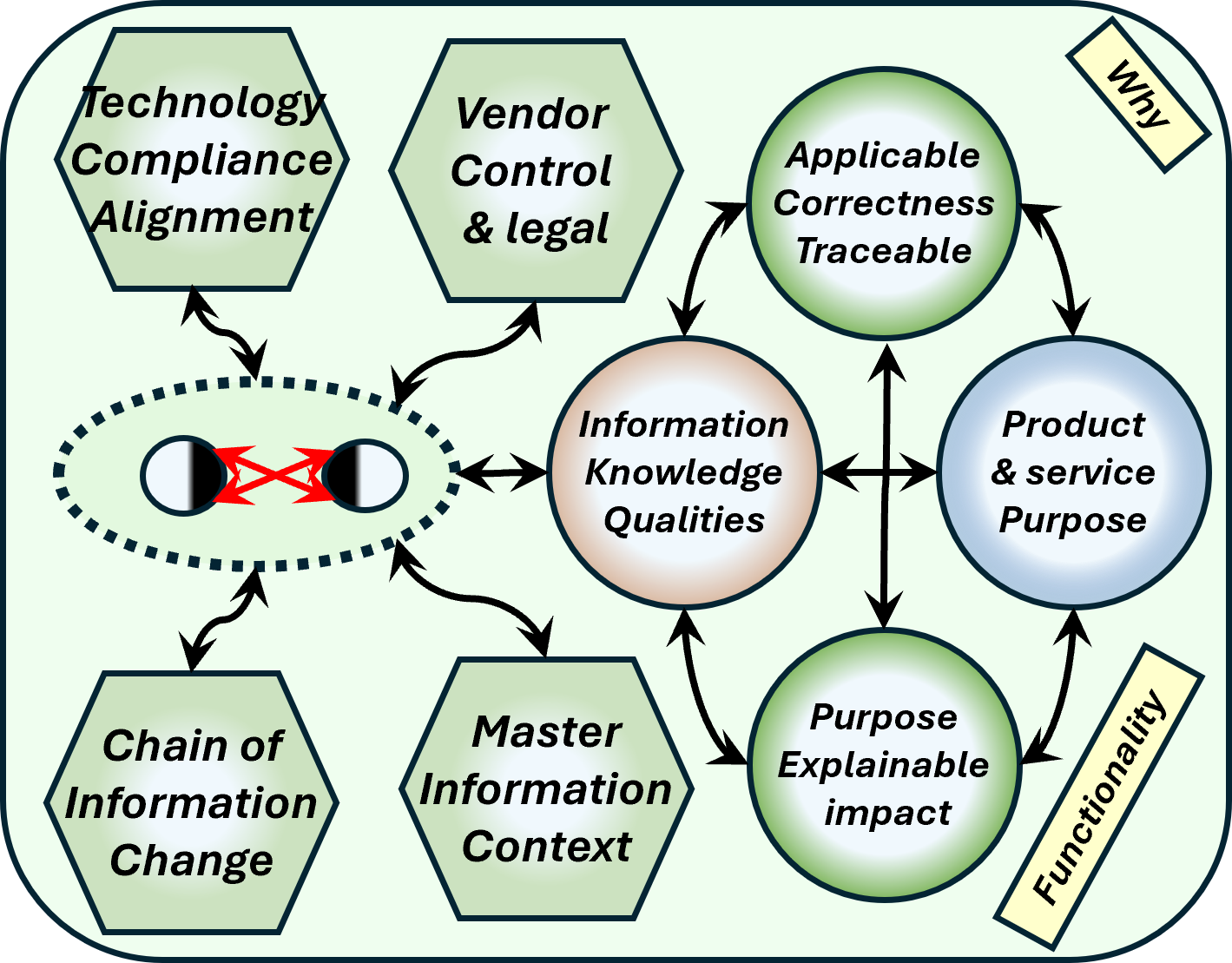

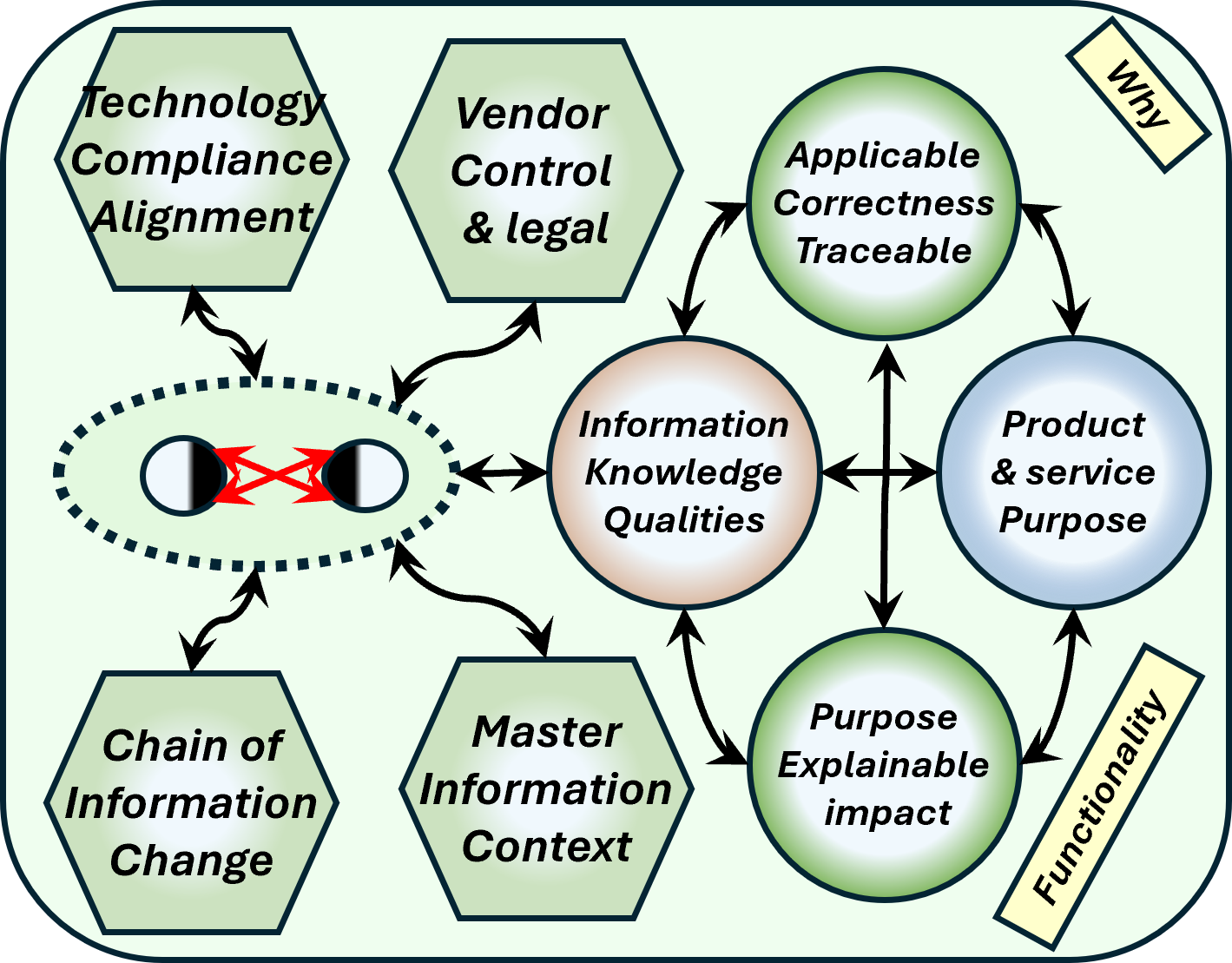

| W-3.2 Floor plans, optimizing value streams | C6autsll_02 | ✅-AppSec |

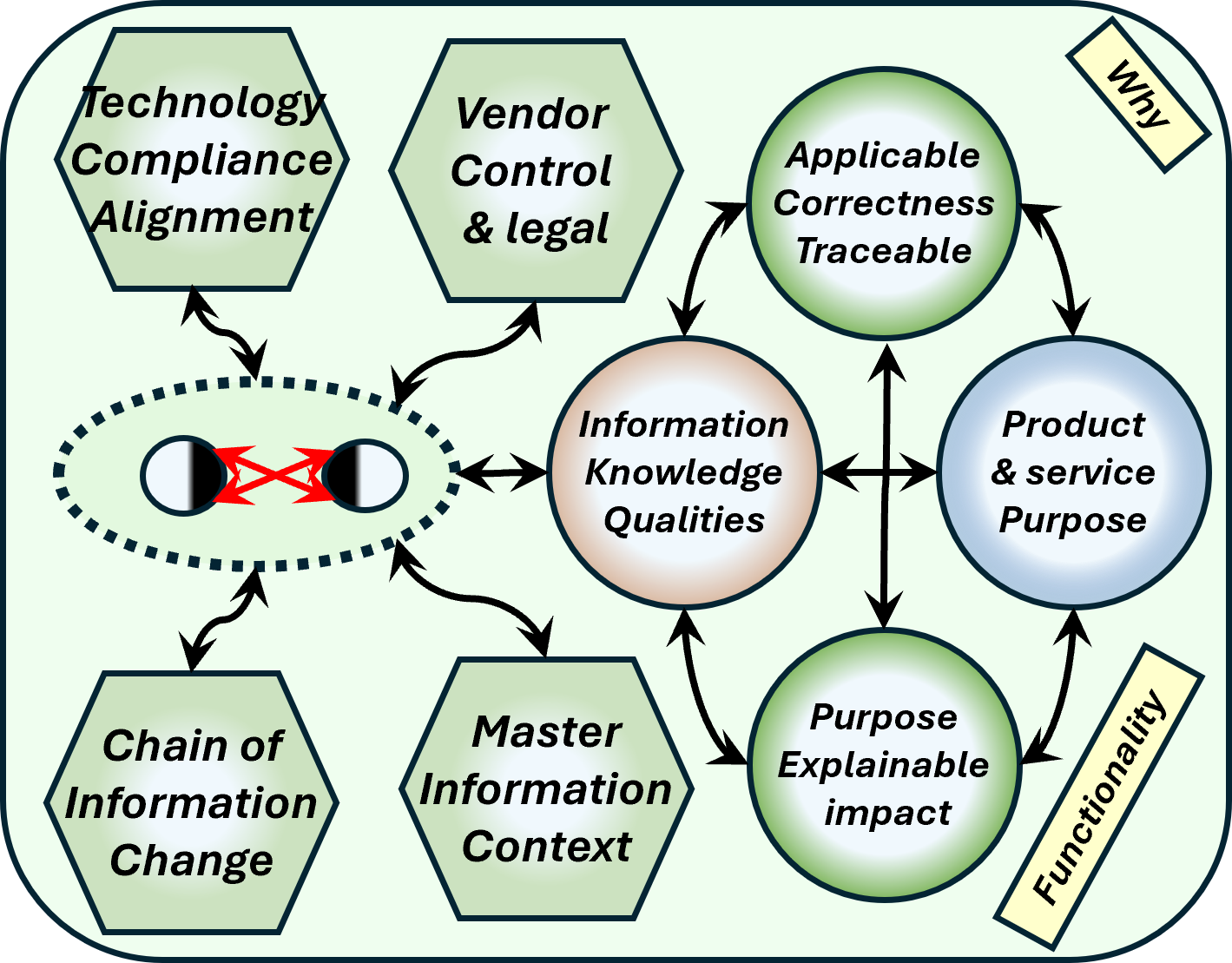

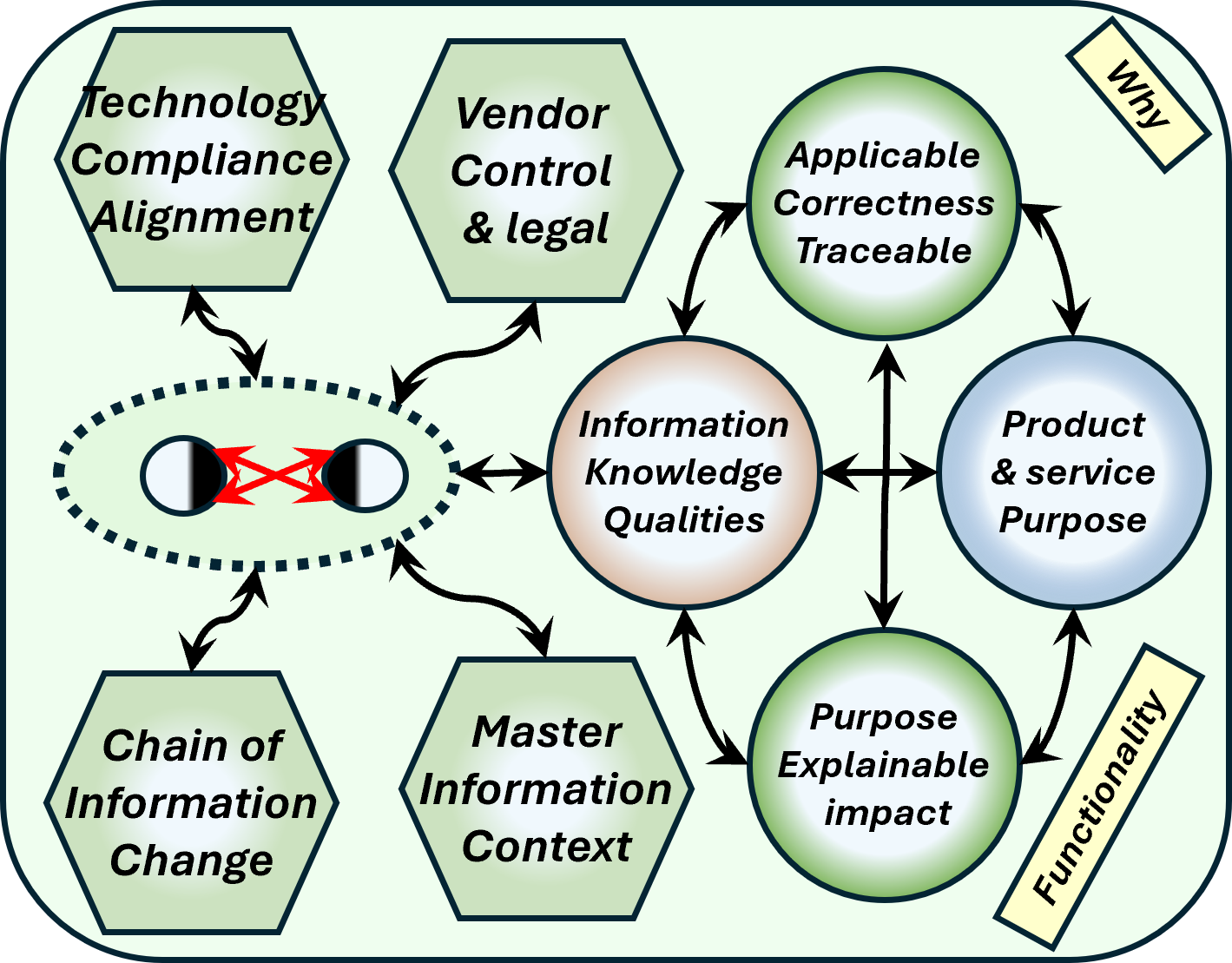

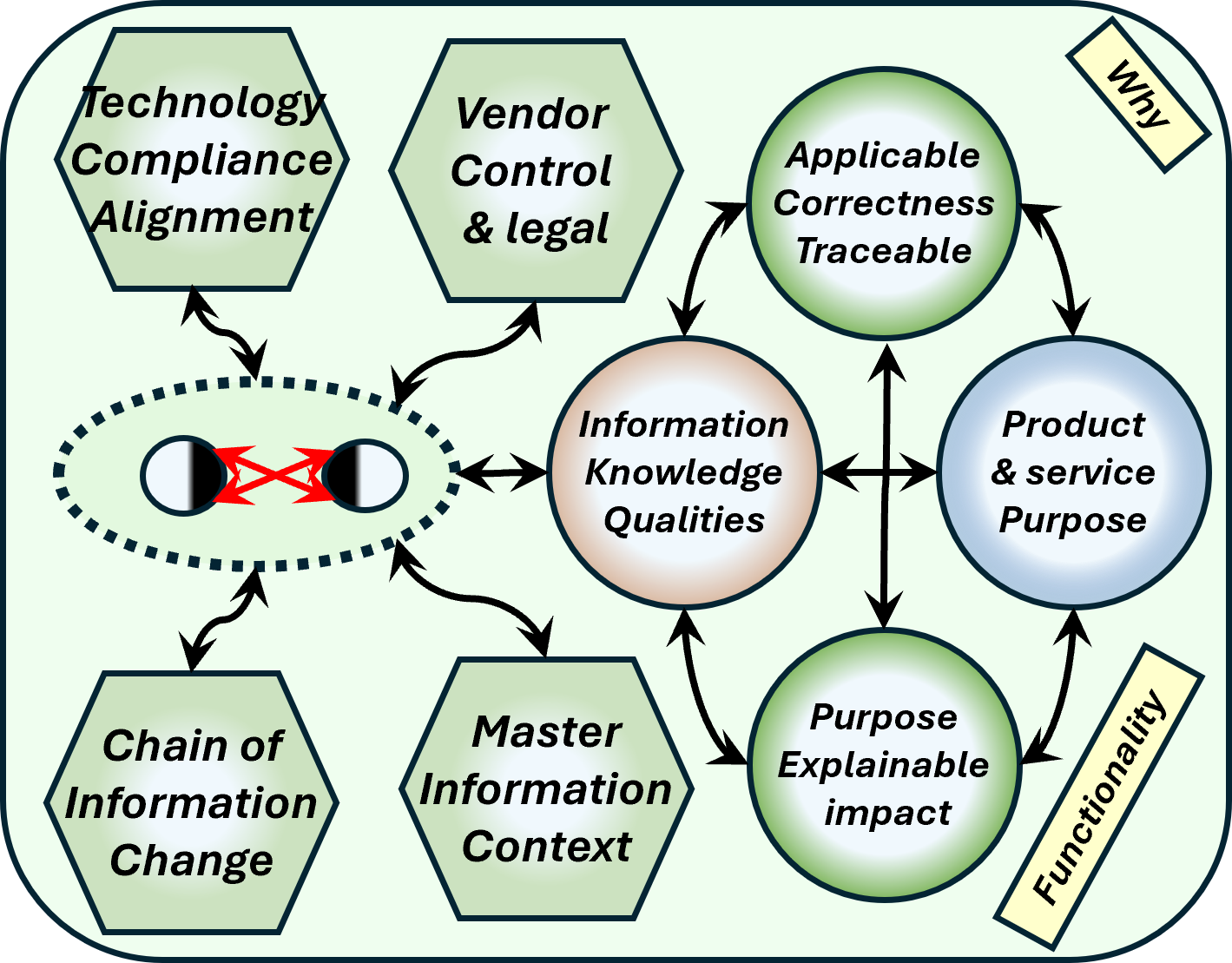

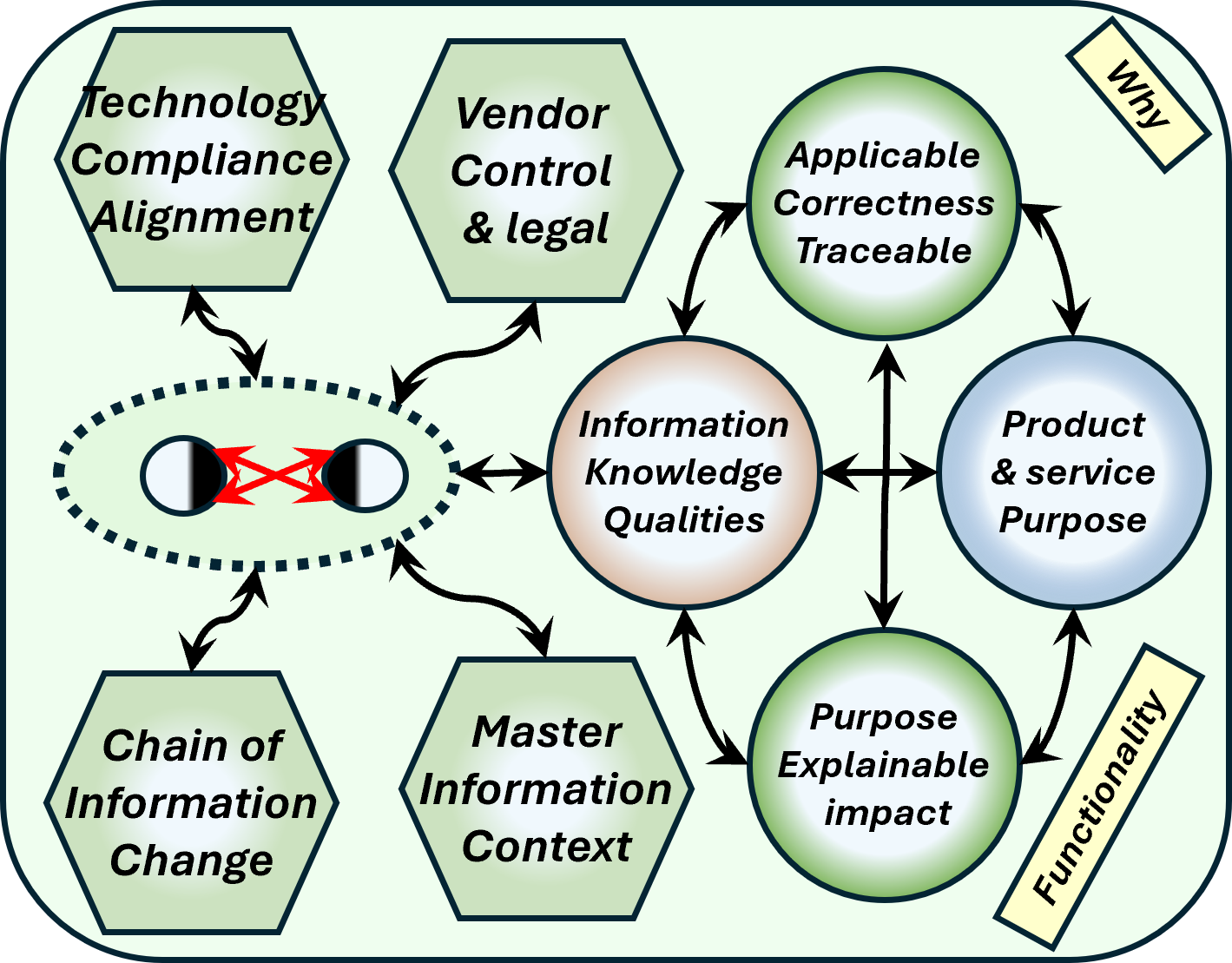

| W-3.2.1 Information quality & risk rating | |

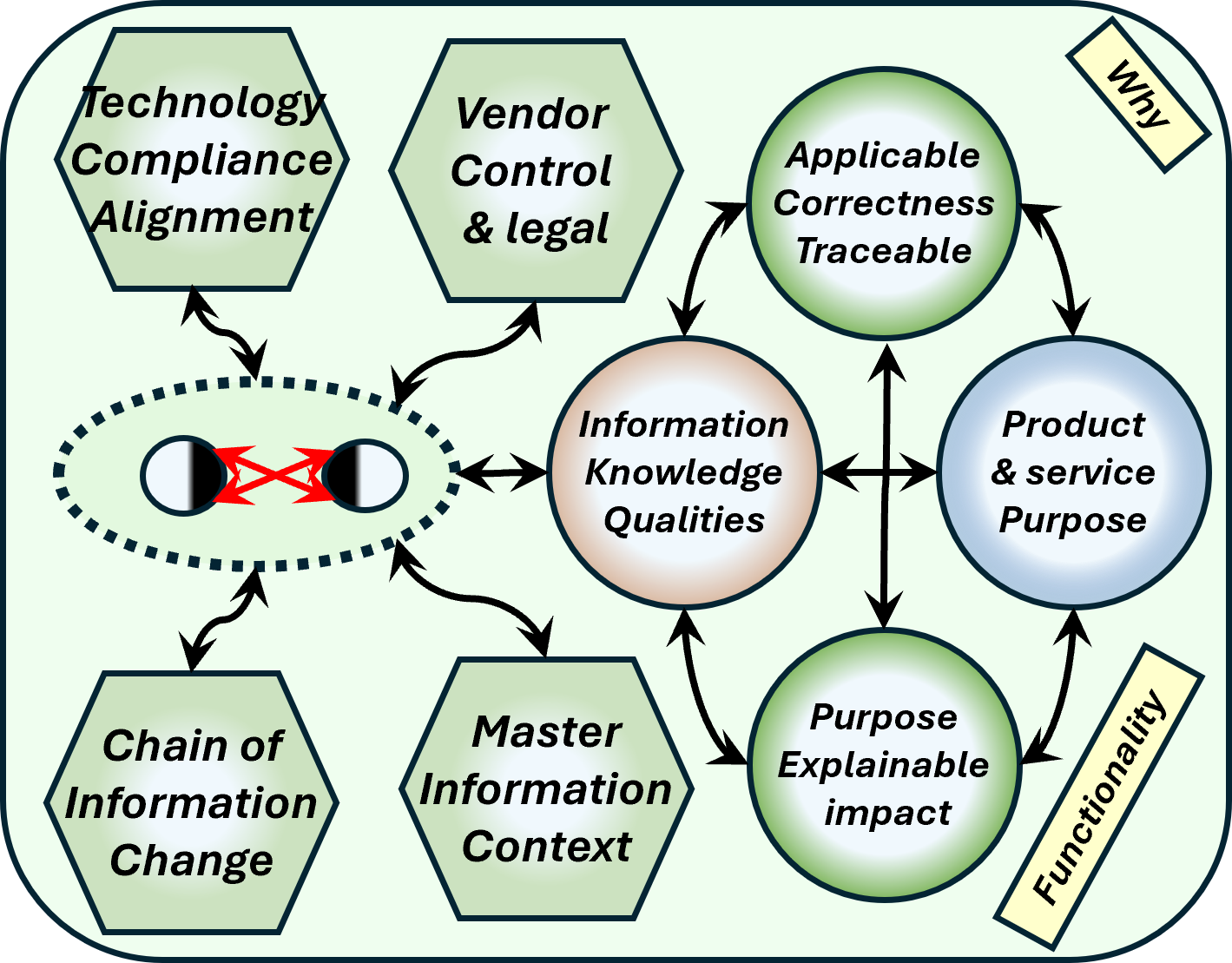

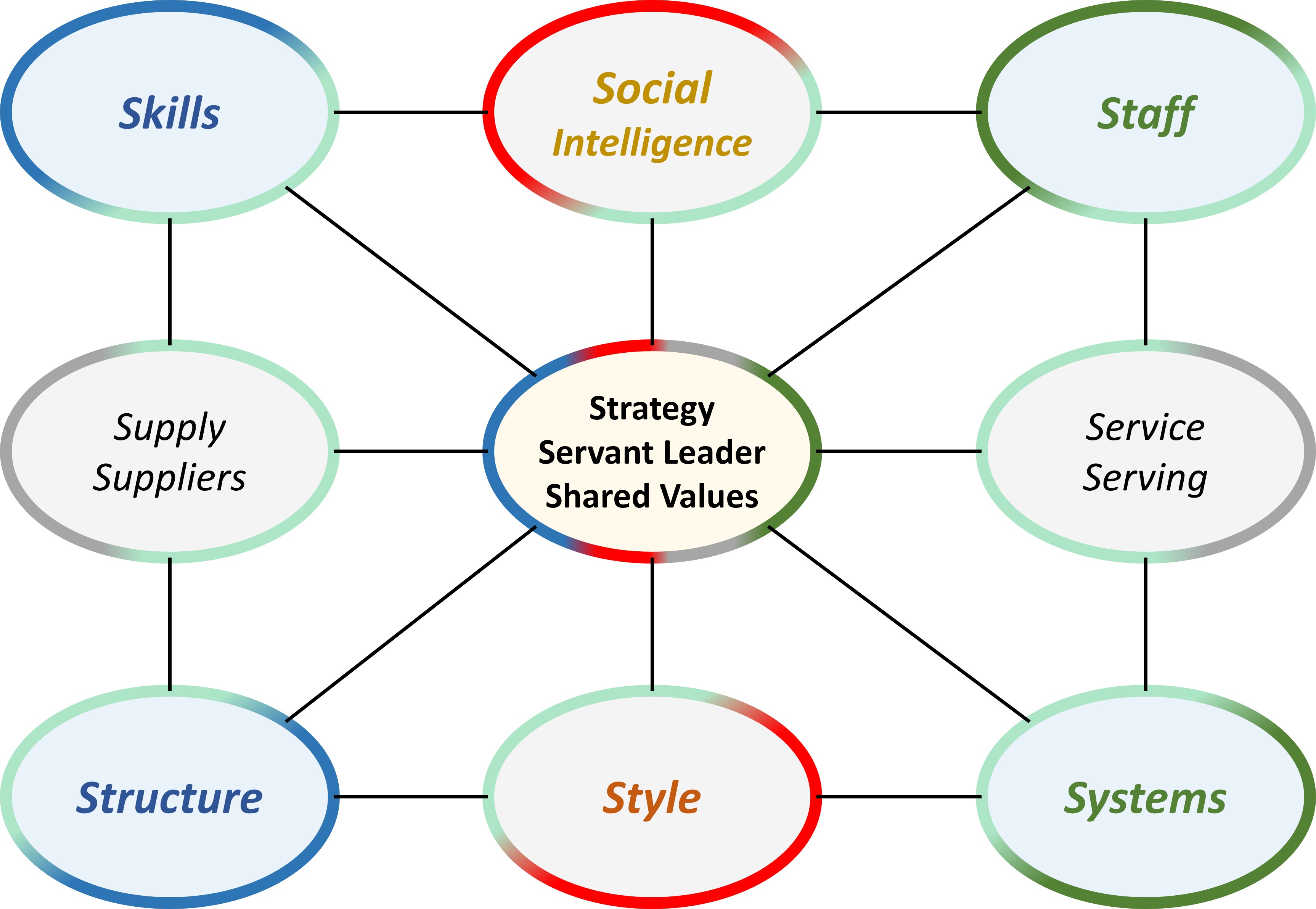

| W-3.2.2 Chain of Information change & Master data Context | |

| W-3.2.3 Information knowledge qualities by product, service | |

| W-3.2.4 Information impact by product, service | |

| W-3.3 Why to steer in the information landscape | C6autsll_03 | C_Bani |

| W-3.3.1 Understanding information: data, processes, actions, results | |

| W-3.3.2 Understanding goals with needed associated change | |

| W-3.3.3 Activities in the organisation for the organisation | |

| W-3.3.4 6C-Control is not specific it is very generic | |

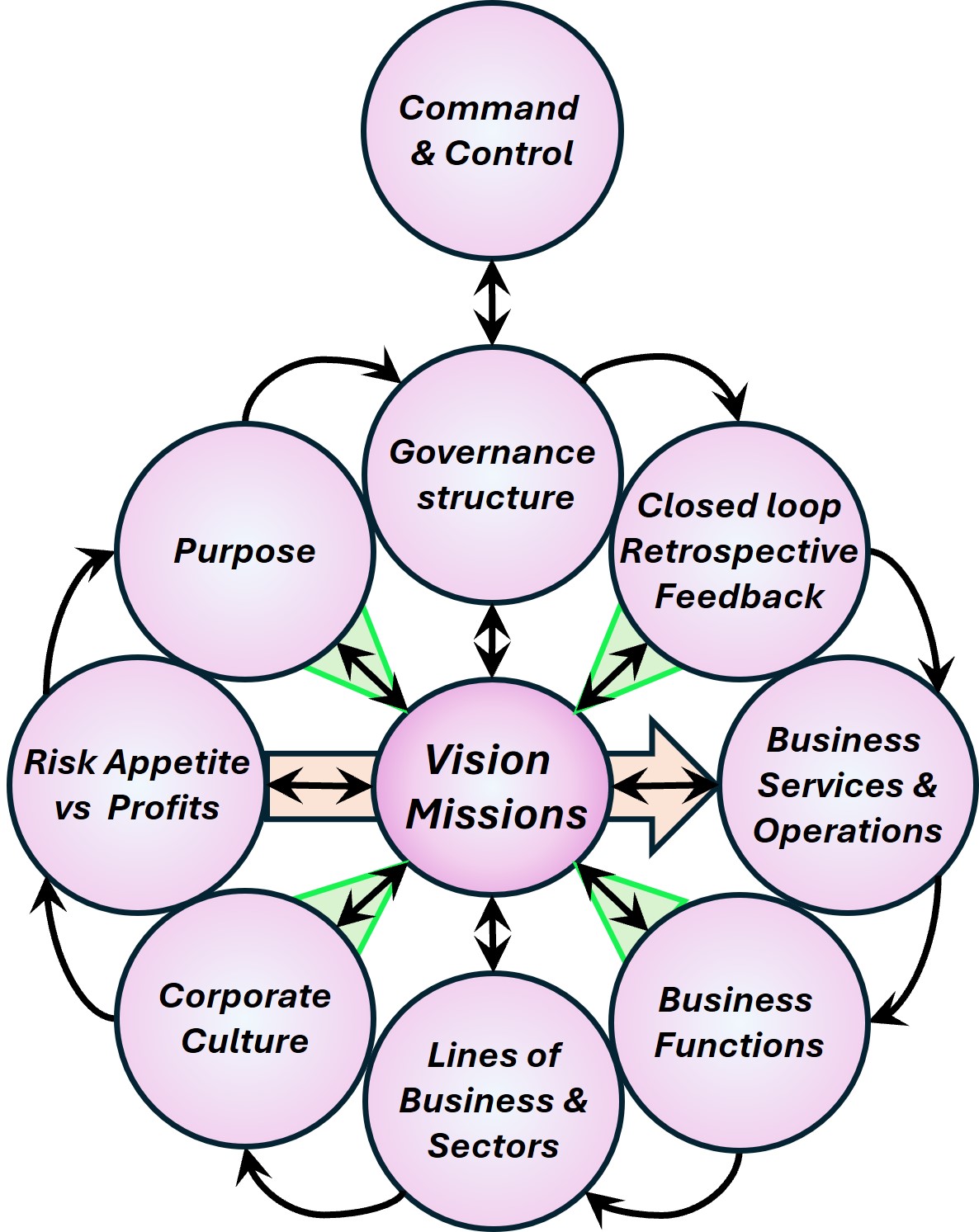

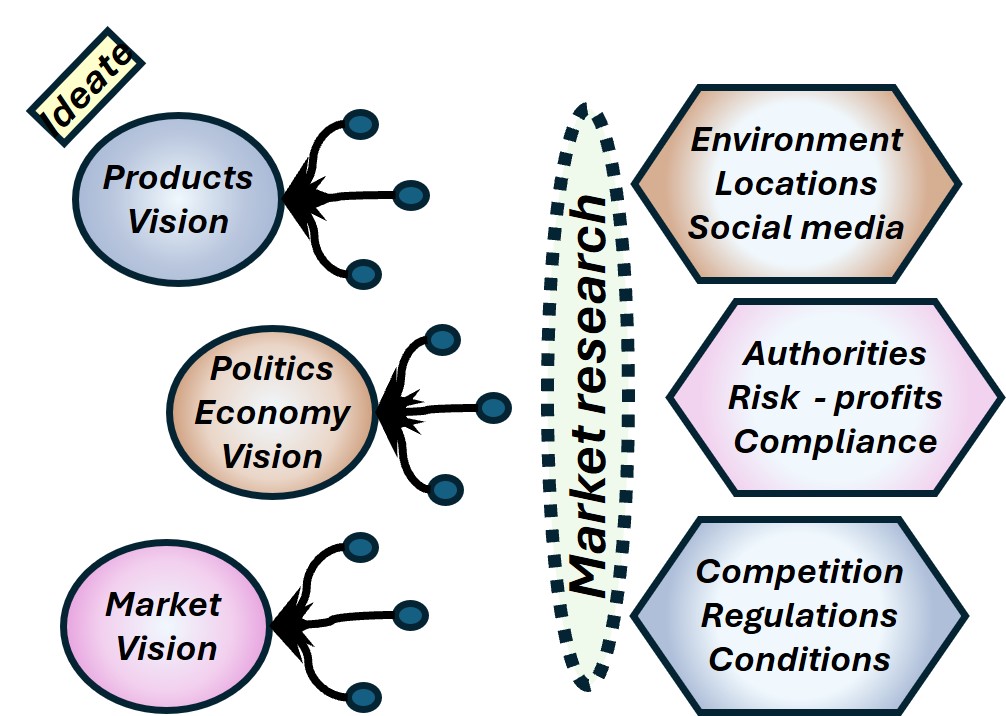

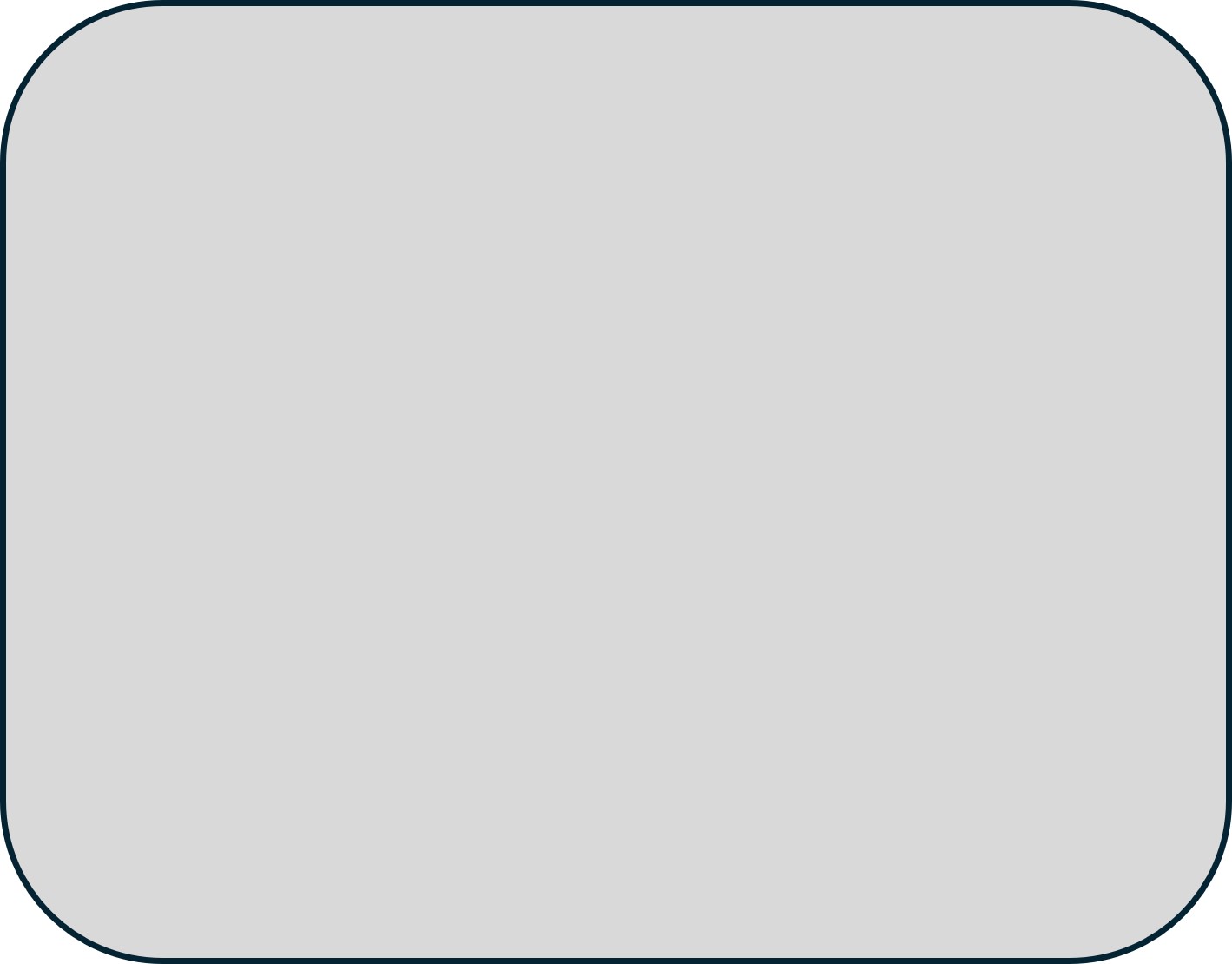

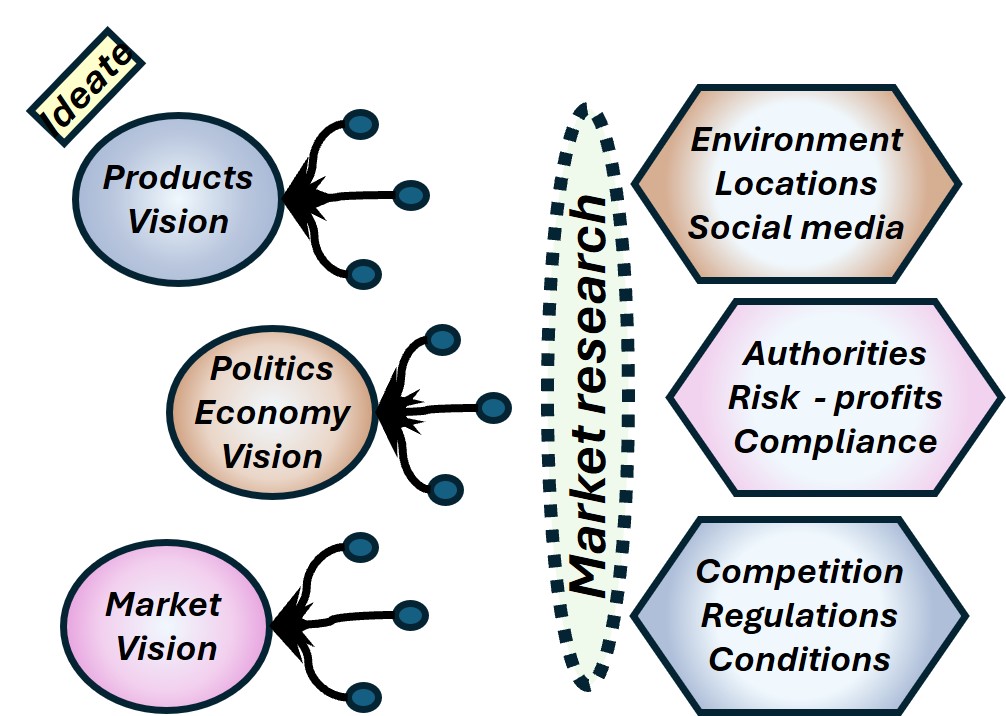

| W-3.4 Visions & missions boardroom results | C6autsll_04 | C_Vision |

| W-3.4.1 How to Structure engineering the enterprise | |

| W-3.4.2 Learning structuring the enterprise by examples | |

| W-3.4.3 Beliefs, social networks influencing the enterprise | |

| W-3.4.4 The closed loop in structuring the enterprise | |

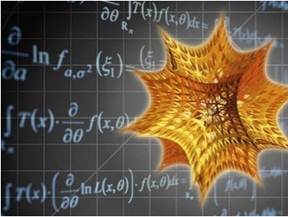

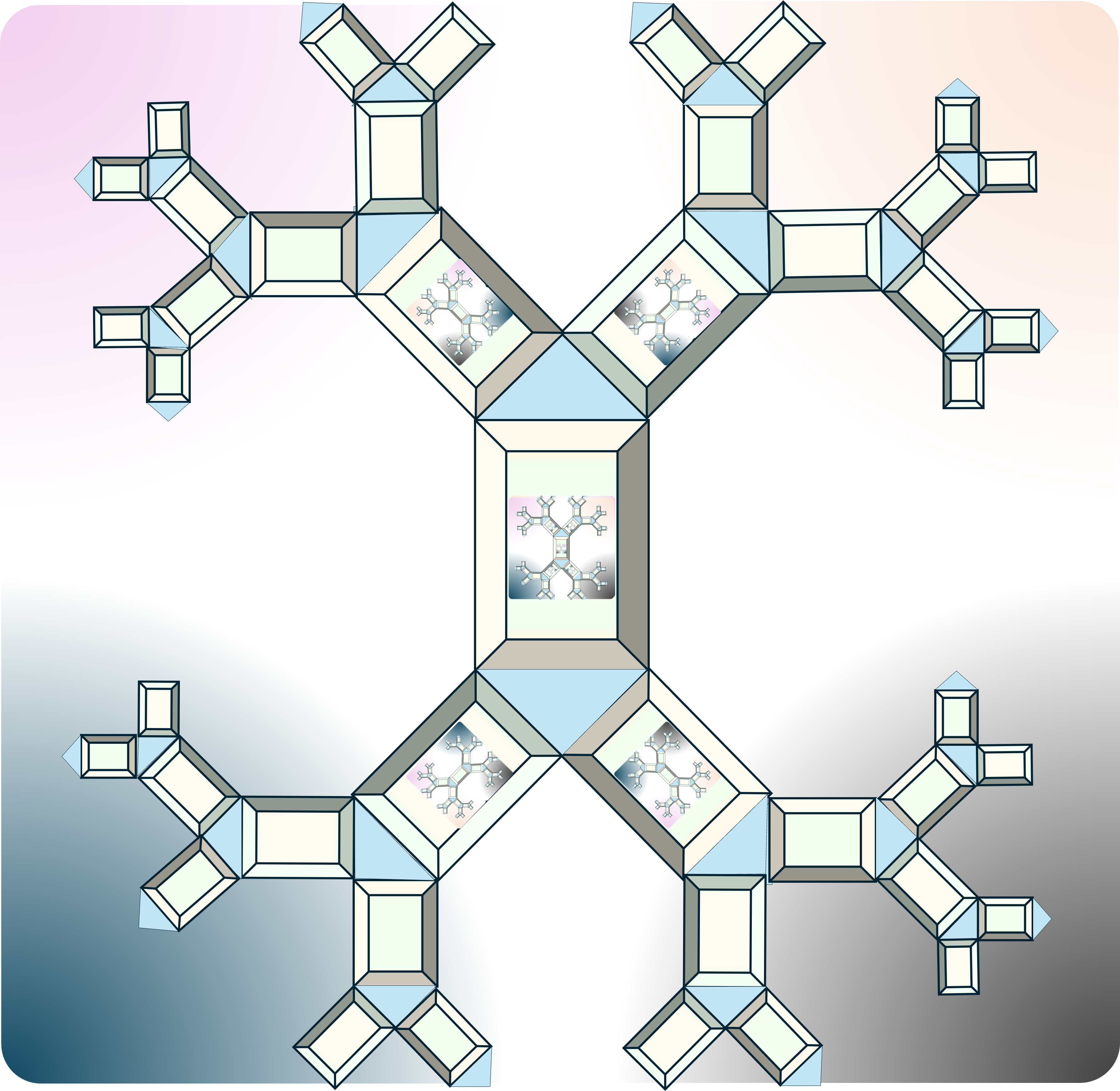

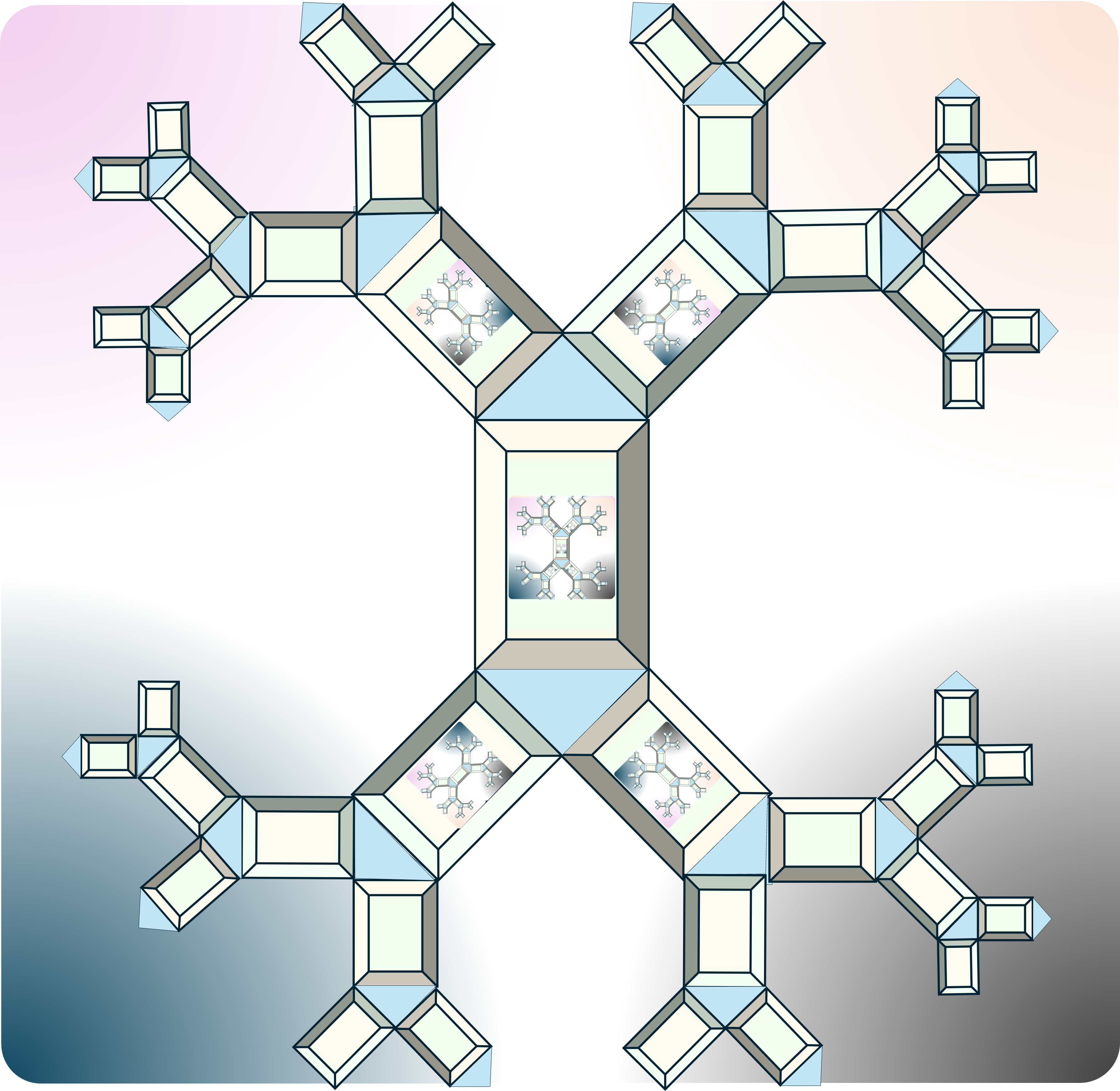

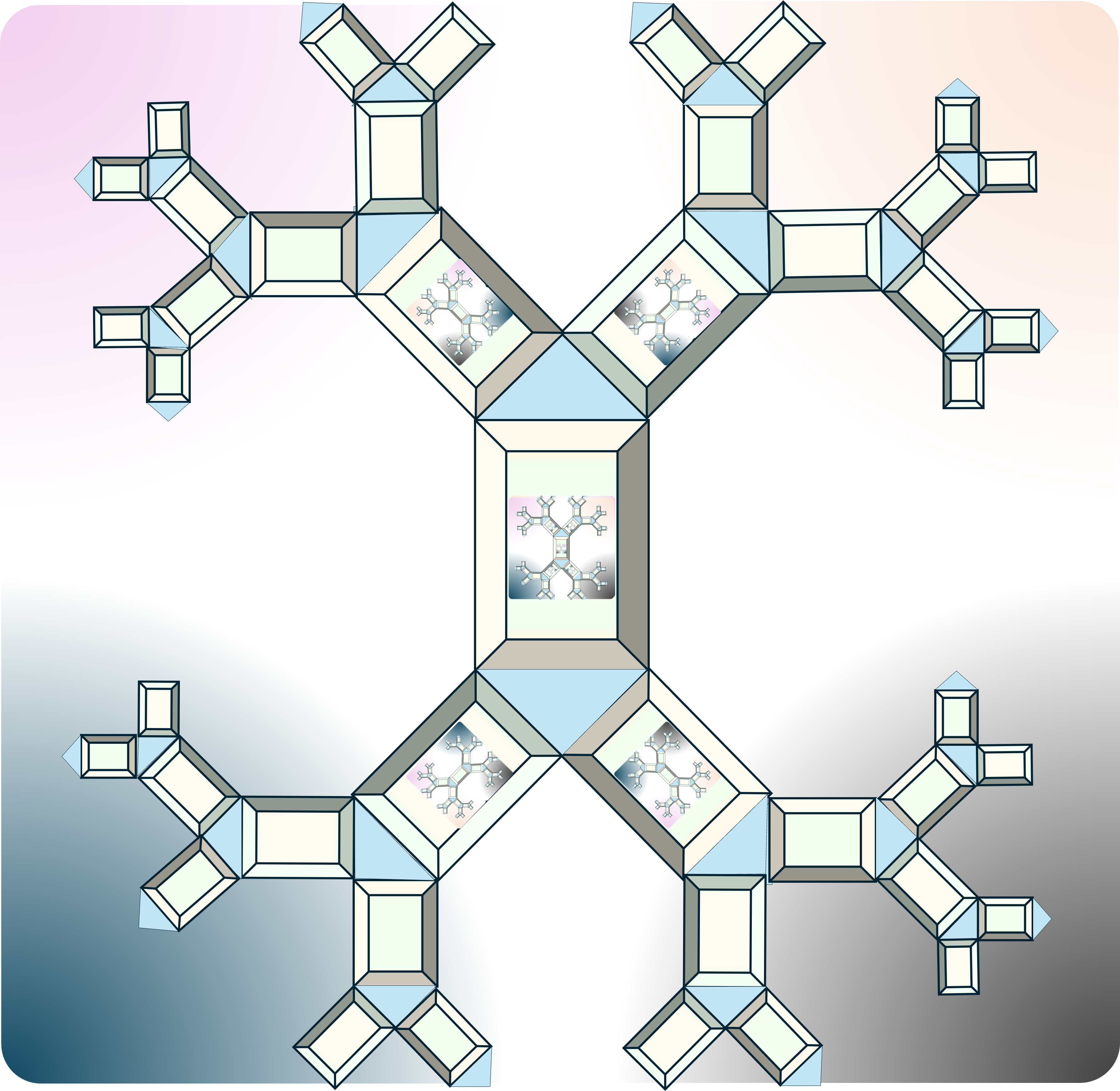

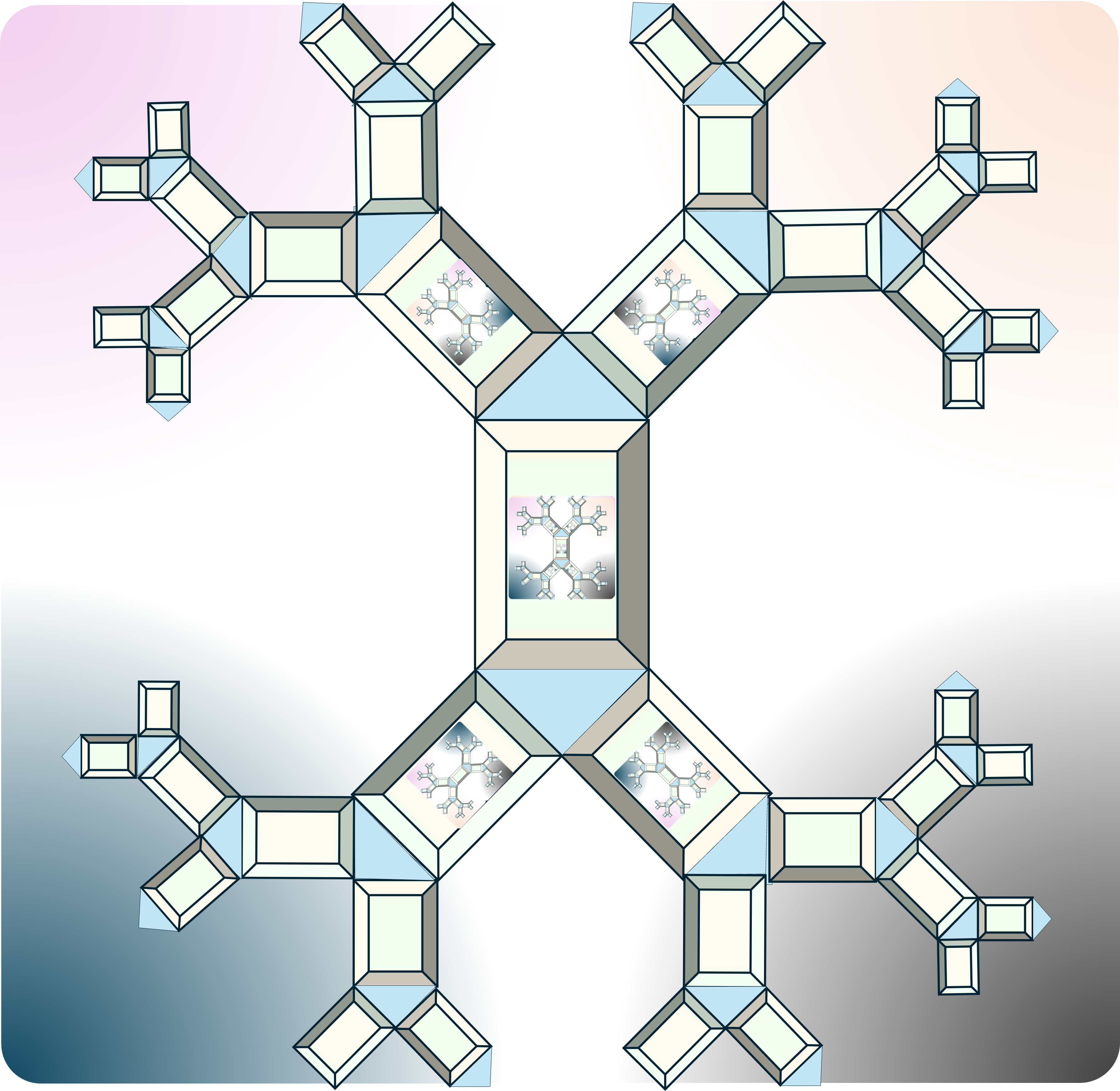

| W-3.5 Sound underpinned theory, improvements | C6autsll_05 | ✅-fractals |

| W-3.5.1 A structured enterprise, the organic cycle | |

| W-3.5.2 The structured enterprise, backend and frontend | |

| W-3.5.3 A structured enterprise, the hidden organisatonal synapse | |

| W-3.5.4 Primary and indispensable secondary processes in the whole | |

| W-3.6 Maturity 5: Strategy visions adding value | C6autsll_06 | CMM5-SIM |

| W-3.6.1 SIMF-VSM Safety with Information at Technology | |

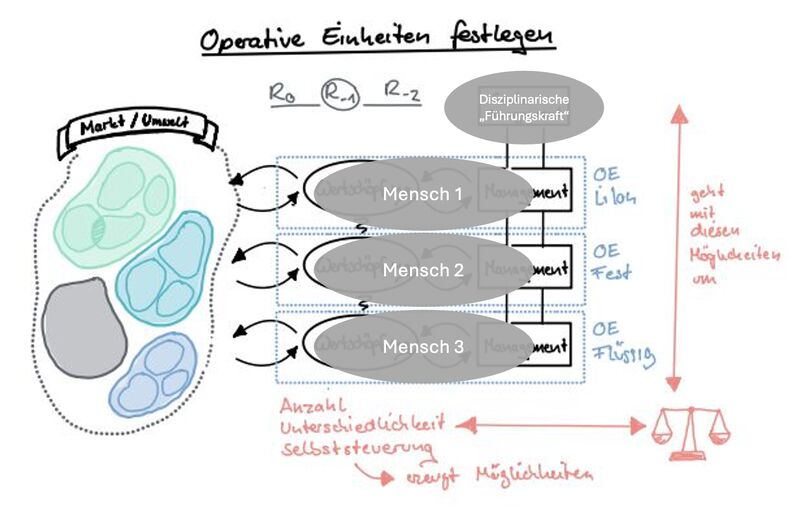

| W-3.6.2 Structuring viable systems with competing dichotomies | |

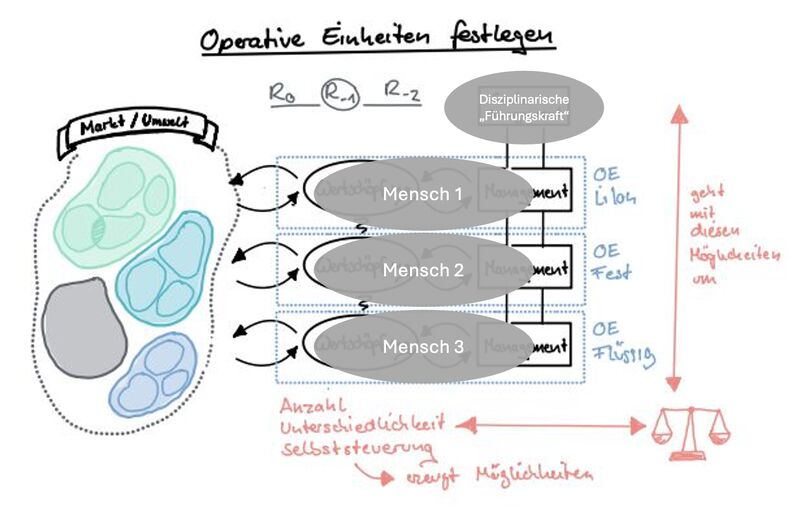

| W-3.6.3 People authoritative leader "PAL" - Operational Units "OUs" | |

| W-3.6.4 A generic context of the 6C in viable systems | |

| W-3.6.5 Following steps | |

⚖ M-1.1.3 Guide reading this page

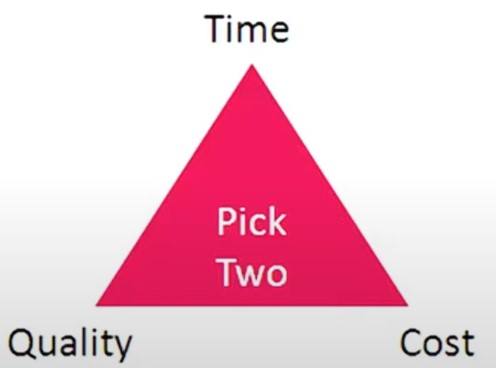

Avoiding the tooligan trap.

What's a 'Tooligan'?

How often do you find yourself falling in love with a tool and then finding a problem so that you can use it?

Whenever I learn something new, I often want to go and try it out and test it on the peeps or systems that I work with, so I start with the tools and look for a problem.

I see this in many organisations and teams that I have worked with.

I was talking to a friend expressing my frustration about the number of tools they used that didn't seem to solve any problem but were just there for the sake of a tool.

- 😲 "Ah," he said, "Tooligans!"

- "What? " I asked "Yes, 'Tooligans'! They have a problem, so they buy a tool or implement a new tool to solve it.

But sometimes they don't understand the real problem and if the tool will solve it."

- "That's exactly it!" I exclaimed, "Tools are everywhere, and the problems are still there."

As humans, we are innately tool makers and users.

Tools can help us express our ideas better, allow us to create beautiful things and solve real problems.

- How do we know we have the right tool for the job?

- How do we know that it will solve the problem for us?

- How do we know if it will improve things or have the opposite effect?

We might want to ask a different question:

- What tools must we use to apply our practices effectively?

- And from there, the next logical question is, what are our practices?

- What are the things that we do to get things done around here?

This is a great question, but probably not the first one to ask.

👉🏾 How will we know what we do is getting us what we want?

Before deciding what we will do, we might want to ask…

- What are the principles that are important to apply to get work done?

- What do we want to leverage to reach more of what we value?

And so before we decide how to shift the system, we must understand what we value.

- Practices (what we do)

Deciding on what we value can help us understand what to optimise for, for example. We optimise for speed because we think that will enable us to get 'X' done faster.

- Tools (what we use)

- Principles

Human work systems behave in specific ways, and some patterns and levers influence how a system works for better or worse.

Principles remain true regardless of context, so understanding which principles can be leveraged can help shift a system positively.

What are the system's needs?

This brings us to possibly the first question we want to ask:

👉🏾 "What problems are we solving here?"

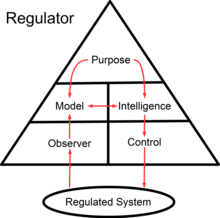

When we look at things this way, we use a model that I love, The Spine Model.

The Spine Model encourages us to start with understanding the system of work and the Needs of that system or the problem we are trying to solve.

It asks us to identify what we Value and what we want to optimise for.

- Once we know what we like to optimise, we can look for Principles and levers that apply.

- Once we know what we value/want to optimise and what principles are necessary, we can decide what to do.

- What Practices will we use?

- Then, finally, we can choose the tools that will support all of this.

Let's walk an example through and see.

- Problem: A team is having trouble keeping track of their busy work.

They don't know who is busy with what and where it is in their process. It also takes a long time for things to get delivered, and they don't know why.

hey want to be able to see what is going on so that they can make improvements and be faster.

- Needs: Visibility of the work and the process of how work flows.

- Values:

- Transparency,

- Speed.

- Principles:

- Visualisation of the work and process to create transparency,

- Work In Progress Limits to help with speed and delivery,

- Feedback loops to help with improvements and alignment,

- Metrics to know if improvements are happening.

- Practices:

- Visualise the work and the flow of work,

- Get together daily to sync, Weekly to plan, and every two weeks to improve,

- Measure lead time and cycle time and look for improvements.

- Tools:

We have many options now, but using a checklist for our practices and principles can help us decide and ensure that the tool gives us as much of what we need as possible.

We can also start very low-tech with a physical board or wall if we are collocated with something fast, cheap, and effortless that we can iterate over instead of something rigid, expensive and challenging to implement.

Getting the tooligans into the system's needs

"If you want a straight spine, you have to start at the top, at Needs and work down iteratively."

😱 I have seen so many organisations do the opposite.

They start with a tool (Jira, Pivotal Tracker, Microsoft Azure, etc.), and then that tool defines their practices.

😲 Those practices shape the principles that apply and determine what optimisations are possible.

More often than not, the original problem remains, and sometimes, the cycle continues with the following tool.

“The value of the Spine Model is to enable thinking and communication, heterodoxy.”

😉 The Spine Model can help us put our critical thinking hats on and ensure we are solving the right problem.

It's about communication and shared understanding, which can save us time and money on useless tools and unsolved problems in the long term.

👉🏾 When I find myself being a "tooligan", I remind myself to begin with Needs.

⚒ M-1.1.4 Progress

done and currently working on:

- 2020 week 05

- Setting wiht the mind for a technology approach.

- Splitting page into more logical parapgraphs.

- 2024 week 47

- Web content redesign with Jabes being pivotal started for this page.

- The goal for command & control has become more clear by:

- Knowing what the conceptual gaps in command & controls are from theoretical perspectives

- Insight needed for improvements according Gemba, using the shop-floor

- The old content integrating and moving to the technical system serve area where applicable.

- 2024 week 50

- Getting to the possible realisations is not easy.

- The goal for command & control:

- Using the viable system 3d system model according Gemba, shop-floor

- Details for sophisticated technical details in information safety and information quality

- The logical growth path for introducing into an existing system by the floor levels

- The first chapters W-1.1 to W-1.6 getting an aligned idea for content draft level.

- 2024 week 51

- Getting to the possible realisations is not easy.

- Processed the VSM theory with some tweaks, hopefully as improvements.

- Updates in the text W-1.1 to W-1.6 for alignment

- Adding the principles for an infomations processing systems in a VSM setting

- Chapters W-2.1 to W-2.6 got an idea in content draft level.

- 2025 week 1

- Chapters W-3.1 to W-3.6 got an idea in content draft level.

- Thia page is in draft ready for the time being.

Planning to do & changes:

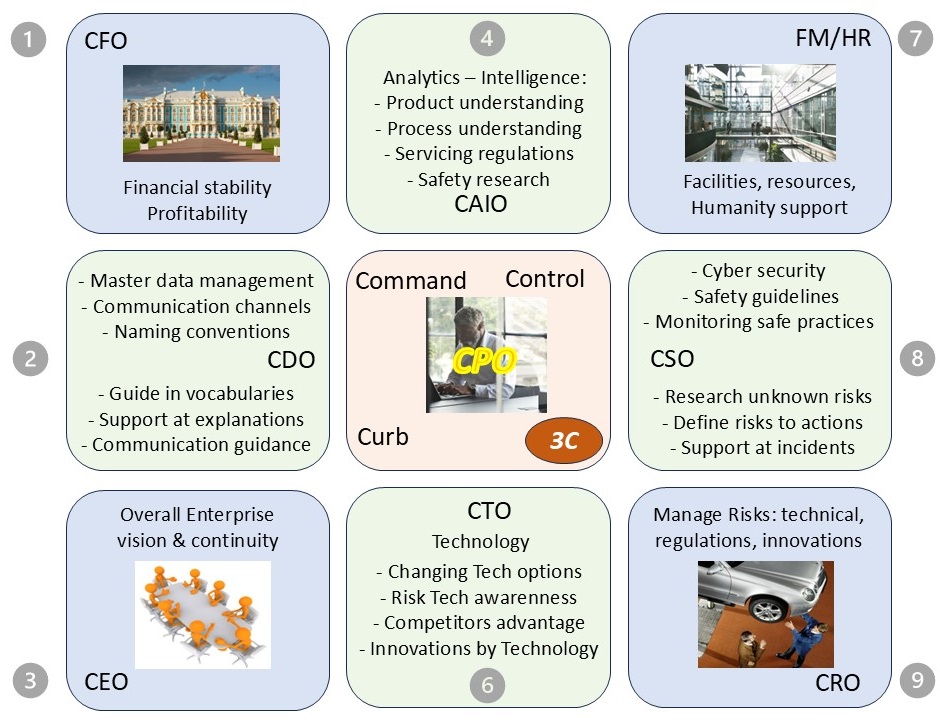

- The Vism is only the operational part, this one was build up from a simple 9 plane.

The strategy part is missing for the higher level system-5 and system-4.

- The replication will mirror some things

- The replication will focus on the other dichotomy

- Chief Product officer as central nerve:

- must be technically strong, Customer oriented

- organizational influence, cooperation

- mental toughness is crucial, Cost awareness

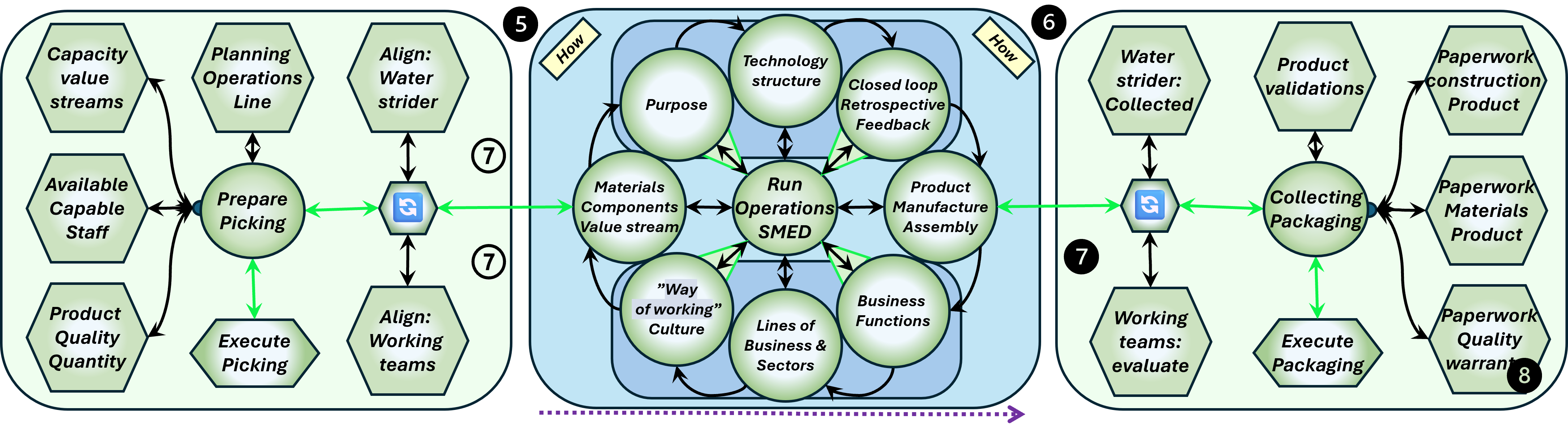

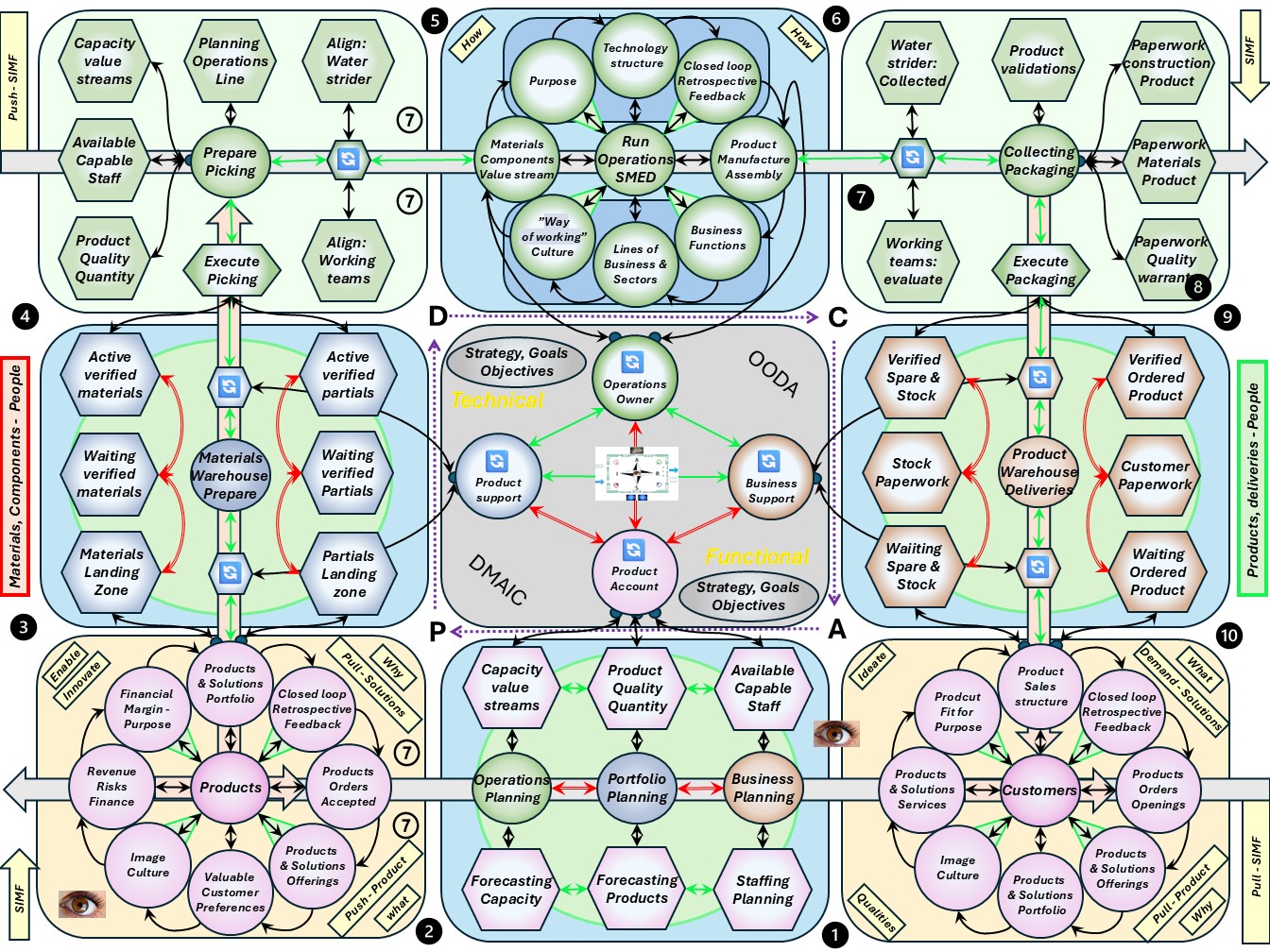

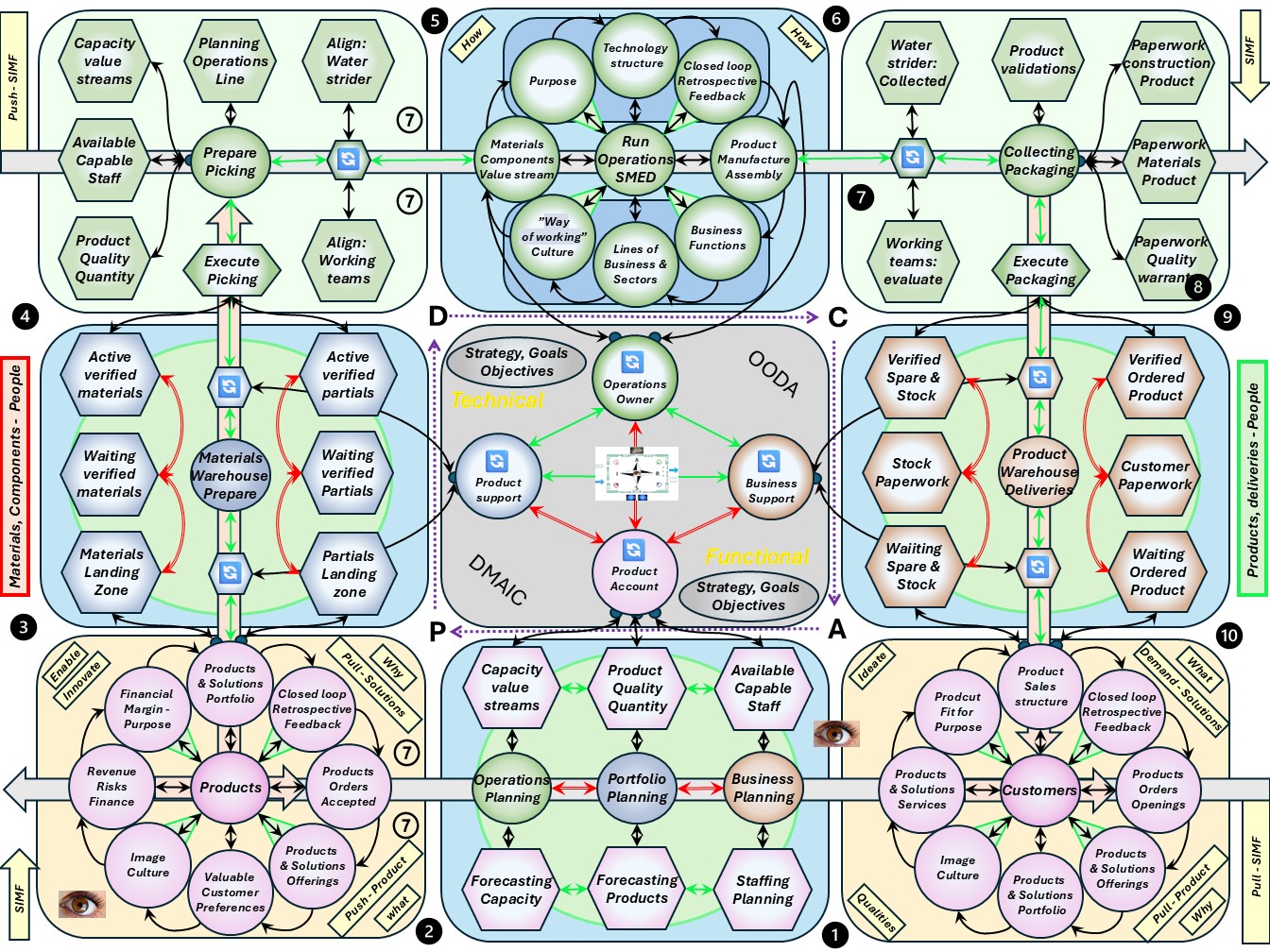

W-1.2 Floor plans, ordered dimensions

Building any non trivial construction is going by several stages.

These are:

- high level design & planning

- detailed design & realisation

- evaluation & corrections

Non trivial means it will be repeated for improved positions.

Before any design, tools are needed for measuring what is going on.

Without knowing the situation or direction there is no hope in achieving a destination by improvements.

⚖ W-1.2.1 Context information technology: master data

Functional data governance 101.

There is no options in avoiding accountability.

Foundation managing information processes products services for data, information is functional about:

- The context purpose: 📚 it is describing.

- Who is using and when: 🎭 access control and monitoring

- Reliability availability: 👁 Transactional integirty, optional system recovery

- Relationships: ⚙ between logical elements (meta context plan)

- An inventory: ⚖ What is really being important & used

Technical data governance 101.

Using a relational database for managing information is one of the many technical options to realize the functional information processing.

The technical translation of the functional context uses a different language:

- DDL Data Declaring: 📚

The translation from logical to technical has functional impact. Transactional processing is very different compared to analytics.

- DCL Data Control: 🎭 This is partially "access control", "information security".

That is a generic functional topic for another level of tasks & responsibilities.

- TCL Transaction Control: 👁 Loosing information can be catastrofal when it is about legal agreements.

An example of critical systems is payments.

- DML Data Manipulation: ⚙ Modification

- DQL Data Querying: ⚖ Usage

Avoiding misunderstanding: platforms & applications

What is a

"platform"

is as confusing by lack of a shared definition as "the application". Not clear anymore if tangible are machines, servers.

❶

In IT, a platform is any hardware or software used to host an application or service. ...

The term platform may also go beyond simply describing the underlying architecture to also include software that is built upon the architecture.

For example, the adoption of virtual machines in an enterprise requires a hypervisor platform. ...

❷ Tools are software but inseparatable parts of a platform.

Even though an application may require an underlying computing system, such as a particular OS and server or storage hardware, an application may be considered a platform when it is used as a tool for performing meaningful work. ...

For example:

- Structured Query Language (SQL) is a database application. But it is frequently used as a component in other functions, such as logging, analytics, customer relationship management and enterprise resource planning systems. SQL may be referred to as a platform.

- Similarly, a web server application may be considered a platform because it is used to operate the business storefront or user/partner portal.

- Software stacks, combinations of software components, that facilitate the deployment of other complex services for the business may also be referred to as platforms.

The goal of platform engineering is to create organized groups of resources and services that developers can use without needing to deeply understand or directly manage them.

These organized groups, called platforms, are often built using many of the same software development skills and abilities found across DevOps teams.

❸ The "system programmer" role as defined by IBM in the mainframe context (80's).

The platform team uses tool experts to understand developer needs, select the best tools for the required tasks, perform integrations and automations, and troubleshoot and maintain the established platform over time. ...

But platforms don't just happen, and one size never fits all. Platforms themselves are typically considered a product, and they must be created and maintained for the business and its specific software development and productivity needs.

Because platforms are composed of discrete components and services, they can be changed and enhanced over time.

Using a shared environment, shared way of practices, controlled quality for the production environment, there is no other option than a regulated centralised approach for platforms.

It are the equivalents of machinery in the industry. These should usually not be installed nor maintained by the intended operators of the machinery. It is far too demanding to combine those skills to excel.

⚖ W-1.2.2 Definitions products, goods, services: master data

Products, Goods, Services, what is it about?

There is not a good single reference understanding the mastedata object container "service".

Combining multiple sources is the best option. Links with ideas about services, goods for products:

Using chatgpt result for the definition of Services and from the linked sources:

Services are intangible activities or benefits that an organization provides to consumers in exchange for money or something else of value.

Products: Goods vs Services

Differences are:

- Tangibility: Goods are tangible and can be seen and touched, while services are intangible.

- Storage: Goods can be stored and inventoried, whereas services perish if not consumed.

Services cannot be touched, stored, or transported

Service-relevant resources, processes, and systems are assigned for service delivery during a specific period in time.

- Production and Consumption: Goods are produced, then sold, and then consumed.

Goods are sold first and then produced and consumed simultaneously.

❹ Unique characteristics of Services:

- Intangibility: Services cannot be seen, tasted, felt, heard, or smelled before they are bought. They are performances rather than objects.

- Inseparability: Services are produced and consumed simultaneously. The service provider and the consumer must be present for the transaction to occur.

- Perishability: Services cannot be stored for later use. If not used, the opportunity to provide the service is lost.

- Variability: The quality of services can vary greatly depending on who provides them and when, where, and how they are provided.

Each service is unique. It can never be exactly repeated as the time, location, circumstances, conditions, current configurations and/or assigned resources are different for the next delivery, even if the same service is requested by the consumer.

Products, Goods, Services: types and quality

The human factor is often the key success factor in service provision.

❺ Types of Services:

- Business Services: These are services used by businesses to conduct their operations.

Examples include consulting, advertising, and logistics.

- Personal Services: These are services provided to individuals (consumers).

Examples of Services:

- Healthcare: Medical consultations, surgeries, and nursing care.

- Education: Teaching, tutoring, and training.

- Hospitality: Hotel stays, restaurant dining, and travel services.

- Governmental: eg passport, housing, personal finance aid, roads.

❻ Types of Services:

Service quality can be measured through various dimensions such as:

- reliability, Mass generation and delivery of services must be mastered before expanding.

- responsiveness, Demand can vary by season, time of day, business cycle, etc.

- assurance, Consistency is necessary to create enduring relationships.

- empathy, and

- tangibles (the physical evidence of the service).

Both inputs and outputs to the processes involved providing services are highly variable, as are the relationships between these processes, making it difficult to maintain consistent service quality.

Many services involve variable human activity, rather than a precisely determined process.

Service-commodity goods continuum

The distinction between a good and a service remains disputed.

Classical economists contended that goods were objects of value over which ownership rights could be established and exchanged.

Ownership implied tangible possession of an object that had been acquired through purchase, barter or gift from the producer or previous owner and was legally identifiable as the property of the current owner.

😲

Adam Smith's famous book, The Wealth of Nations, published in 1776, distinguished between the outputs of what he termed "productive" and "unproductive" labor.

The former, he stated, produced goods that could be stored after production and subsequently exchanged for money or other items of value.

The latter, however useful or necessary, created services that perished at the time of production and therefore did not contribute to wealth.

Building on this theme, French economist Jean-Baptiste Say argued that production and consumption were inseparable in services, coining the term "immaterial products" to describe them.

🤔

In the modern day, Gustofsson & Johnson describe a continuum with pure service on one terminal point and pure commodity good on the other.

Most products fall between these two extremes.

For example, a restaurant provides a physical good (the food), but also provides services in the form of ambience, the setting and clearing of the table, etc.

⚖ W-1.2.3 Services orientation at information technology

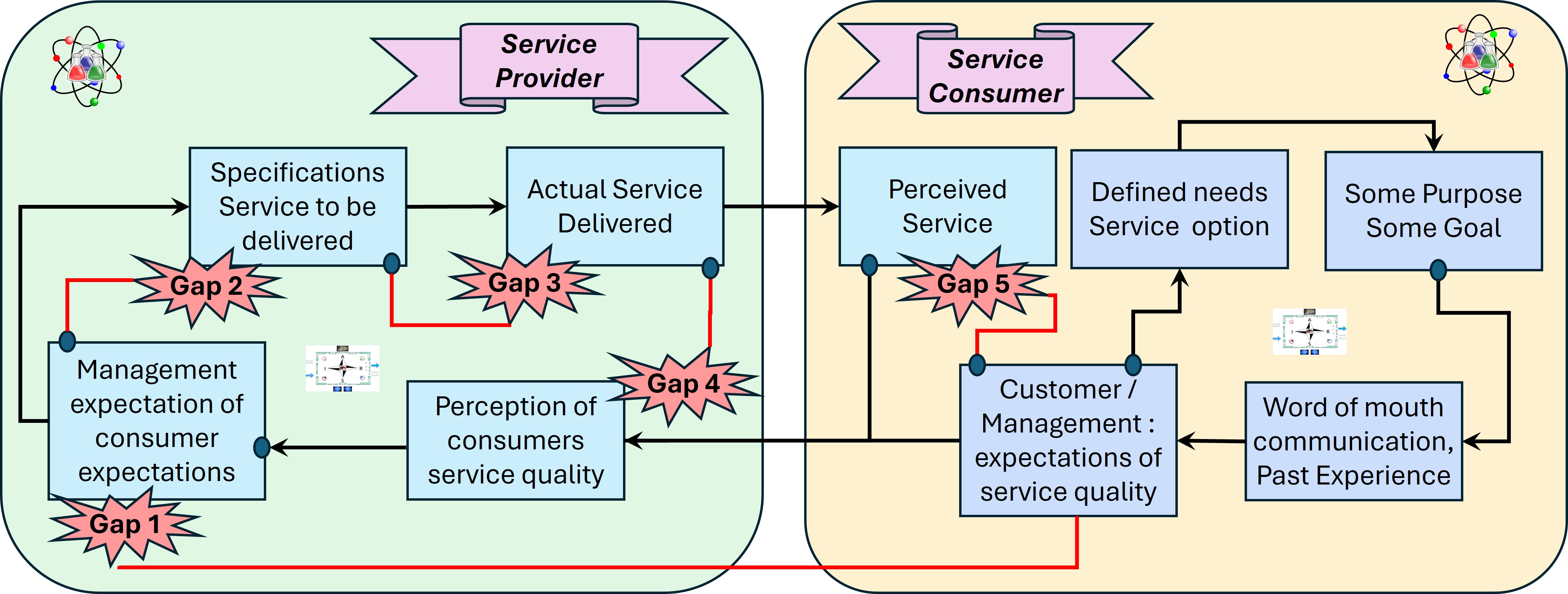

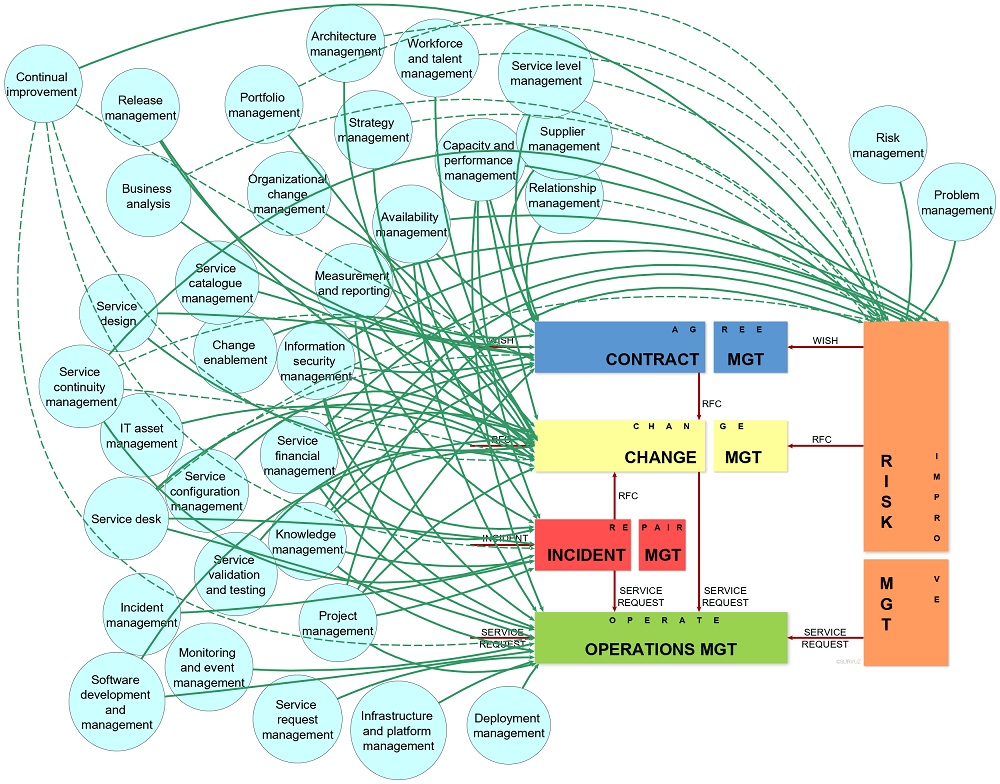

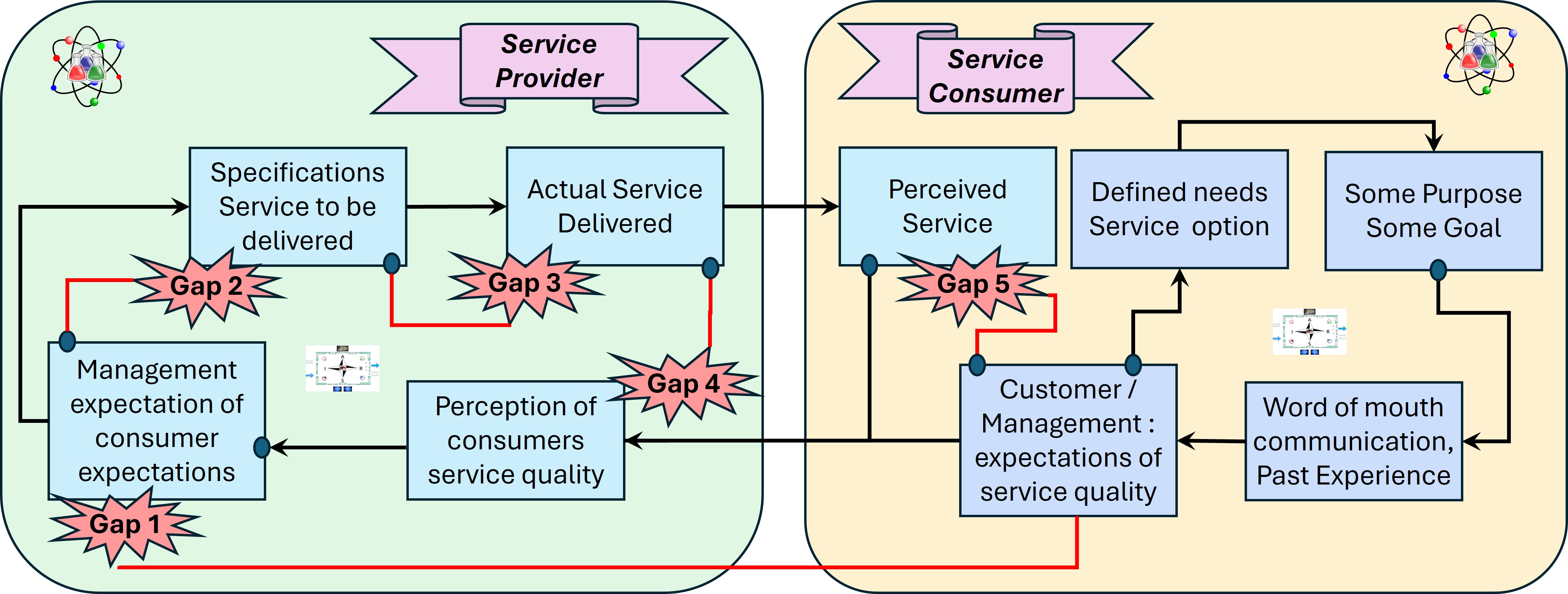

Managing service gaps

❼

Consequently, customers evaluation of overall service quality is based on a combination of all five aspects outlined above.

Knowing the way customers evaluate service, it is important to understand, identify and measure the potential gaps that may exist in the service delivery process.

- knowledge gap This occurs when there is a disconnect between what a customer wants or expects in service quality and what the management team of the service provider thinks the customer wants or expects from the service delivery.

- standards gap This occurs when there is a difference between what the management team wants and the actual service delivery specification that management develops for employees to follow in delivering the service.

- delivery gap This gap can occur when there is a disconnect between the service standard and the actual service delivered to the customer.

- communications gap This happens when there is a difference in what the customer is told they can expect and what service is actually delivered.

- Expectation gap This gap can appear when there is a difference in what the customer expects from the service (prior to consumption or purchase) and what the customer perceives of the service after it has been provided.

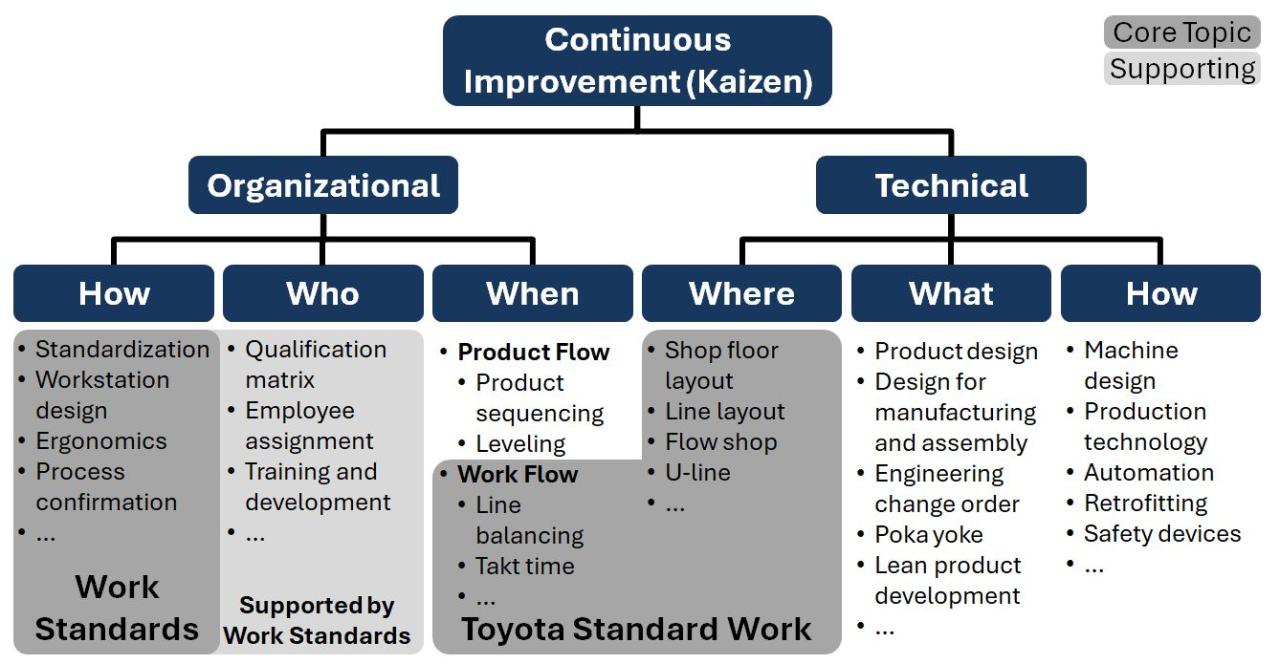

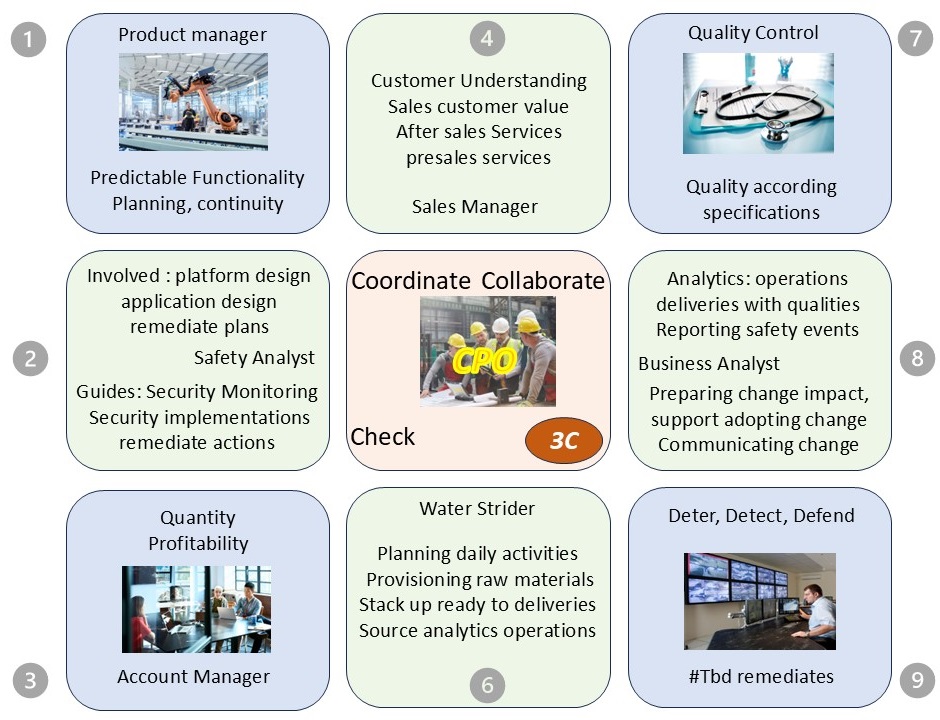

The soll in infomration service provision

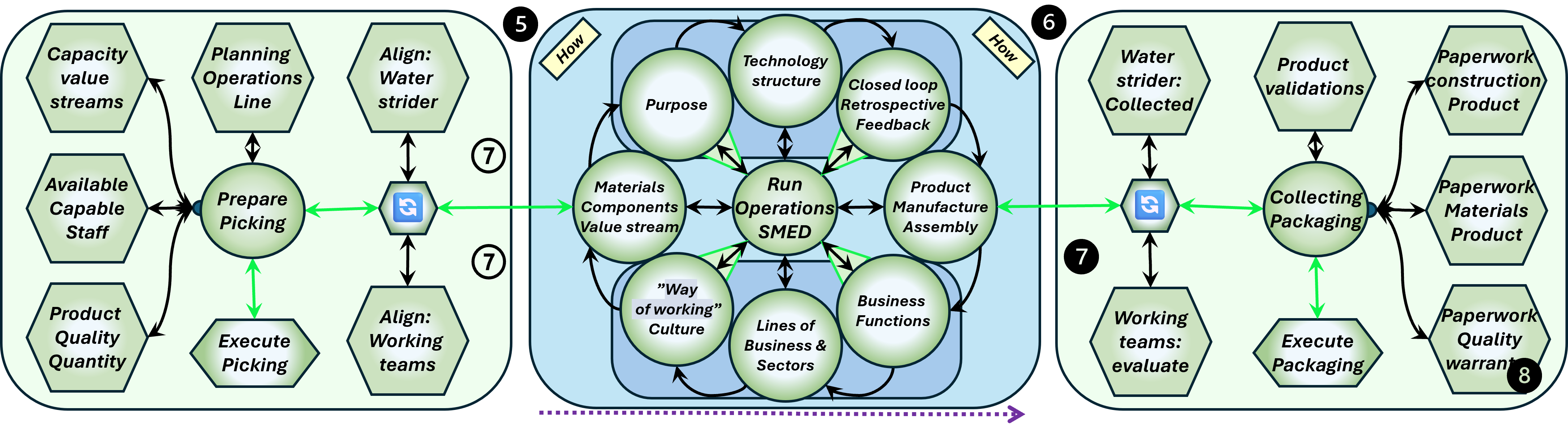

❽ Changes in the way of working are needed at a lot of levels. Bottom-up from technology perspective starts at 6.

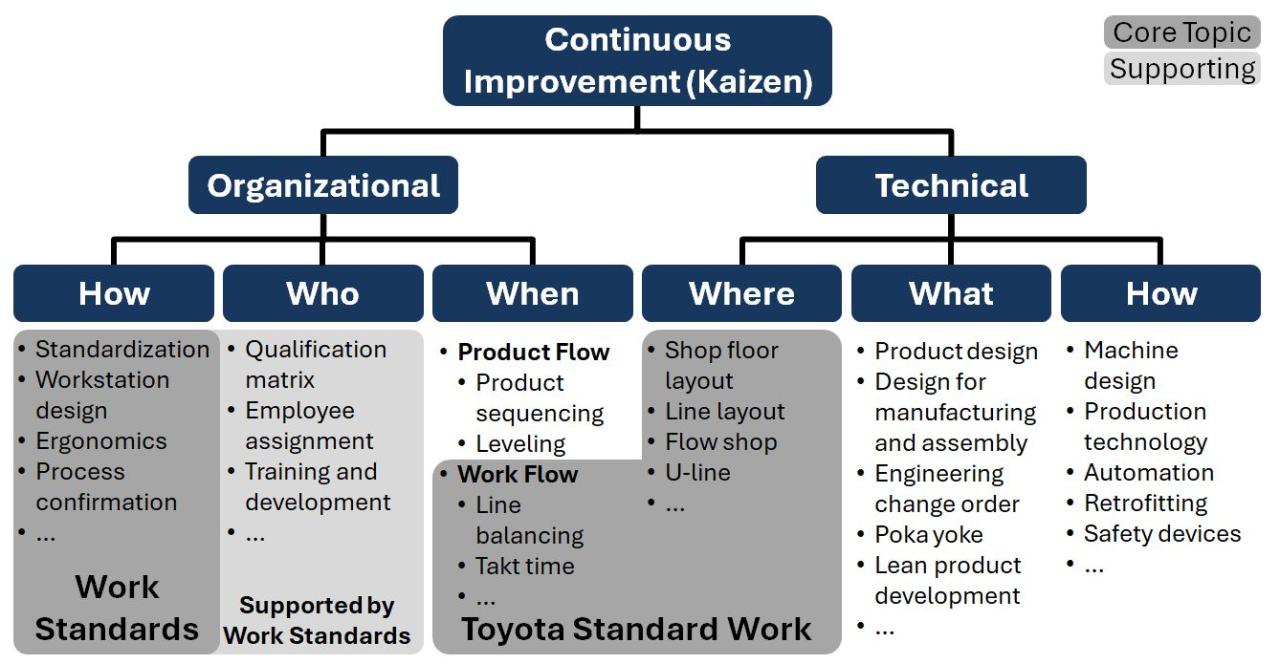

The columns are: Customer Focus, Processes & Tools, Continuous Learning & Improvements, Team structure, Value stream management, Culture.

👉🏾 The "soll" in a matrix (top-details, context-bottom):

| |  |  |

| | Customer | P&T | CLI | Teams | VSM | Culture |

| | ➡ What | ➡ How | ➡ Where | ➡ Who | ➡ When | ➡ Which |

| 6 5 ➡ | seek satisfaction | continuous improvement | quickly actions | organic autonomy | eliminate bottlenecks | diversity in thinking |

| 7 4 ➡ | visible deliveries | automate: no defects | evolving skills | knowledge sharing | balance: speed - quality | shared visions missions |

| 8 3 ⟳ | feedback loops | effective efficiency | small iterations | breaking hierarchy | informed decisions | safe, blame-free |

| 8 3 ⟲ |

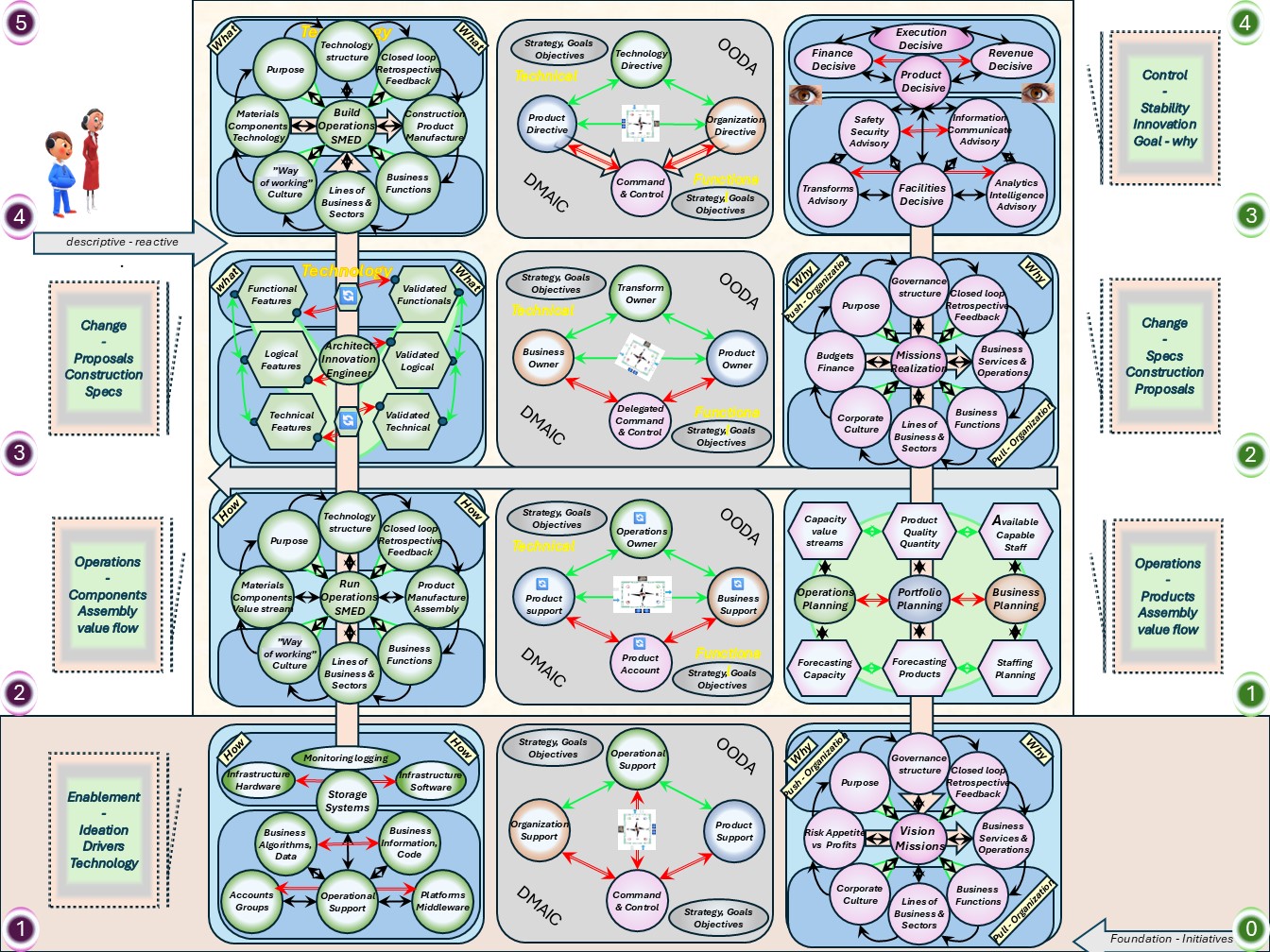

| 9 2 ⬅ | value creation | collaboration | mistakes = learning | responsible autonomy | flow measurements | trust & openess |

| 0 1 ⬅ | Understand needs | lean: avoid 3m | adaption culture | diversity in teams | lean: flow optimisation | transparancy |

🎯💡 Promoting this way of working can be only succesfull by showing it by example.

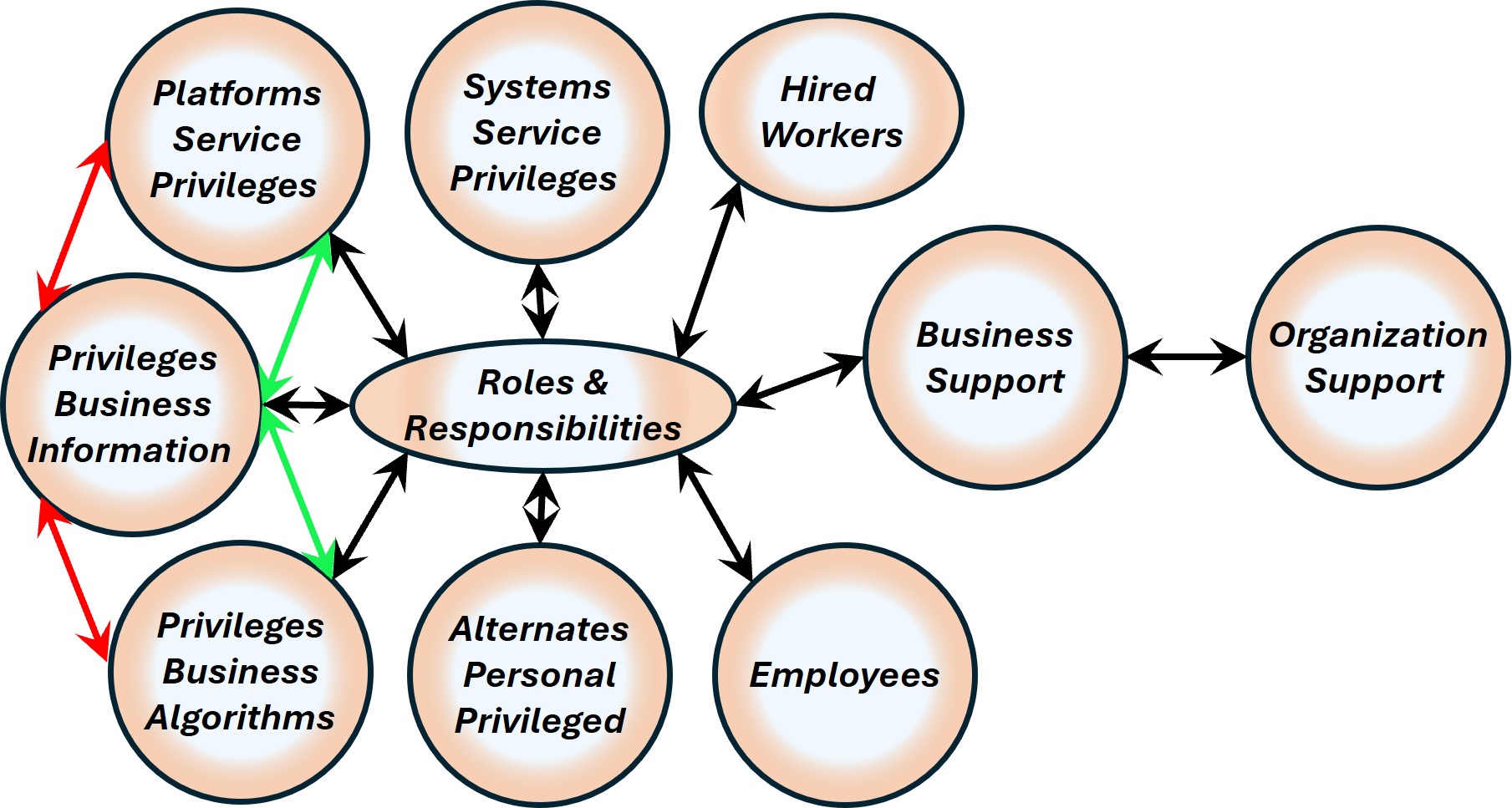

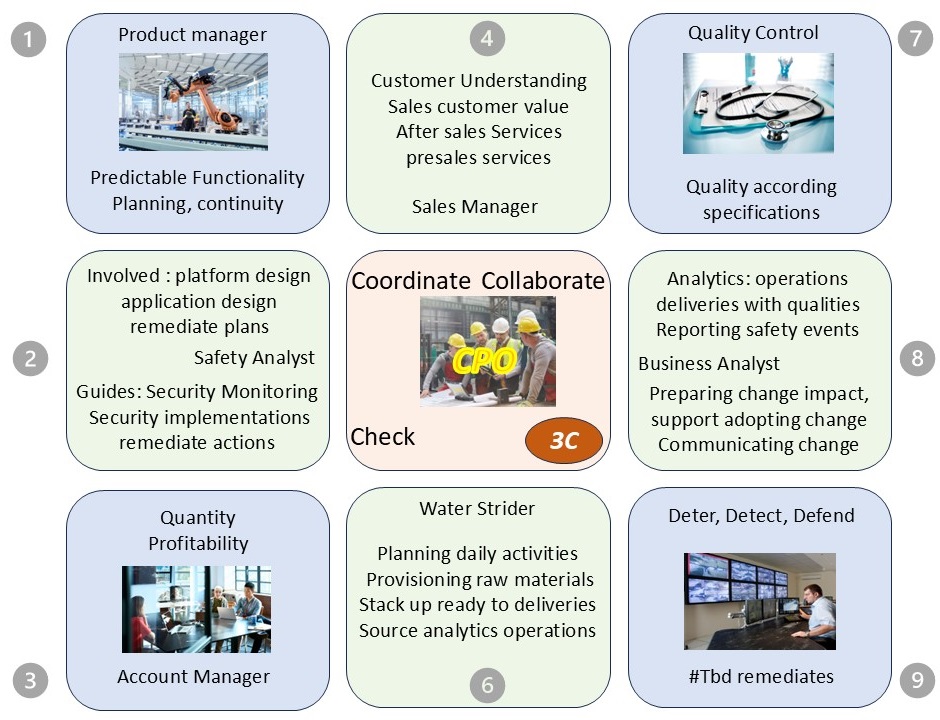

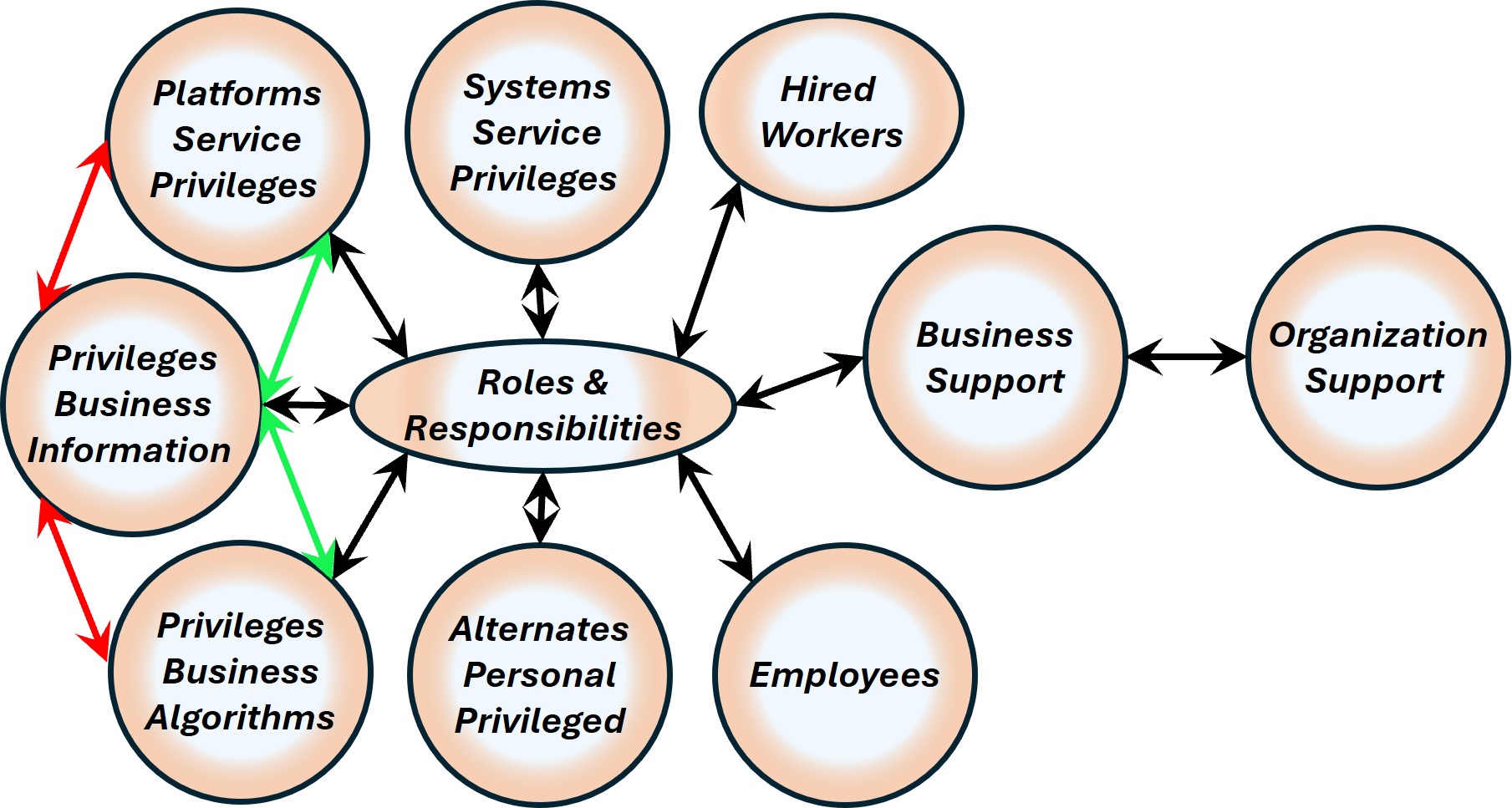

⚖ W-1.2.4 Roles tasks levels in Information Services

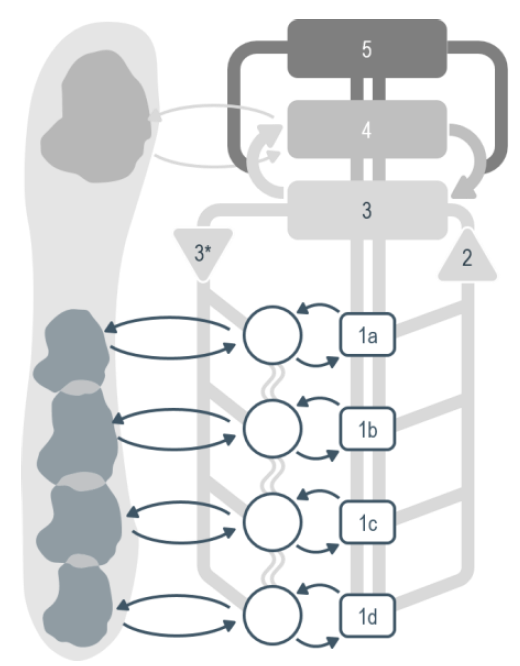

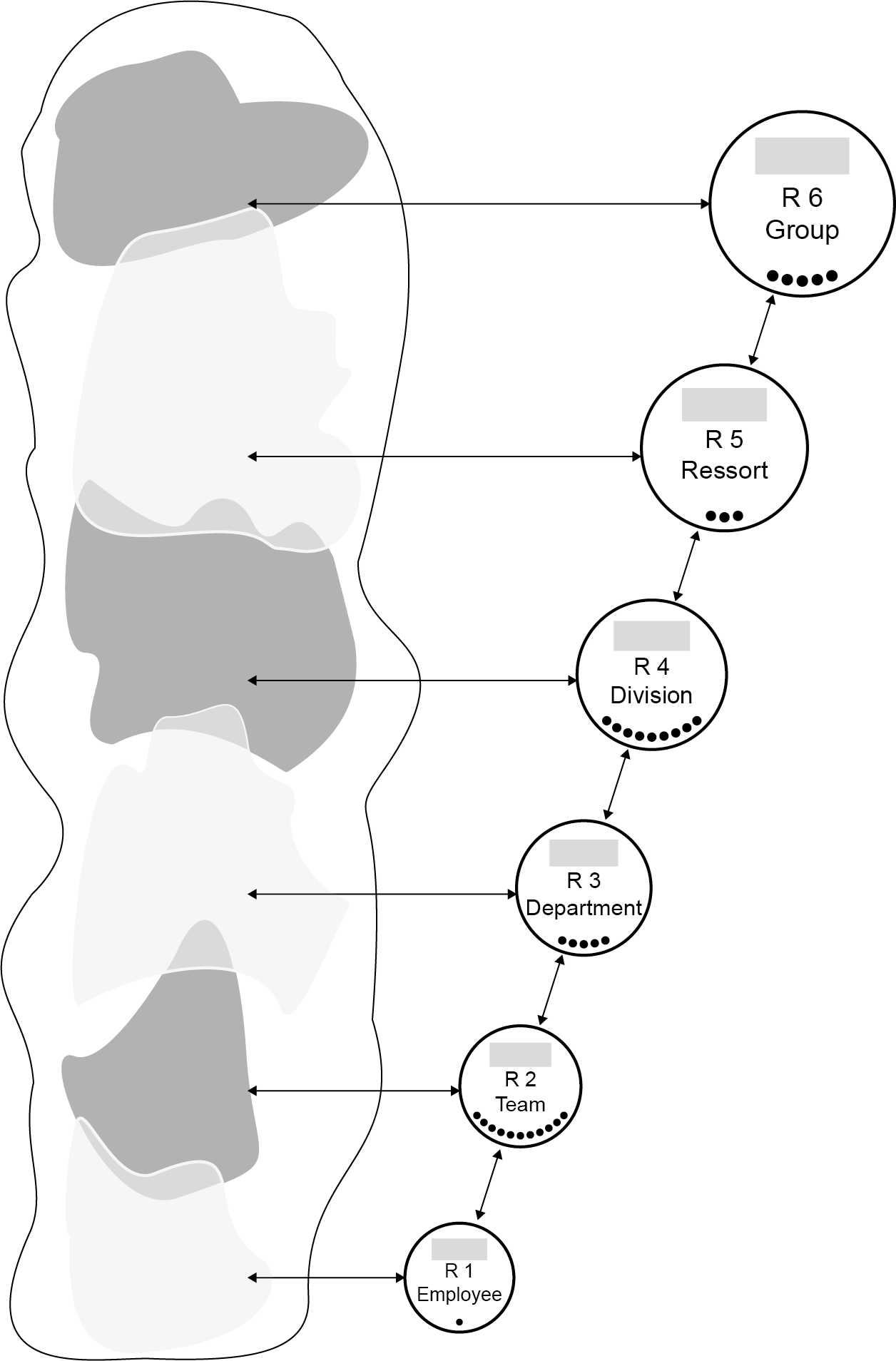

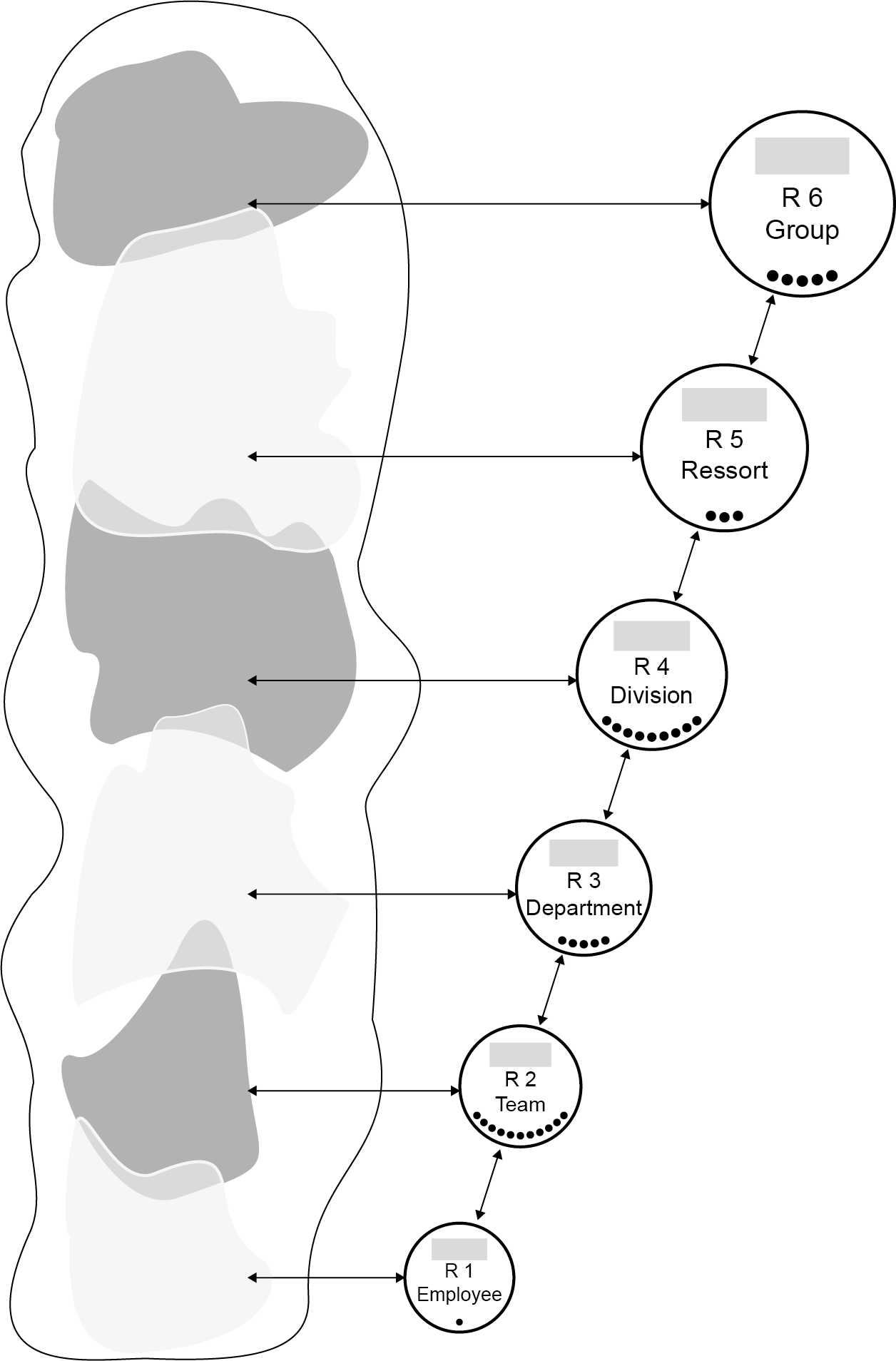

Building up the Information service bottom-up

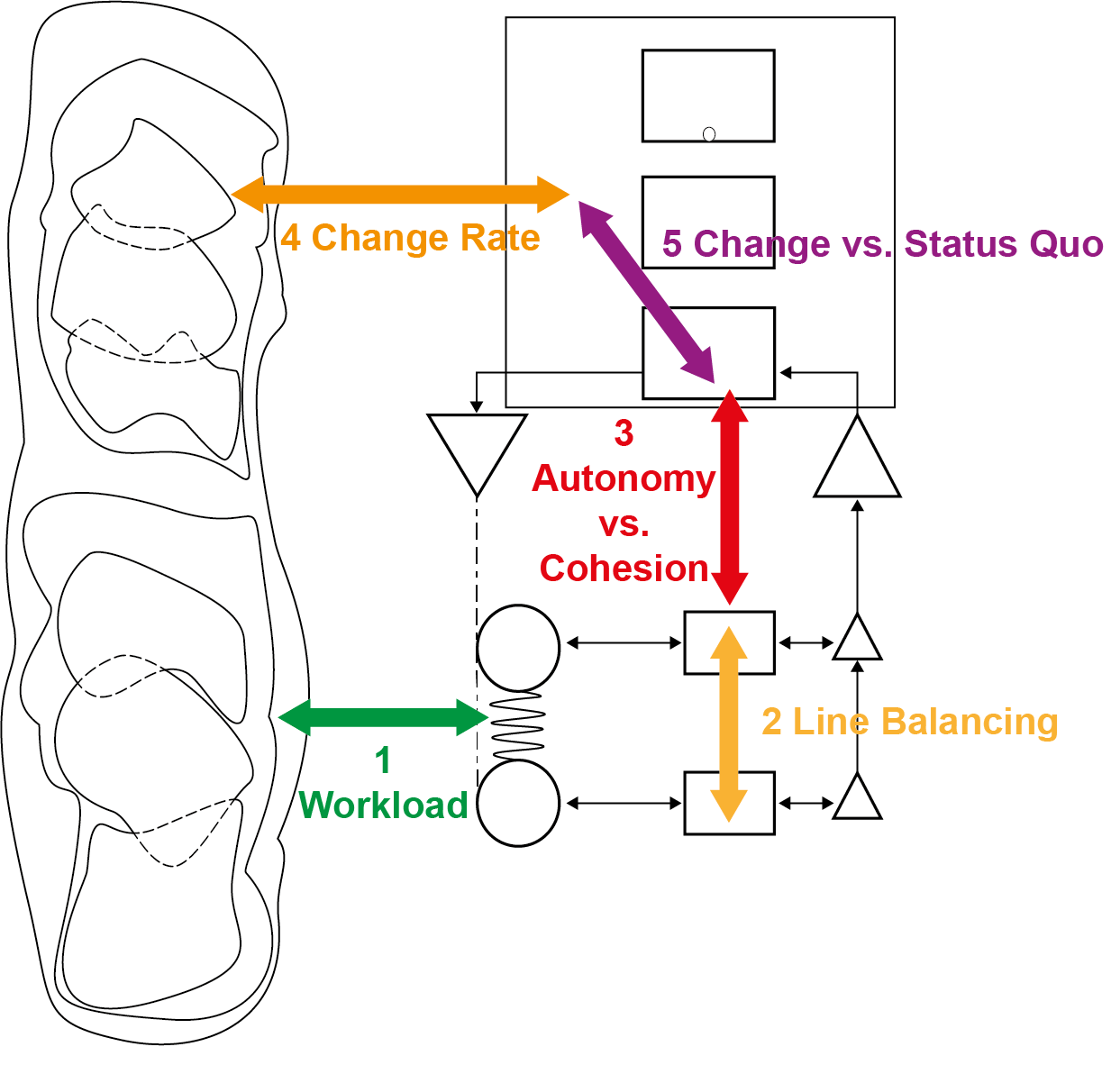

❾ Every floor level is build on the next one by logical dependencies.

When a task has not found its destination on the intended floor, ad-hoc bypasses are used.

Execution machines floor 0/1, the how for the organisation

Execution processes floor 1/2, the how in value streams

Change enacting floor 2/3, the what for value streams

Change control floor 3/4, the what quality & quantity for the organisation

❿

❿ In a mature situation all levels are in place and aligned with their antipodes.

W-1.3 Steering roles tasks

Managing the building any non trivial construction follows several stages.

These are:

- high level design & planning

- detailed design & realisation

- evaluation & corrections

Non trivial means it will be repeated for improved positions.

Managing the process, information is needed for understanding what is going on.

Without knowing the situation or direction there is no hope in achieving a destination by improvements.

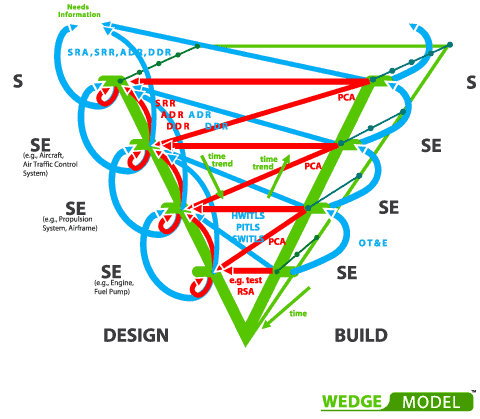

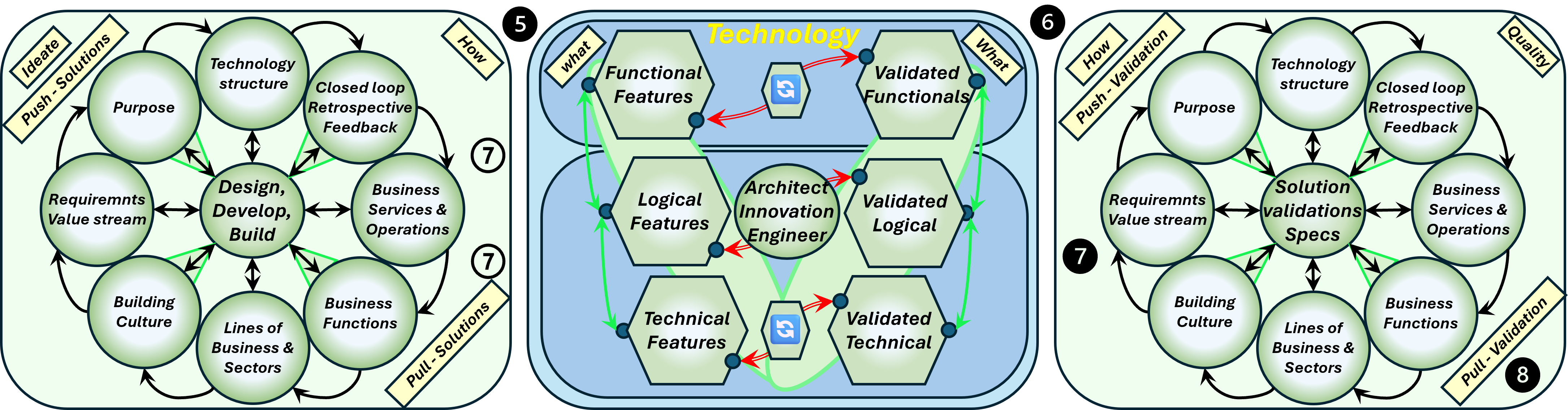

⚖ W-1.3.1 Technical "how to" for understanding steering

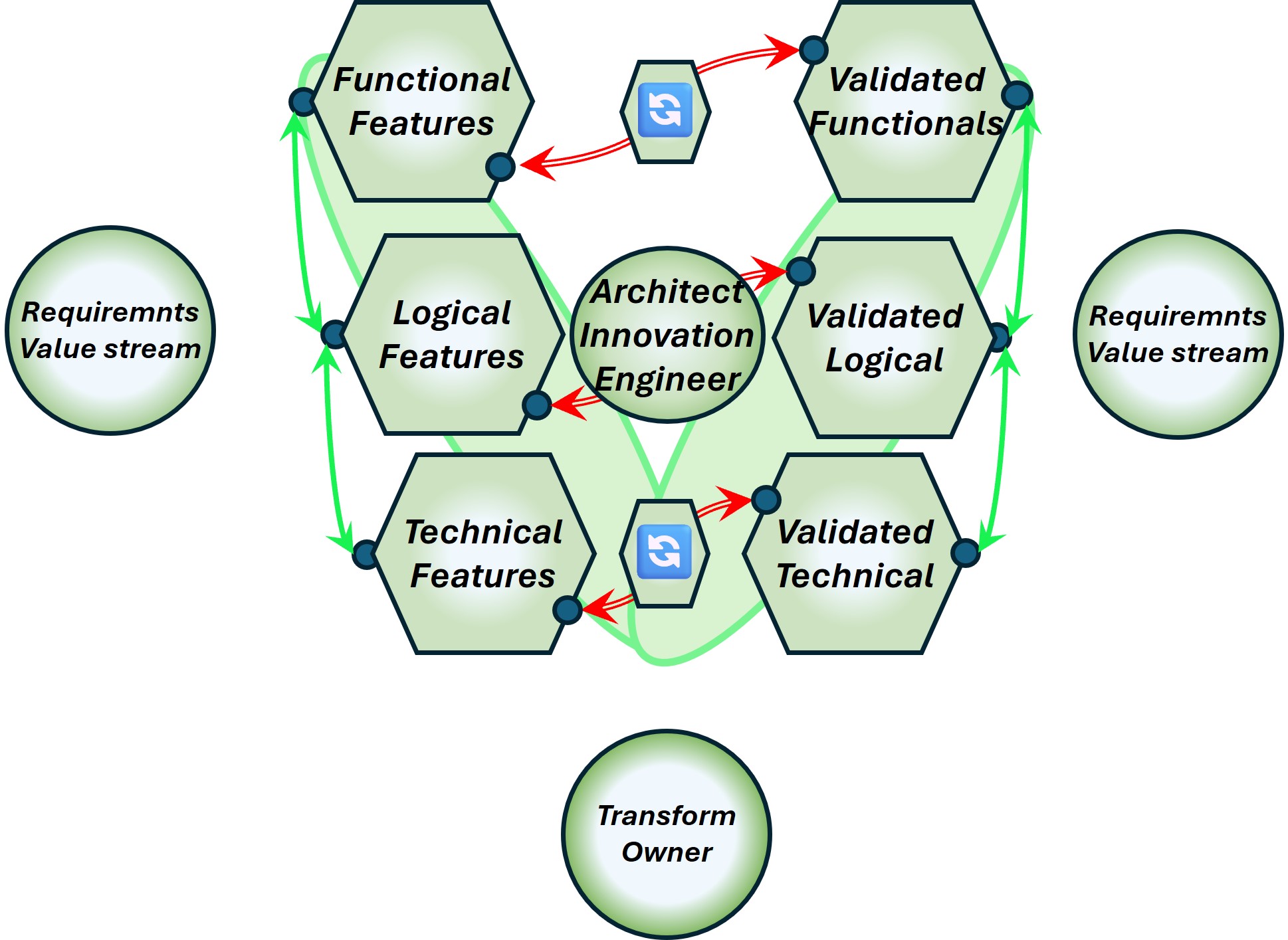

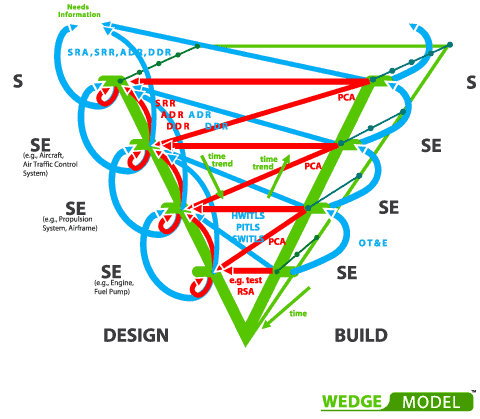

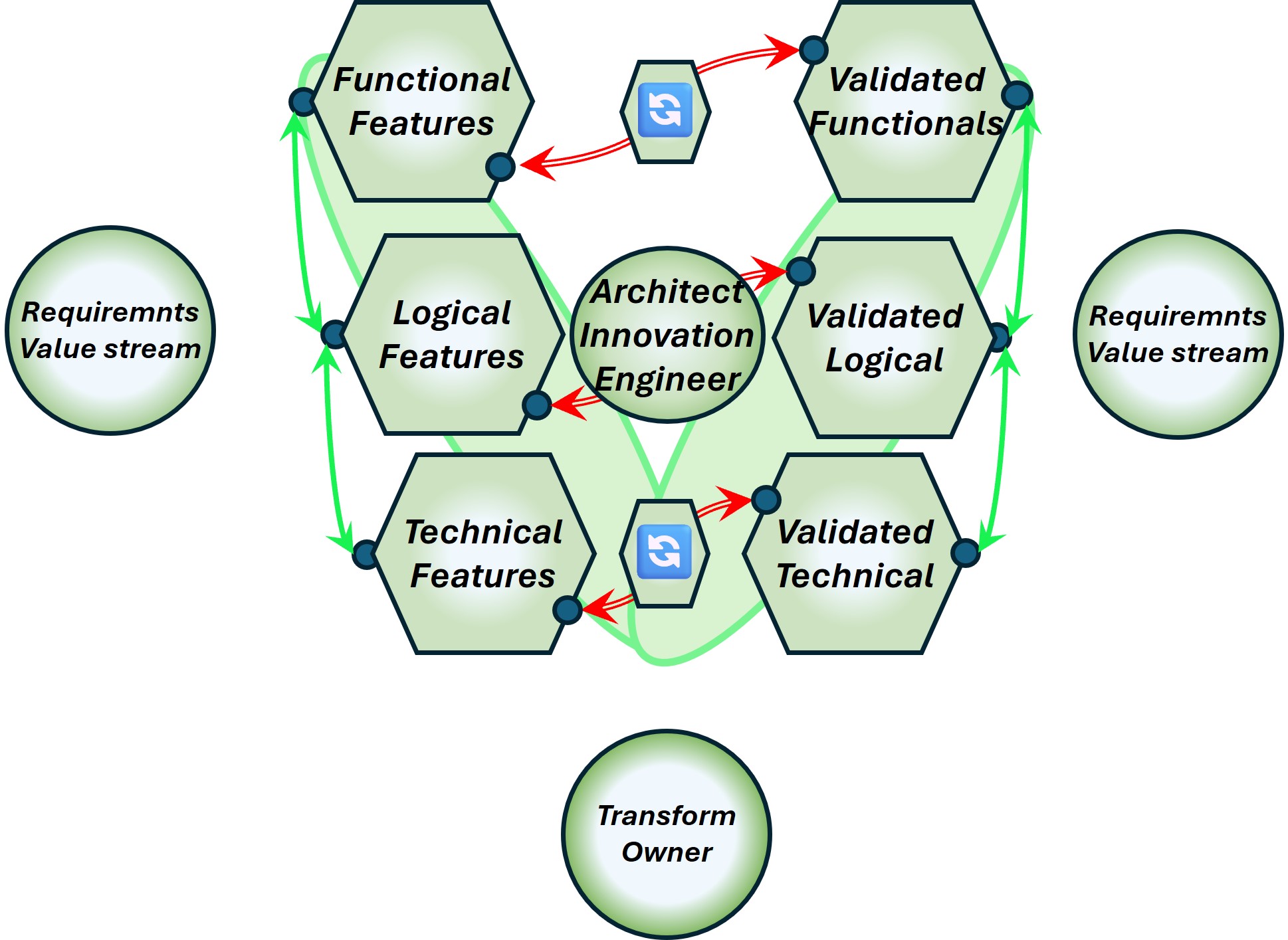

The V-model extended to a W-model

Organizing, planning the work in the primary value stream is a common activity.

Time is important for delivering results.

In engineering using the V-model is the standard, doing as much as possible in parallel.

There is no final design for every detail during construction.

The most important things at high level are however defined for achieving a defined goal.

To be extended to:

- Getting requirements, backlog items, ideas into the engineering line. (wedge model)

- Delivering validated results into specifications for the product: goods, services. (triple V)

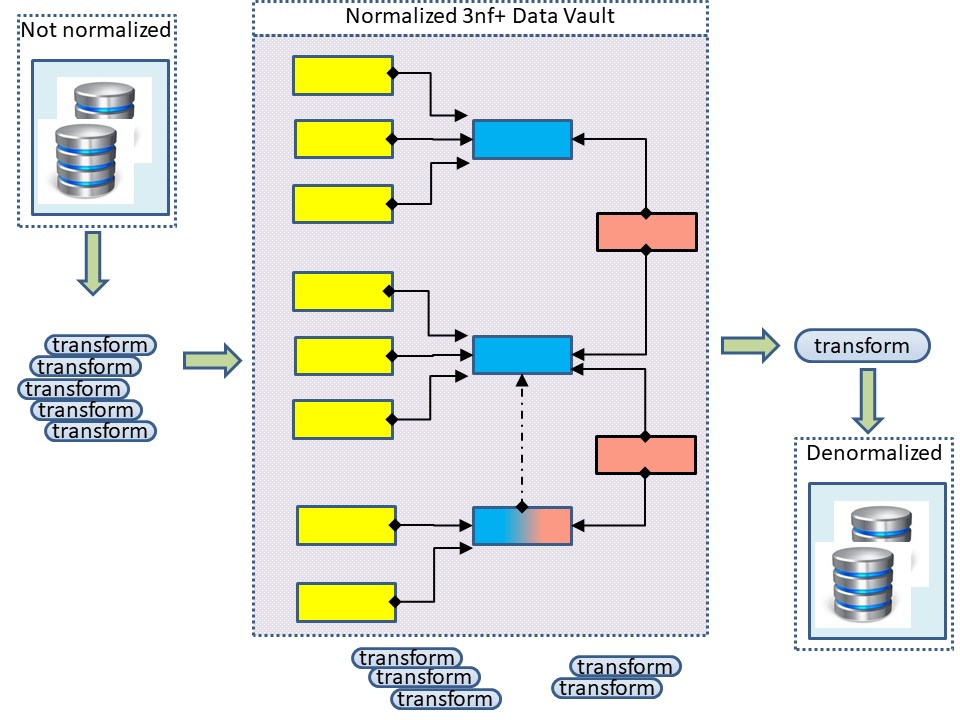

Transactional operations - Normalization

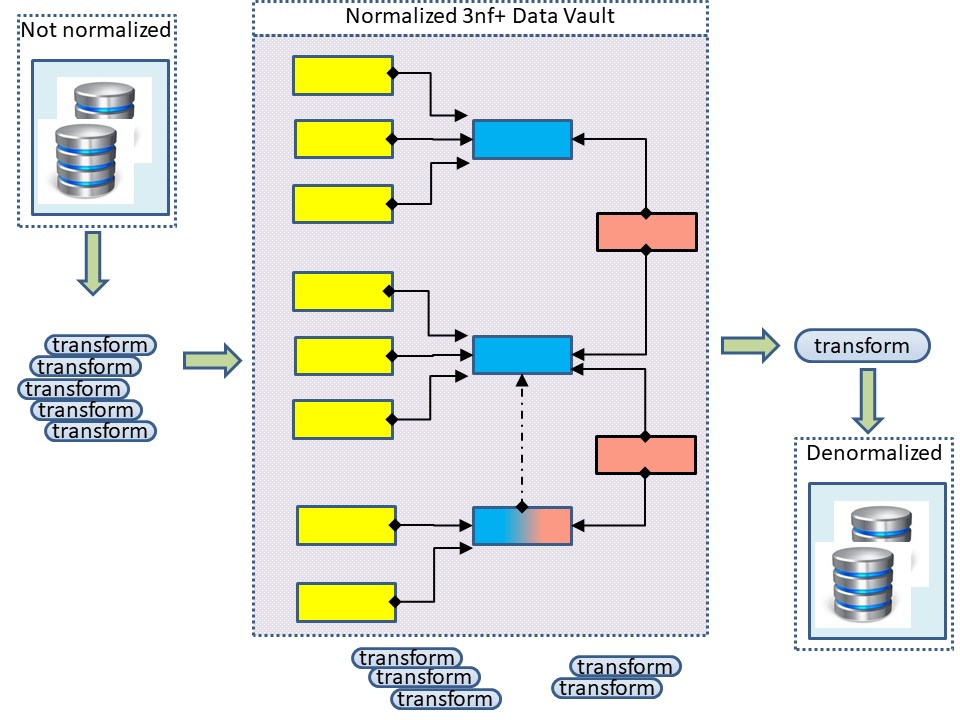

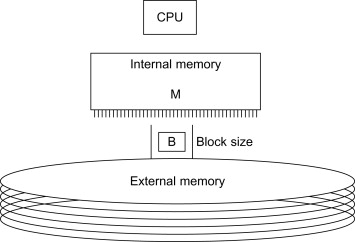

❶ In transactional systems it is important to avoid any duplication of an artefact, element, because it is too complex to keep duplications synchronized.

Details:

👓

The concept of

database normalization

is generally traced back to E.F. Codd, an IBM researcher who, in 1970, published a paper describing the relational database model.

Definion of the third Normal Form (3NF):

- Each column is unique in 1NF.

- All attributes within the entity should depend solely on the unique identifier of the entity in 2NF.

- No column entry should be dependent on any other entry (value) other than the key for the table , 3NF is achieved, considered as the database is normalized.

Reporting Business Intelligence (BI)- Denormalization

Denormalization is the process of reversing the transformations made during normalization for performance reasons.

It's a topic that stirs controversy among database experts;

Tthere are those who claim the cost is too high and never denormalize, and there are those that tout its benefits and routinely denormalize.

❷ Classic Business Intelligence are reshaping all operational data into new dedicated data models.

The reason for this is taht facts and dimension used in the operational process are not suited for reporting and analyses.

The concepts of a transactional operational data design with normalization are followed.

- The result is a lot of transformations for tables.

- What is delivered as olap or reports, is denormalised using summaries.

National language Support (NLS)

National Language Support (NLS) and localized versions are frequently confused.

- NLS ensures that systems can handle local language data.

- A localized version is a software product in which the entire user interface appears in a particular language.

NLS is about:

- string manipulation

- character classifications

- character comparison rules

- code character sets

- date and time formatting

- user interfaces

- message-text languages

- numeric and monetary formatting

- sort orders

❸ In the moment the NLS options are propagating into logical constructs the logic has become dependent on a NLS setting.

Many tools are suffering from this not wanted effects.

This also has impact on the realisation in the data processing.

👓 details

Examples:

- eclipse NLS guidelines. for modifying the tool in supporting NLS.

⚖ W-1.3.2 The what to start operational steering

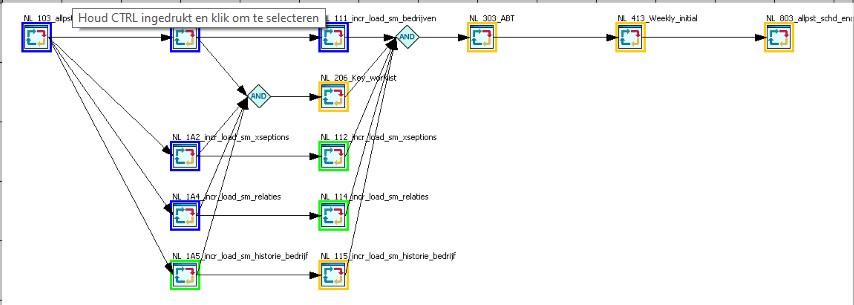

Scheduling, planning operations.

Scheduling is the other part of running processes.

Instead of defining blocks of code in a program it is about defining blocks of programs for a process.

Processes are planed in time to run in time windows with dependencies.

❹ avoiding confusion by same word other context:

- For building a program "job" is used by developers.

- For building a process flow, having a start and end, "job" is used at operations.

This "job" (process flow) can consist of many "jobs" (programs).

Running process flows will cause a work load for the system (technical infrastrucuture)

- The developers, operations, examples of staff are doing their "job" (work).

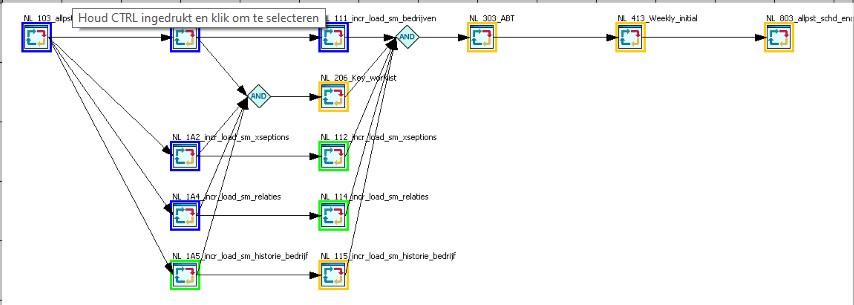

Building a process flow

Building a process flow (job) is defining the order how to run code units (jobs).

- Defining the first and last progrm units.

Used for initialisation and a message of a successful finish.

- Dependencies when a next code unit may run, which ones to wait to get ready.

- Allowing for multiple code units to run when there are no dependencies:

- Allowing a single process flow being active at one moment or having multiple of the same process flow running at the same moment.

When parallel flows are allowed, unique application datasets are needed.

See figure, link

👓, details.

Operational control process flows I

Operational task: Monitoring the progress within a running process.

When automated there is only human interaction needed when there is signal of things going wrong.

- blue ready,

- green running,

- yellow waiting,

- red in error.

Andon ,

stop the line , and do not push the problem downstream.

❺ Human intervention ready for action (Andon).

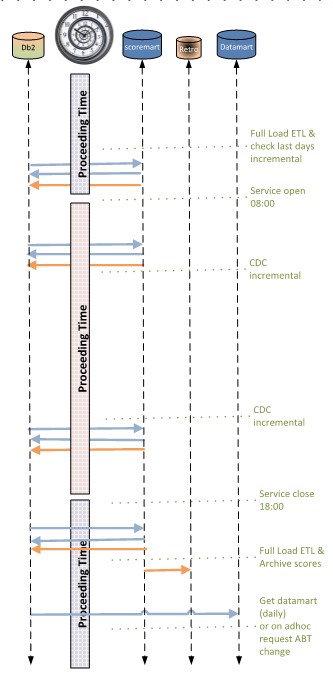

Running planned proces flows

Having process flow defined the planning is:

- when they should run, able to start.

- when they should be ready.

- Dependencies between flows when running

- Dependencies between programs when running

- what impact there is on technical system resources.

- what impact there is on technical system resources.

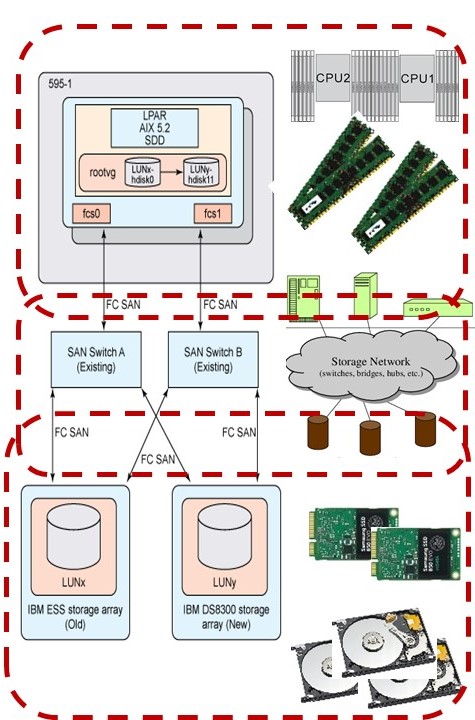

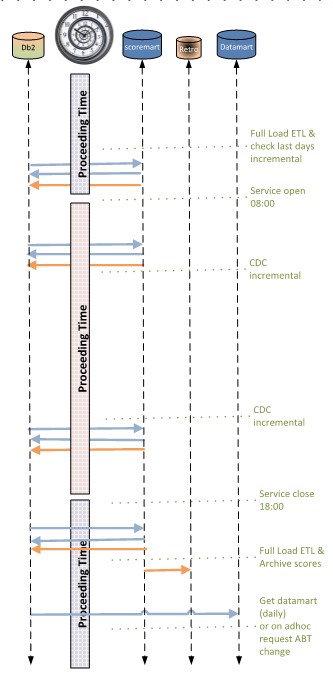

In the example, see figure:

- early morning, out of office hours, a full load of several warehouses is run.

The full load in this case was faster than trying to catch all changes.

An additional advantage: missing changes in the source system will not have a big impact as the longest data synchronisation delay is one day.

- During office hours every 15 minutes update for changes. Achieve a near real time updated version.

Developing a system like this is more easy, understandable when the scheduling and program units are designed and build as a system.

See figure, link

👓, details

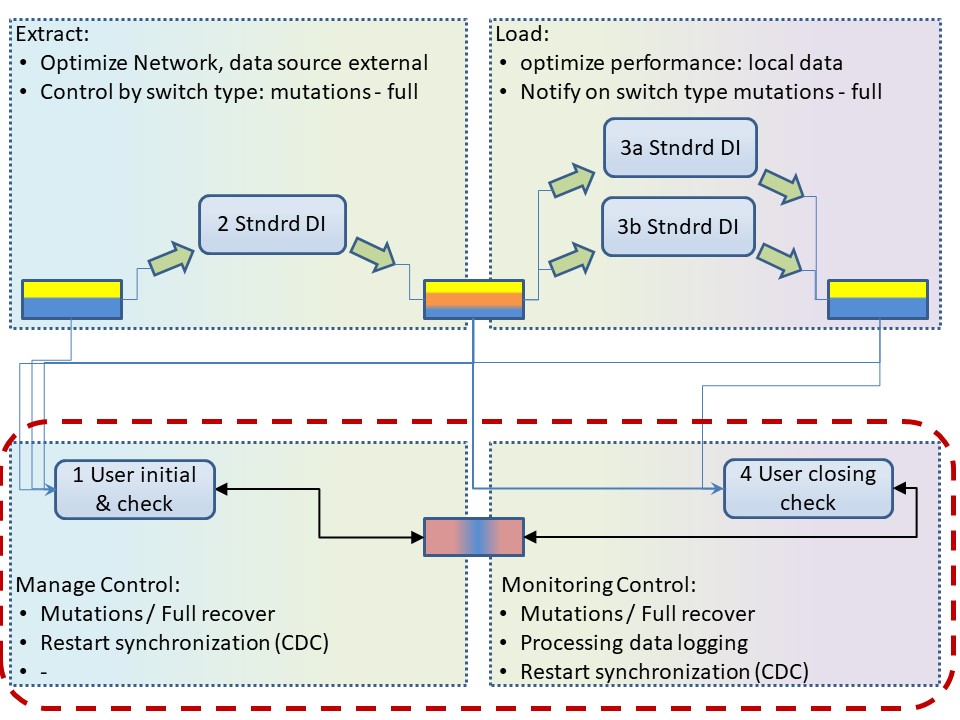

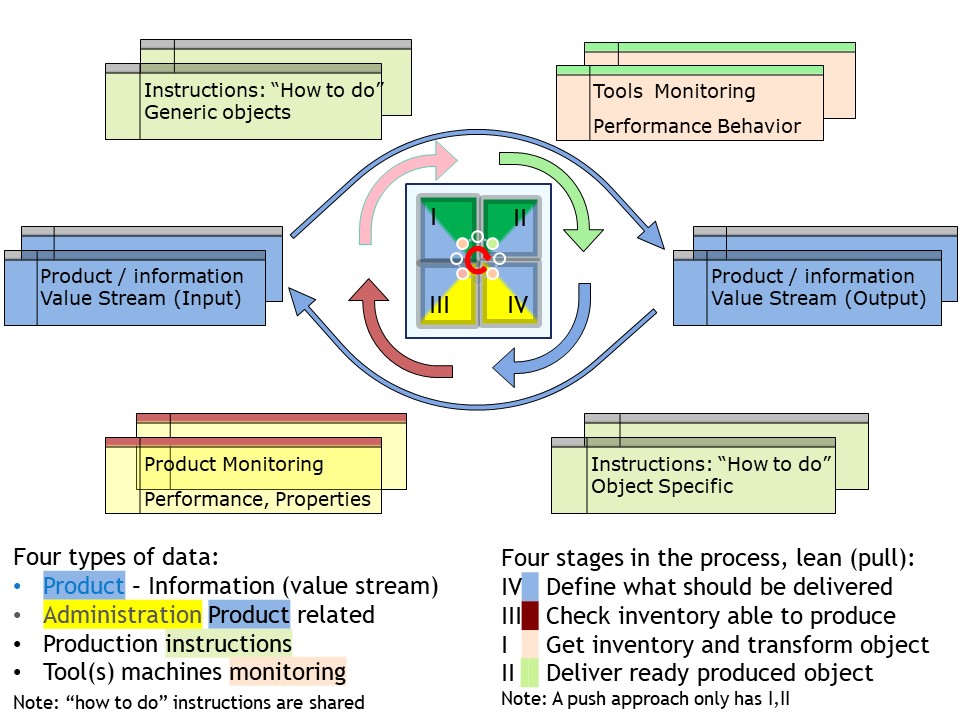

❻ What is processed are indicators of deliveries, results for information products.

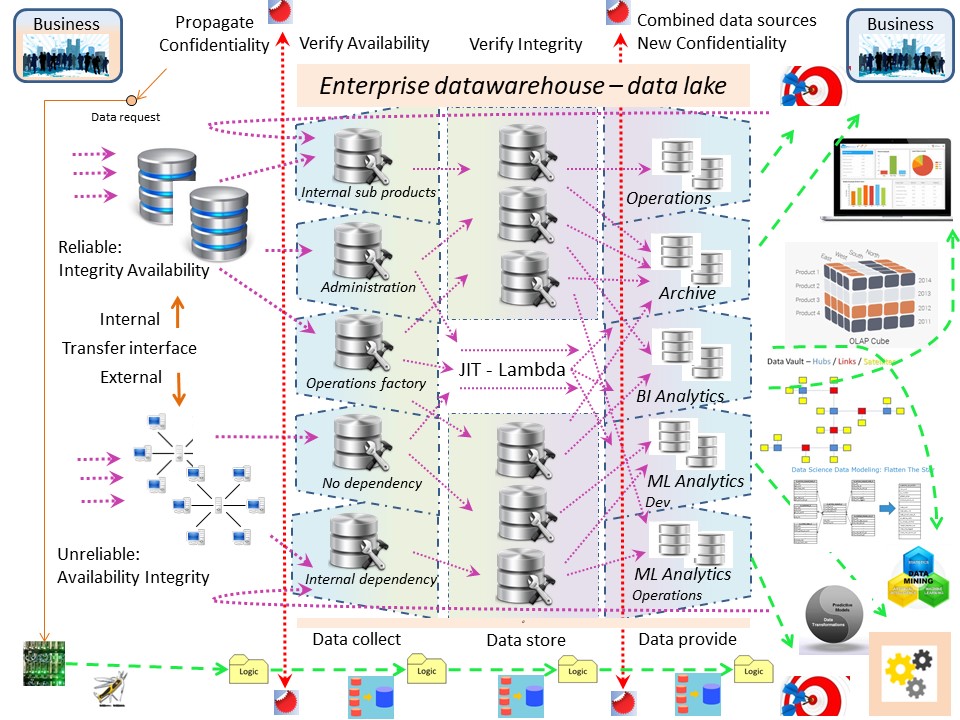

⚖ W-1.3.3 Understanding the data flow, data lineage

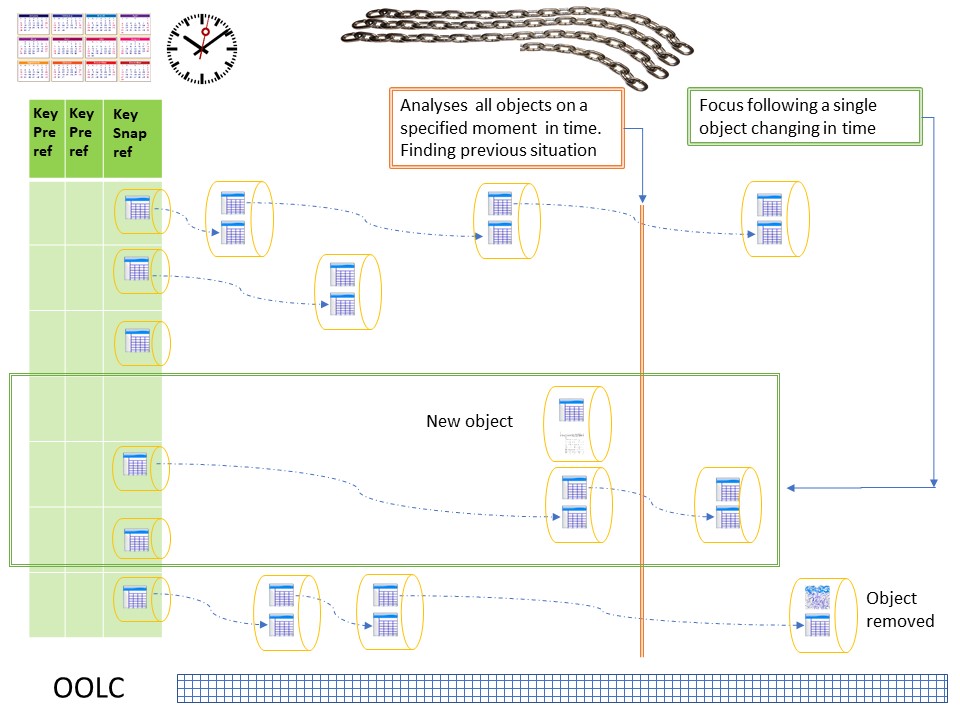

data lineage following the cycle

Knowing what information from what source is processed into new information at a new location is lineage (derivation),

"data lineage" .

❼

Understanding changes in data requires understanding the data chain, the rules that have been applied to data as it moves along the data chain, and what effects the rules have had on the data.

Data lineage includes the concept of an origin for the data—its original source or provenance—and the movement and change of the data as it passes through systems and is adopted for different uses (the sequence of steps within the data chain through which data has passed).

Pushing the metaphor, we can imagine that any data that changes as it moves through the data chain includes some but not all characteristics of its previous states and that it will pick up other characteristics through its evolution.

Data lineage is important to data quality measurement because lineage influences expectations.

In a figure,

See right side.

Details 👓

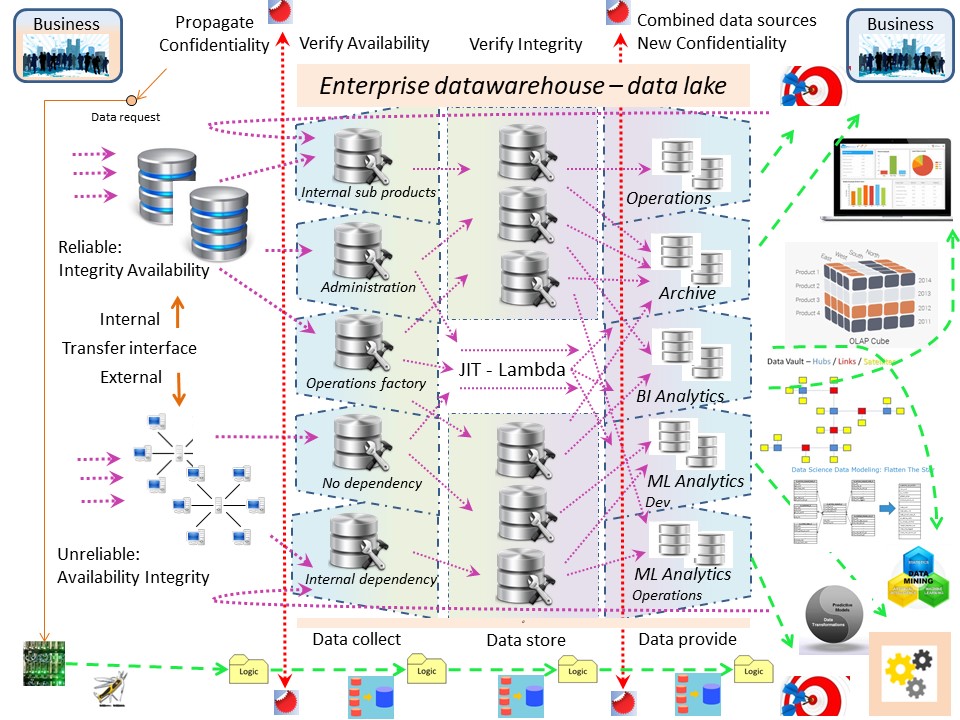

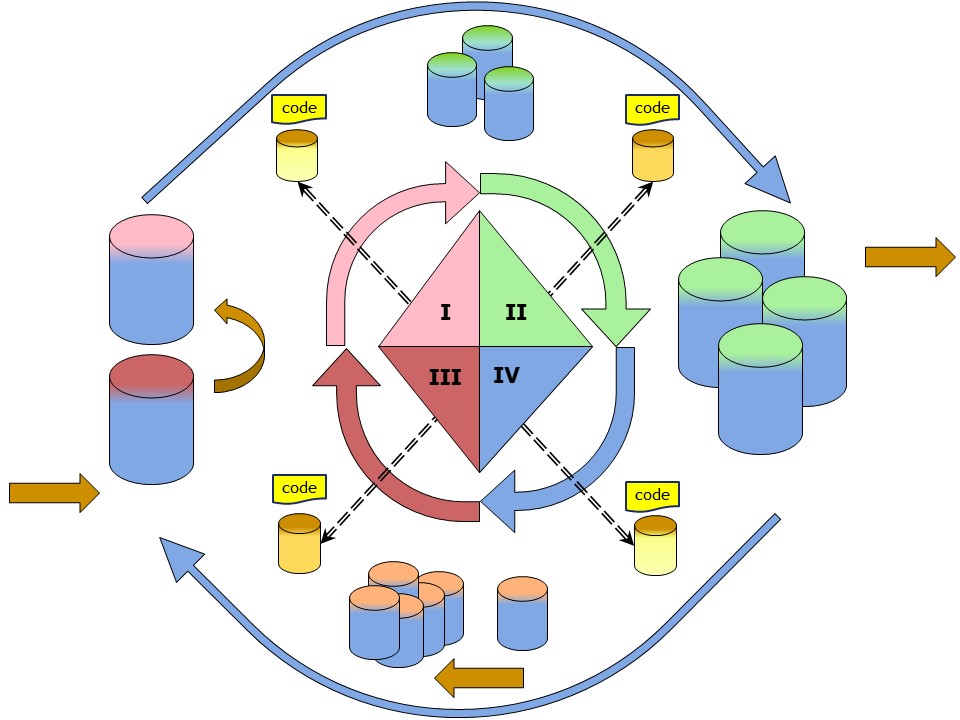

Capacity Considerations, the enterprise data warehouse (EDW)

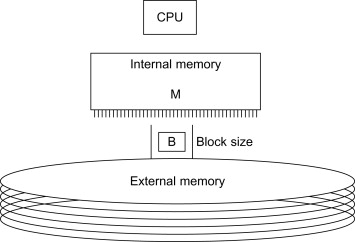

A standardised location in normal information processes using data is brings normal capacity questions.

Change data - Transformations

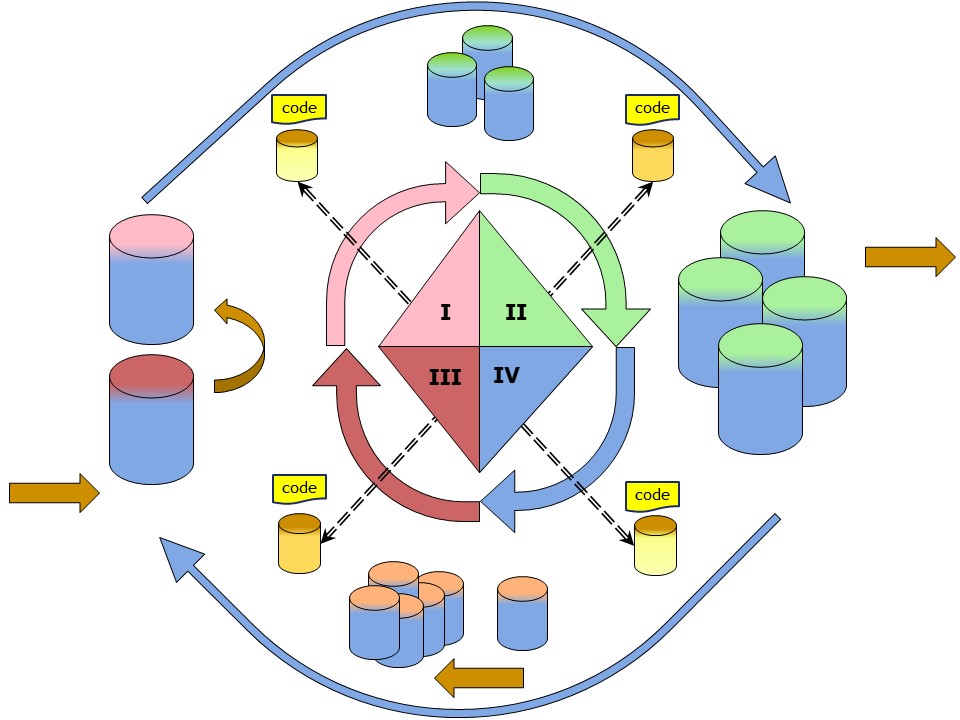

More in details the transport of data, flow goes:

- Landing to warehouse collecting point(s).

- Staging transported internally to service technically according agreements.

- Semantic prepatransported internally for best service according agreements.

- Databank to a customer from the warehouse provision point(s).

This breaks with the common acceptance of using a data ware house.

The data warehouse is not used for operational processes but only for doing analytics to inform decision makers.

In normal industrial approaches the ware house is used for operational processes.

Measuring what is going on, informing decision makers is a different topic, different information flow.

The enterprise warehouse 3.0:

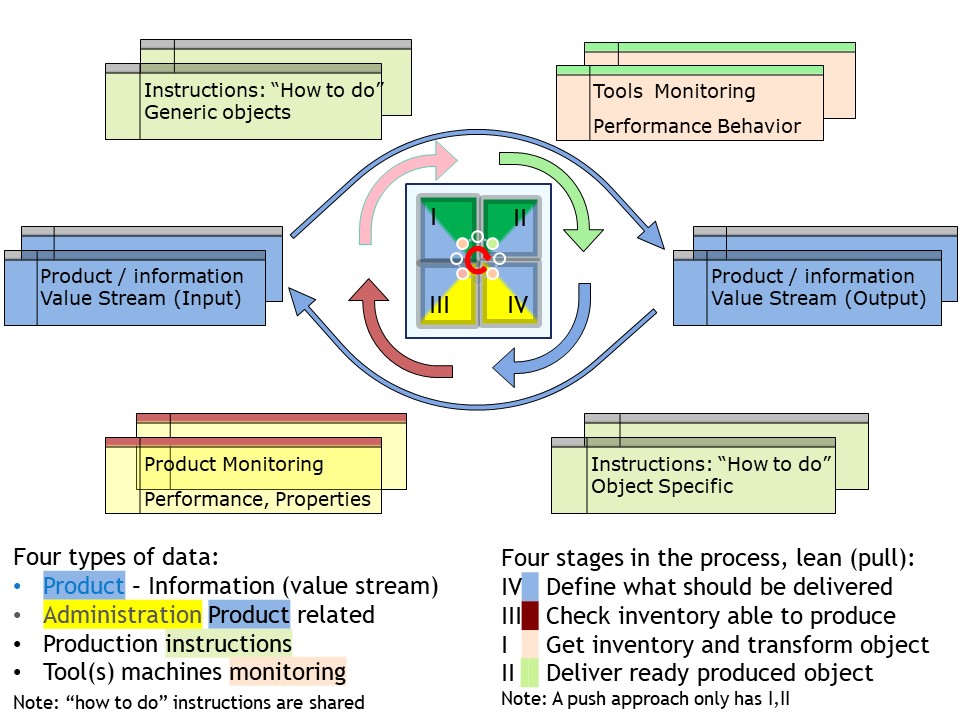

- Covers the operation information flows by four stages

- Dedicated flows of measurements, supporting closed loops are a part of the offering.

- Modelling data, information with all very detailed relationships is not a function of a Datawarehouse.

In a figure,

See right side.

Details 👓

In a figure,

See right side.

When the Collecting and sending area's of the EDW 3.0 are the ones that are most limiting the flow, the planning is best done for traffic by managing this service.

❽ Data lineage, data quality, information quality is "by design" of the information products.

⚖ W-1.3.4 Roles tasks levels supporting Information Services

Building up the Information lineage bottom-up

❾ Every floor level is build on the next one by logical dependencies.

When a task has not found its destination on the intended floor, ad-hoc bypasses are used.

Information quality, service product

A specification can be clearly and completely, consistently and concisely specified by means of standard attributes that conform to the MECE principle (Mutually Exclusive, Collectively Exhaustive).

The MECE principle is used in mapping process wherein the optimum arrangement of information is exhaustive and does not double count at any level of the hierarchy.

By reorganizing the information using MECE and the related storytelling framework, the point of the topic can be addressed quickly and supported with appropriate detail.

SCQA: Situation, Complication, Question, and Answer, a brief overview:

- Situation: Sets the context or background for the issue at hand.

- Complication: Introduces the problem or challenge that disrupts the situation.

- Question: This is the central question that arises from the complication.

- Answers, choices: Provides solutions and/or responses to the question.

From this a generic approach for pruducts, goods and/or services:

- Consumer benefits: Set of benefits that are triggerable, consumable and effectively utilizable for consumer.

These benefits must be described in terms that are meaningful to consumers.

- Specific functional parameters: Parameters that are essential and that describe the important dimension(s) of the escape, the output or the outcome.

- Delivery point: The physical location and/or logical interface where the benefits are rendered to the consumer.

At this delivery preparation can be assessed, delivery can be monitored and controlled.

- Consumer count: the number of consumers that are enabled to consume a product.

- Delivery readiness time: the moments when the product is available and all the specified elements are available at their delivery point

- Consumer support times: the moments when the support team ("service desk") is available.

The service desk is the Single Point of Contact (SPoC) for service inquiries.

At those times, the service desk can be reached by defined available communication methods.

- Consumer support language: the language(s) spoken by the service desk.

- Fulfilment target the provider's promise to deliver the product, expressed as the ratio of the count of successful product deliveries to the count of requests by a single consumer or consumer group over some time period.

- Impairment duration: the maximum allowable interval between the first occurrence of a product impairment and the full resumption and completion of the product delivery.

- Delivery duration the maximum allowable period for effectively rendering all product benefits to the consumer.

- Delivery unit the scope/number of action(s) that constitute a delivered product.

Serves as the reference object for the product delivering price, for all product costs as well as for charging and billing.

- Delivery price the amount of money the customer pays to receive a product.

Typically, the price includes a product access price that qualifies the consumer to request the product and a price for each delivery.

❿ In a mature situation all levels of support are in place and aligned with their antipodes.

W-1.4 Culture building people

Managing the working force at any non trivial construction is moving to the edges.

The cultural changes are:

- Respect for people, learning investments at staff

- Accepting uncertainties and imperfections

- Trusting the working force while getting also well informed

Non trivial means it will be repeated for improved positions.

Managing the working force at processes, information is needed for understanding what is going on.

Without knowing the situation or direction there is no hope in achieving a destination by improvements.

⚖ W-1.4.1 Project management, the change challenges

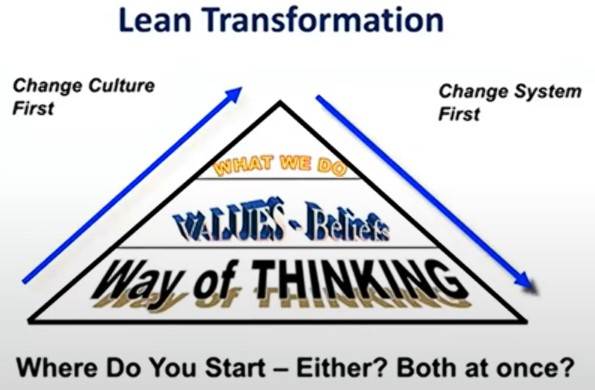

Culture by frameworks, hypes

There is for a many years a fight going on in the information technology world how work should get managed.

Instead of learning from other STEM, Science Technology Engineering Mathematics, what they have learned and what is is possbile, reinventing the wheel is common.

- The waterfall vas Agile fights, without understanding the why.

- Forcing uSing tools, tooligans, without understanding the why.

No such thing as Waterfall (A.Dooley 2024)

The Agile movement has greatly enriched the project management landscape.

Unfortunately the ‘cult of Agile’ is doing more harm than good with its narrow evangelical views.

...

It was perhaps naïve of me to not anticipate that there are many, many different views of what the term 'Waterfall' actually means.

That shouldn't have been a surprise since there is a similar lack of common understanding of what 'Agile' actually is, so if Waterfall is the antonym of something that isn't well defined, why would I expect the term Waterfall itself to be well defined? ...

All of this has reinforced my view that in the world of project and programme management, we should stop using the terms Agile and Waterfall (and, as a consequence, Hybrid) and just talk about agility.

All projects demonstrate some degree of agility at some point in their life cycle and agility can take many forms.

The confusion of:

- managing building a product ⇆ organizing resources

- the building of the product ⇆ engineering

Development life cycles focus on the delivery phase of a project or programme and often arise from particular domains such as construction, engineering or IT.

They should not be confused with governance life cycles or specialist life cycles. ...

As uncertainty about the detail of objectives increases, development life cycles need to be more iterative so they can adapt as more information becomes available.

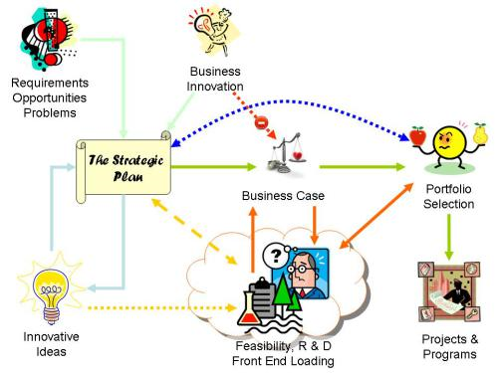

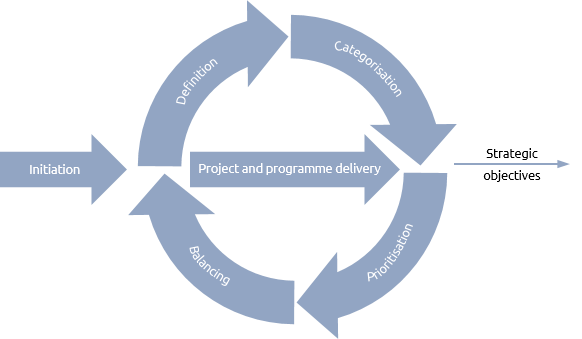

Future for project, programme management

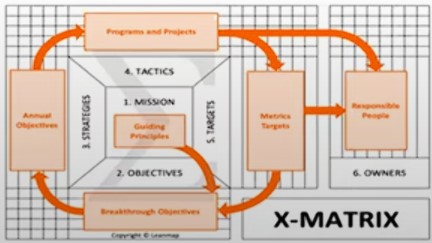

Vision is linking

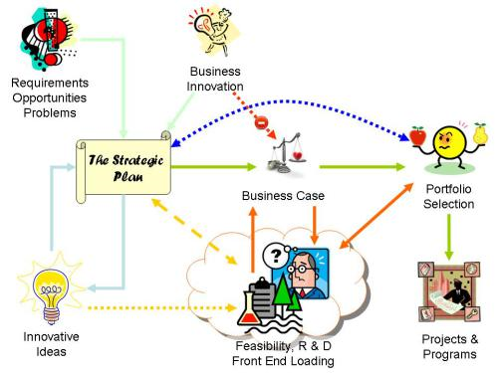

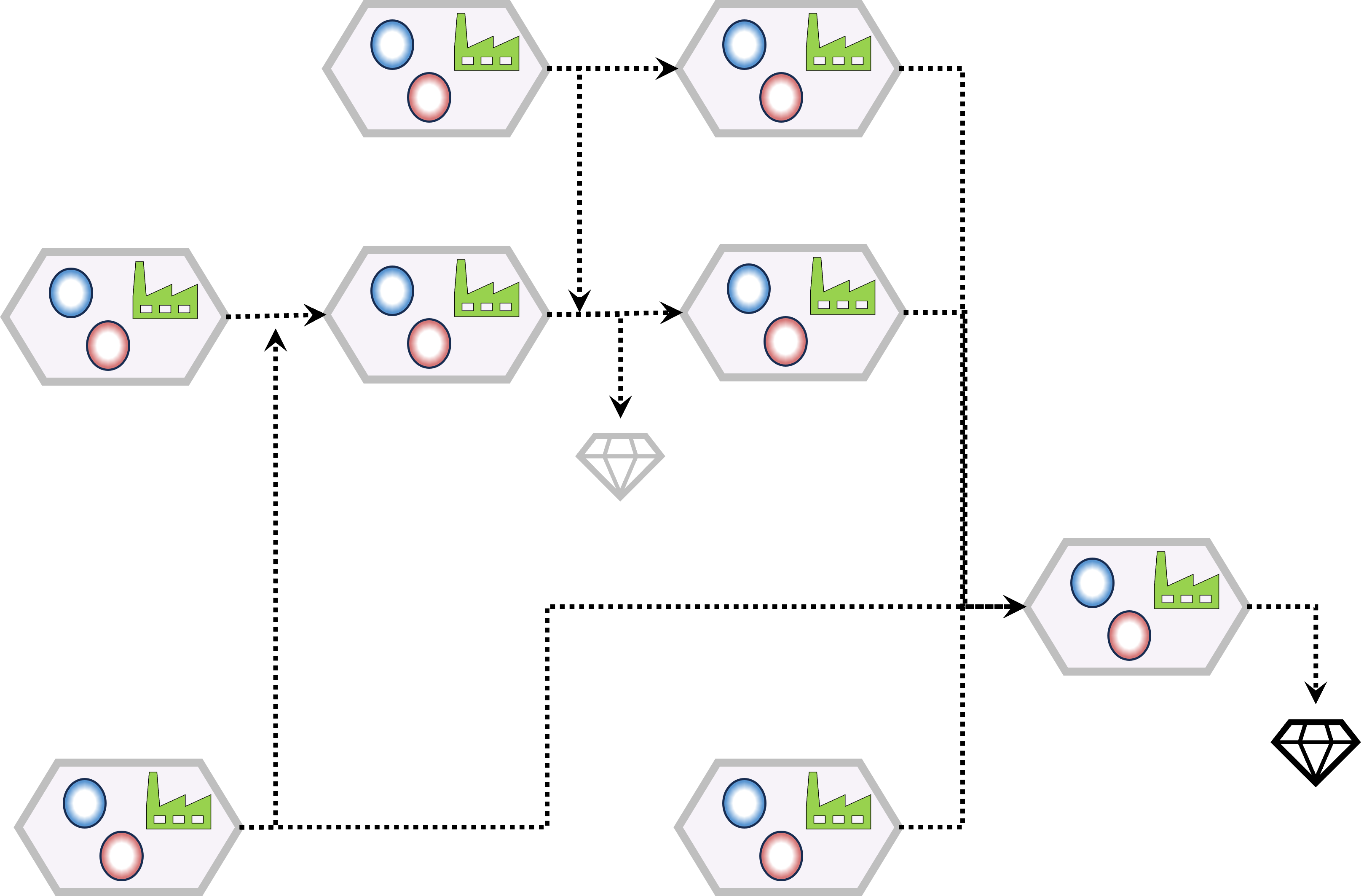

Innovation and value (L.Bourne)

The key is an effective and viable strategic planning process that is capable of developing a realistic strategy that encompasses both support and enhancements for business as usual, and innovation.

Strategic planning is a complex and skilled process outside of the scope of this post, for now we will assume the organisation is capable of effective strategic planning. ...

There is a close link between the portfolio management processes and strategic planning, what's actually happening in the organisation's existing projects and programs is one of the baselines needed to maintain an effective strategic plan (others include the current operational baseline and changes in the external environment).

In the other direction, the current/updated strategy informs the portfolio decision making processes.

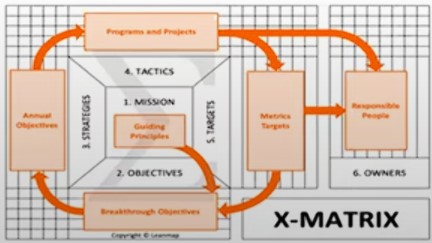

In a figure:

See right side.

The strategic plan is the embodiment of the organisation’s intentions for the future and the role of portfolio management is to achieve the most valuable return against this plan within the organisation’s capacity and capability constraints. ...

👉🏾 The long term viability of any organisation depends on its ability to innovate.

⚖ W-1.4.2 Project into Programme into Portfolio (3P)

Planning, a life cycle for a product, service

life-cycle

Project, programme and portfolio management (P3M) is the application of methods, procedures, techniques and competence to achieve a set of defined objectives.

The goals of P3 management are to:

- deliver the required objectives to stakeholders in a planned and controlled manner;

- govern and manage the processes that deliver the objectives effectively and efficiently.

Investment in effective P3 management will provide benefits to both the host organisation and the people involved in delivering the work. It will:

- increase the likelihood of achieving the desired results

- ensure effective and efficient use of resources

- satisfy the needs of different stakeholders

A consistent approach to P3 management, coupled with the use of competent resources is central to developing organisational capability maturity.

A mature organisation will successfully deliver objectives on a regular and predictable basis.

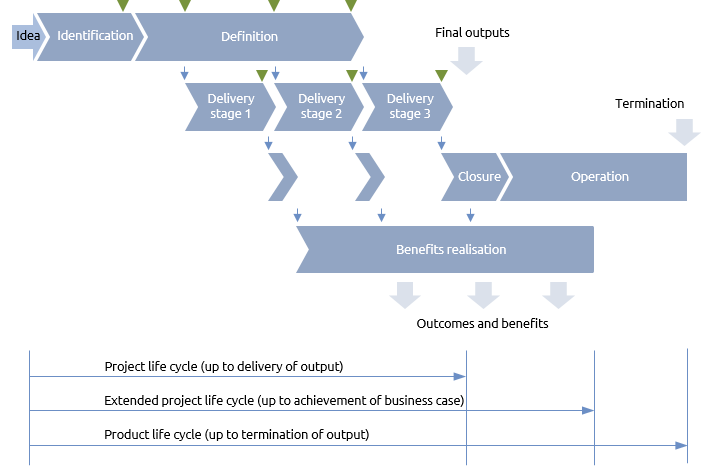

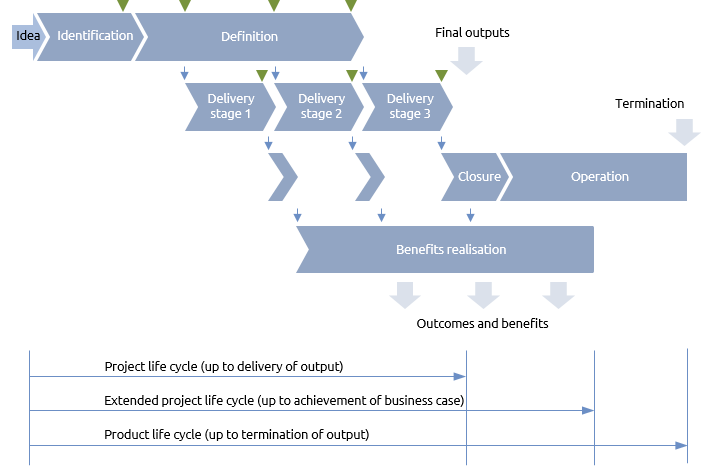

A P3 life cycle illustrates the distinct phases that take an initial idea, capture stakeholder requirements, develop a set of objectives and then deliver those objectives.

The goals of life cycle management are to:

- identify the phases of a life cycle that match the context of the work

- structure governance activities in accordance with the life cycle phases

Projects and programmes are the primary mechanisms for delivering objectives while portfolios are more focused on co-ordinating and governing delivery of multiple projects and/or programmes.

As a result the project and programme life cycles have many similarities and follow the same basic approach.

The simplest life cycle is a project life cycle that is only concerned with developing an output.

Programme

A typical programme life cycle is shown.

Steps:

- idea,

- identification,

- definition,

- delivery (1-n),

- closure,

- output.

Benefits realisation start at the first delivery.

See figure.

It all starts with someone having an idea that is worth investigation.

This triggers high level requirements management and assessment of the viability of the idea to create a business case.

At the end of the phase there is a gate where a decision made whether or not to proceed to more detailed (and therefore costly) definition of the work. ...

The full product life cycle also includes:

- Operation – continuing support and maintenance

- Termination – closure at the end of the product’s useful life

In a parallel project life cycle, most of the phases overlap and there may be multiple handovers of interim deliverables prior to closure of the project.

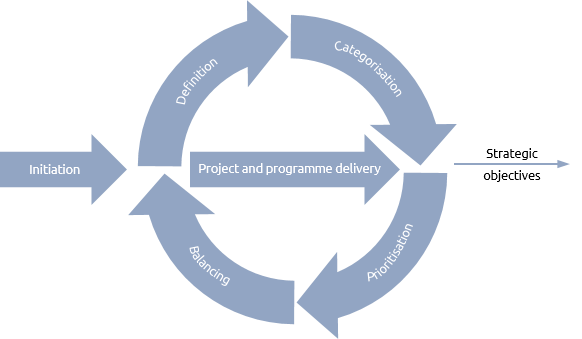

Portfolio

Unlike projects and programmes, portfolios are less likely to have a defined start and finish.

Portfolio management is a more continual cycle coordinating projects and programmes.

It may, however, be constrained by a strategic planning cycle that reviews strategy over a defined period.

If an organisation has, for example, a three-year strategic planning cycle, then the portfolio cycle will have compatible time constraints.

👉🏾 The portfolio management team may be responsible not only for co-ordinating the projects and programmes to deliver strategic objectives, but also for improving the maturity of project, programme and portfolio management.

⚖ W-1.4.3 Managing flow in activities, portfolios

TOC Theory of constraints

BlueDolphins Love the FLOW

To make the long story (s. above) short, "the current accounting is so complex because it tries to optimize everything!

The main assumption of accounting is:

- every team or department has to be efficient, means loaded at 100%.

- if you load every team to 100%, then the overall output is also optimized!

But that is impossible because every system has exactly one constraint.

Without a constraint, it would grow with infinite speed, explode or exhaust all resources. And will die immediately. ...

This algorithm based on the Theory of Constraints (TOC) and Throughput Accounting (TA) is easier because every step is deterministic and easy to understand for everyone.

TOC Dolphins book: Management 4.0, Handbook for Agile Practices (3.0 )

The buzzwords “Agility, Agile or Agile Management” are often interpreted as miracle-workers.

But the number of different meanings attributed to these terms is immense: There are thousands of experts and tens of thousands of books and articles on what agile work actually is.

Subject of agility: everyone is an expert, everyone knows how to do it best. ...

This book was conceived as a manual or "handbook" and ended up as a "brain book".

It is full of concepts and principles, some rough and coarse, some fine polished.

But all help to understand and put into practice the agile movement, and to ride this great wave without sinking!

Highlights:

-

It is often quite astonishing to see how seldom, operative problems seem to attract management attention in today’s large corporations, unless they impact on the tactical strategies of the manager involved.

A more integrated approach is needed, to encourage management to focus on the total throughput of the company, rather than on the individual interests of single departments.

-

“Agile Manifesto” The concept is based on the delegation of responsibilities, self-organization and incremental development steps, which allow a flexible response to customer needs.

These principles can also be transferred to general management tasks.

It would be a fatal misunderstanding though, to see such an approach as an IT project.

-

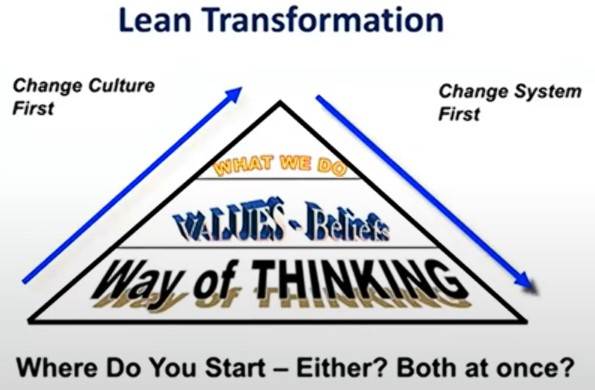

To achieve an effective transformation the company needs to bring about nothing less than a complete culture change.

Management has to relinquish its monopoly on information ownership, which may be perceived as loss of power.

-

Of course management still retains responsibility for steering the company as a whole in the right direction, yet its role has changed.

As coaches to cross-functional teams, they need to cooperate closely with management colleagues.

👉🏾 It is necessary for management to collaborate, in order to eliminate bottlenecks for the teams, by focusing on the total throughput.

There is no longer room for individual power play between departments, as all teams have cross-functional tasks.

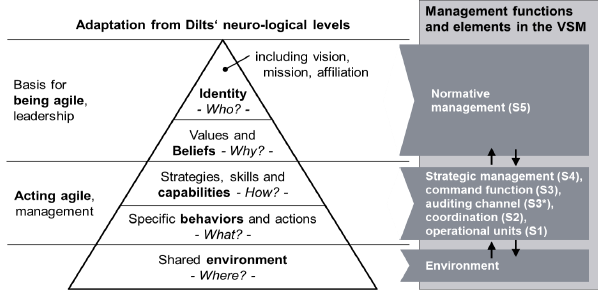

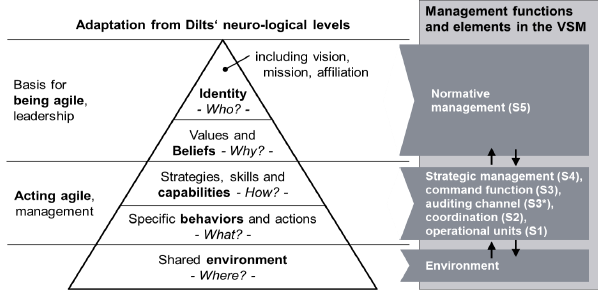

Managing a living viable system

Current enterprises are, to a great extent, pushed by a permanent demand for change and adaption.

One of their main requirements therefore is their ability to react accurately and precisely to dynamic and quickly changing market demands.

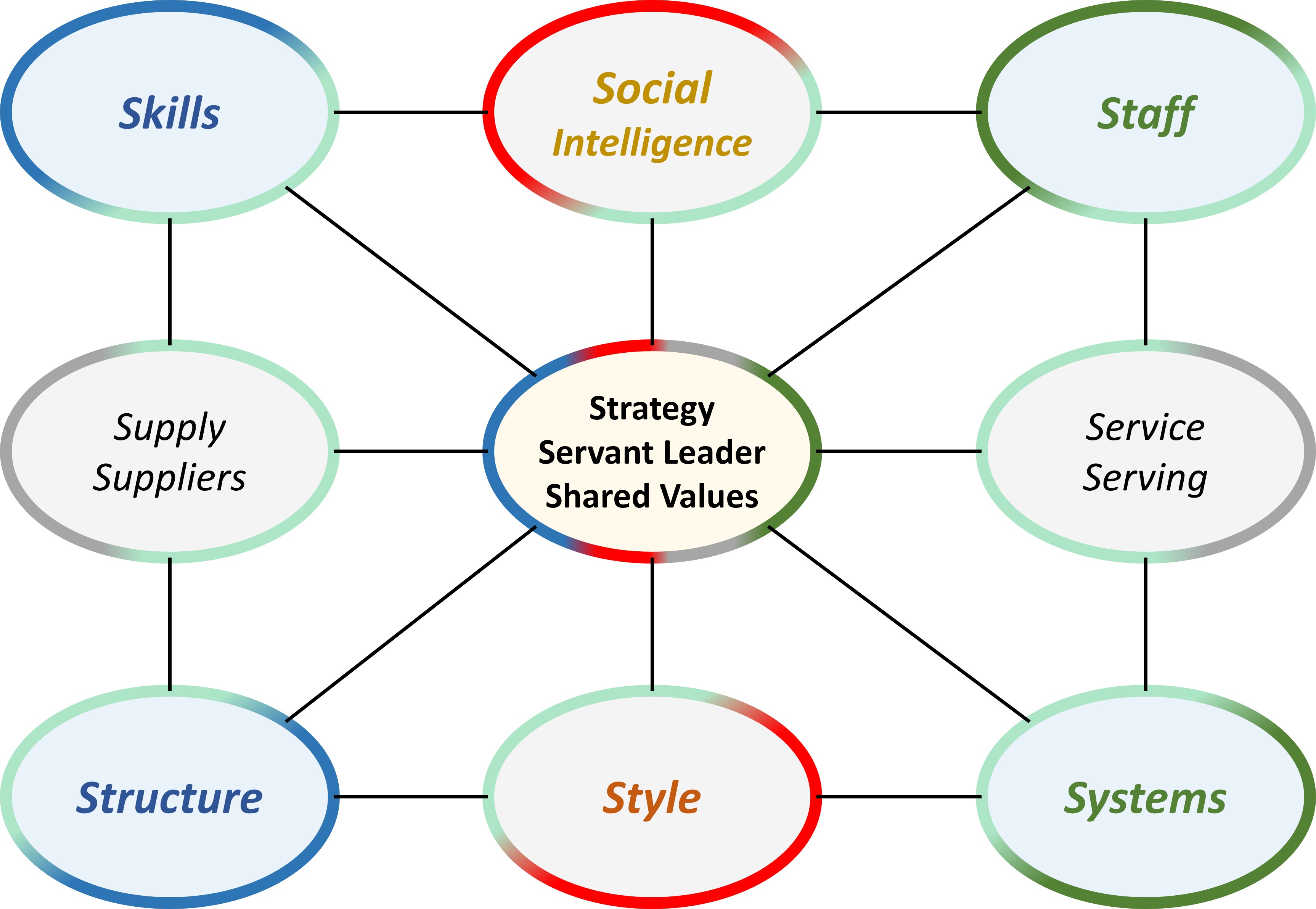

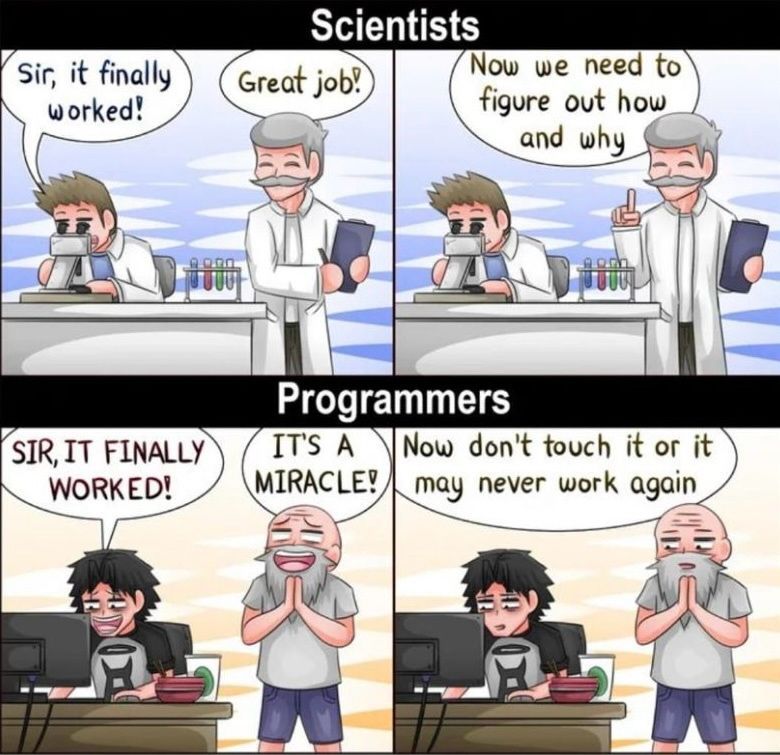

Cybernetic combined with a hierarchy 6w1h.

Management cybernetics provides a structural framework of managerial functions and the required interactions that will enable the long-term success of businesses. ...

changing the normative setting in an organization is crucial for reaching the desired synergy effects, i.e. initiating a process where “the whole is greater than the sum of its parts” (Aristotle).

The main driver for a collective interconnection between people, is

- firstly the development of a collective vision or a shared corporate goal (“big picture”)

- secondly a corporate culture based on confidence and mindful appreciation between the representatives of management functions and operational units.

... We cannot stress strongly enough how important it is from our point of view that any increase in S1’s self-organizing capabilities should always be accompanied by an agile reshaping of the higher management functions S2 through S5 in the sense described above.

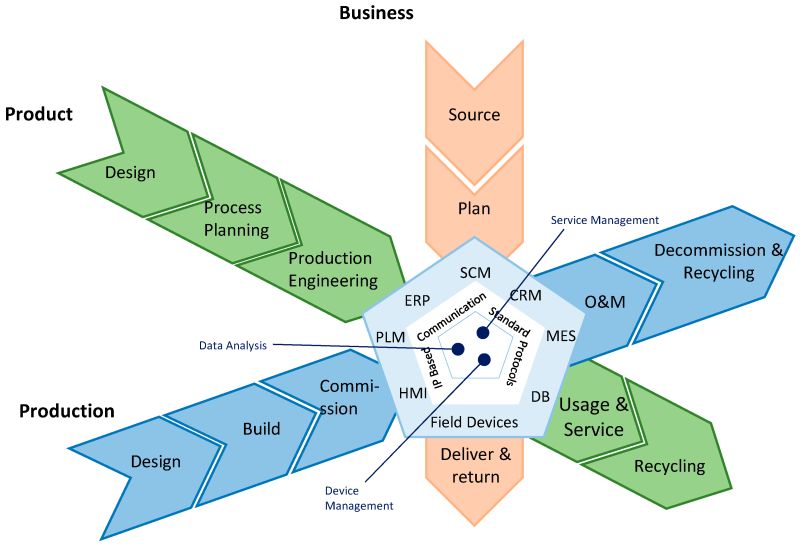

⚖ W-1.4.4 CPO, CPE, Managing living viable systems

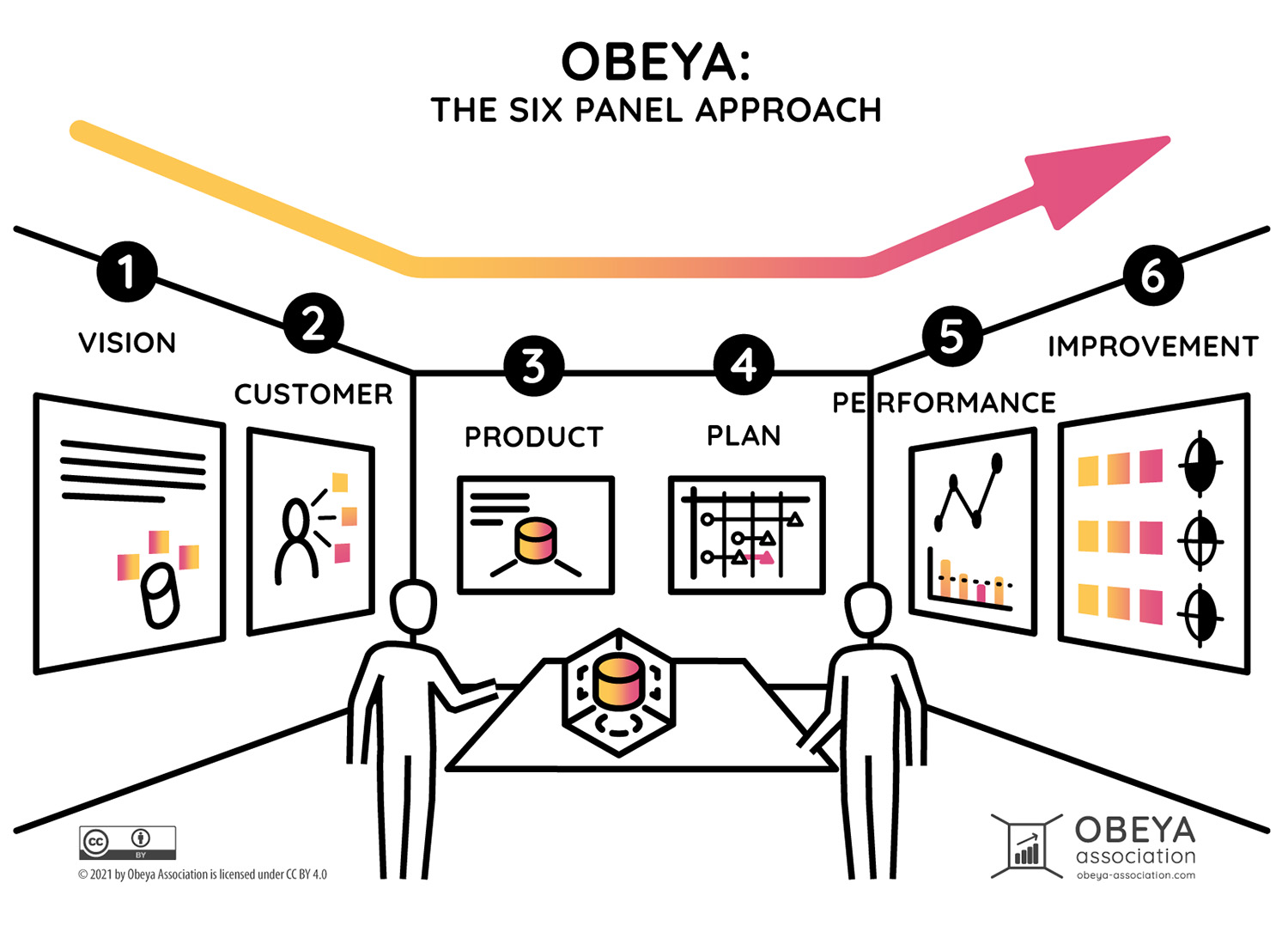

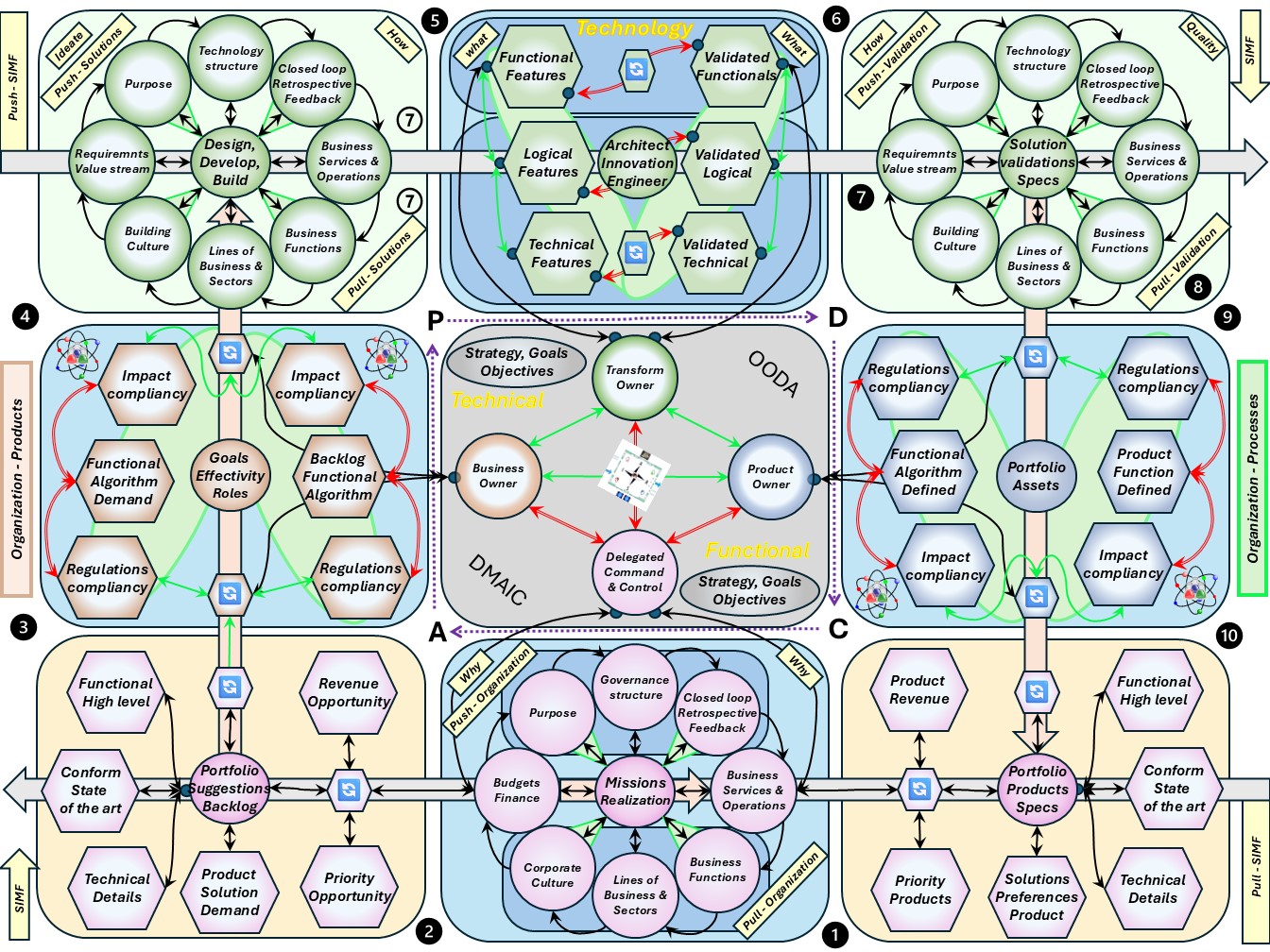

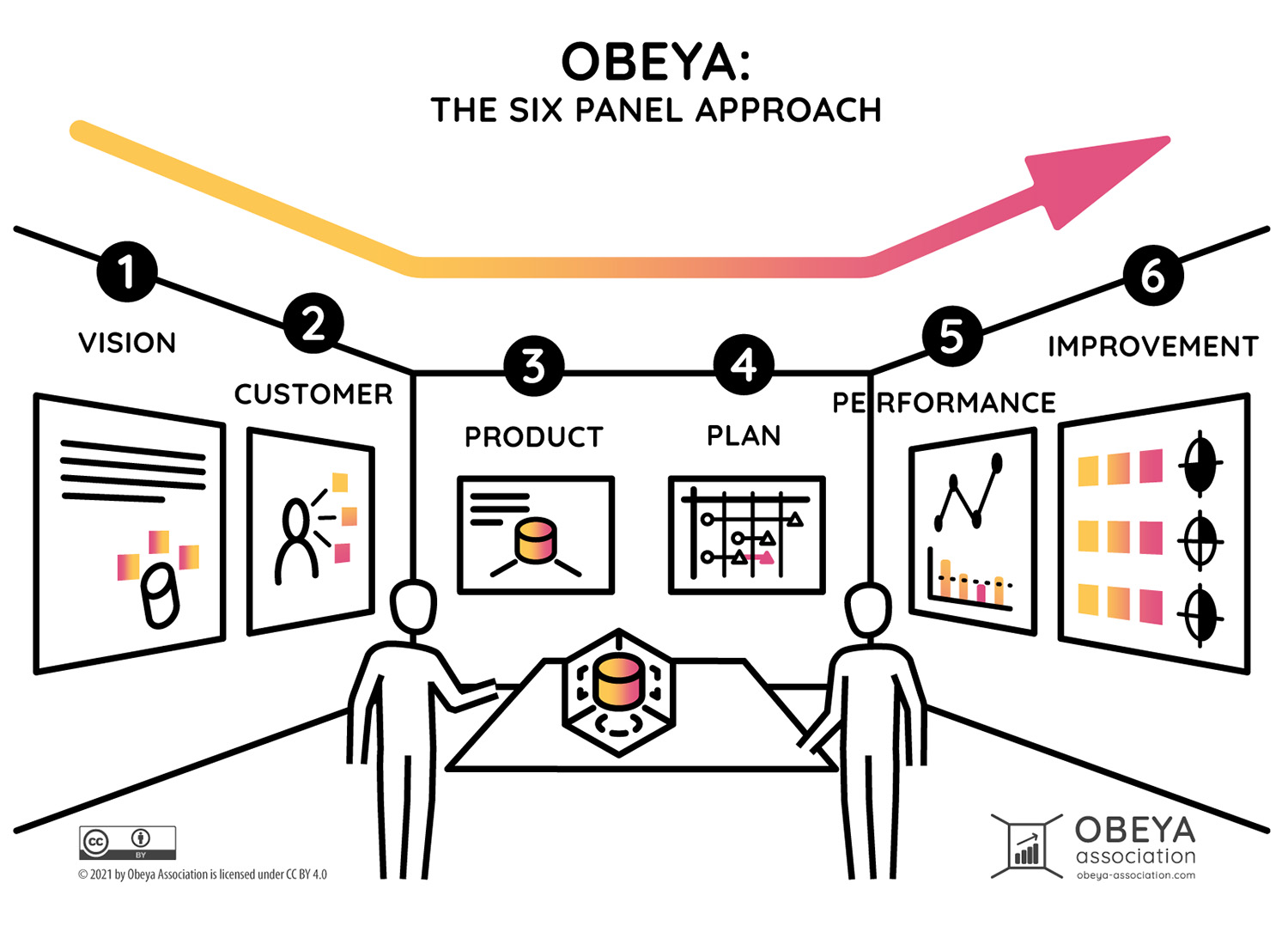

Lean Product and Process Development (LPPD)

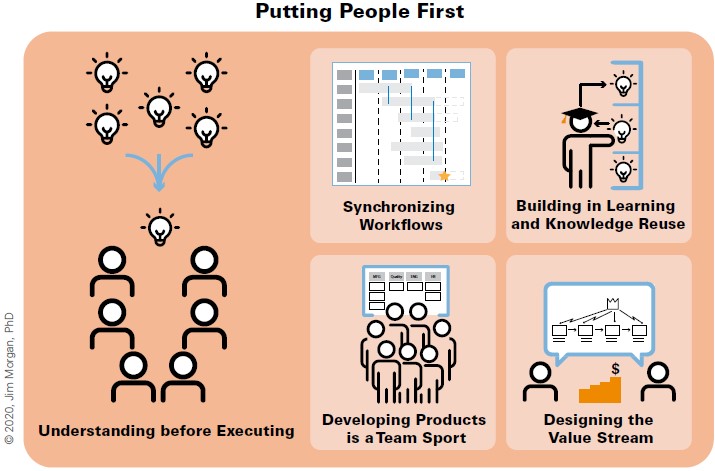

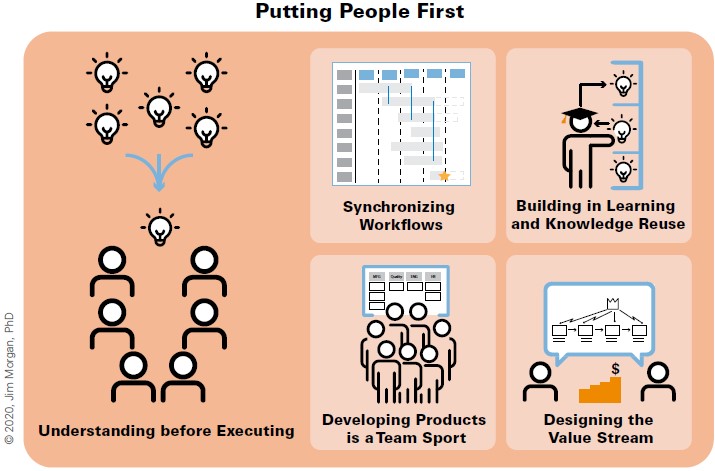

LPPD Guiding Principles (Jim Morgan, Lara Harrington, Steve Shoemaker)

CPO Chief product officer, the goal focus on the product, good or service.

The LPPD Guiding Principles provide a holistic framework for effective and efficient product and service

development, enabling you to achieve your development goals.

- Putting People First: Organizing your development system and using lean practices to support people to

reach their full potential and perform their best sets up your organization to develop great products and

services your customers will love.

- Understanding before Executing: Taking the time to understand your customers and their context while

exploring and experimenting to develop knowledge helps you discover better solutions that meet your

customers’ needs.

- Developing Products Is a Team Sport: Leveraging a deliberate process and supporting practices to engage

team members across the enterprise from initial ideas to delivery ensures that you maximize value creation.

- Synchronizing Workflows: Organizing and managing the work concurrently to maximize the utility of

incomplete yet stable data enables you to achieve flow across the enterprise and reduce time to market.

- Building in Learning and Knowledge reuse: Creating a development system that encourages rapid learning,

reuses existing knowledge, and captures new knowledge to make it easier to use in the future helps you

build a long-term competitive advantage.

- Designing the Value Stream: Making trade-offs and decisions throughout the development cycle

through a lens of what best supports the success of the future delivery value stream will improve its

operational performance.

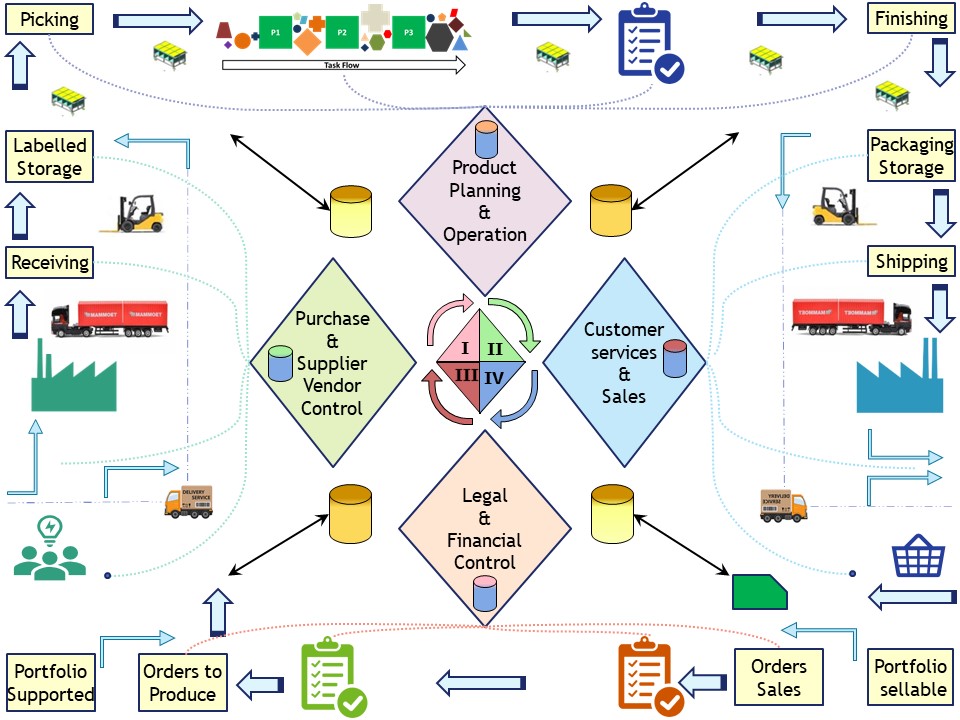

In a figure:

See right side.

Scaling without sacrificing innovation

👉🏾

Developing adaptiveness in a changing world (Sandrine Olivencia)

Chief product Engineers take on the critical role of balancing customer value technology and also finance to craft their products.

Actually a chief product engineer is not tied to a specific role like in agile.

Product manager or Tech lead is more of a responsibility or and a mindset.

CPE: Chief product Engineers can emerge from any part of the organization

3 Practices to scale artisanship (19m06):

- Emotion-centric design

- Performance-based product

- Mentor Chief Product Engineers

Chief product engineer (22m42) :

- Visceral passion for the customer

- Strong grasp of the product

- Committed to optimizing costs

The goal of this mentorship system is for experienced leaders to pass on their knowledge their vision and their artisanship to the next generation.

The Cornerstone of the system is the chief product role the chief product engineer role.

Product-led approach (262m42) :

- Mentor Chief Product Engineers

- Design products that sell themselves

- Perpetuate an artisan mindset

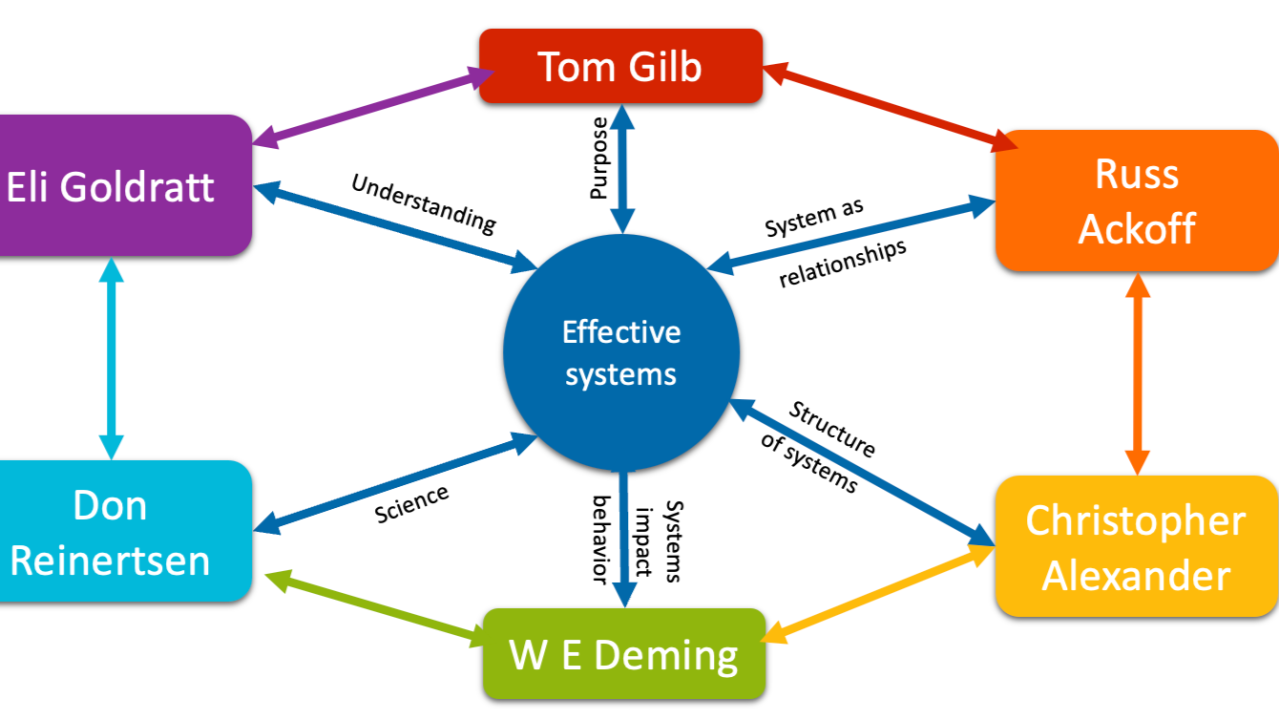

W-1.5 Sound underpinned theory, foundation

Knowing the position situation in by observing several types of associated information .

These are:

- Art of the role by observed input and results

- Art of the role by follow up interactions

- Kind of task in the process by role

Non trivial means it will be repeated for improved positions.

Command & control needs information for what understanding what is going on.

Without knowing the situation or direction there is no hope in achieving a destination by improvements.

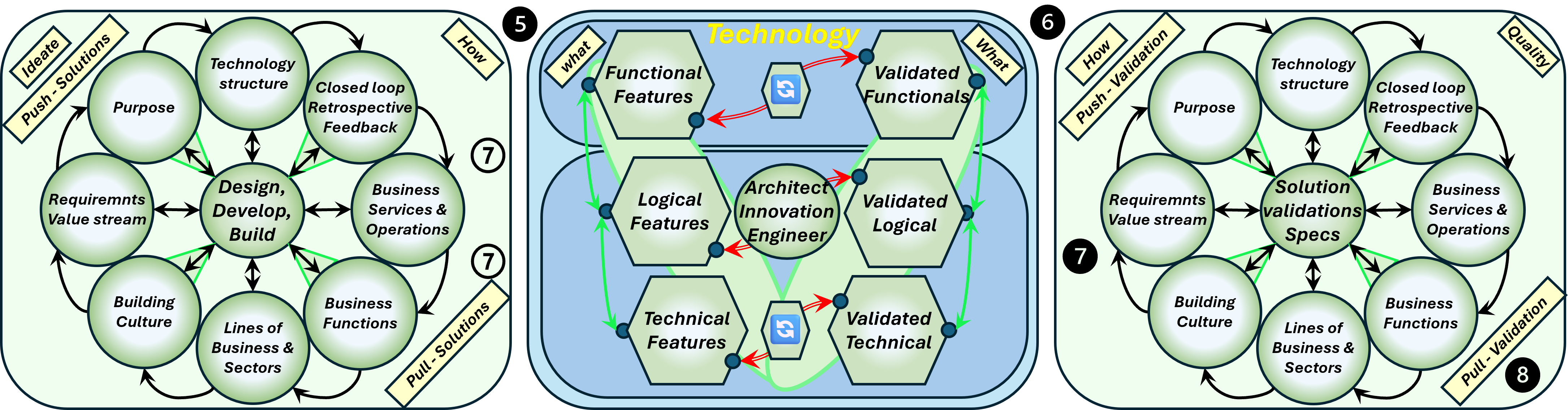

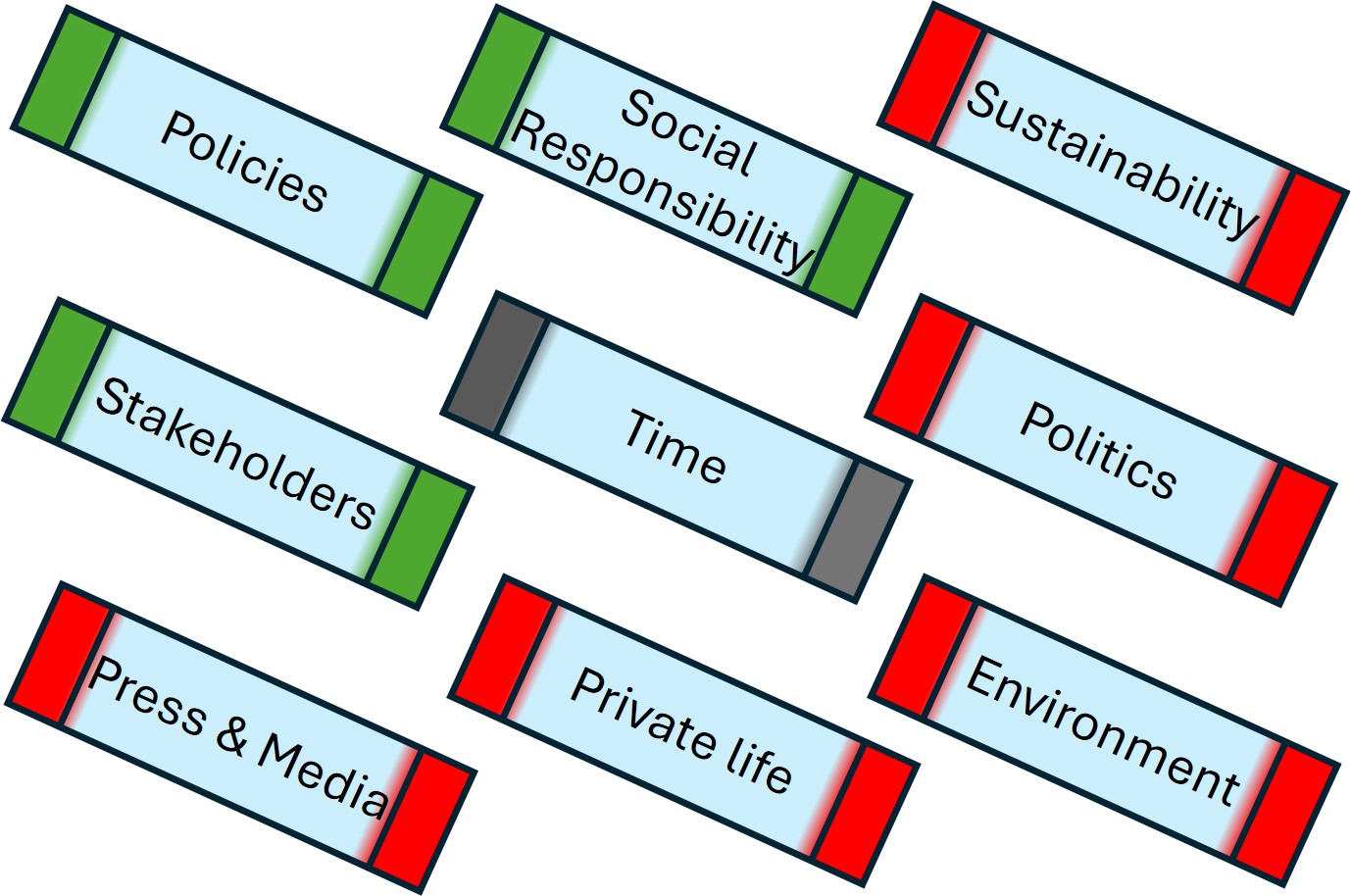

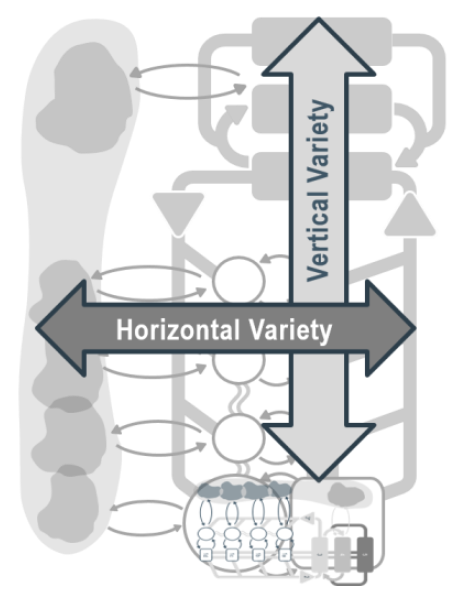

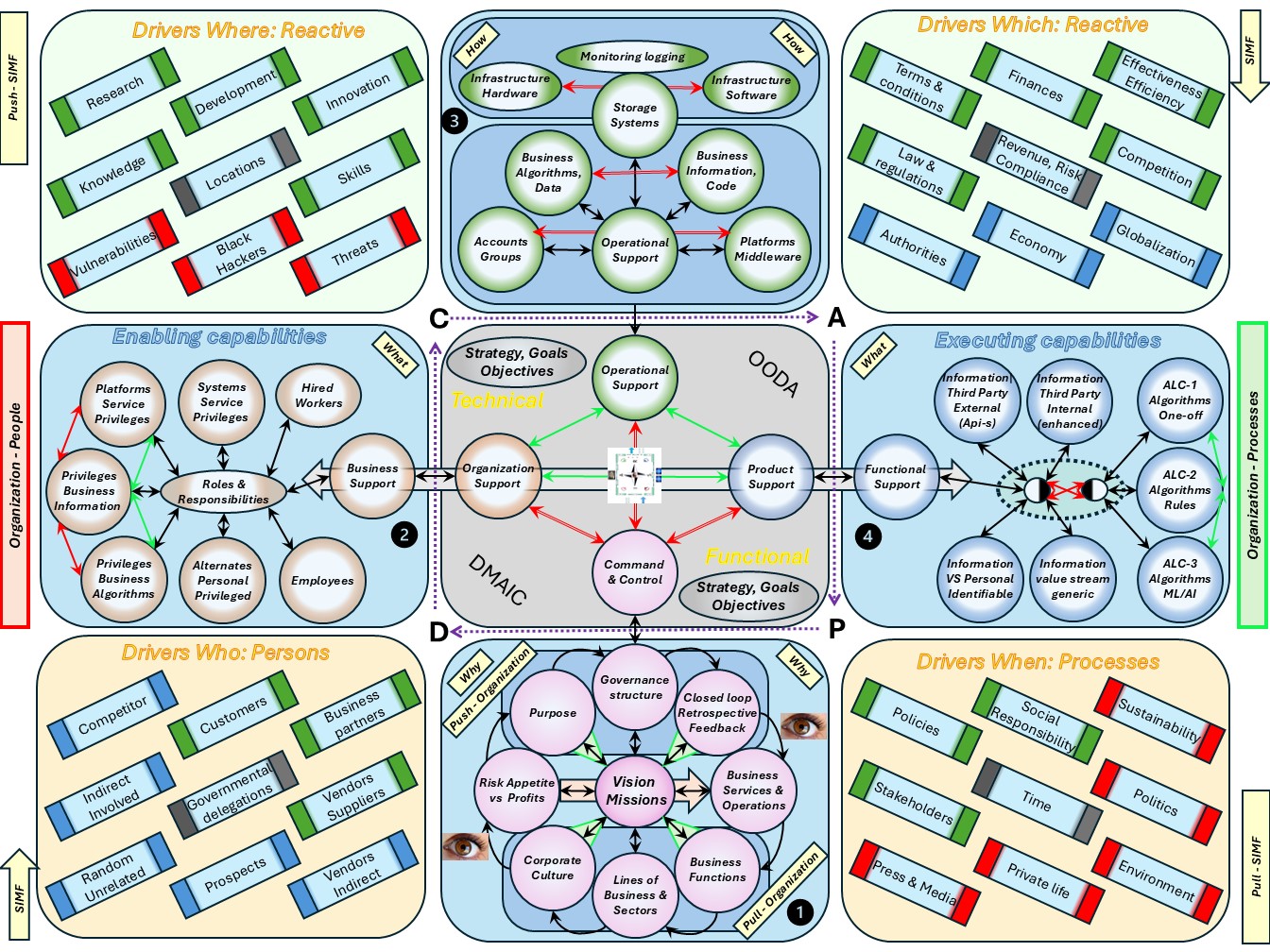

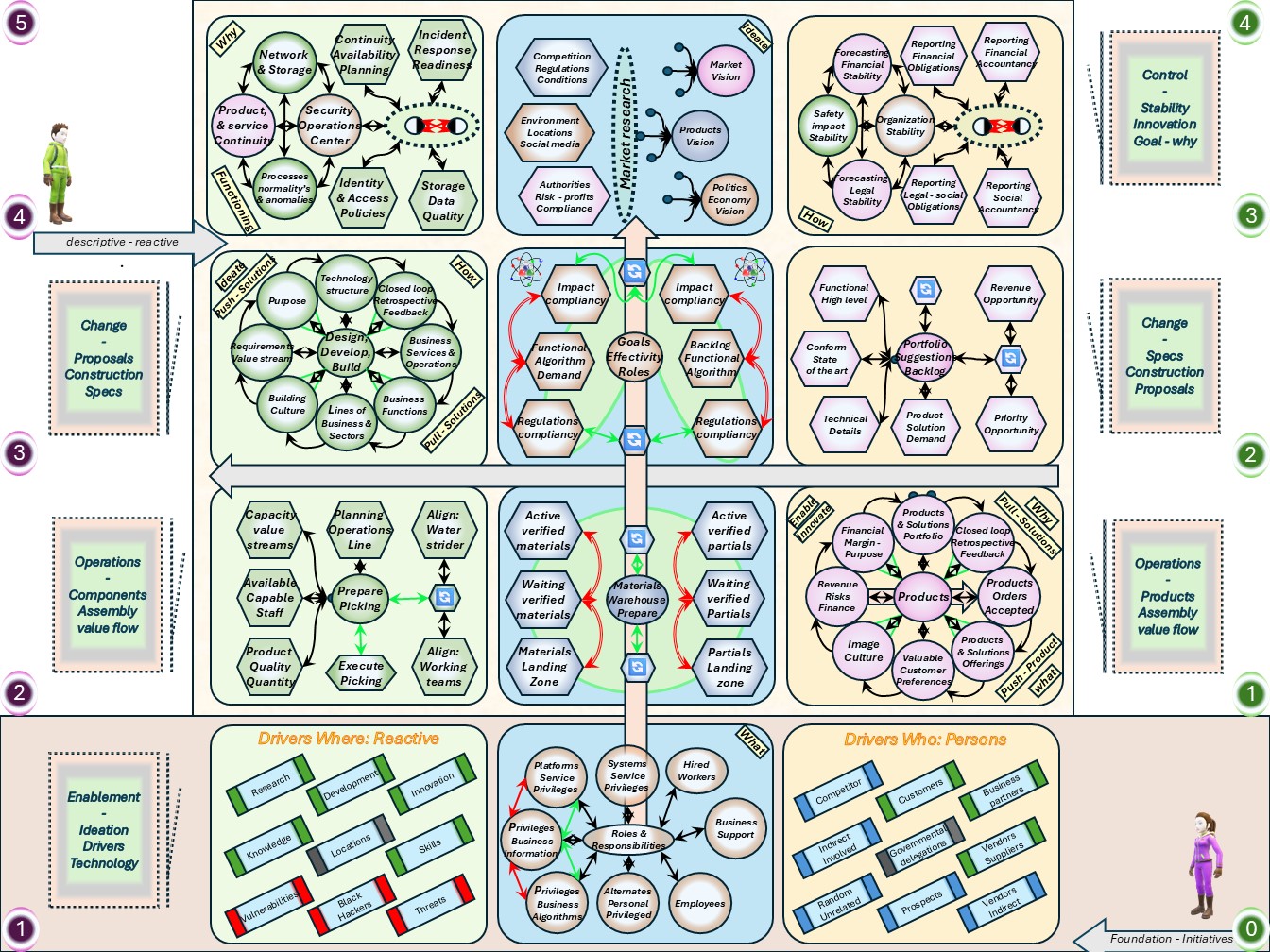

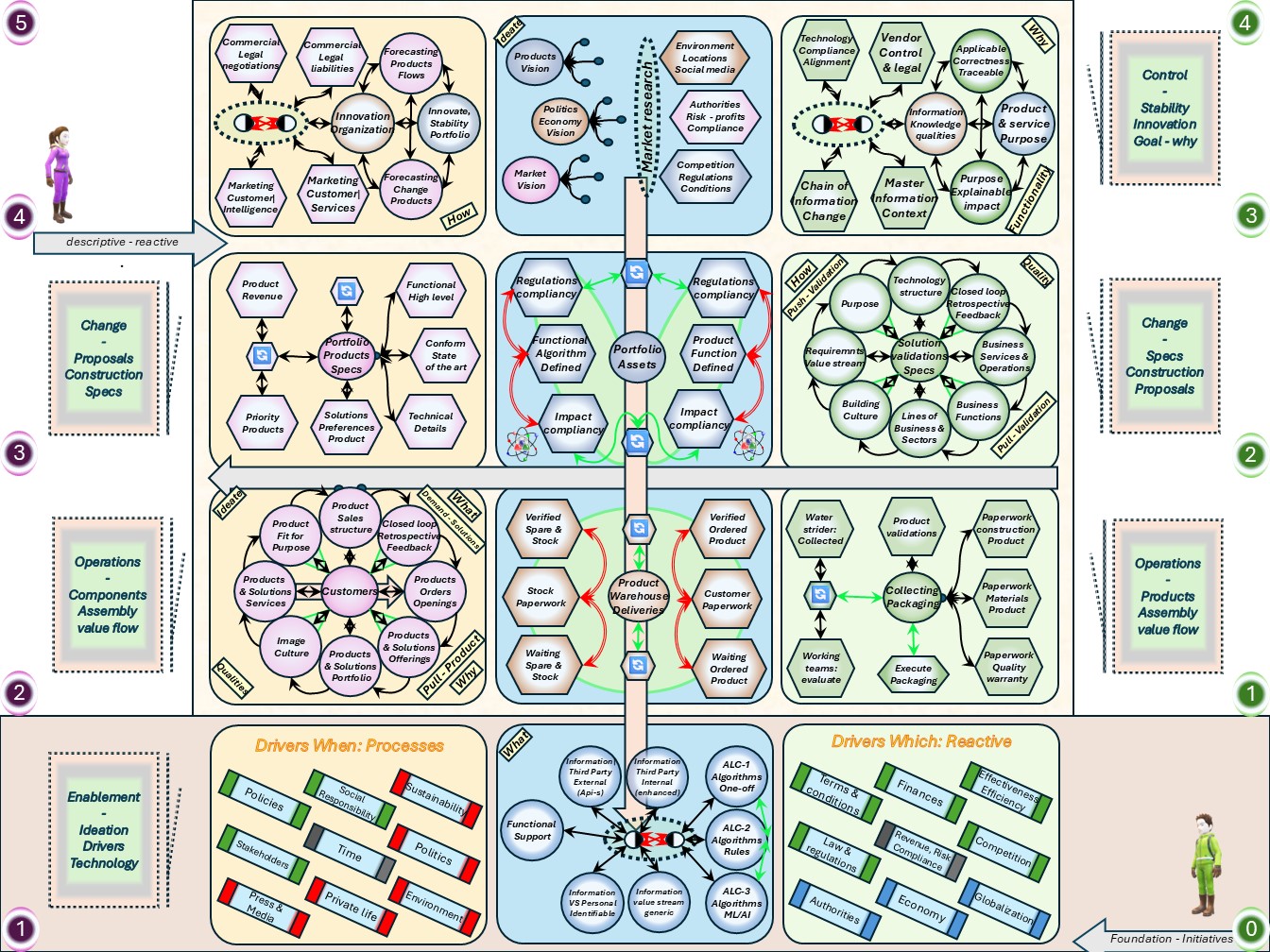

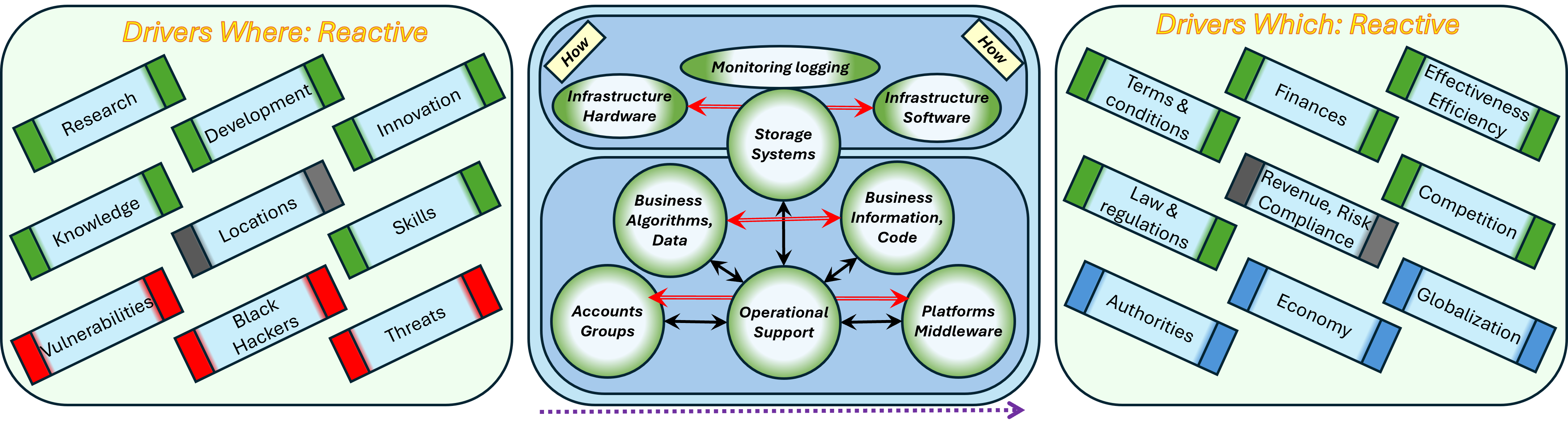

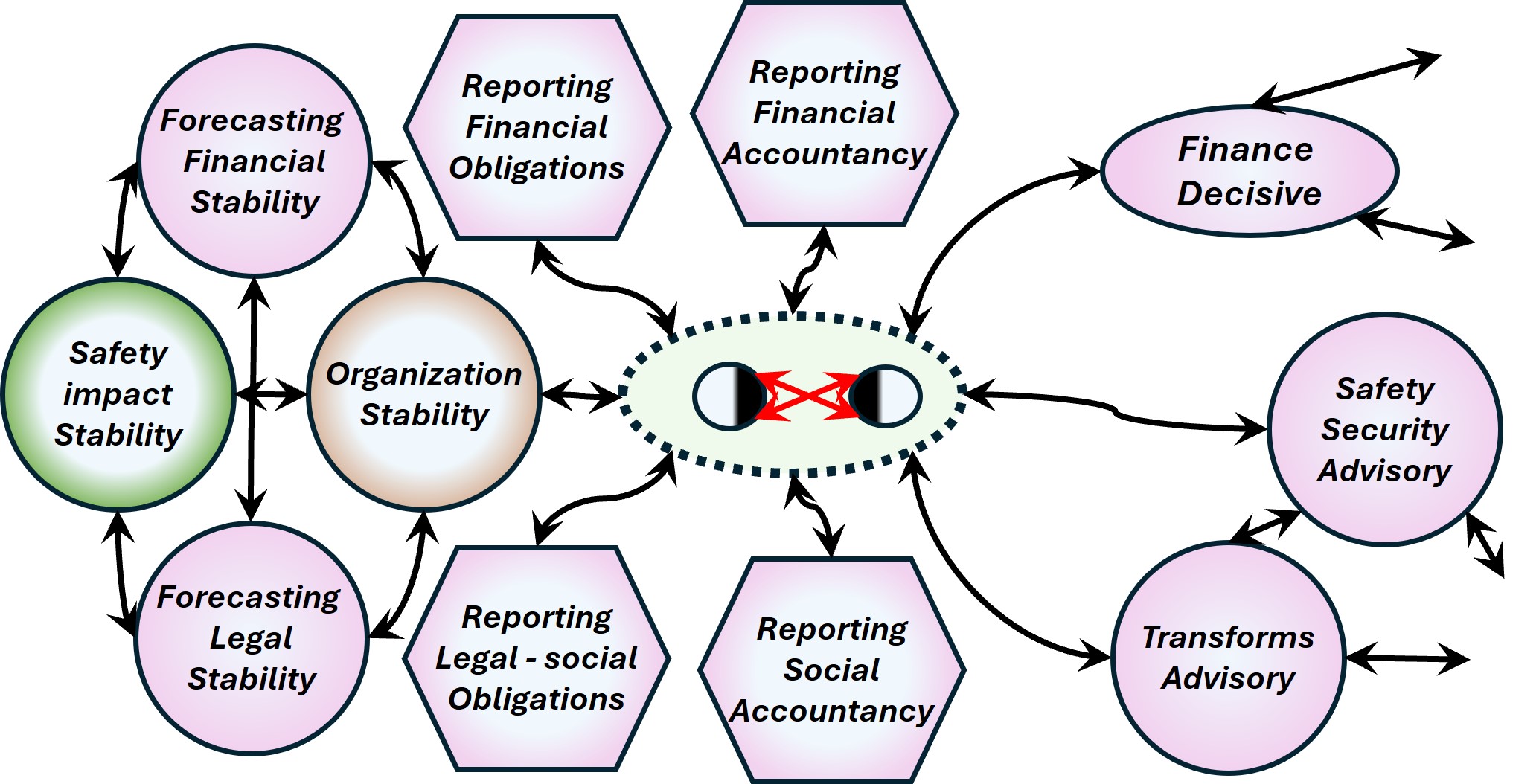

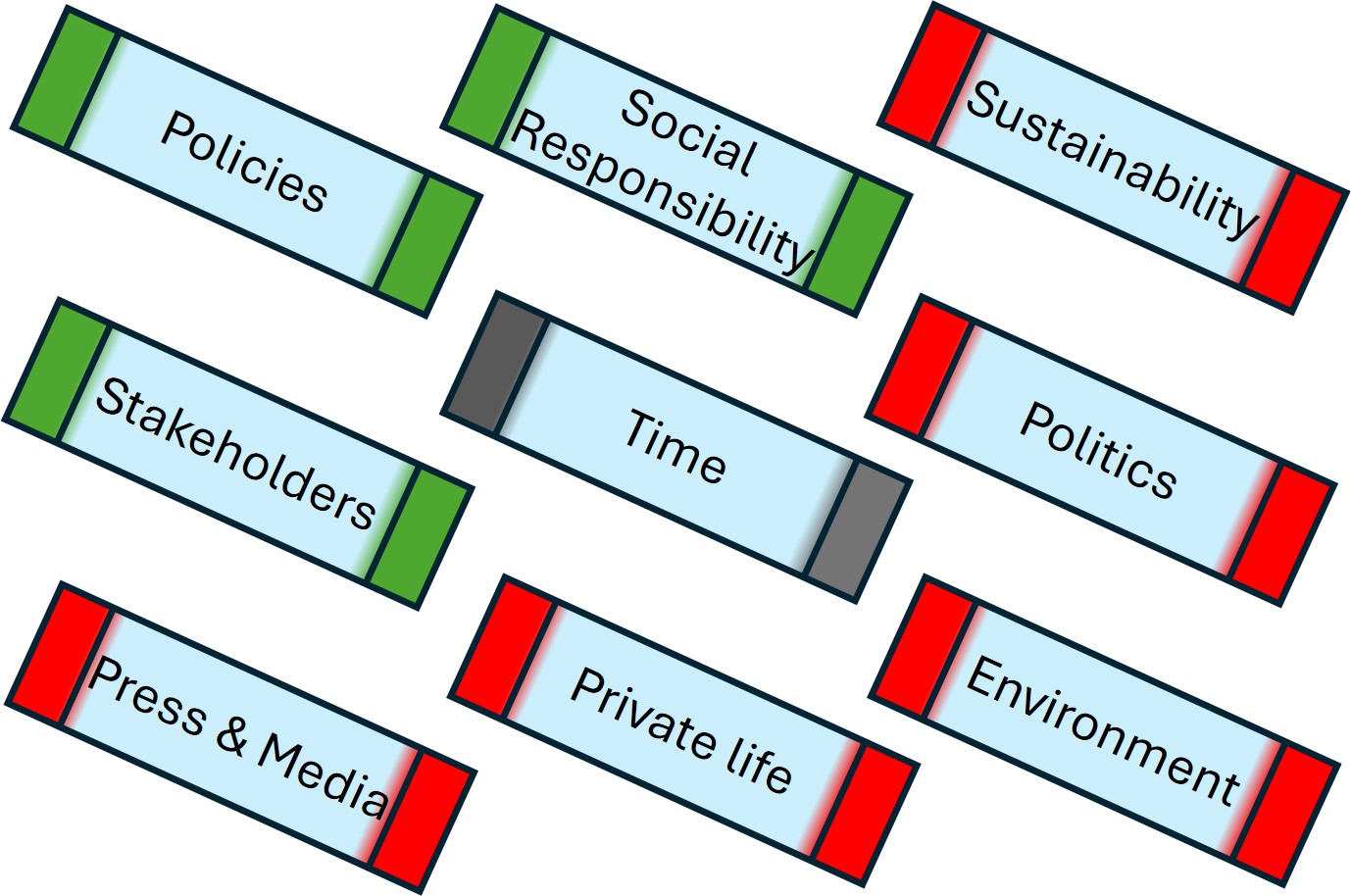

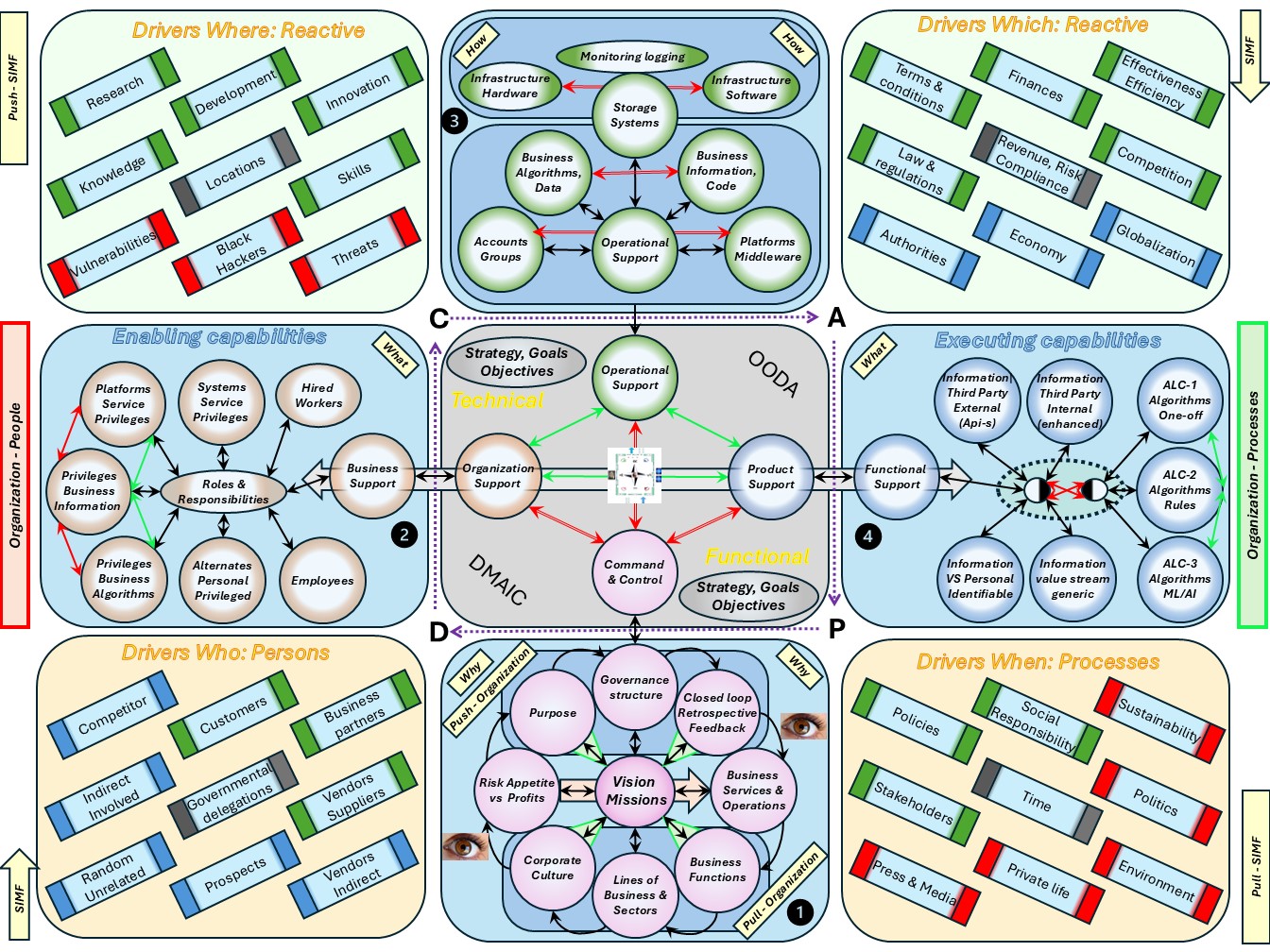

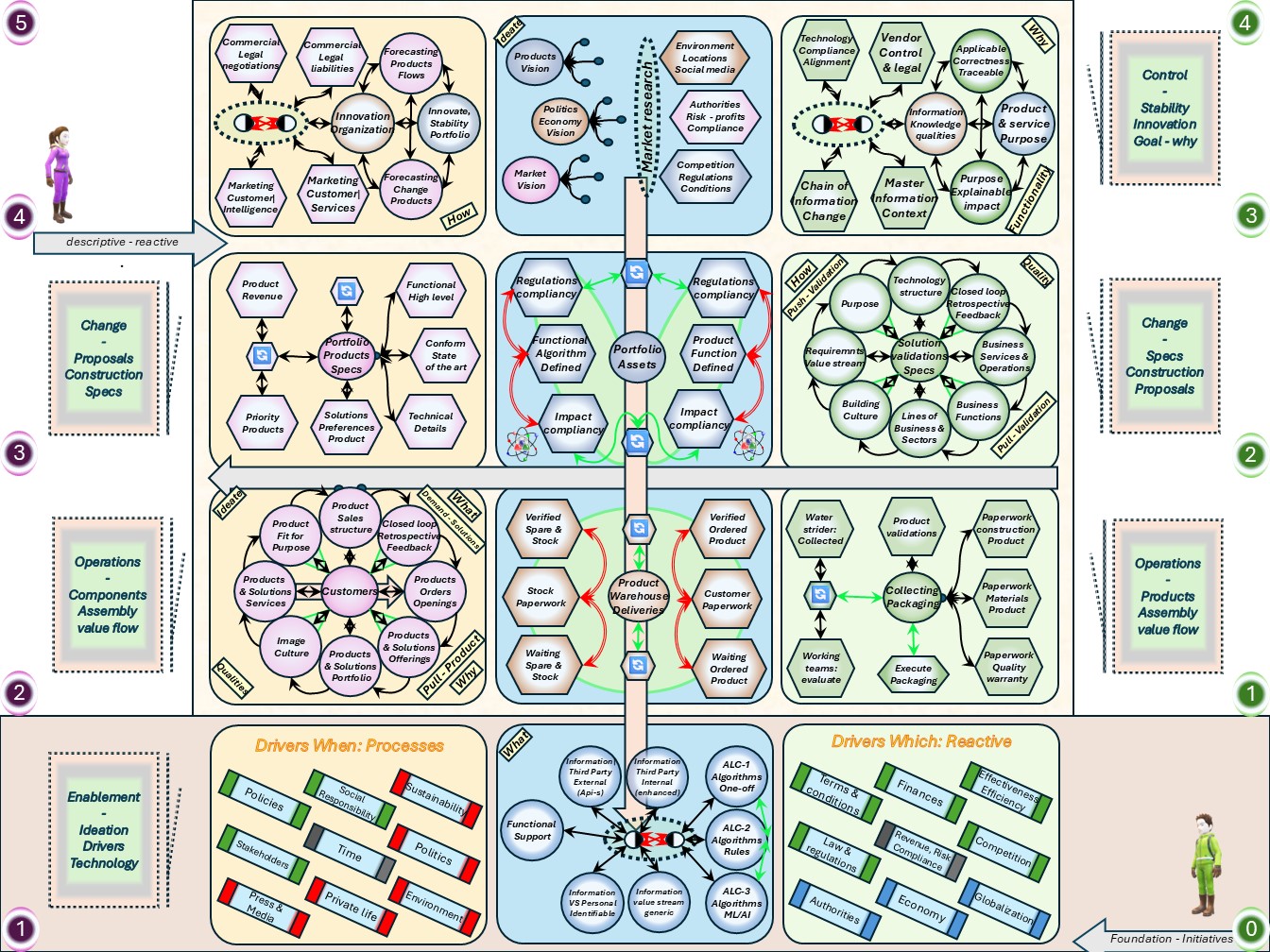

⚖ W-1.5.1 Chosen colours and shapes for the floor plans

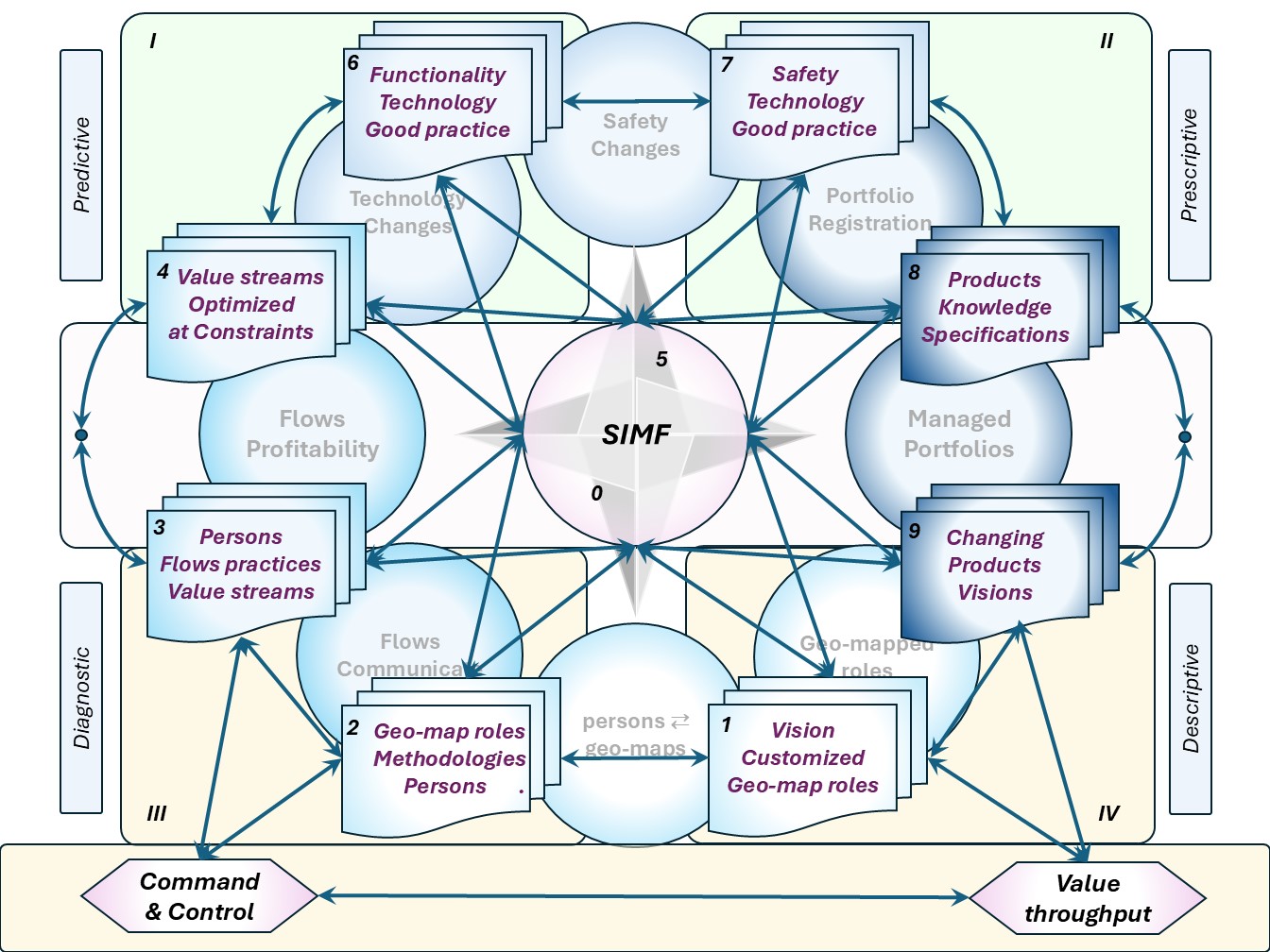

SIMF colours for area's

An organisation in two dimensional blueprints for a three (and more) dimensions needs elaboration.

Presenting idea's by only figures is too difficult to understand without an explanatory reference.

| Explanation for the areas | Image |

Steer: An orange colour are organisational command & control for:

- high abstracted level to the operational floor activities

- for functionality, the change and functioning getting the value.

|

|

Serve: A green colour are technology aspects for:

- high abstracted level to the operational floor activities

- for functionality, the change and functioning getting the value.

|

|

Shape: A blue colour are mediation aspects for:

- high abstracted level to the operational floor activities

- for functionality: medium & long term closed loop information

|

|

Synapse: A gray colour are the logical communication aspects for:

- The short term, quick communication, quick reaction at the same floor level.

- The short term, quick communication, quick reaction over floor levels.

This area is the equivalent of command & control of a viable system.

The viable system theory is mentions a fifth level. Questions for that one:

Who are our customers? What problems do we solve for them? What are they really willing to pay money for?

This is crucial because answering those provides the primary control criterion that anchors accountability.

|

|

SIMF structure in shapes

| Explanation for the areas | Image structures |

Steer: Structures:

- Circles

➡ Interactions

related to:

- Organisation

magenta

- Technology

green

- Consumer focus

brown

- Supplier focus

indigo

- A circle of circles, controlled

- Hexagons

➡ defined actions

- collection

delegated actions, controlled

- duality

➡ Materialised information

vs processing information

|

|

Serve: Structures:

- Circles

➡ Interactions

related to:

- Organisation

magenta

- Technology

green

- Consumer focus

brown

- Supplier focus

indigo

- A tree of circles, controlled

- A V-shape control

➡ adaptive change

- Hexagons

➡ defined actions

- Hexagon flow

➡ fast closed loops

|

|

Shape: Structures:

- Hexagons

➡ defined actions

- Hexagon flow

➡ fast closed loops

- Circles

➡ Interactions

related to:

- Organisation

magenta

- Technology

green

- Consumer focus

brown

- Supplier focus

indigo

- A collection of circles, controlled

|

|

Synapse: Structures:

- Circles

➡ Interactions

Same floor defined orientation:

- Organization

magenta

- Technology

green

- Consumer focus

brown

- Supplier focus

indigo

- Hexagons

➡ defined actions

- Rectangles

➡external influence

- Antennas

➡ receiving signals

|

|

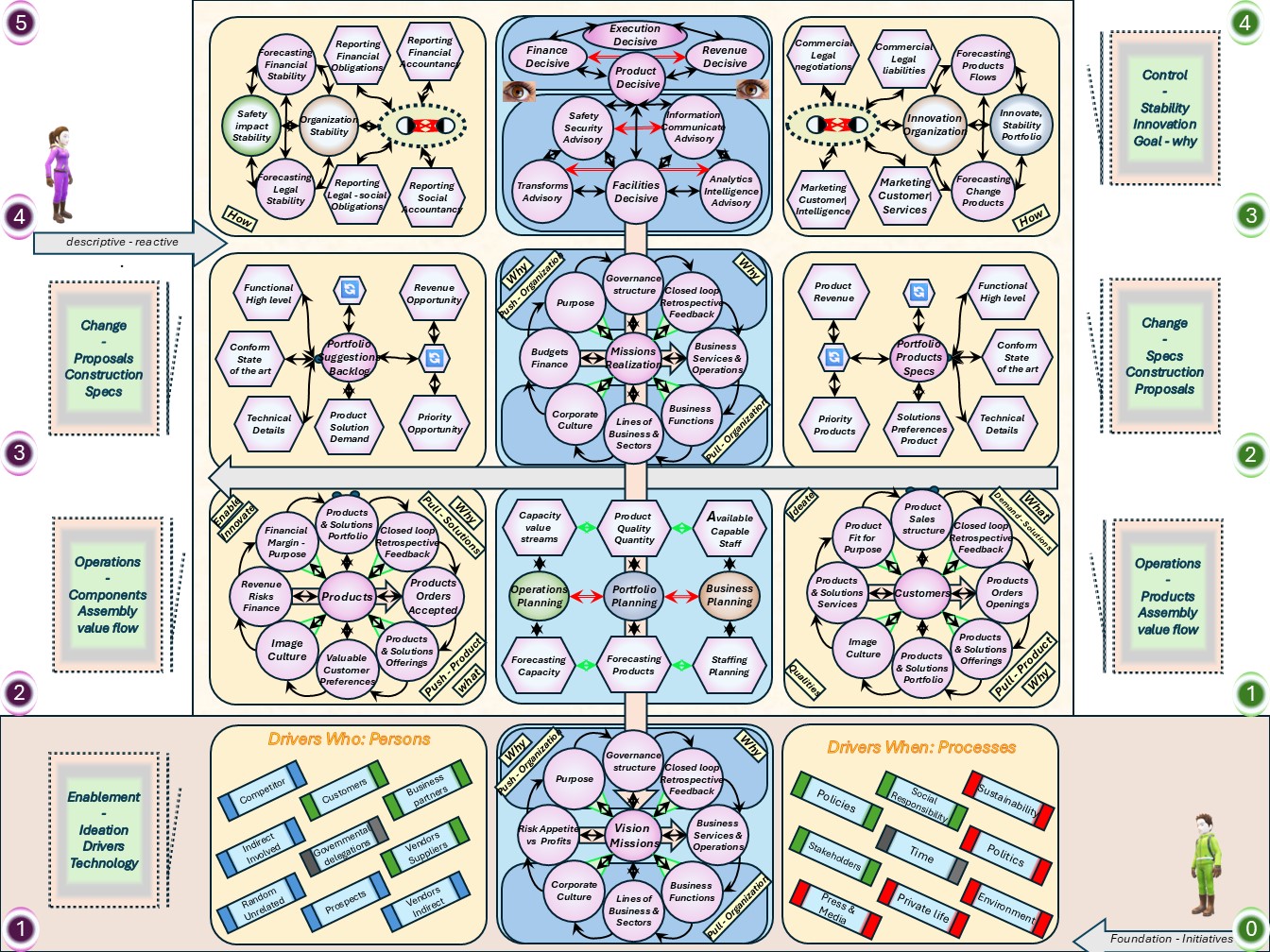

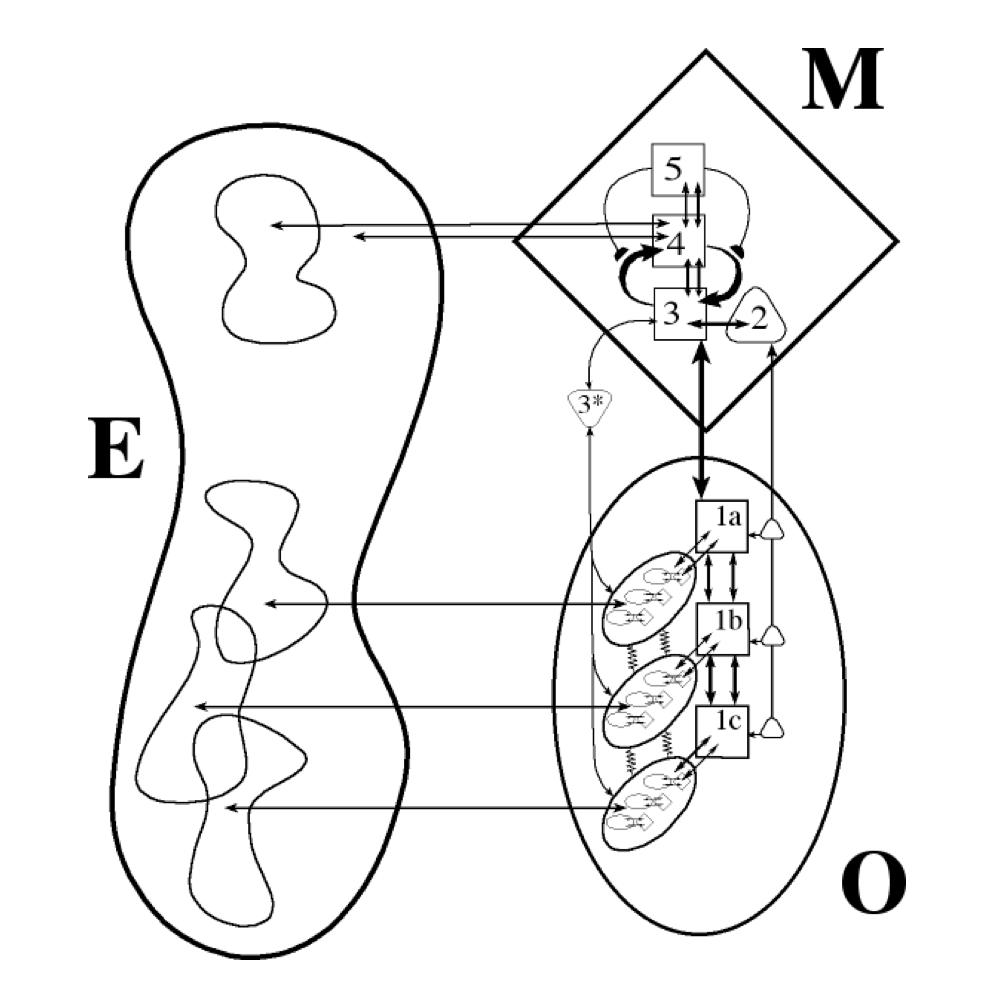

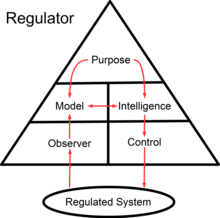

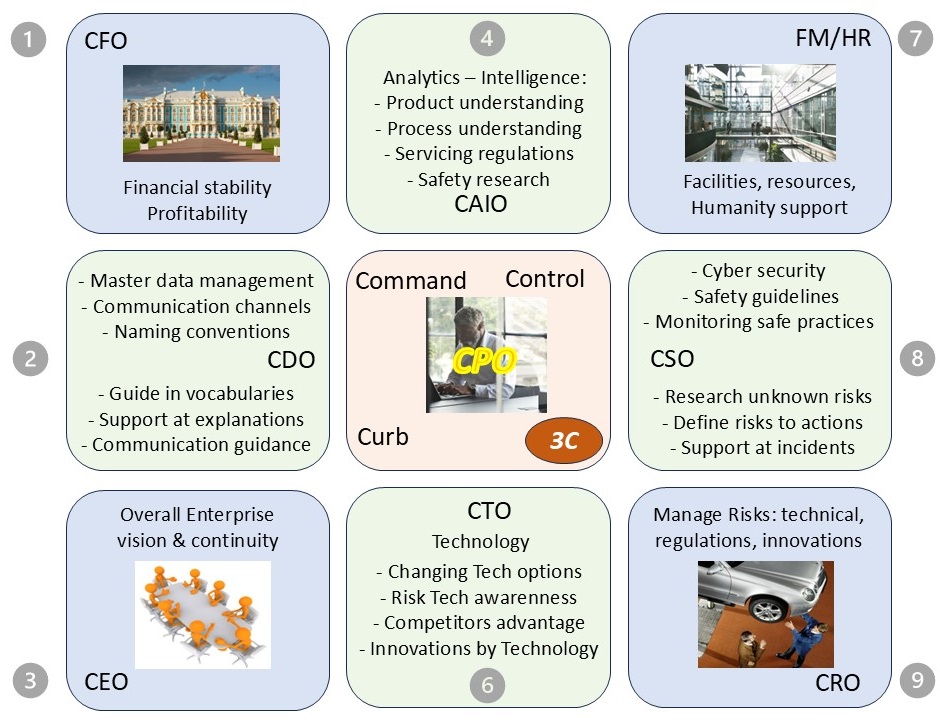

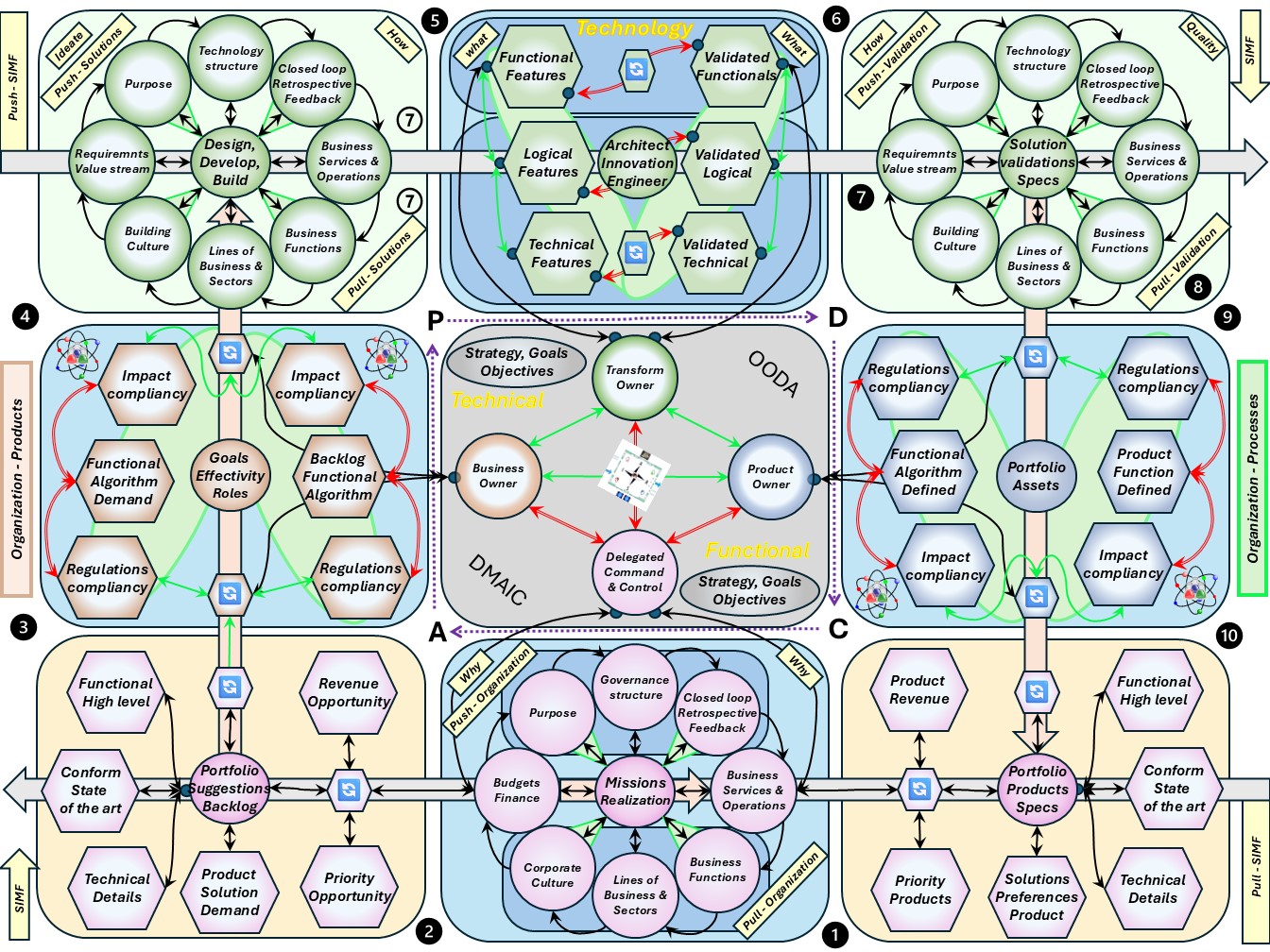

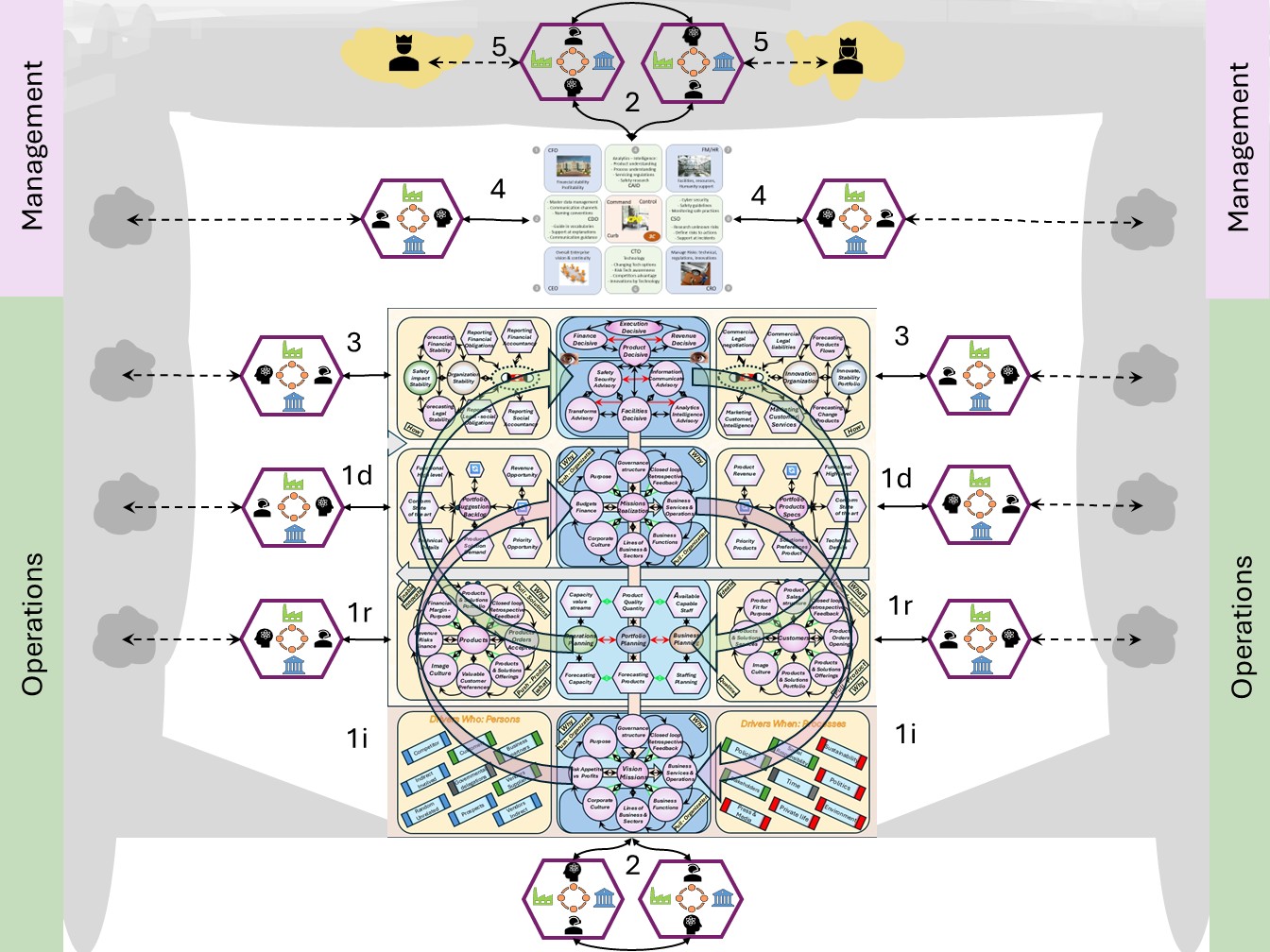

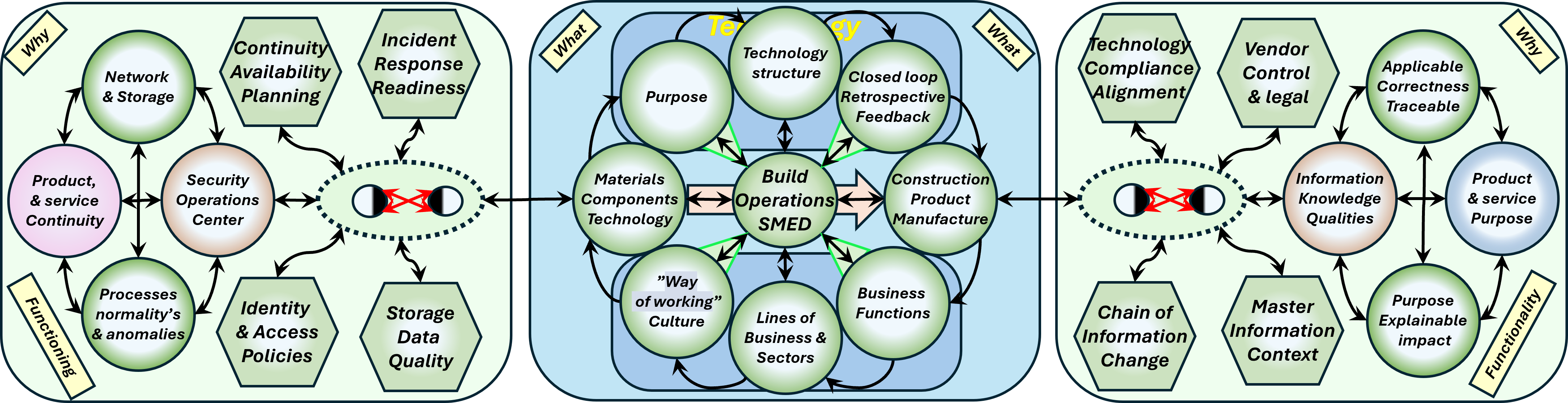

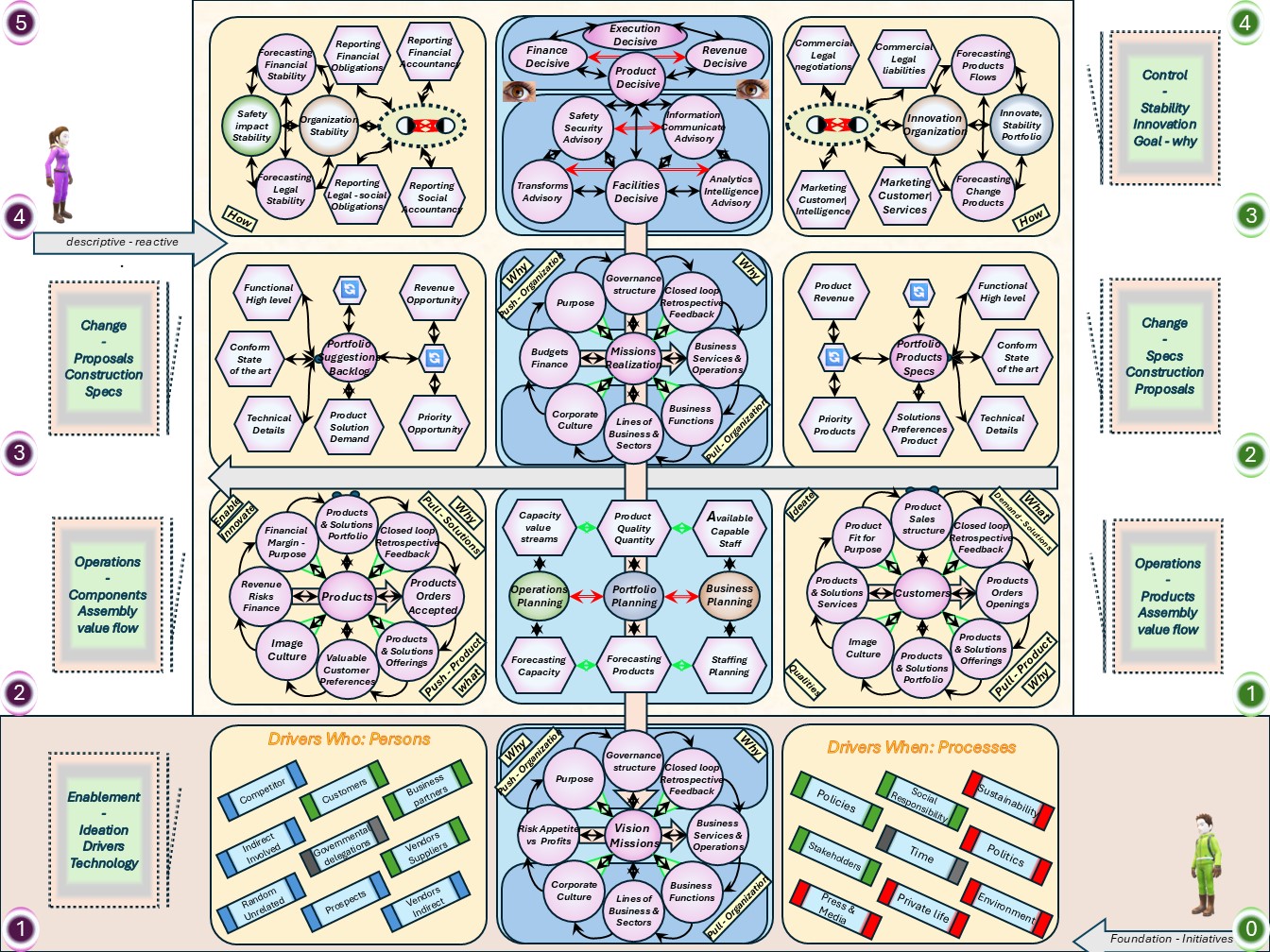

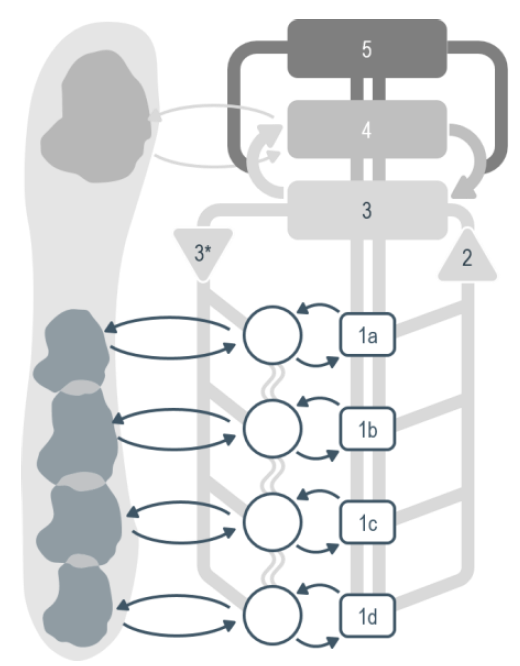

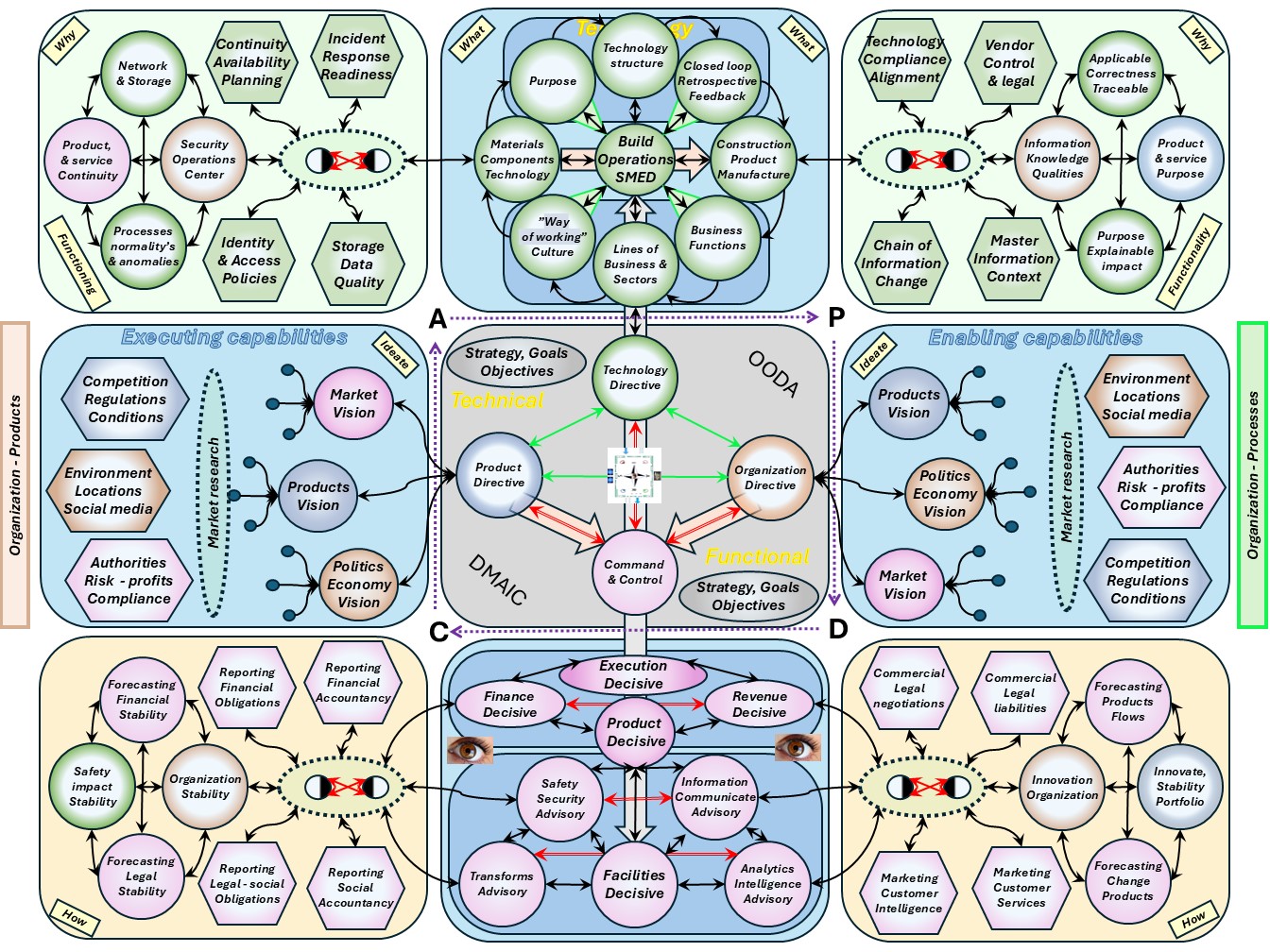

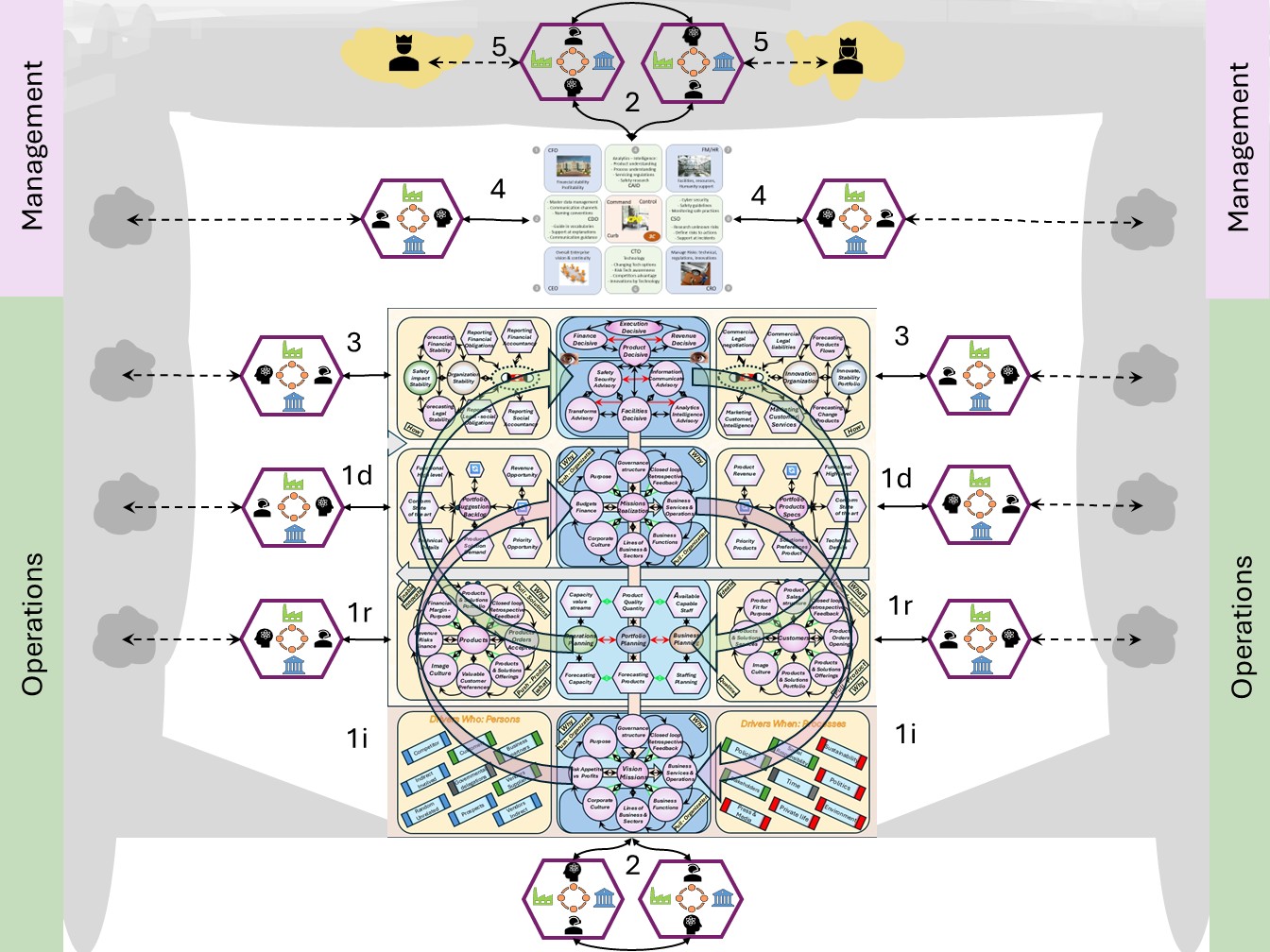

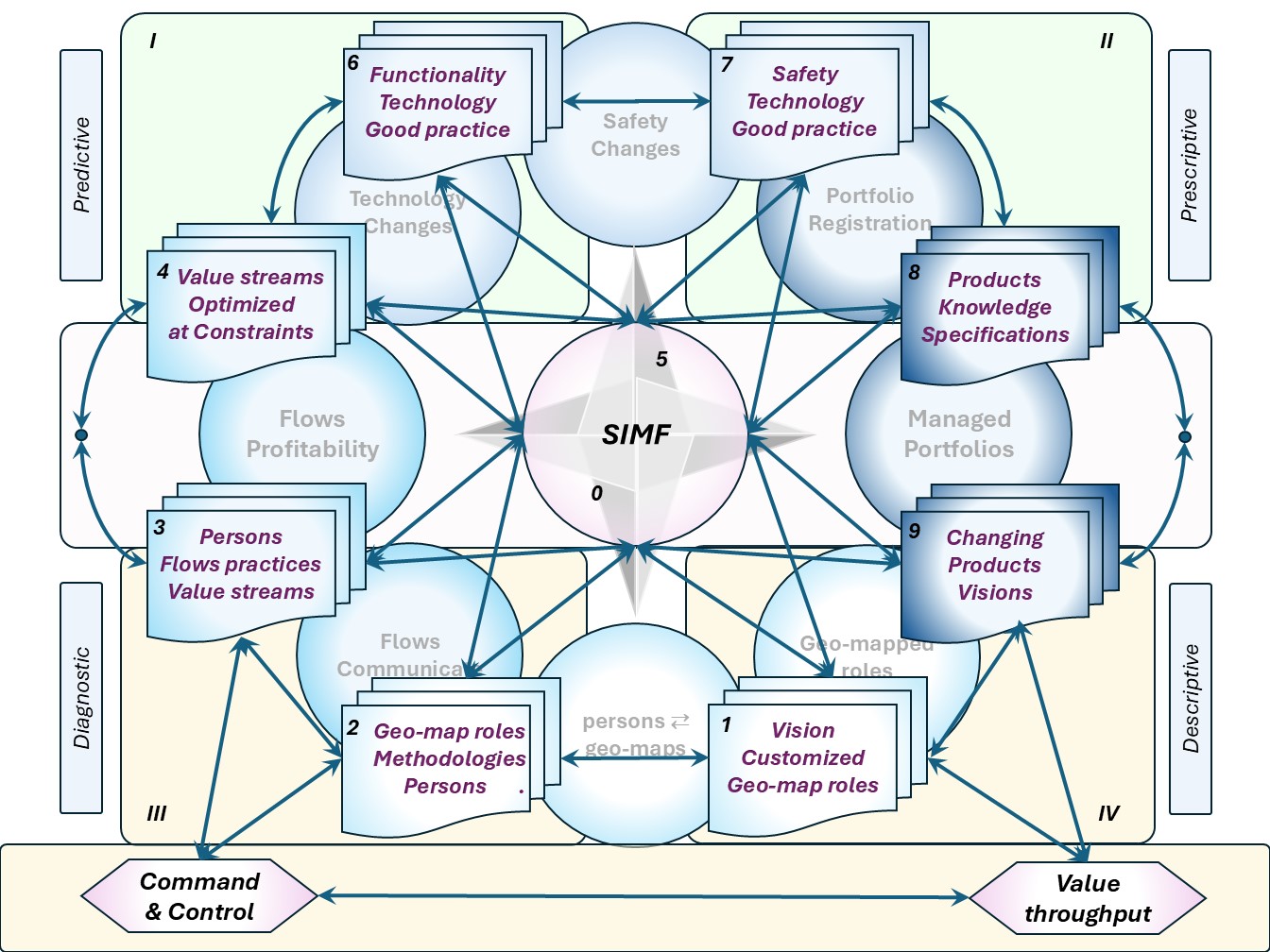

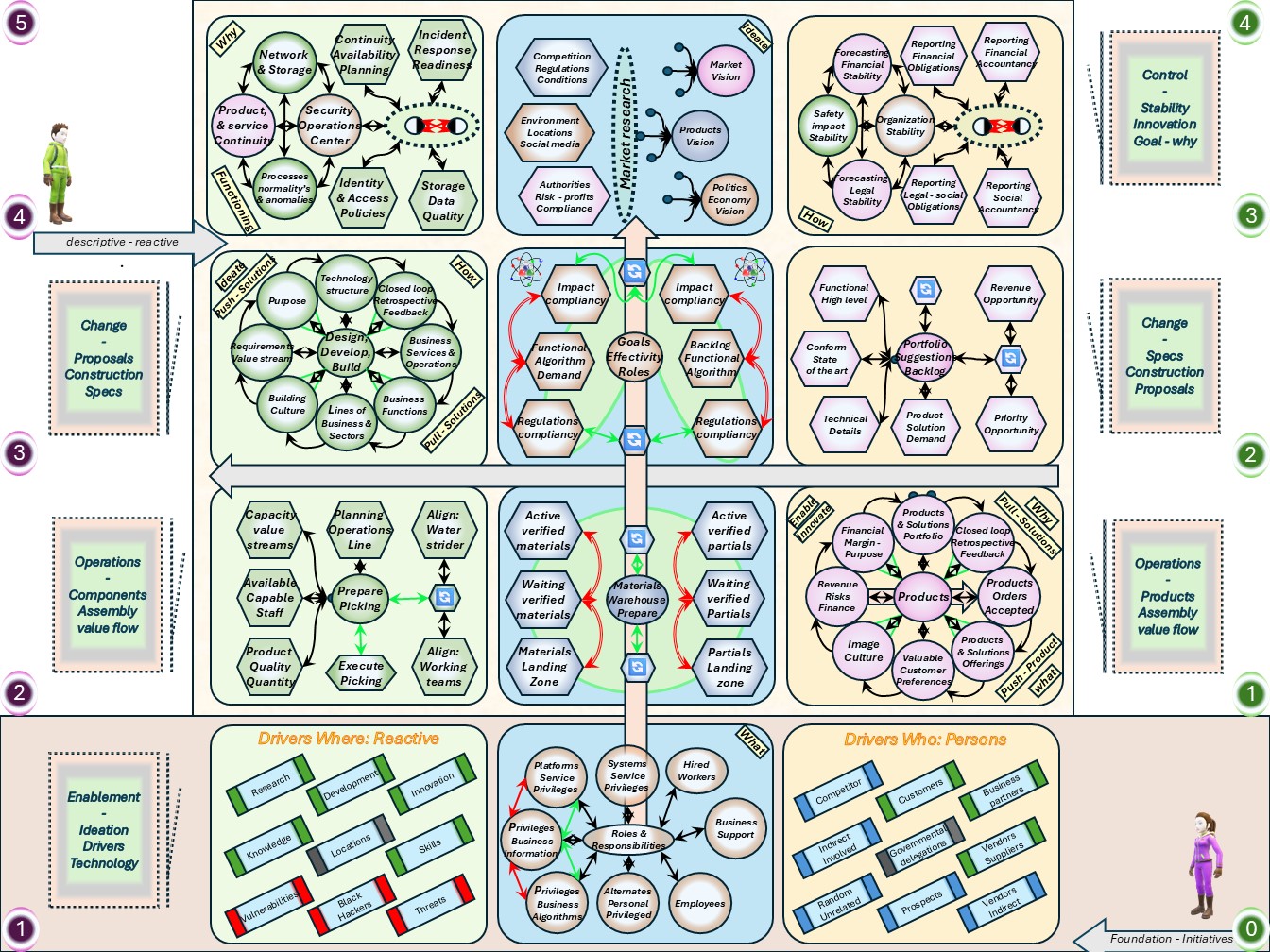

⚖ W-1.5.2 The viable system, conscious decisions

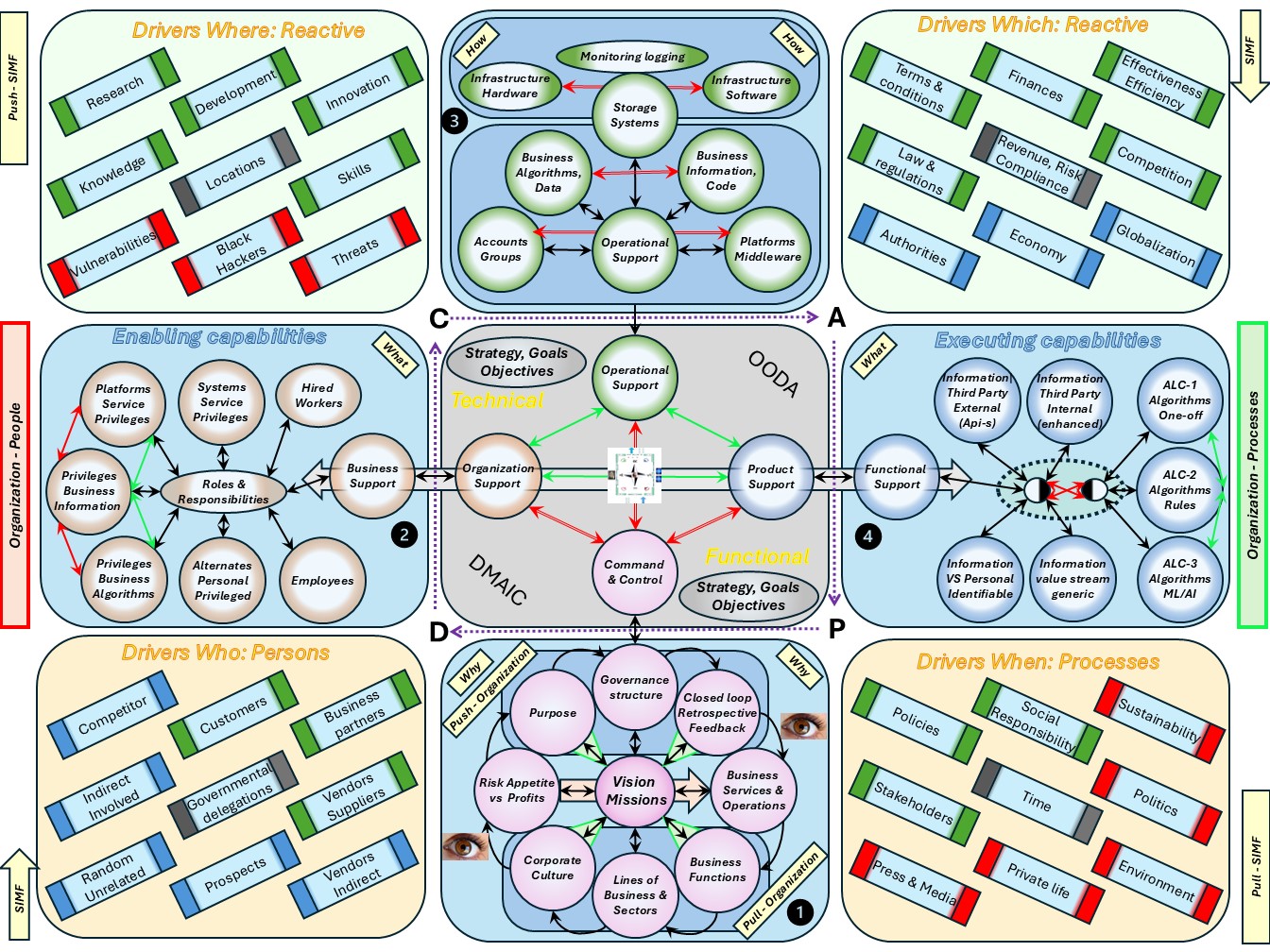

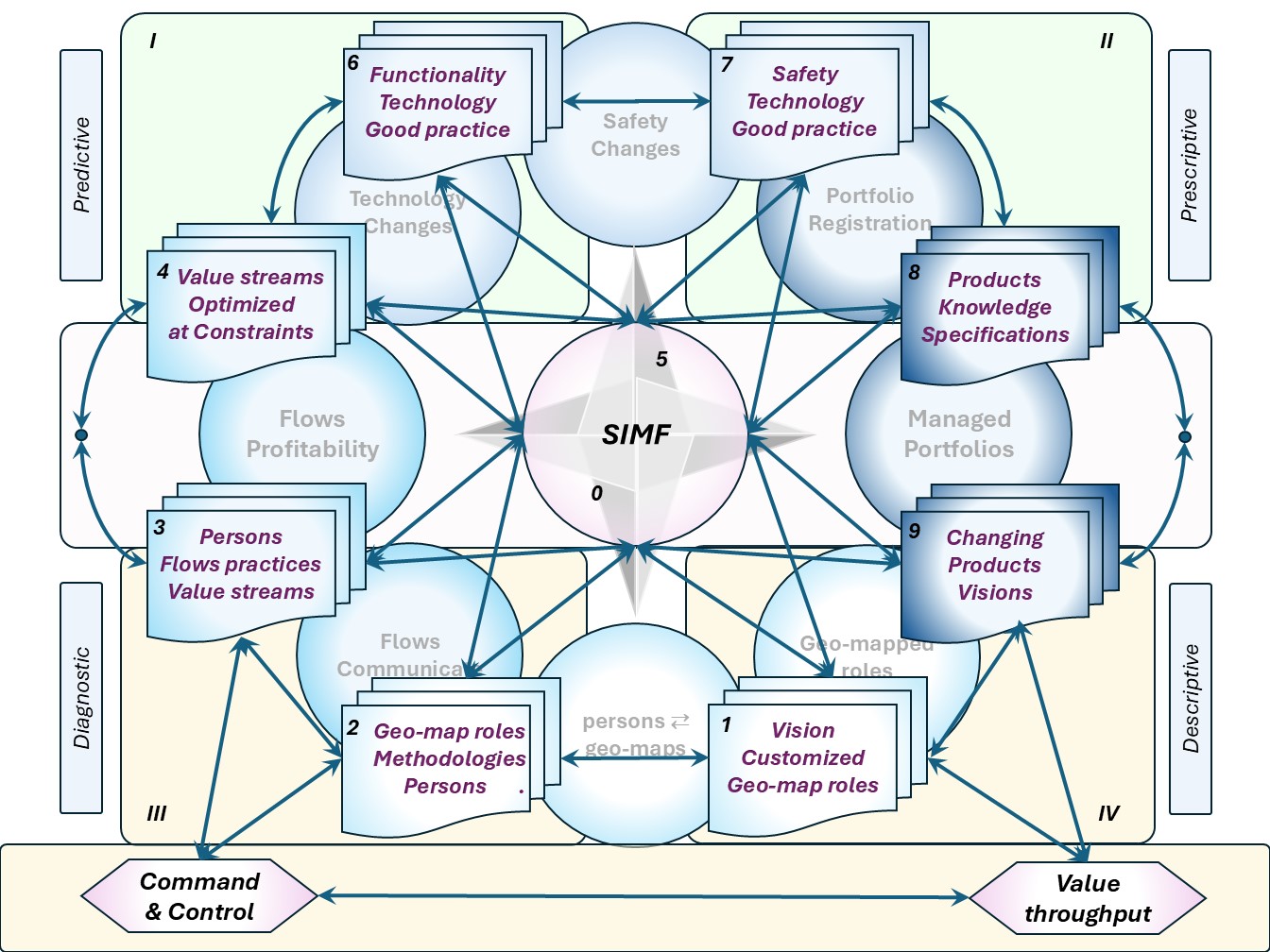

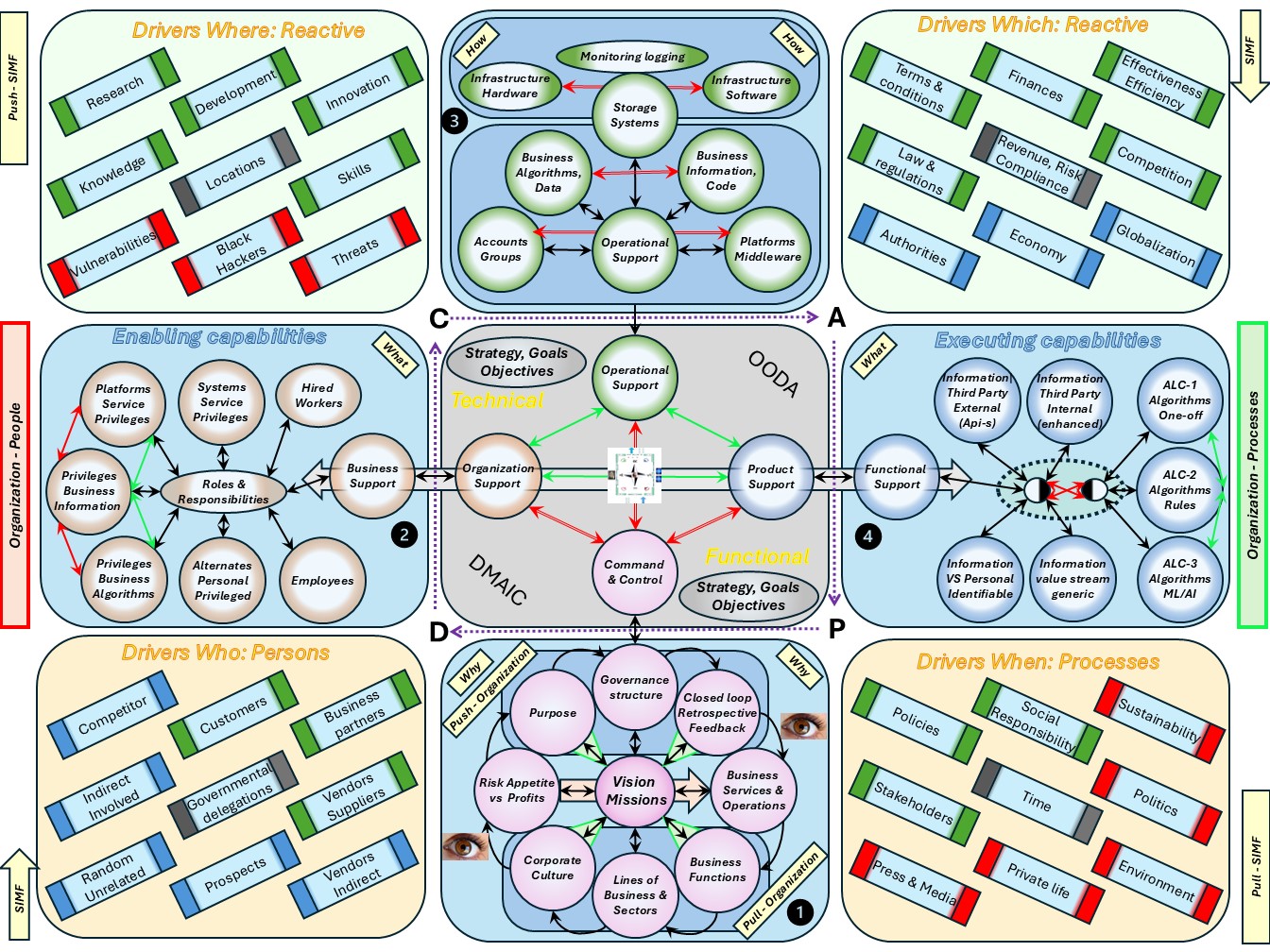

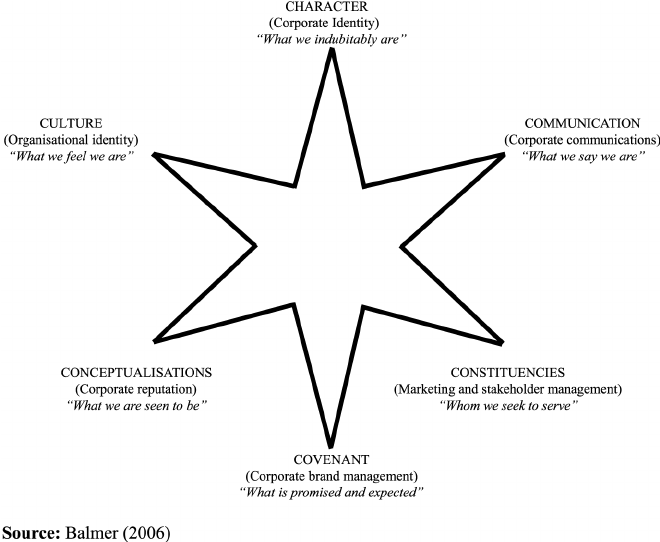

SIMF the organisation for realisations, outside view

👁 Industrial age: the manager knows everything, workers are resources similar to machines.

👁 Information age, required change: a shift to distributed knowledge, power to the edges.

Understanding the business, organisation in their four levels (bottom-up).

- VSM System-5 Mngmt Operational VSM-1i: Visions, Missions elementary, 0-1 floor.

Assuring they are shared values for all and everything. Defining Visions could be by the same persons (boardroom) but that is not necessary.

- VSM System-4 Mngmt Operational VSM-1r: Long term resource planning 1-2 floor.

This a core activity for organisational processes.

- VSM System-3 Mngmt Operational VSM-1r: Mission realisation, 2-3 floor.

Portfoliomanagement knowing and planning the products over the relevant time.

- VSM System-1 Mngmt Operational VSM-3 management. from 3-4. The important secundary functions of holistic strategy tactics finance marketing etc.

- Above this is VSM-4, VSM-5 and the scoped environment, VSM-6

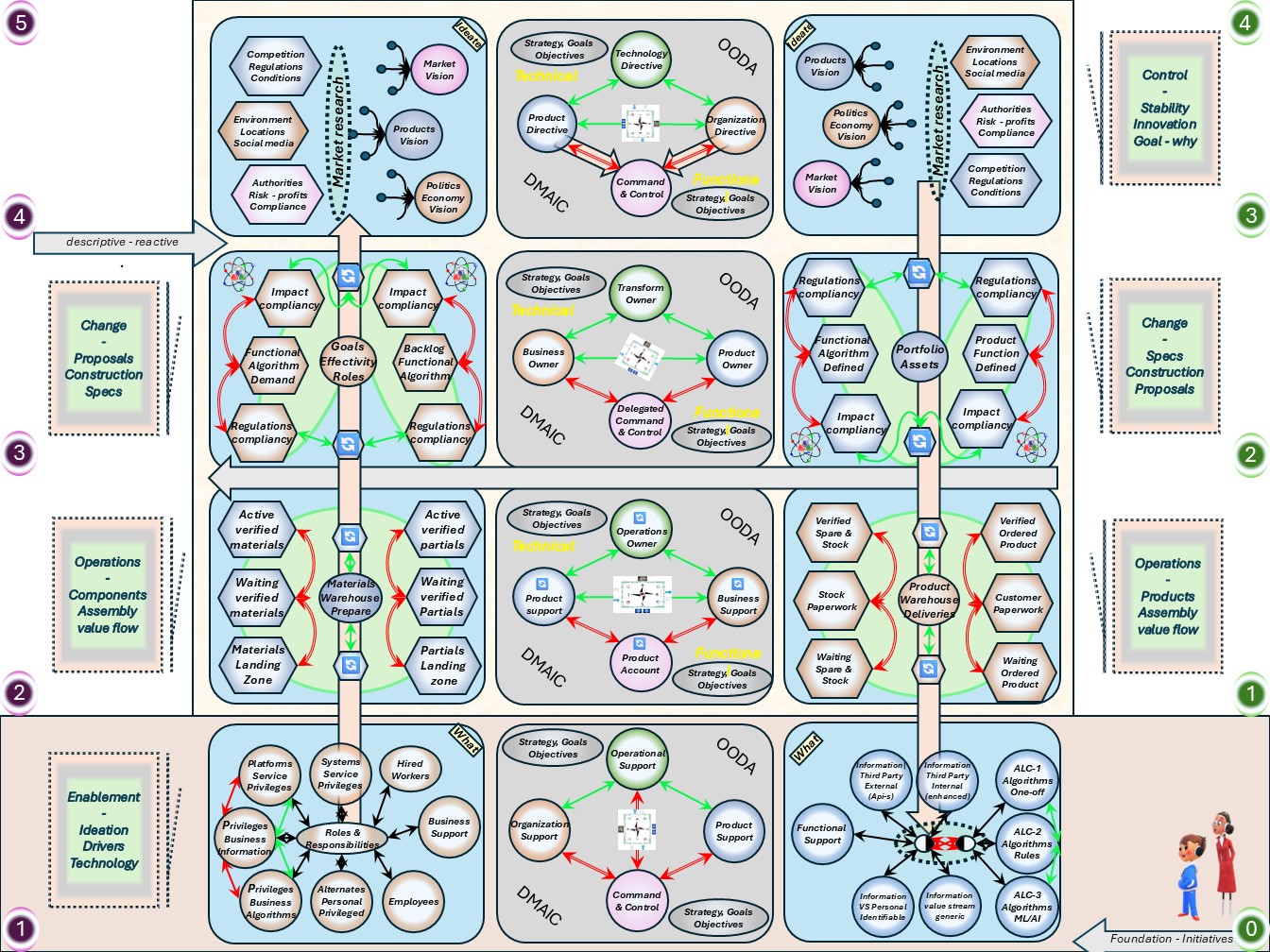

The complete area of information processing for the organisation in a figure:

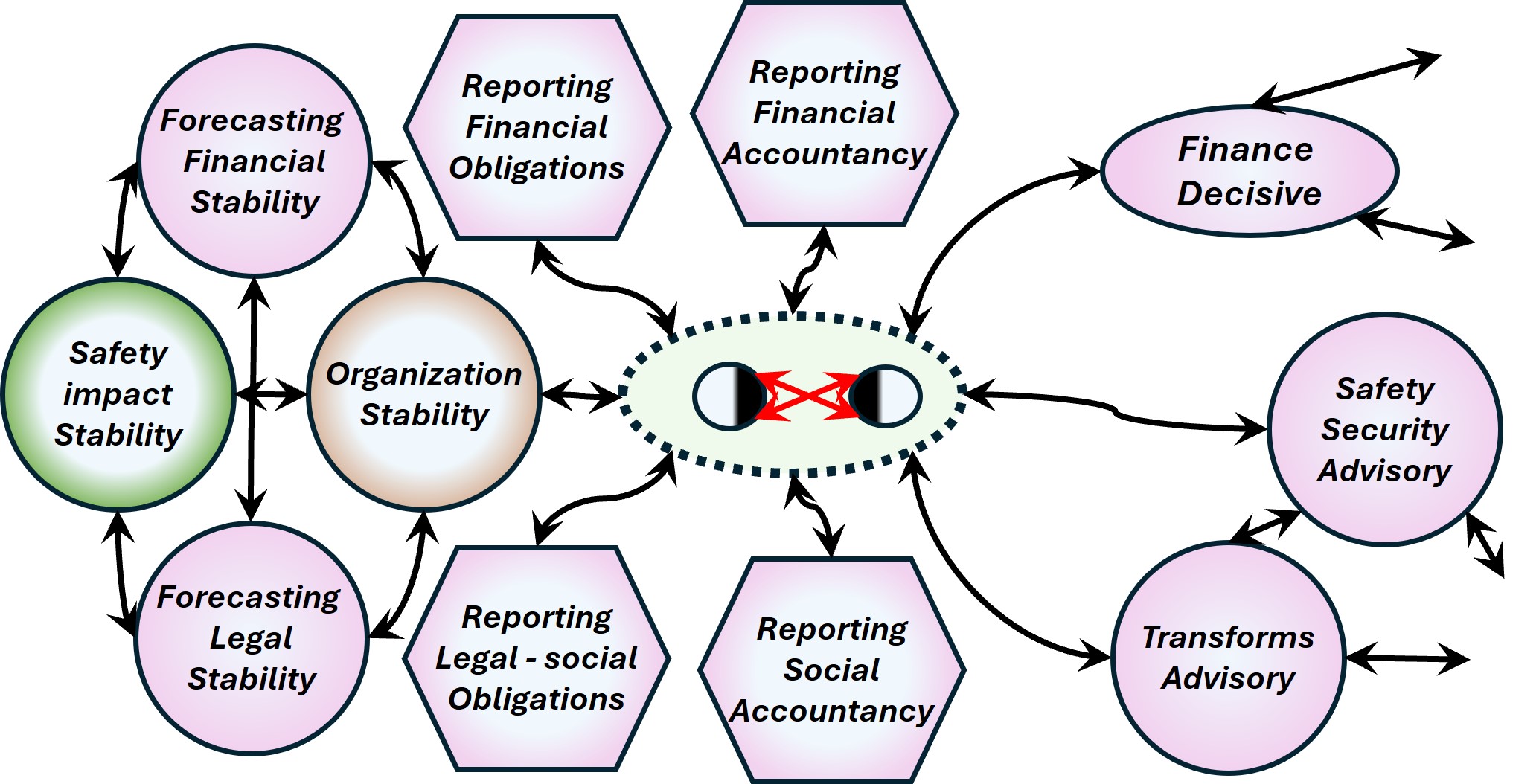

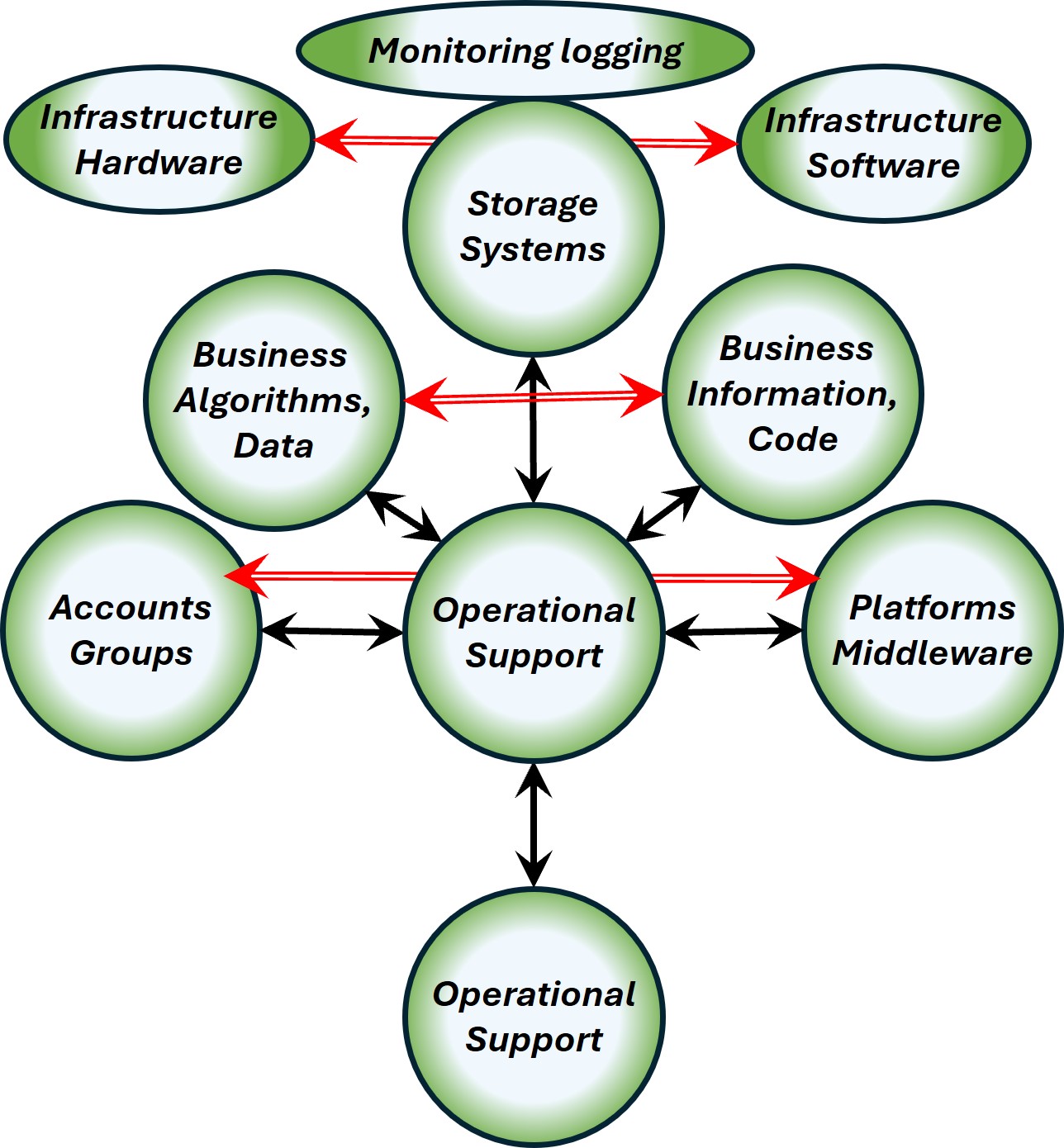

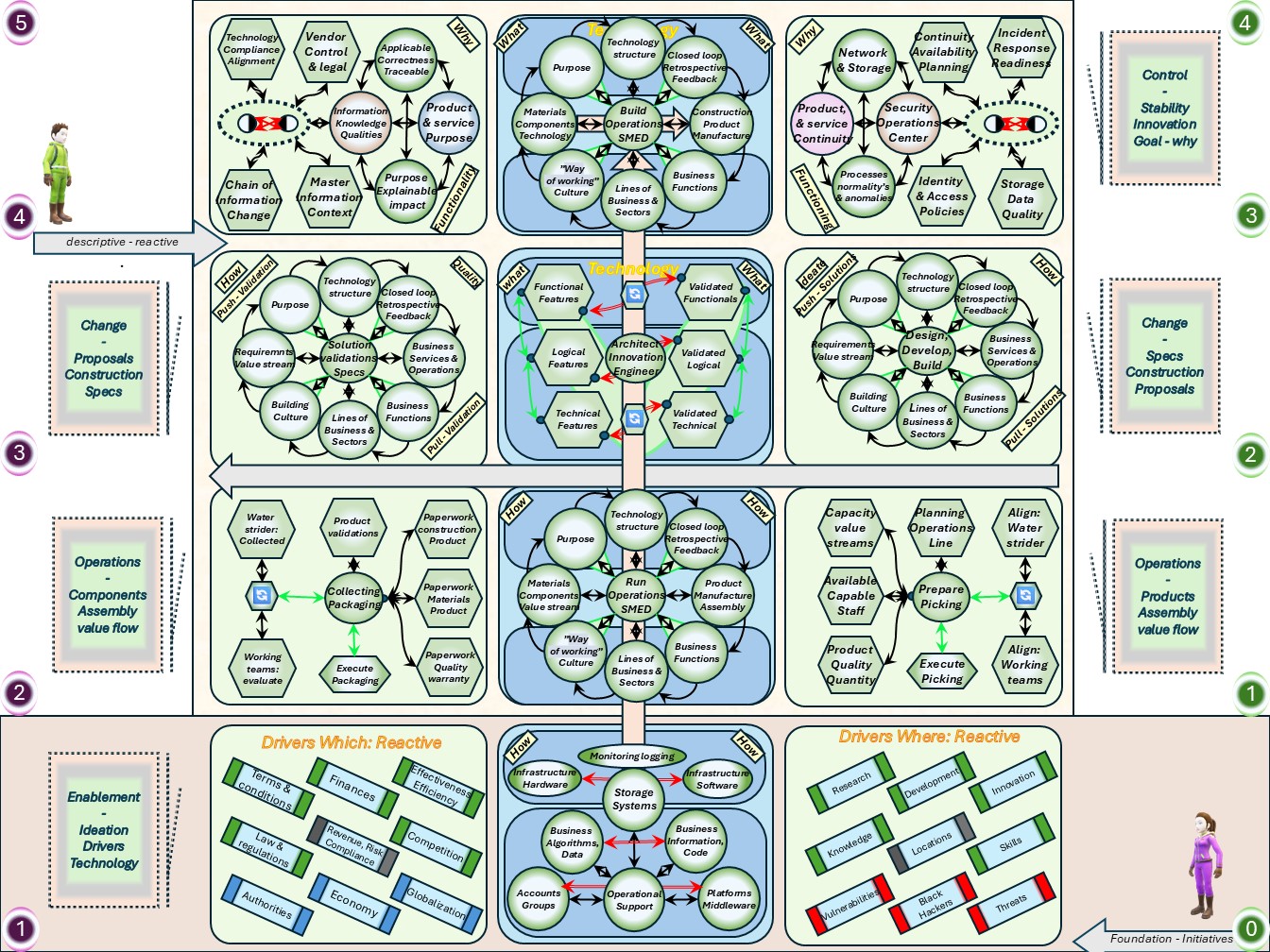

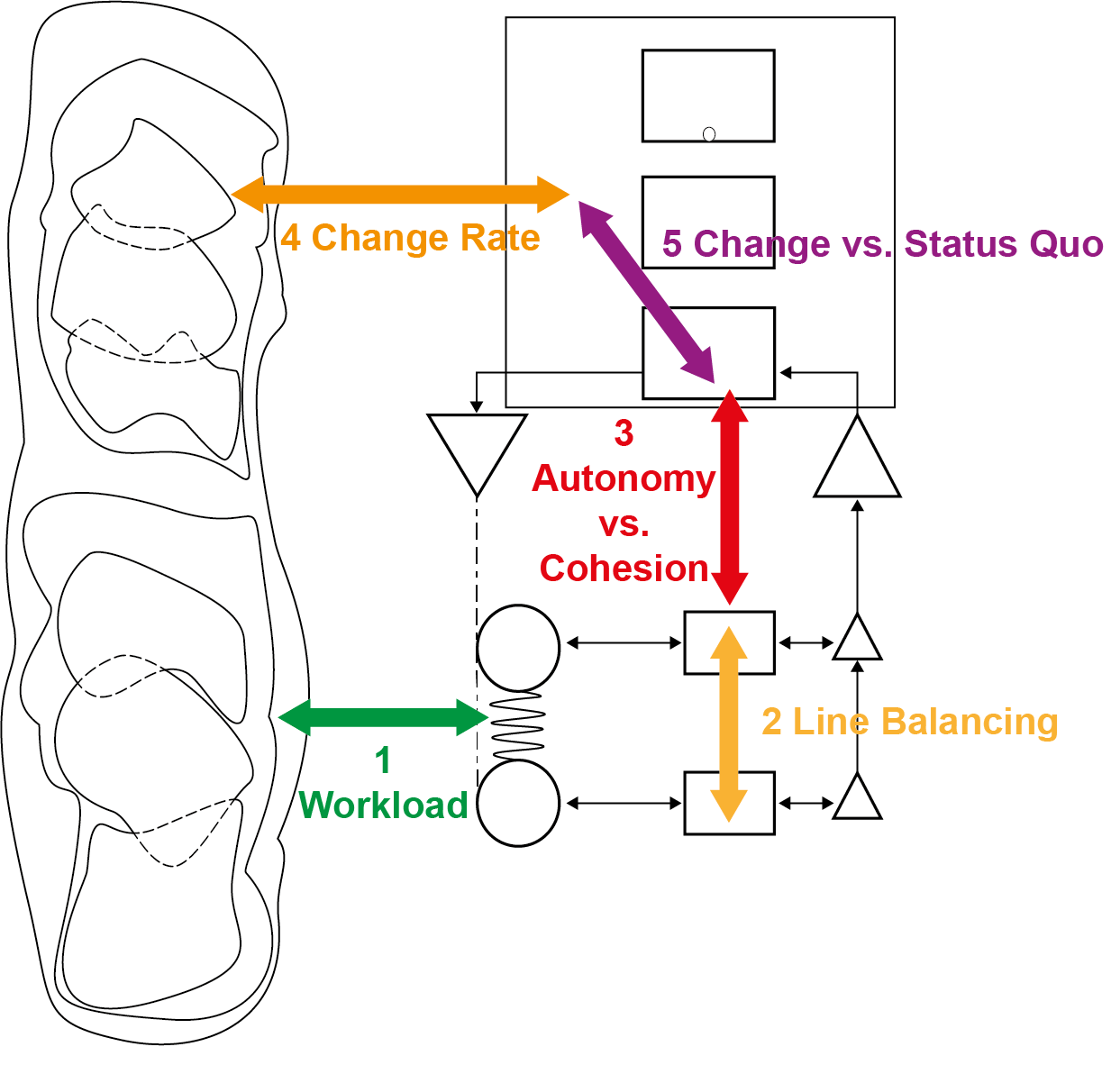

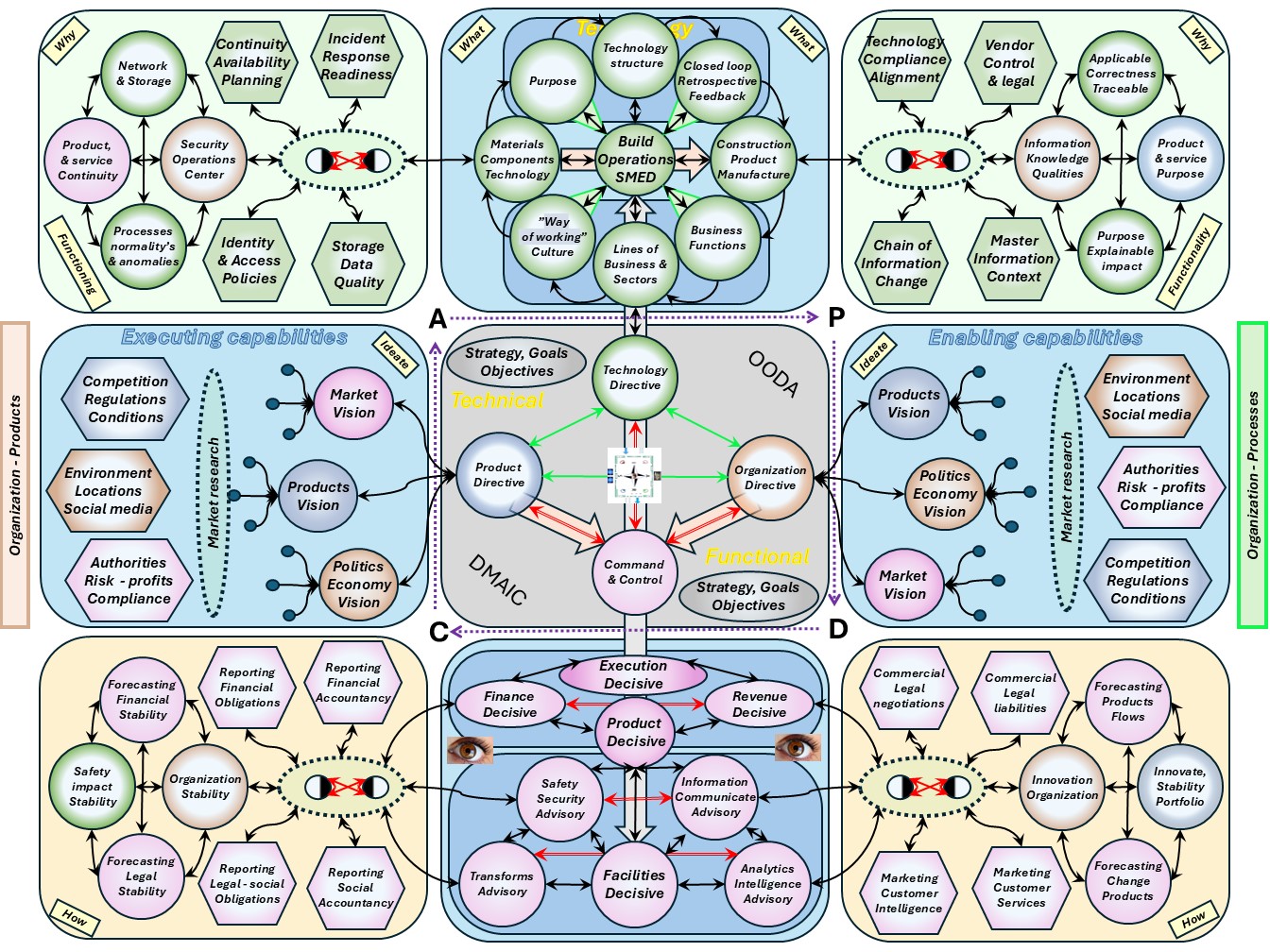

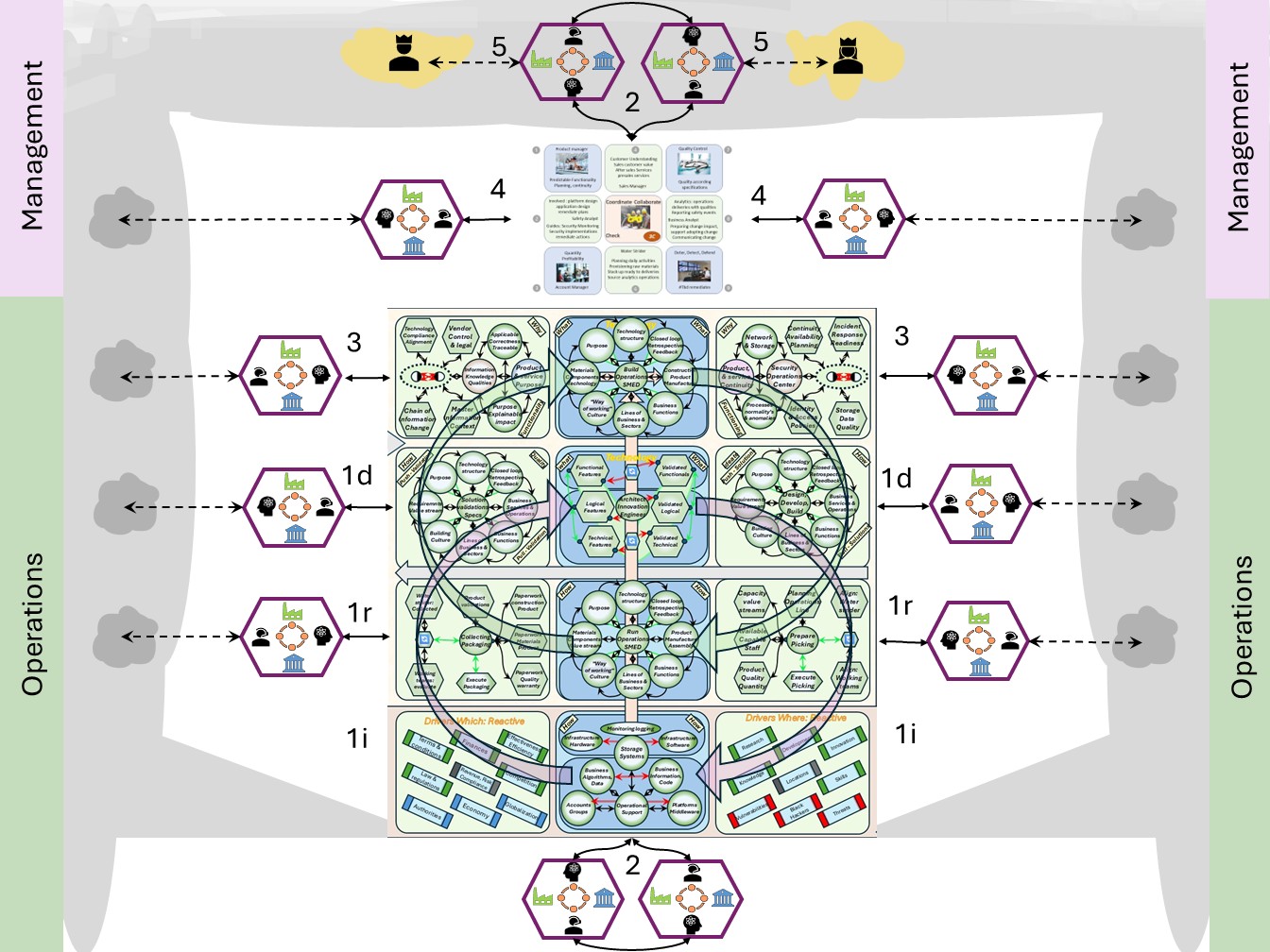

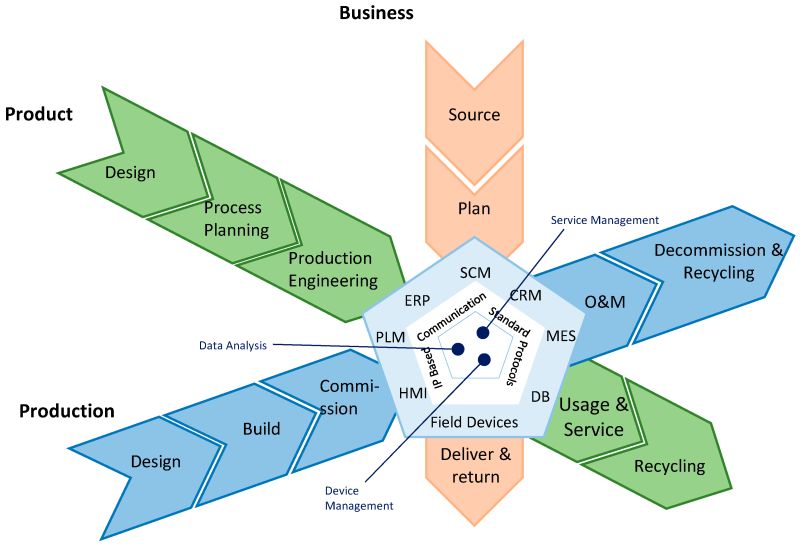

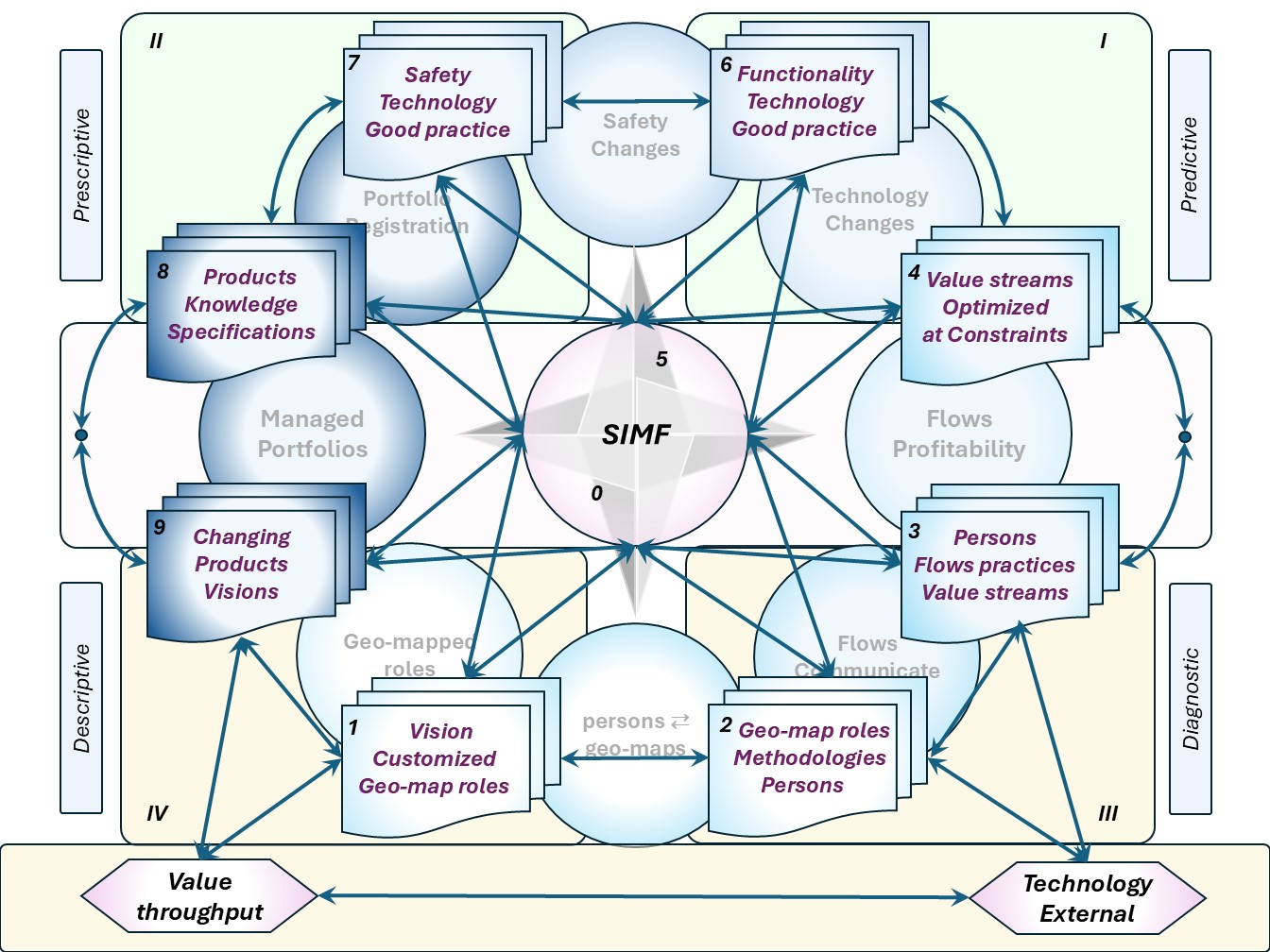

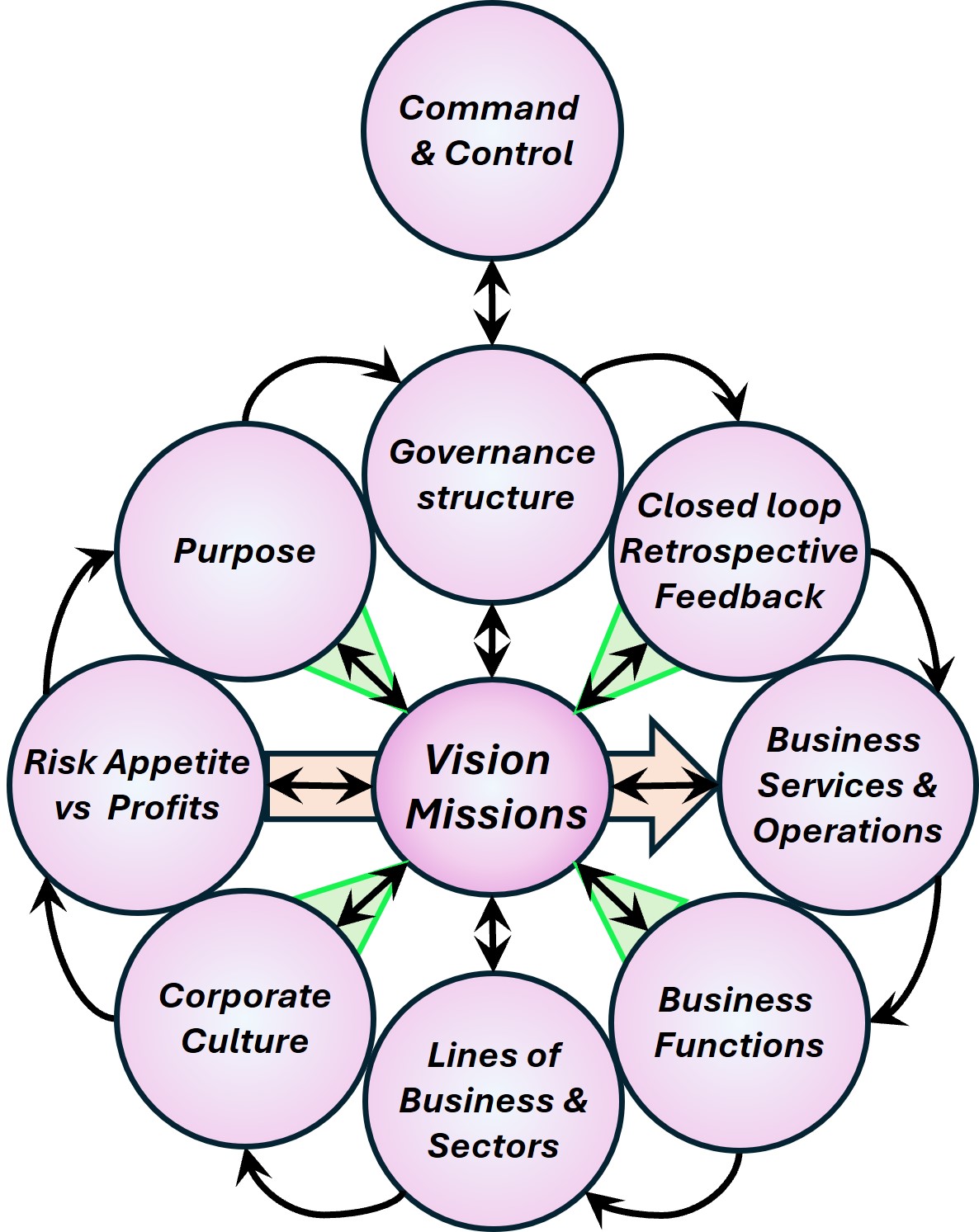

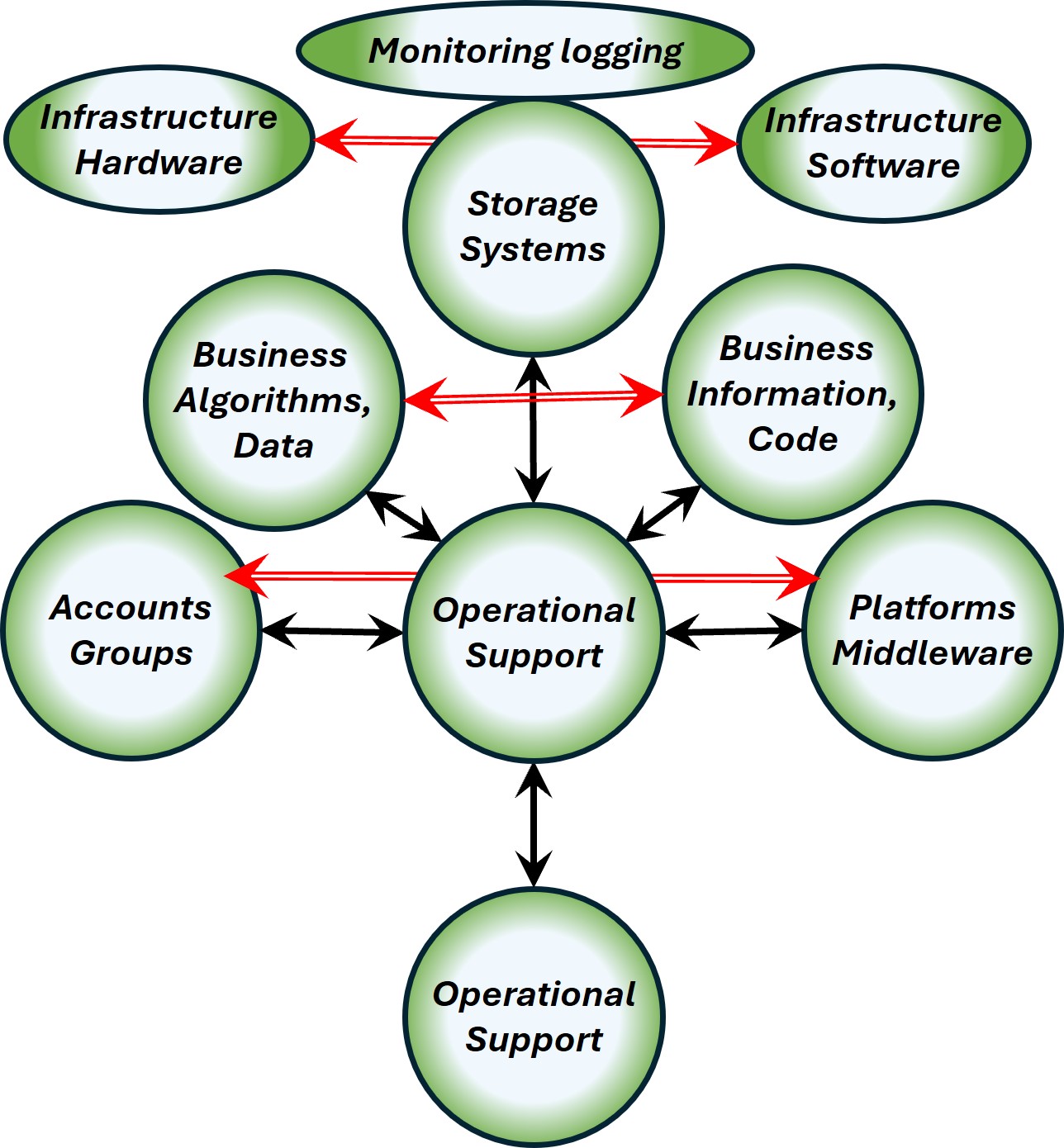

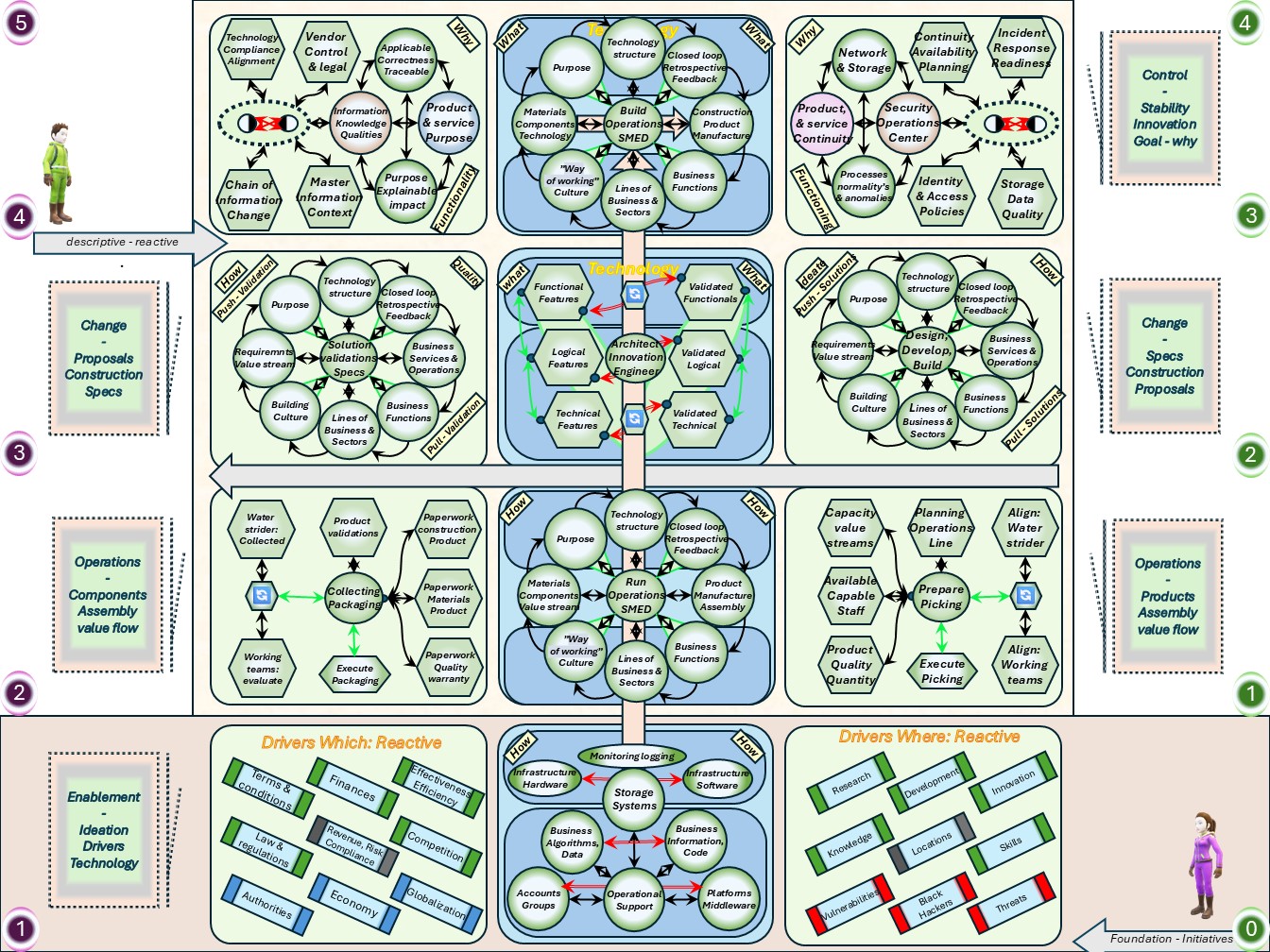

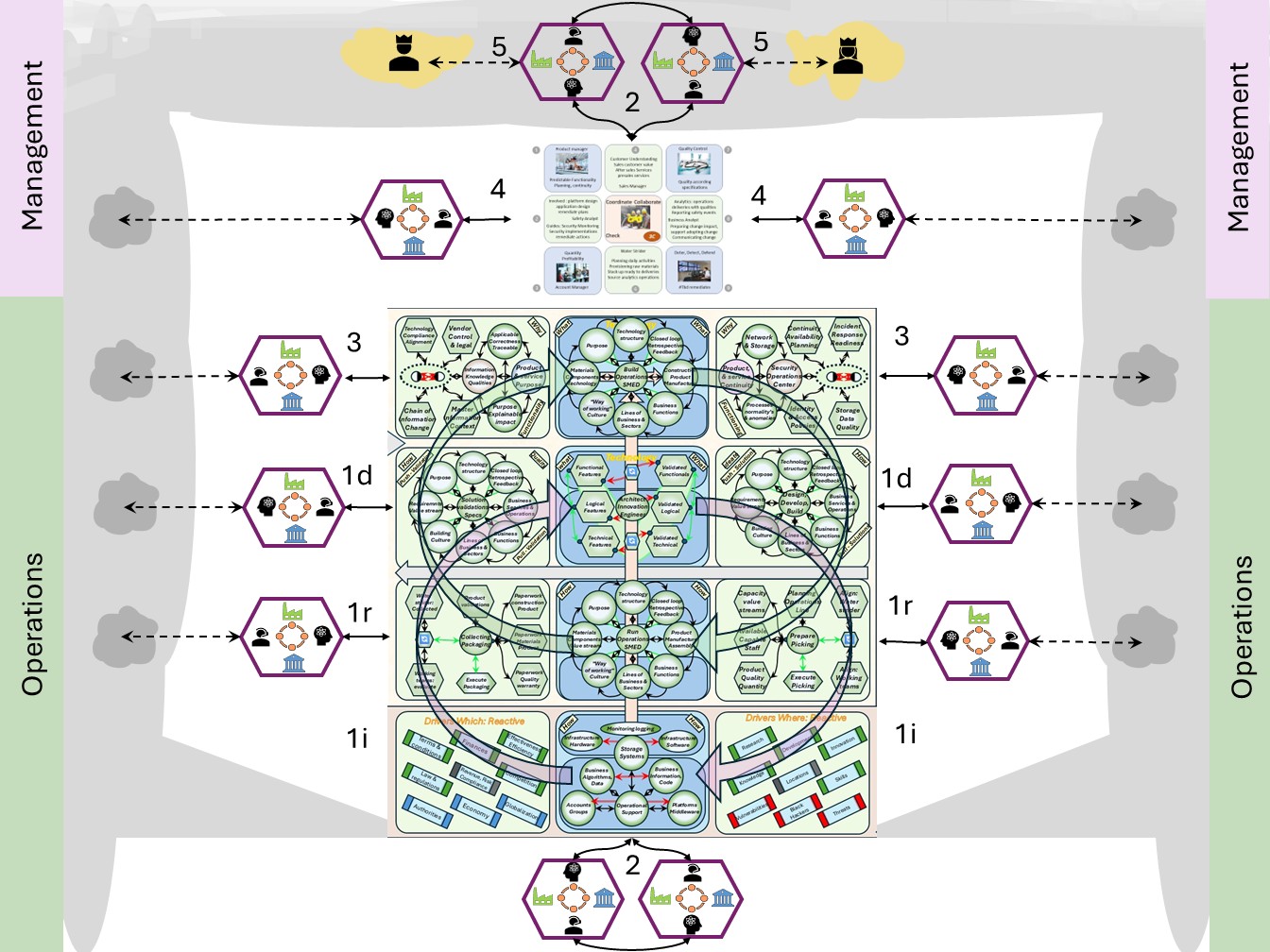

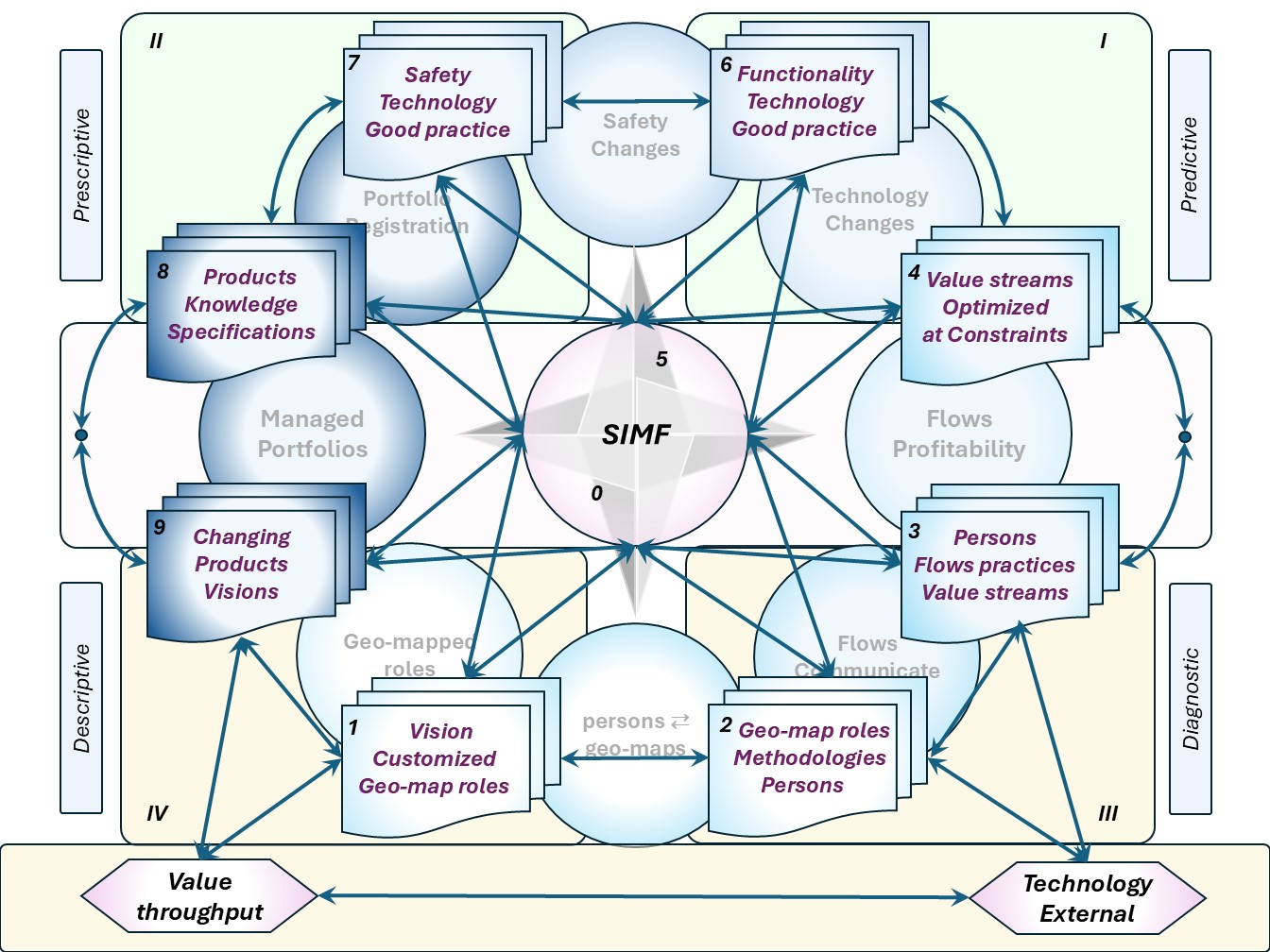

⚖ W-1.5.3 The viable system, autonomic technology

SIMF the processing for realisations, outside view

👁 Industrial age: the manager knows everything, workers are resources similar to machines.

👁 Information age, required change: a shift to distributed knowledge, power to the edges.

Understanding the technology service in their four levels (bottom-up).

- VSM System-1 Tech Operational VSM-1i the elementary 0-1 floor .

What is needed to react on immediate, very short term, is going by the synapse areas.

- VSM System-3 Tech Operational VSM-1r at the 1-2 floor. The centre where the organisationals value is created conform purpose.

The outside perspective changes: direction into right to left, rotation into clockwise.

- VSM System-4 Tech Operational VSM-1d Changes by missions: 2-3 floor level.

Applying the portfolio planning for purposes, goals with products.

- The change is triggered by feeds coming from the invisible backlog, suggestions.

- The result is going into the invisible adjusted portfolio.

- VSM System-5 Tech Operational VSM-3 mangement 3-4 floor, autonomic functions and algedonic channels.

A holistic information quality and holistic information safety is organisational dependent.

- Above this is VSM-4, VSM-5 and the scoped environment, VSM-6

The complete area of information processing for technology in a figure:

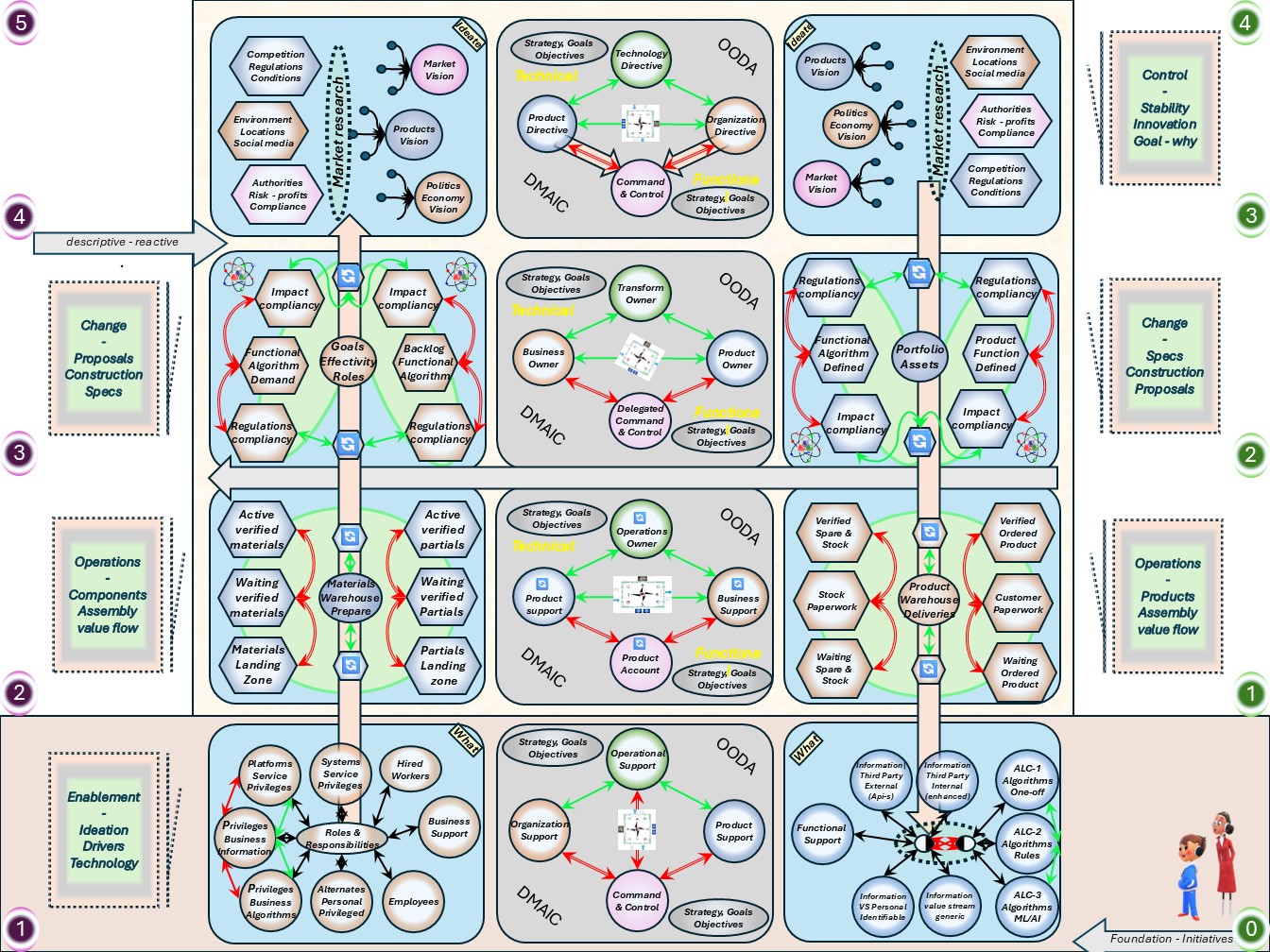

⚖ W-1.5.4 Mediation: Technical autonomy vs organisational control

SIMF Support in improving the product, service

The dichotomy Technical autonomy vs organisational control is not a common historical topic.

Diplomacy is not associated with wealth, honour and glory.

MEDIATION IN ARMED CONFLICT

The practice of mediation involves third-party intervention to facilitate conflict resolution between parties.

Mediators act as neutral facilitators, assisting in communication, negotiation, and finding common ground to reach a peaceful resolution.

It is a diplomatic tool used to de-escalate tensions, prevent conflicts, and promote cooperation.

Seeing the two different side that are a lot of frictions to manage:

- The situation of the different sides are a consequence of growth.

- With a lot of variety to confusion and ambiguity also grows.

Some peculiar interesting attention points:

- Operational VSM-1i 0-1 floor: mix system-5 orgnisation - system-1 technology.

Mediation:

- Conflict: Avoiding the easy choice in technology by the organisation.

- Goal: Promote product improvement, promote culture improvement.

- Operational VSM-1r flow: 1-2 floor. Both sides are missing system-3, synergy.

Mediation:

- Align: Choices for what is going into the process flow - Organisation.

- Align: Choices for what has been processed in the flow - Technology.

- Operational VSM-1d change : 2-3 floor. Both sides are missing system-3, synergy.

Mediation:

- Align wishes with expectations time/cost for activities - Organisation.

- Align the design build verify activities in qualities - Technology.

- Mangement VSM-3 : 3-4 floor. Mediation Technology Autonomic functions and the important secundary functions in the organisation.

The complete area of changing products, goods, services at information processing in a figure:

W-1.6 Maturity 0: Strategy impact understood

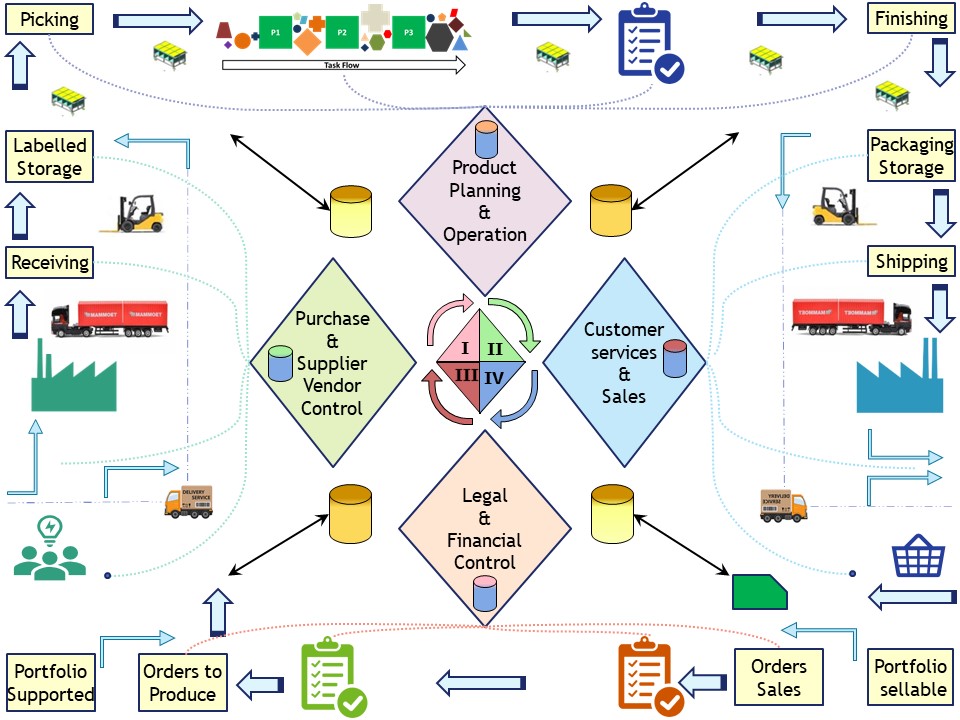

From the three PPT, People, Process, Technology interrelated areas in scopes.

- ❌ P - processes & information

- ❌ P - People Organization optimization

- ❌ T - Tools, Infrastructure

Only having the focus on others by Command and Control is not complete understanding of all laysers, not what Comand & Control should be.

Each layer has his own dedicated characteristics.

⚖ W-1.6.1 Determining the position, the situation

Don't waste your precious time on creating something that is not serving the greater good:

- People: find a way to empower employees to innovate and solve problems.

- processes: Start fostering a culture of long-term thinking, not short-term fixes and don't do the same strategy with same expectations year after year.

- Command & Control: Lead by example and start making things transparent.

Start moving beyond the “toolbox mentality” or "my Lean-thinking" and unlock real potential.

⚖ W-1.6.2 Individual logical irrational together

Paradoxes in lean, agile

Thinking about Lean Thinking Part IV Paradox Mindset

At 12m40 there is a nice list of paradoces in defining lean (see 12m40)

A complete list:

Lean Global 2024

Here's a terrific collection of paradoxes that are inherent in Lean, compiled by Rachel Reuter and Eric O. Olsen.

Rachel presented it, with John Shook, at last week's Lean Global Connection event.

Paradox, or apparent contradictions, can be in the challenge statement of the Improvement Kata model, or pop up just about anywhere along the way (Figure 2 below).

Practicing Toyota Kata teaches you to work toward challenges with a scientific mindset and approach, making you more accustomed to the discomfort that uncertainties and paradoxes bring.

It enhances your ability to create 'both/and' solutions rather than limiting yourself to 'either/or' options.

Scientific thinking and what Rachel and John refer to as 'paradox mindset' are closely related.

- Customers-focused yet employee empowering

Lean is driven by delivering value to the customer. Employees focus which ultimately benefits the customer.

Yet empowering and engaging employees to reach their full potential is the focus.

- Structured yet flexible

Lean views structure, including standardisation and stability as key enablers for flexibility, adaptability creativity and innovation.

The framework provides a foundation for continuous improvement.

- Bottom-up, yet top down

Lean requires leadership to provide clear direction and create an environment where all workers drive innovation and improvements.

Both leadership and individual contribution are essential.

- People-centric, respectful yet challenging

Lean shows respect for people by challenging them to learn, grow, and never settle for the status quo.

Respect includes providing physical professional and emotional safety to enable development.

The level of challenge is tailored to the individual's role and abelites, to stretch them appropriately.

- Stop, yet flow

Lean requires stopping to immediately address problems, yet this enables unsurpassed efficiency, productivity and flow.

You cannot have flow without quality vice versa.

- Reflection-seeking yet failure-tolerant

lean pursues perfection and defined by providing on demand detect free one by-one , waste free, safe products and services.

Yet it recognizes the importance of surfacing problems and learning from mistakes through continuous improvement, rather than wating for perfection.

Dominiating: "either-Or"

Befudding us for at leat 25000 years ...

- Heraclitus

- Lao Tsu, Tao Te Ching

- Zen, Either/Or_ Kierkegaard

With "Either-Or" explicitly dominiating much of our, especially western, thinking for centuries.

- Seeing situations in blach & white

- Right solutions vs wrong

No grey: no allowance for uncertaintity.

⚖ W-1.6.3 Value stream VaSM vs Viable system ViSM

Project, Programme, Portfolio (P3)

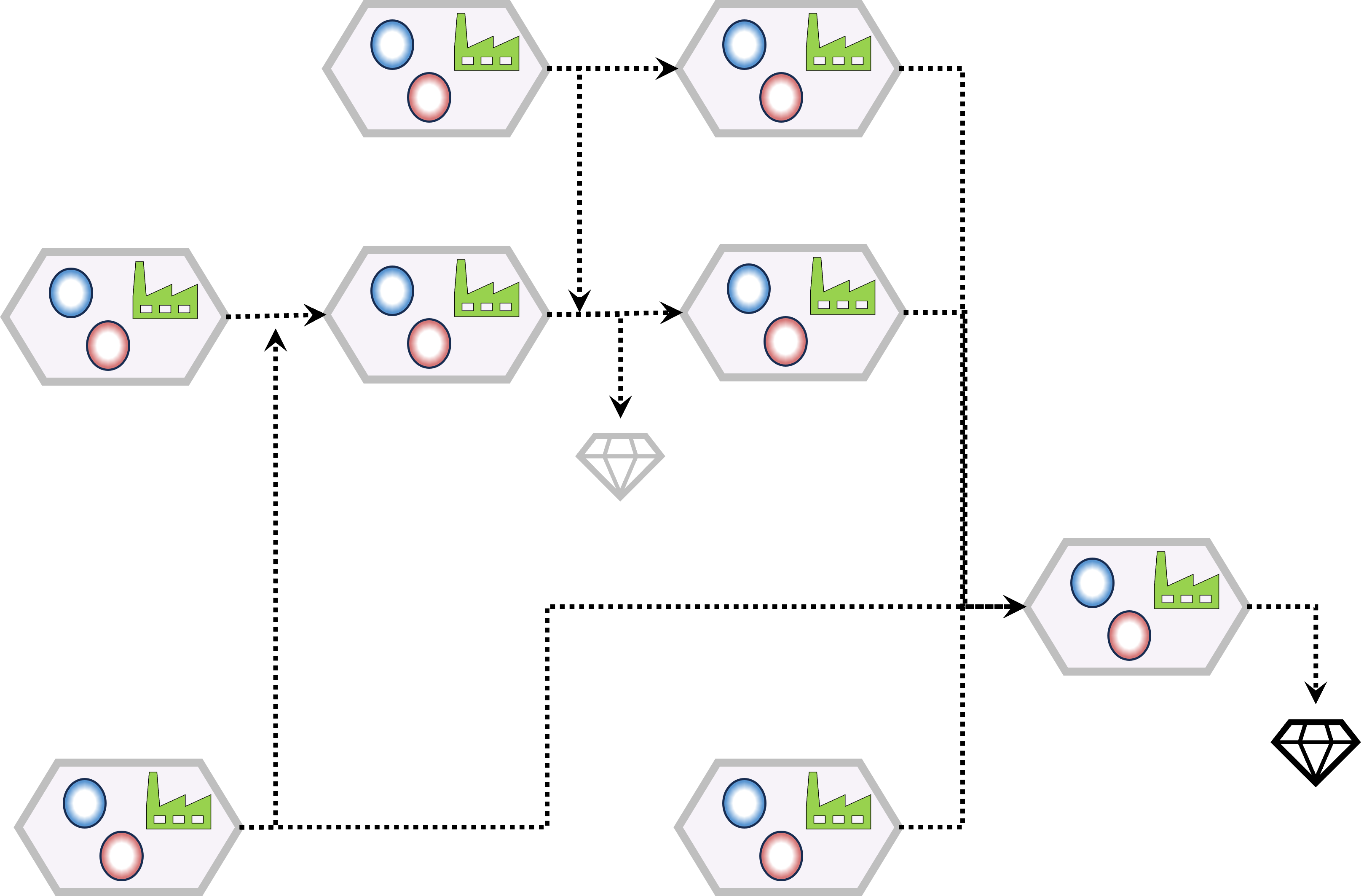

Project, Programme, and Portfolio Management (P3M) is the application of methods, procedures, techniques, and competencies to achieve a set of defined objectives.

It encompasses three key areas:

- Project Management: Focuses on delivering specific outputs within defined constraints such as time, cost, and quality. Projects are unique, transient endeavors with clear objectives and deliverables.

- Programme Management: Involves managing a group of related projects in a coordinated way to achieve benefits and control not available from managing them individually. Programmes are designed to deliver strategic objectives and transformational change.

- Portfolio Management: Concerns the centralized management of one or more portfolios to achieve strategic objectives. It involves selecting, prioritizing, and controlling an organization’s projects and programmes in line with its strategic goals and capacity to deliver.

Effective P3M ensures that initiatives are aligned with organizational strategy, resources are used efficiently, and desired outcomes are achieved.

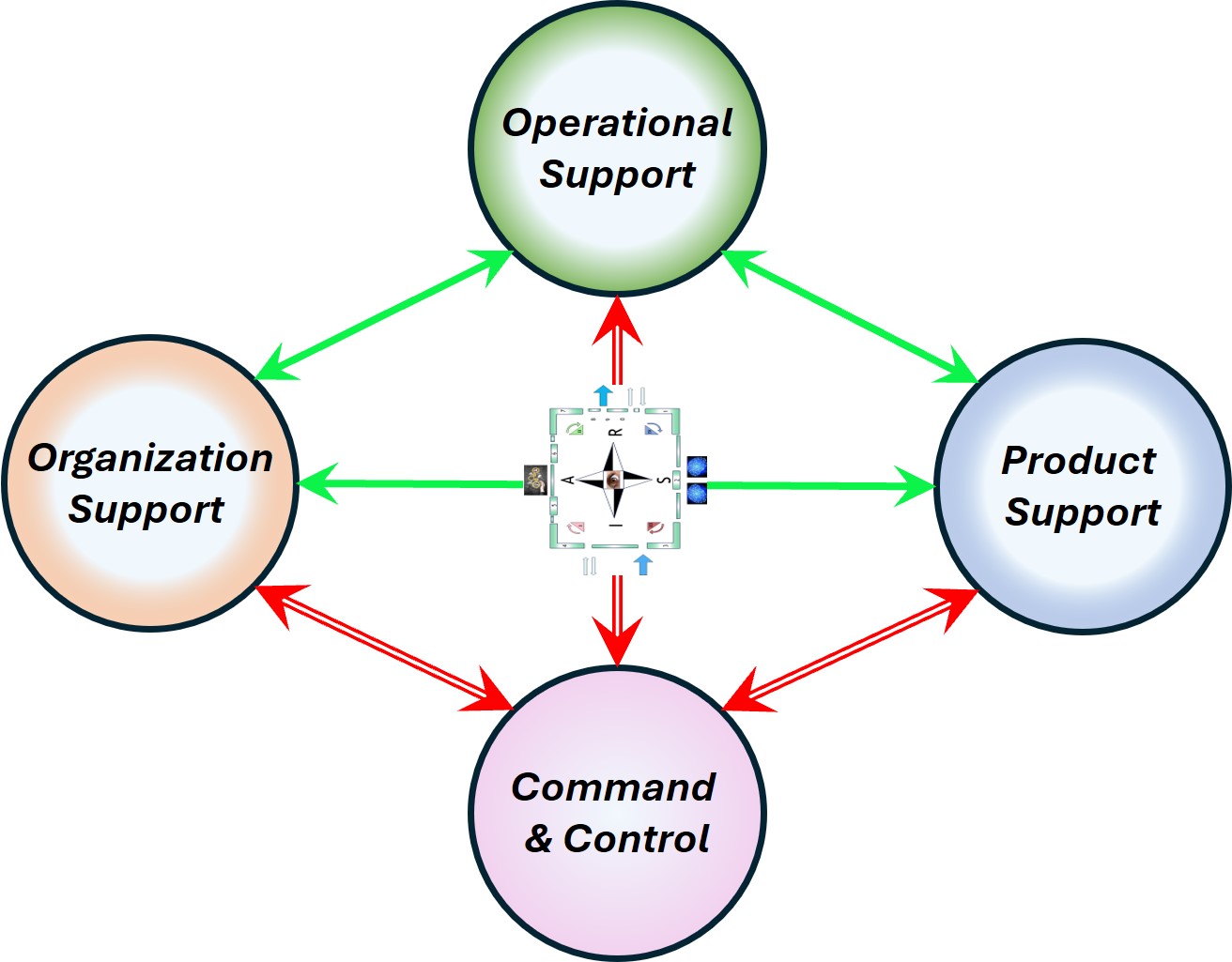

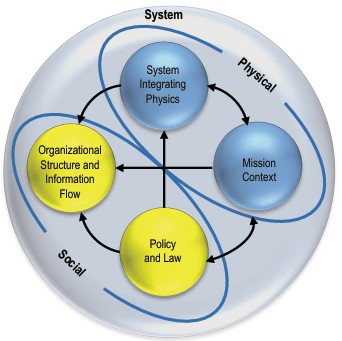

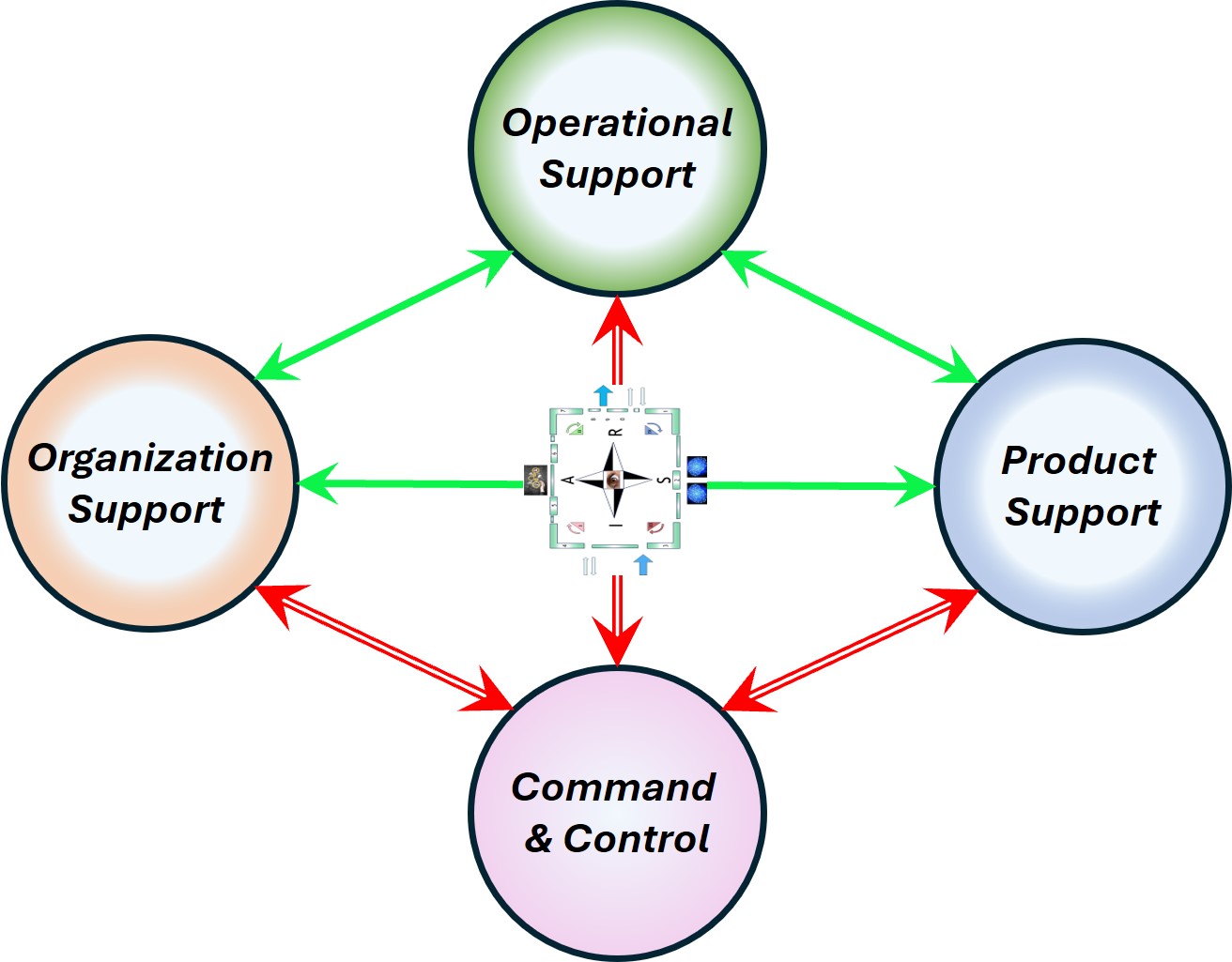

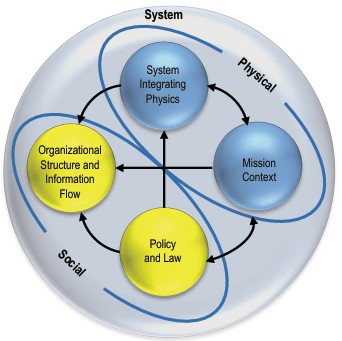

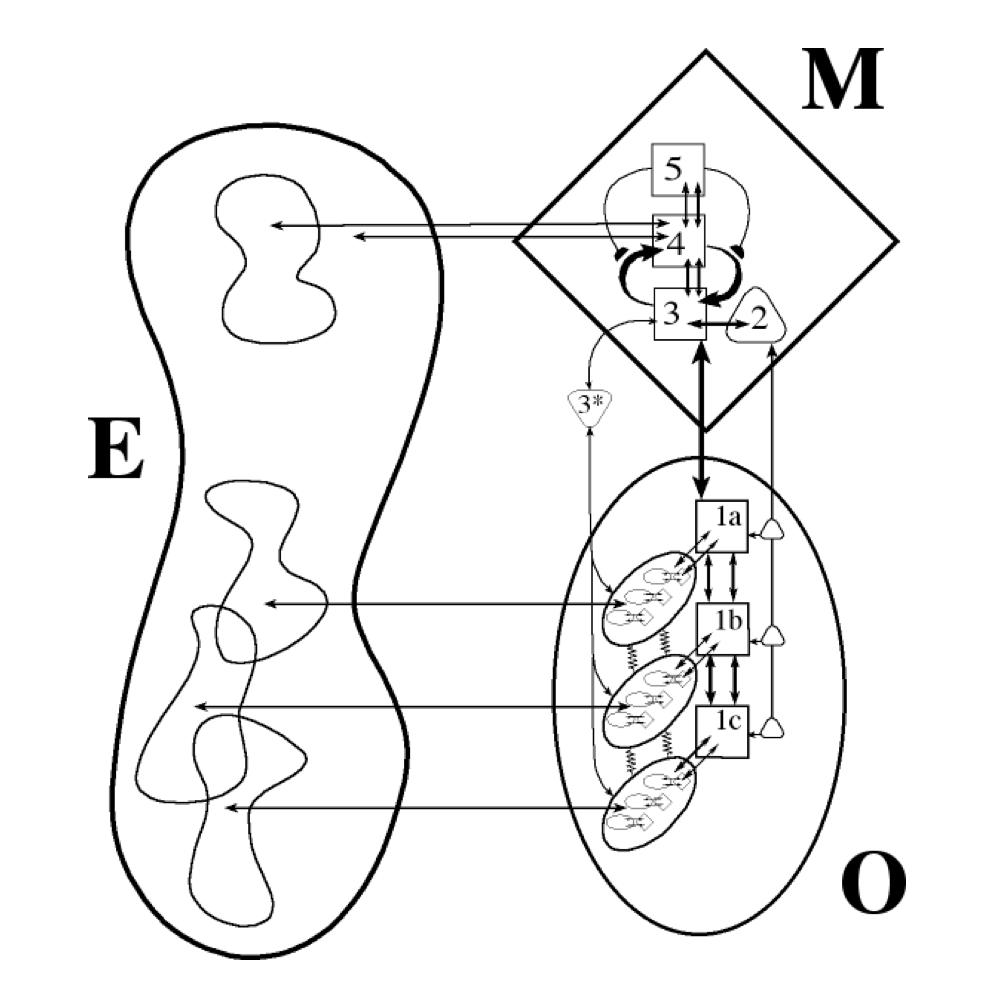

Cybernetics S1-S5 into S1-S6

The are three areas mentioned: Operation (O), Management (M) and the environment (E).

Systems are classified and numbered system-1 to system-5.

💡 The environment fits into the strict naming scheme when named system-6.

Command & control a viable system

"The commander must work in a medium which his eyes cannot see, which his best deductive powers cannot always fathom, and with which, because of constant changes, he can rarely become familiar."

Carl von Clausewitz, 1832. On War.

To put effective command and control into practice, we must first understand its fundamental nature—its purpose, characteristics, environment, and basic functioning.

We often think of command and control as a distinct and specialized function—like logistics, intelligence, electronic warfare, or administration—with its own peculiar methods, considerations, and vocabulary, and occurring independently of other functions.

But in fact, command and control encompasses all military functions and operations, giving them meaning and harmonizing them into a meaningful whole.

None of the above functions, or any others, would be purposeful without command and control.

Command and control is not the business of specialists—unless we consider the commander a specialist—because command and control is fundamentally the business of the commander.

Command and control is the means by which a commander recognizes what needs to be done and sees to it that appropriate actions are taken.

- Sometimes this recognition takes the form of a conscious command decision—as in deciding on a concept of operations.

- Sometimes it takes the form of a preconditioned reaction—as in immediate-action drills, practiced in advance so that we can execute them reflexively in a moment of crisis.

- Sometimes it takes the form of a rules-based procedure—as in the guiding of an aircraft on final approach.

- Some types of command and control must occur so quickly and precisely that they can be accomplished only by computers—such as the command and control of a guided missile in flight.

Other forms may require such a degree of judgment and intuition that they can be performed only by skilled, experienced people—as in devising tactics, operations, and strategies.

- Sometimes command and control occurs concurrently with the action being undertaken—in the form of real-time guidance or direction in response to a changing situation.

- Sometimes it occurs beforehand and even after. Planning, whether rapid/time-sensitive or deliberate, which determines aims and objectives, develops concepts of operations, allocates resources, and provides for necessary coordination, is an important element of command and control.

Furthermore, planning increases knowledge and elevates situational awareness.

Effective training and education, which make it more likely that subordinates will take the proper action in combat, establish command and control before the fact.

The immediate-action drill mentioned earlier, practiced beforehand, provides command and control.

A commander’s intent, expressed clearly before the evolution begins, is an essential part of command and control.

Likewise, analysis after the fact, which ascertains the results and lessons of the action and so informs future actions, contributes to command and control.

Some forms of command and control are primarily procedural or technical in nature—such as the control of air traffic and air space, the coordination of supporting arms, or the fire control of a weapons system.

Others deal with the overall conduct of military actions, whether on a large or small scale, and involve formulating concepts, deploying forces, allocating resources, supervising, and so on. This last form of command and control, the overall conduct of military actions, is our primary concern in this manual.

Unless otherwise specified, it is to this form that we refer. ...

An effective command and control system provides the means to adapt to changing conditions.

We can thus look at command and control as a process of continuous adaptation.

We might better liken the military organization to a predatory animal—seeking information, learning, and adapting in its quest for survival and success—than to some “lean, green machine.”

Like a living organism, a military organization is never in a state of stable equilibrium but is instead in a continuous state of flux—continuously adjusting to its sur- roundings. ...

Second, the action-feedback loop makes command and control a continuous, cyclic process and not a sequence of discrete actions—as we will discuss in greater detail later.

Third, the action-feedback loop also makes command and control a dynamic, interactive process of cooperation.

As we have discussed, command and control is not so much a matter of one part of the organization “getting control over” another as something that connects all the elements together in a cooperative effort.

All parts of the organization contribute action and feedback—“command” and “control”—in overall cooperation.

Command and control is thus fundamentally an activity of reciprocal influence—give and take among all parts, from top to bottom and side to side.

(MCDP6 1996).

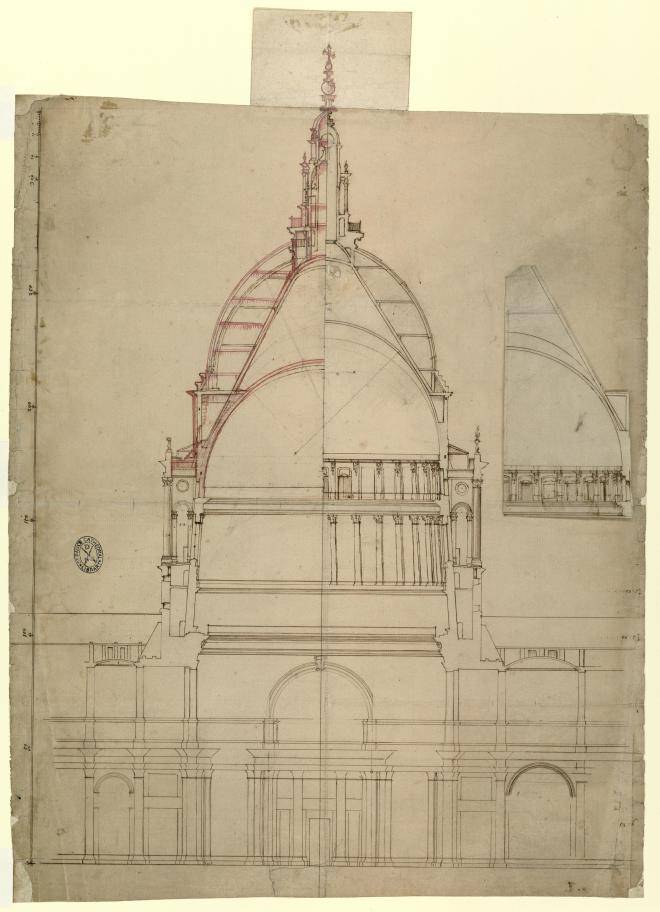

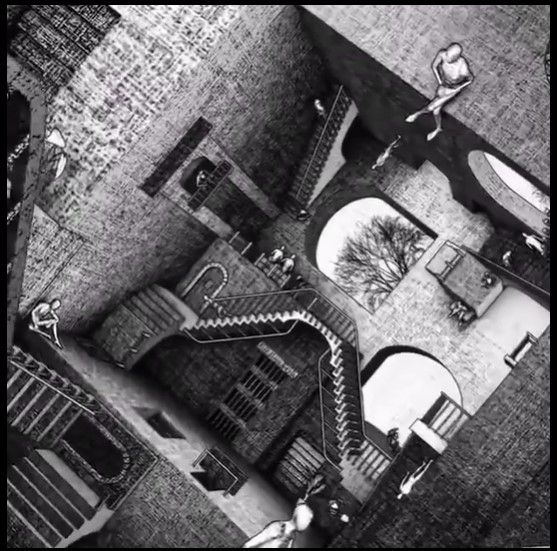

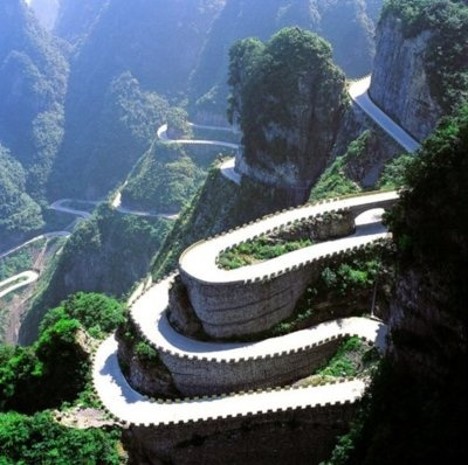

⚖ W-1.6.4 Flexibility in architecture, engineering, design

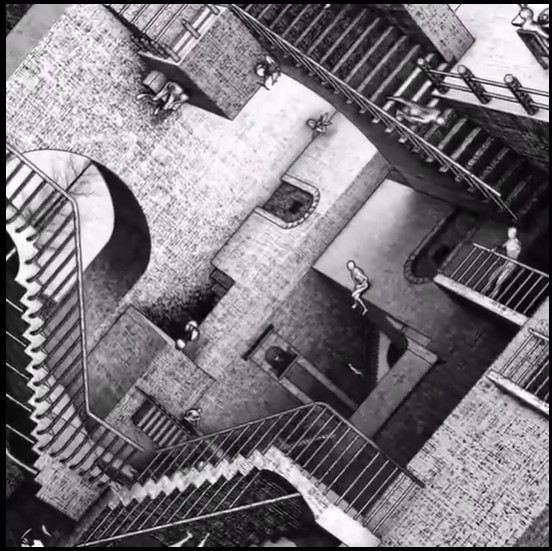

Fixed mindset trivial systems

The mindset for trivial systems is that they are that simple everyone understand it, anybody can do it.

As soon it is experienced non-trivial than nobody is there to do it.

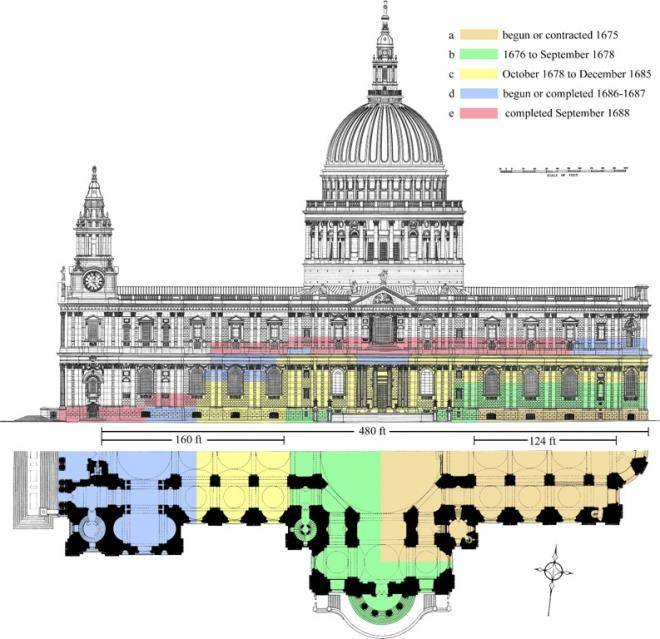

Example St-Pauls cathedral

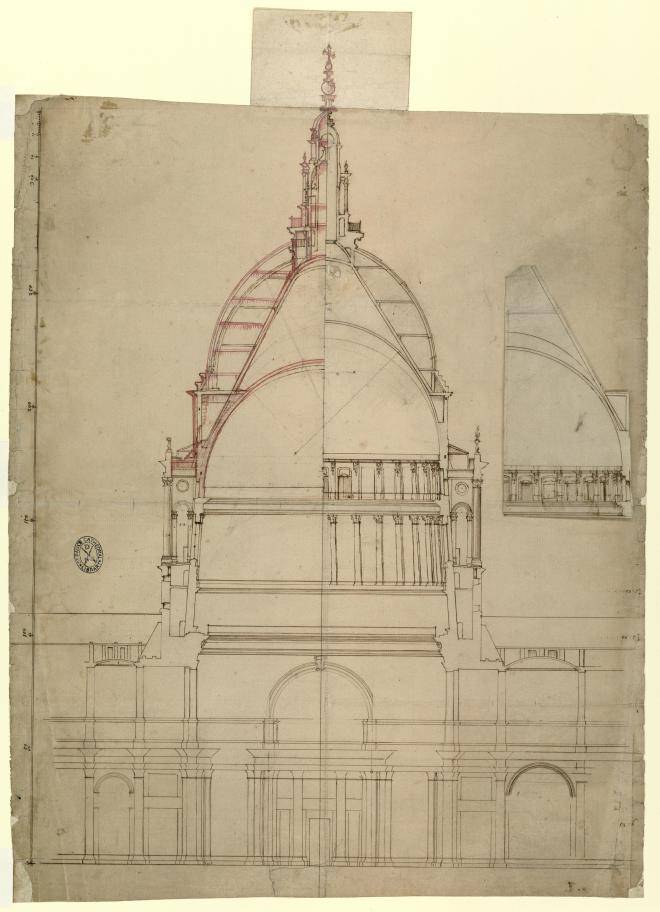

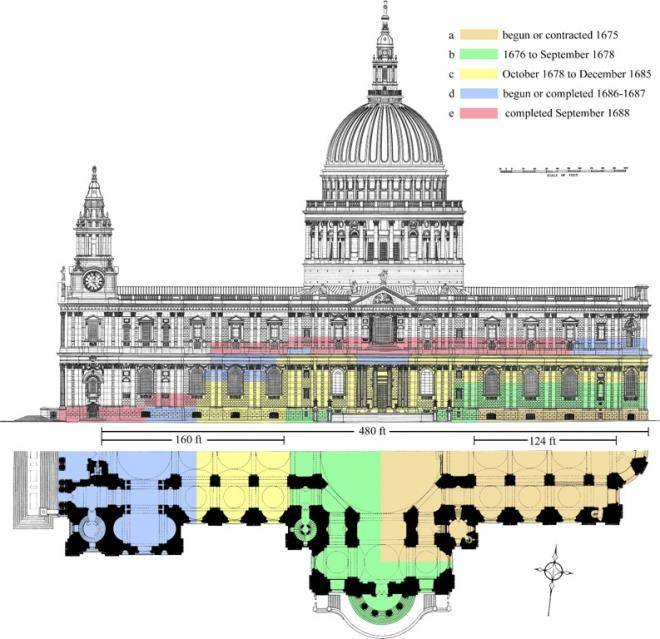

A cathedral is a non trivial construction, for example: St Pauls Cathedral .

(to visit) and

(wikipedia)

The task of designing a replacement structure was officially assigned to Sir Christopher Wren on 30 July 1669.

Charged by the Archbishop of Canterbury, in agreement with the Bishops of London and Oxford, to design a new cathedral that was "Handsome and noble to all the ends of it and to the reputation of the City and the nation".

The design process took several years, but a design was finally settled and attached to a royal warrant, with the proviso that Wren was permitted to make any further changes that he deemed necessary.

The cathedral was declared officially complete by Parliament on 25 December 1711 (Christmas Day).

The task of designing a replacement structure was officially assigned to Sir Christopher Wren on 30 July 1669.

Charged by the Archbishop of Canterbury, in agreement with the Bishops of London and Oxford, to design a new cathedral that was "Handsome and noble to all the ends of it and to the reputation of the City and the nation".

The design process took several years, but a design was finally settled and attached to a royal warrant, with the proviso that Wren was permitted to make any further changes that he deemed necessary.

The cathedral was declared officially complete by Parliament on 25 December 1711 (Christmas Day).

The final design as built differs substantially from the official Warrant design.

Many of these changes were made over the course of the thirty years as the church was constructed, and the most significant was to the dome.

The final design as built differs substantially from the official Warrant design.

Many of these changes were made over the course of the thirty years as the church was constructed, and the most significant was to the dome.

After the Great Model, Wren resolved not to make further models and not to expose his drawings publicly, which he found did nothing but "lose time, and subject [his] business many times, to incompetent judges".

The Great Model survives and is housed within the cathedral itself.

The cathedral is one of the most famous and recognisable sights of London.

Its dome, surrounded by the spires of Wren's City churches, has dominated the skyline for over 300 years.

At 365 ft (111 m) high, it was the tallest building in London from 1710 to 1963.

The dome is still one of the highest in the world.

The St Paul’s Collection of Wren Office drawings is unrivalled as a record of the design and construction of a single great building by one architect in the early modern era.

Consisting of 217 drawings for St Paul’s dating between 1673 and 1752 (nine others, catalogued in the final section, are unconnected with the building), the Collection was originally part of a much larger corpus.

This included 67 drawings now in the Wren Collection at All Souls College, Oxford, and a single plan at Sir John Soane’s Museum in London.

The whole corpus is only a fraction of what must originally have existed, for it contains very few executed designs and just one full-sized profile for construction, although hundreds – if not thousands – of such drawings must have been made.

The St Paul’s Collection of Wren Office drawings is unrivalled as a record of the design and construction of a single great building by one architect in the early modern era.

Consisting of 217 drawings for St Paul’s dating between 1673 and 1752 (nine others, catalogued in the final section, are unconnected with the building), the Collection was originally part of a much larger corpus.

This included 67 drawings now in the Wren Collection at All Souls College, Oxford, and a single plan at Sir John Soane’s Museum in London.

The whole corpus is only a fraction of what must originally have existed, for it contains very few executed designs and just one full-sized profile for construction, although hundreds – if not thousands – of such drawings must have been made.

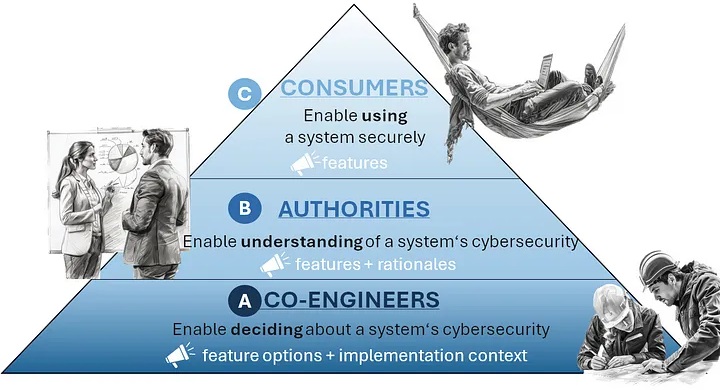

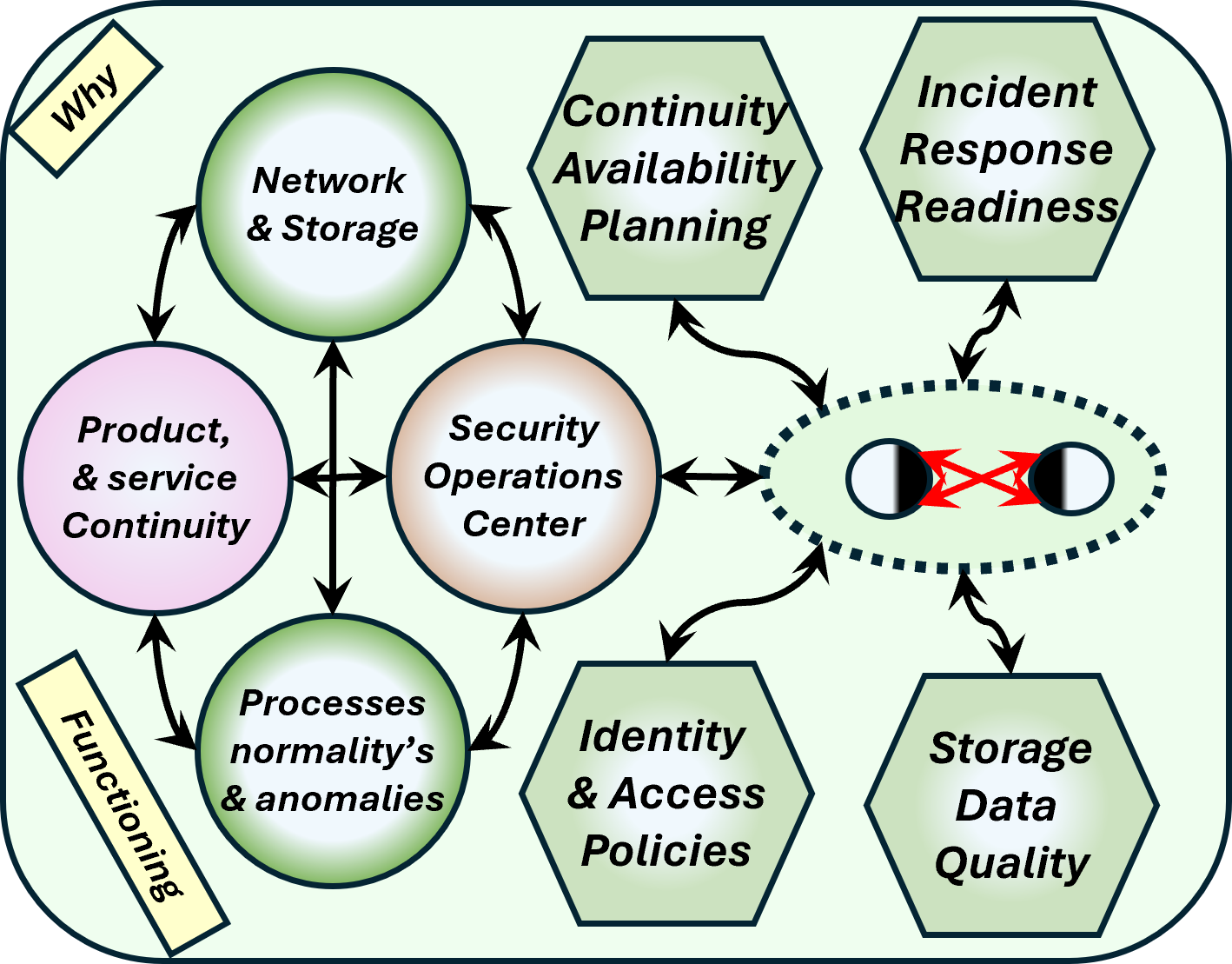

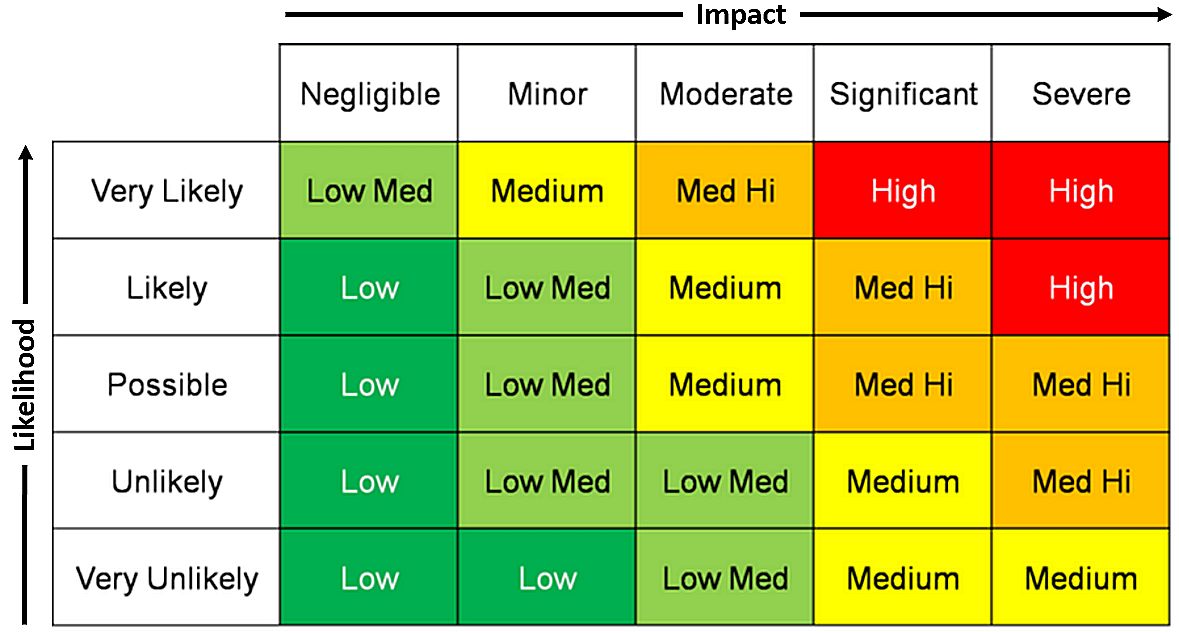

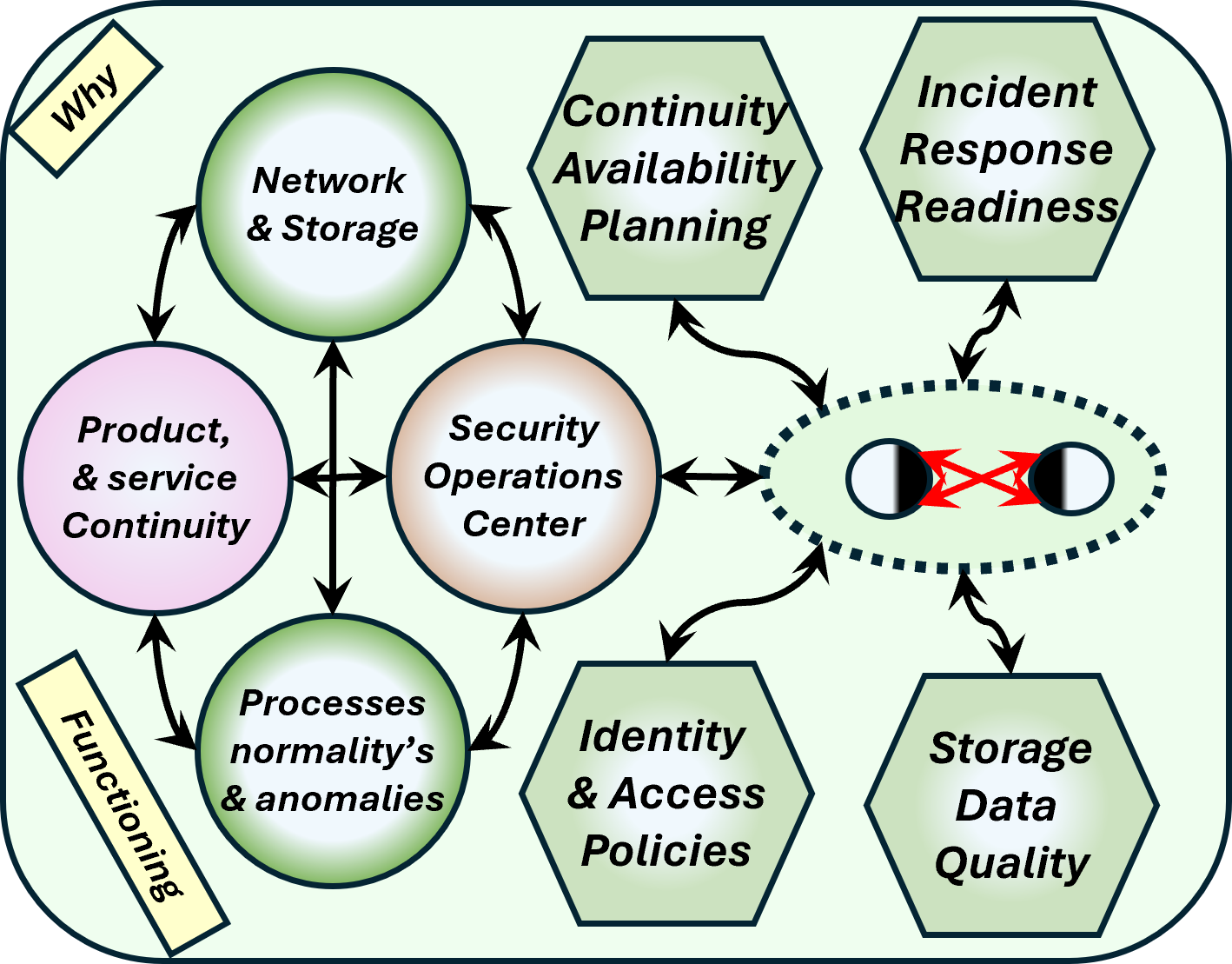

He revised the design stage by stage as work moved from one part of the building to the next.