Computer & Information technology.

Information Technology foundation.

Science & engineering.

The distance in a flat mapping looks big,

🎭 another dimension:

next door.

🔰 the most logical

begin anchor.

This is the most technical part.

As enablers, these foundations should create no dependencies for business processes.

There are three subtopics:

- 1/ information - computers

- 2/ encryption, communication meaning

- 3/ statistics, data exploration

Progress

- 2019 week:27

- rebuilding as subpage with new CSS style. Only topic: Computer science.

Mechanical & Theoretical beginning.

Analog computing.

When a quick, acceptable result is enough, an analog device is a good option. The problem with such devices is their single purpose and limited accuracy.

The advantage of dedicated scale models is that they reveal issues that cannot be seen in any other way. The oldest kind of application is navigation.

An astrolabe is an elaborate inclinometer, and can be considered an analog calculator capable of working out several different kinds of problems in astronomy.

Information technology, 19th-20th century.

Calculating for a practical goal took off with industrialisation.

Ada Lovelace: the first programmer. (Wikipedia)

Between 1842 and 1843, Ada translated an article by Italian military engineer Luigi Menabrea on the calculating engine, supplementing it with an elaborate set of notes, simply called Notes.

These notes contain what many consider to be the first computer program, that is, an algorithm designed to be carried out by a machine.

Charles Babbage: considered by some to be a "father of the computer".

Babbage is credited with inventing the first mechanical computer that eventually led to more complex electronic designs,

though all the essential ideas of modern computers are to be found in Babbage's analytical engine.

I would prefer to see the Jacquard loom system as the origin of automation.

That is in another dimension, next door.

General purpose, powered by electricity.

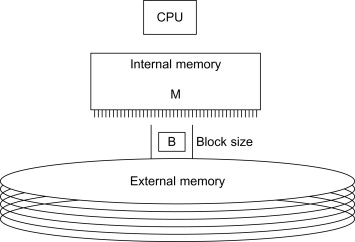

CPU, memory, storage.

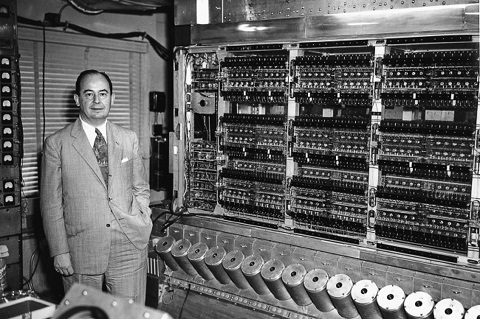

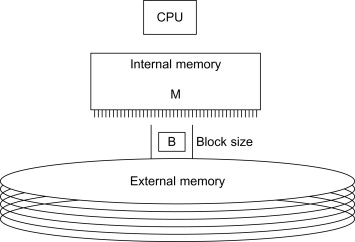

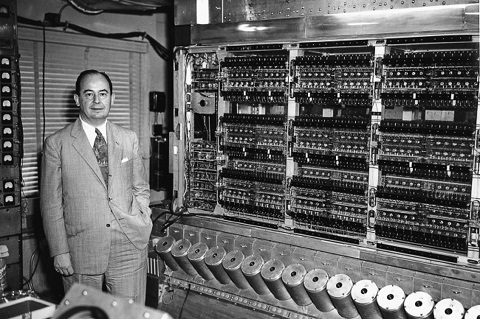

The von Neumann architecture is the foundation for investigating performance before doing any tuning.

The term "von Neumann architecture" has evolved to mean any stored-program computer in which an instruction fetch and a data operation cannot occur at the same time because they share a common bus.

This is referred to as the von Neumann bottleneck and often limits the performance of the system.

The design of a von Neumann architecture machine is simpler than a Harvard architecture machine, which is also a stored-program system but has one dedicated set of address and data buses for reading and writing to memory, and another set of address and data buses to fetch instructions.
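The difference between a shared bus and separate buses can be made concrete with a toy cycle count. This is only a sketch under strong assumptions: the function `bus_cycles` and its cost model (one cycle per fetch, one cycle per data access, perfect overlap on separate buses) are hypothetical and do not describe any real machine.

```python
def bus_cycles(program, shared_bus):
    """Return the number of bus cycles a toy machine needs.

    `program` is a list of booleans: True means the instruction also
    reads or writes one data word. Every instruction costs one fetch.
    """
    if not program:
        return 0
    fetches = len(program)
    data_accesses = sum(program)
    if shared_bus:
        # von Neumann: fetches and data accesses queue up on one bus.
        return fetches + data_accesses
    # Harvard: a data access overlaps with the next instruction fetch,
    # so only a data access by the last instruction adds a cycle.
    return fetches + (1 if program[-1] else 0)


program = [True, False, True, True]
print(bus_cycles(program, shared_bus=True))   # 7
print(bus_cycles(program, shared_bus=False))  # 5
```

In this model the gap between the two counts grows with the share of instructions that touch data, which is the bottleneck effect in miniature.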

Developing programming languages.

Mother of the 3 GL languages.

Coding by changing hardwired connections (1 GL) or by writing mnemonics, as in assembler (2 GL), is hard, basic work. GL stands for the generation of the language.

Grace Hopper (Yale edu)

She is best known for developing programming languages, but she did far more than that, some of it in an era when a lot was going on.

In addition to their work for the Navy, Hopper and her colleagues also completed calculations for the army and -ran numbers- used by John von Neumann in developing the plutonium bomb dropped on Nagasaki, Japan.

Being a lead in the programming, coining a word:

Though the term "bug" had been used by engineers since the 19th century to describe a mechanical malfunction, Hopper was the first to refer to a computer problem as a "bug" and to speak of "debugging" a computer.

Promoting and educating:

As the number of computer languages proliferated, the need for a standardized language for business purposes grew. In 1959 COBOL (short for "common business-oriented language") was introduced as the first standardized general business computer language.

Although many people contributed to the "invention" of COBOL, Hopper promoted the language and its adoption by both military and private-sector users. Throughout the 1960s she led efforts to develop compilers for COBOL.

Data, Information structuring.

Relational data, Transactional usage.

Edgar F. Codd: the man who killed CODASYL.

An English computer scientist who, while working for IBM, invented the relational model for database management, the theoretical basis for relational databases and relational database management systems.

He made other valuable contributions to computer science, but the relational model, a very influential general theory of data management, remains his most mentioned, analyzed and celebrated achievement.

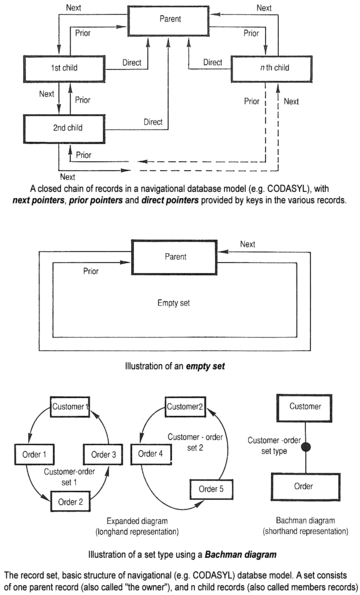

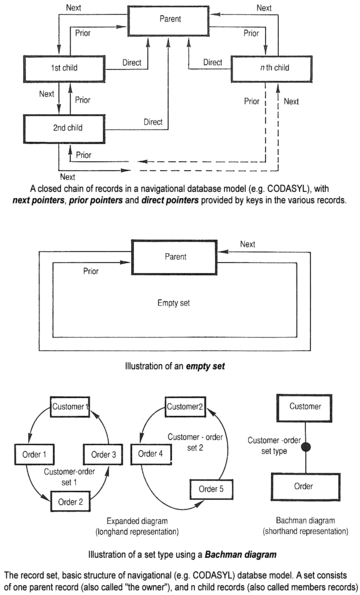

The DBTG group of CODASYL, with Charles Bachman as the leading figure in network data modelling, defined the DBMS standard before SQL. (Wikipedia)

CODASYL, the Conference/Committee on Data Systems Languages, was a consortium formed in 1959 to guide the development of a standard programming language that could be used on many computers.

This effort led to the development of the programming language COBOL and other technical standards.

Almost forgotten: a network model database is a NoSQL one.

In October 1969 the DBTG published its first language specifications for the network database model which became generally known as the CODASYL Data Model. (wiki)

This specification in fact defined several separate languages: a data definition language (DDL) to define the schema of the database, another DDL to create one or more subschemas defining application views of the database;

and a data manipulation language (DML) defining verbs for embedding in the COBOL programming language to request and update data in the database.
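To give a feel for the navigational style of the network model, here is a minimal sketch in Python rather than in the CODASYL languages themselves. The class `Record`, the set name `EMPLOYS` and the sample records are hypothetical; the point is only that a program walks from an owner record to its member records through a named set, instead of declaring a query.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """A record that can own named sets of member records."""
    key: str
    sets: dict = field(default_factory=dict)

    def connect(self, set_name, member):
        # Add a member record to the named set owned by this record.
        self.sets.setdefault(set_name, []).append(member)

    def members(self, set_name):
        # Navigate from the owner to its members, in insertion order.
        return list(self.sets.get(set_name, []))


department = Record("SALES")
department.connect("EMPLOYS", Record("ALICE"))
department.connect("EMPLOYS", Record("BOB"))

names = [employee.key for employee in department.members("EMPLOYS")]
print(names)  # ['ALICE', 'BOB']
```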

Science information processing.

Quality Correctness Elegance.

Edsger Dijkstra: the question of software quality through mathematical abstraction.

One of the most influential figures of computing science's founding generation, Dijkstra helped shape the new discipline from both an engineering and a theoretical perspective.

His fundamental contributions cover diverse areas of computing science, including compiler construction, operating systems, distributed systems, sequential and concurrent programming, programming paradigm and methodology, programming language research, program design, program development, program verification, software engineering principles, graph algorithms, and philosophical foundations of computer programming and computer science.

Many of his papers are the source of new research areas.

Several concepts and problems that are now standard in computer science were first identified by Dijkstra or bear names coined by him.

Structured programming, Jackson structured programming, the Nassi–Shneiderman diagram and Algol were all basic elements of education in software design in the years that followed.

"The revolution in views of programming started by Dijkstra's iconoclasm led to a movement known as structured programming, which advocated a systematic, rational approach to program construction. Structured programming is the basis for all that has been done since in programming methodology, including object-oriented programming."

The software crisis of the 60´s: organizing conferences.

NATO reports (Brian Randell), a beautiful document:

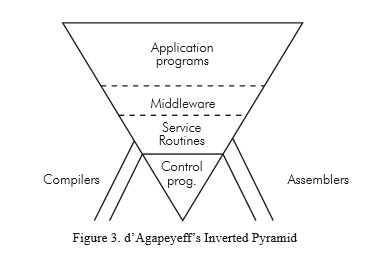

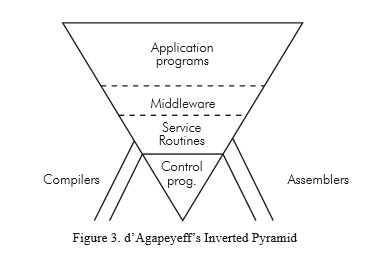

NATO 1969, mentioning E. Dijkstra and d´Agapeyeff´s Inverted Pyramid.

Dependencies arise in layers where you don´t want to be dependent. Decouple them by defining interfaces (middleware).

This is because no matter how good the manufacturer´s software for items like file handling it is just not suitable; it´s either inefficient or inappropriate.

We usually have to rewrite the file handling processes, the initial message analysis and above all the real-time schedulers,

because in this type of situation the application programs interact and the manufacturer´s software tends to throw them off at the drop of a hat, which is somewhat embarrassing.

On the top you have a whole chain of application programs.

The point about this pyramid is that it is terribly sensitive to change in the underlying software such that the new version does not contain the old as a subset.

It becomes very expensive to maintain these systems and to extend them while keeping them live.
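The sensitivity of the pyramid to changes underneath is what an interface layer tries to limit. The sketch below is one possible illustration in Python, not something taken from the NATO report: `FileStore`, `InMemoryStore` and `archive_message` are hypothetical names. The application code depends only on the interface, so the storage layer underneath can be replaced without touching it.

```python
from typing import Protocol


class FileStore(Protocol):
    """The interface the application layer is allowed to depend on."""

    def read(self, name: str) -> str: ...

    def write(self, name: str, text: str) -> None: ...


class InMemoryStore:
    """One interchangeable implementation; a vendor library could be another."""

    def __init__(self) -> None:
        self._files: dict = {}

    def read(self, name: str) -> str:
        return self._files[name]

    def write(self, name: str, text: str) -> None:
        self._files[name] = text


def archive_message(store: FileStore, name: str, text: str) -> str:
    # Application logic: it knows the interface, not the implementation.
    store.write(name, text.strip())
    return store.read(name)


print(archive_message(InMemoryStore(), "msg-1", "  hello  "))  # hello
```

The cost noted in the text does not disappear: the interface itself has to be maintained and kept stable.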

Stating that virtual machines or Docker containers are cheap is not correct. The cost lies in supporting and maintaining what runs on top of them. Nothing has really changed since those days of 1969.

d´Agapeyeff: (from Reducing the cost of software) Programming is still too much of an artistic endeavour.

We need a more substantial basis to be taught and monitored in practice on the:

- structure of programs and the flow of their execution.

- shaping of modules and an environment for their testing.

- simulation of run time conditions.

TV Interview EWD Quality Correctness Elegance

Existing knowledge for doing ICT.

Software science.

Before realising software in code, the design should be clearly understood.

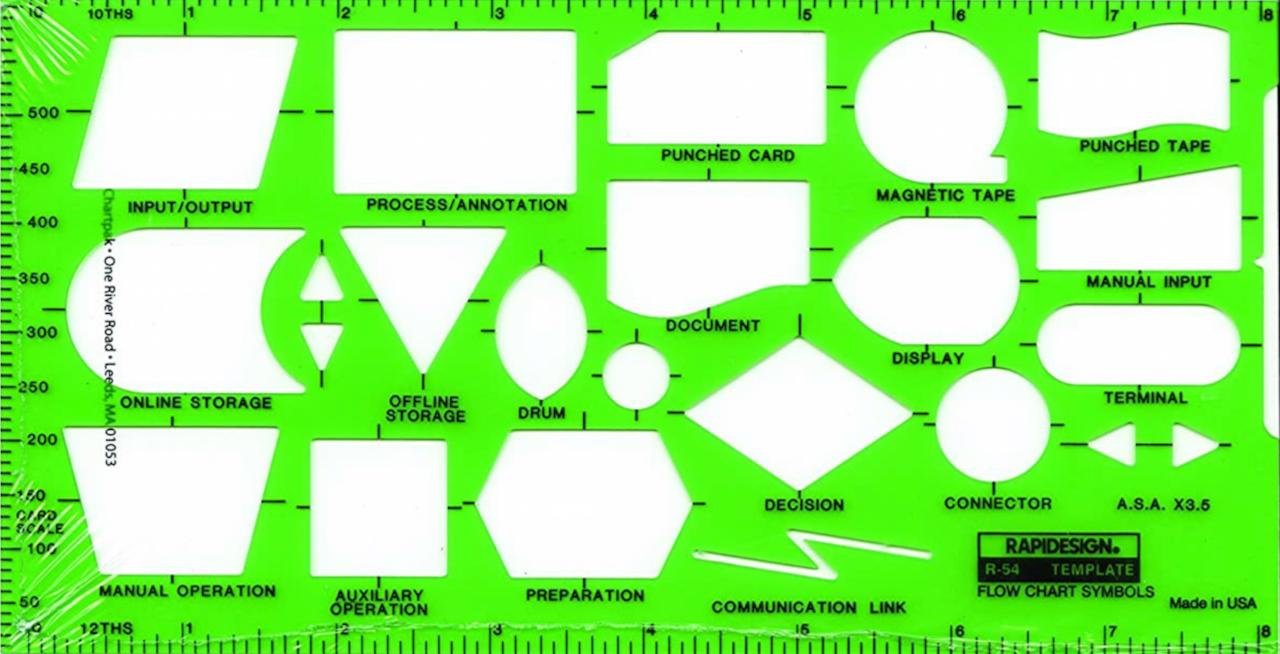

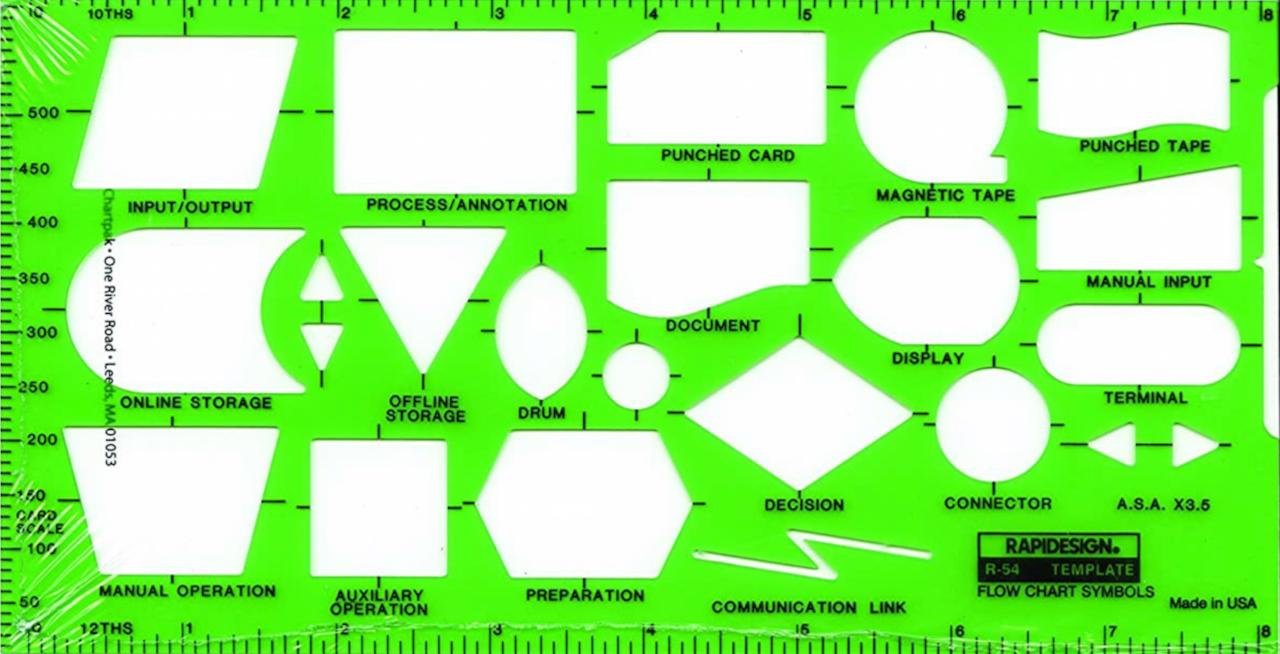

Charting flows.

In the stone age of information technology this was very important. Because hardware was expensive, there was no escape from doing it thoroughly.

The most used approach was using a flow-chart describing the process using information elements.

Nassi–Shneiderman.

A flow-chart has the disadvantage that it does not enforce logical constructs and allows gotos that obscure the flow.

Nassi–Shneiderman diagram (NSD)

Following a top-down design, the problem at hand is reduced into smaller and smaller subproblems, until only simple statements and control flow constructs remain.

Nassi–Shneiderman diagrams reflect this top-down decomposition in a straightforward way, using nested boxes to represent subproblems.

Consistent with the philosophy of structured programming, Nassi–Shneiderman diagrams have no representation for a GOTO statement.
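The nesting of boxes in such a diagram corresponds directly to nested blocks in structured code. As a minimal sketch (the function `summarize` is a hypothetical example, not part of any cited material), the code below uses only sequence, iteration and selection, each block sitting inside its parent and no jump leaving it.

```python
def summarize(values):
    """Count the positive values and return their count and average."""
    positives = 0                # sequence: plain statements
    total = 0
    for value in values:         # iteration box
        if value > 0:            # selection box, true branch
            positives += 1
            total += value
        else:                    # selection box, false branch
            pass                 # nothing to do for zero or negatives
    average = total / positives if positives else 0.0
    return positives, average


print(summarize([3, -1, 5]))  # (2, 4.0)
```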

🎯 Foundation Goal (lean).

Doing a program process design was taught as a mandatory step, without any explanation of why. The goals are the basics of:

- Correctness. The process should work without uncontrolled failures.

- Adequacy. The process should be a good fit for the intended job.

- Elegance. A simple understandable process, avoiding unnecessary complexity, for the fittest.

In a trial-and-error approach to building something, surprises, errors and misunderstandings are common and unavoidable.

Some take-aways:

- Verify all input components are available before doing any processing.

- When an object is not complete to process, have it delayed for a limited period and/or let a person review it.

- Don´t show all possible exceptions in the normal flow. Use a dedicated list for that. Exceptions may break all sub process rules but must leave the environment in a stable, valid state.
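These take-aways can be sketched as a small batch routine. Everything named here is an assumption for illustration: the required fields, the retry limit `MAX_RETRIES`, the `Outcome` container and the order layout are hypothetical. Input is checked before any processing, incomplete items are delayed a limited number of times and then handed to a person, and exceptions are collected in a dedicated list instead of cluttering the normal flow.

```python
from dataclasses import dataclass, field

REQUIRED_FIELDS = ("customer", "amount")  # hypothetical input components
MAX_RETRIES = 3                           # hypothetical limit on delays


@dataclass
class Outcome:
    processed: list = field(default_factory=list)
    delayed: list = field(default_factory=list)
    for_review: list = field(default_factory=list)
    exceptions: list = field(default_factory=list)


def run_batch(orders):
    outcome = Outcome()
    for order in orders:
        # 1. Verify that all input components are present first.
        missing = [name for name in REQUIRED_FIELDS if order.get(name) is None]
        if missing:
            retries = order.get("retries", 0)
            if retries < MAX_RETRIES:
                # 2. Incomplete: delay it, but only a limited number of times.
                outcome.delayed.append({**order, "retries": retries + 1})
            else:
                outcome.for_review.append(order)  # let a person look at it
            continue
        try:
            outcome.processed.append((order["customer"], float(order["amount"])))
        except (TypeError, ValueError) as error:
            # 3. Exceptions go to their own list; the batch state stays valid.
            outcome.exceptions.append((order, str(error)))
    return outcome


result = run_batch([
    {"customer": "A", "amount": "10"},
    {"customer": "B"},
    {"customer": "C", "retries": 3},
    {"customer": "D", "amount": "abc"},
])
print(result.processed)  # [('A', 10.0)]
```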

These are the same kind of principles as in lean manufacturing.

Software Languages.

The building of new software languages has not stopped. That is a signal that information processing has still not matured into a well-founded profession.

Semicolon-based languages.

Algol 60, as a semicolon-based language, bypassed the column requirements that came with Hollerith cards. That was a good choice.

ALGOL 60 was used mostly by research computer scientists in the United States and in Europe.

Its use in commercial applications was hindered by the absence of standard input/output facilities in its description and the lack of interest in the language by large computer vendors.

ALGOL 60 did however become the standard for the publication of algorithms and had a profound effect on future language development.

Cards were numbered in case the deck was accidentally dropped, and the compiler was instructed to ignore positions 73-80. In commercial and technical environments Cobol and Fortran were used.

These two languages had stricter column specifications and were not good at enforcing a structured approach to coding. All three were 3 GL languages.

Python: based on columns through indentation.

Python uses whitespace indentation, rather than curly brackets or keywords, to delimit blocks.

An increase in indentation comes after certain statements; a decrease in indentation signifies the end of the current block.

Thus, the program´s visual structure accurately represents the program´s semantic structure.

This feature is sometimes termed the off-side rule, which some other languages share, but in most languages indentation doesn´t have any semantic meaning.
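A short illustration of the off-side rule follows; the function `classify` is just a hypothetical example. Each increase in indentation opens a block, and returning to an outer level closes it, without any braces or end keywords.

```python
def classify(numbers):
    labels = []
    for n in numbers:              # indentation opens the loop body
        if n % 2 == 0:             # a deeper level opens the if block
            labels.append("even")
        else:
            labels.append("odd")
    return labels                  # back at loop level: the loop has ended


print(classify([1, 2, 3]))  # ['odd', 'even', 'odd']
```

Moving the `return` line one level deeper would place it inside the loop and change the result, which shows that the layout carries meaning.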

From 3 GL languages to more advanced ones: low code.

Procedural programming languages leave the details of accessing objects fully to the programmer. Examples are Cobol, Java and Python.

When many objects are processed in a similar way, much of the coding of repetitions can be handed over to the language. RPG and SAS (base) are examples.
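The contrast can be shown inside one language. This is only a sketch in Python, not RPG or SAS, and the sample `sales` rows and both function names are hypothetical: the first version spells out the repetition step by step, the second states what is wanted and leaves the looping to language constructs.

```python
sales = [("north", 120), ("south", 80), ("north", 30)]  # hypothetical rows


def totals_procedural(rows):
    # The programmer manages the loop, the lookup and the accumulation.
    totals = {}
    for region, amount in rows:
        if region not in totals:
            totals[region] = 0
        totals[region] += amount
    return totals


def totals_declarative(rows):
    # State the result per region; the repetition is left to the language.
    regions = {region for region, _ in rows}
    return {r: sum(a for reg, a in rows if reg == r) for r in regions}


print(totals_procedural(sales) == totals_declarative(sales))  # True
```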

🎯 New goal: with low code, much more is handed over to the language and the language interface (graphical clicking).

Information named: "data".

The technical hardware does not differentiate between business logic (the process) and business data (what is processed).

Business data usually is modelled in relationships (DBMS).

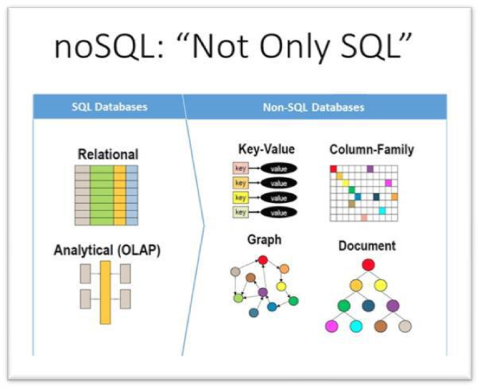

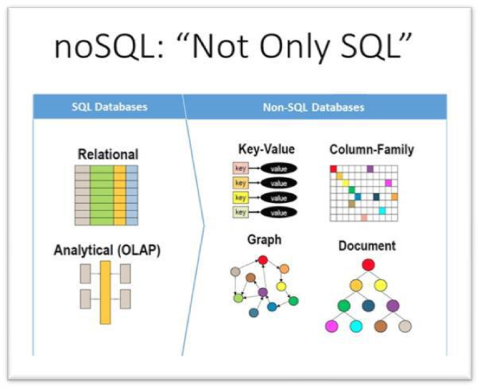

Not only relational data.

For online transactions the shift to relational DBMSs was made in the 90´s.

NoSQL databases are hot again because they offer features not available in a relational approach. A social network or a geographical path is not a good fit for a relational approach.
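A path question in a social network shows why. In the sketch below, the `friends` graph and the function `shortest_path` are hypothetical examples; the search follows links from person to person for an unknown number of steps, which is natural on a graph structure but awkward to express as joins over fixed tables.

```python
from collections import deque

friends = {  # hypothetical social network as an adjacency list
    "ann": ["bob", "cho"],
    "bob": ["ann", "dev"],
    "cho": ["ann", "dev"],
    "dev": ["bob", "cho", "eve"],
    "eve": ["dev"],
}


def shortest_path(graph, start, goal):
    """Breadth-first search: return a shortest chain of acquaintances."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        person = path[-1]
        if person == goal:
            return path
        for friend in graph.get(person, []):
            if friend not in seen:
                seen.add(friend)
                queue.append(path + [friend])
    return None  # no connection at all


print(shortest_path(friends, "ann", "eve"))  # ['ann', 'bob', 'dev', 'eve']
```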

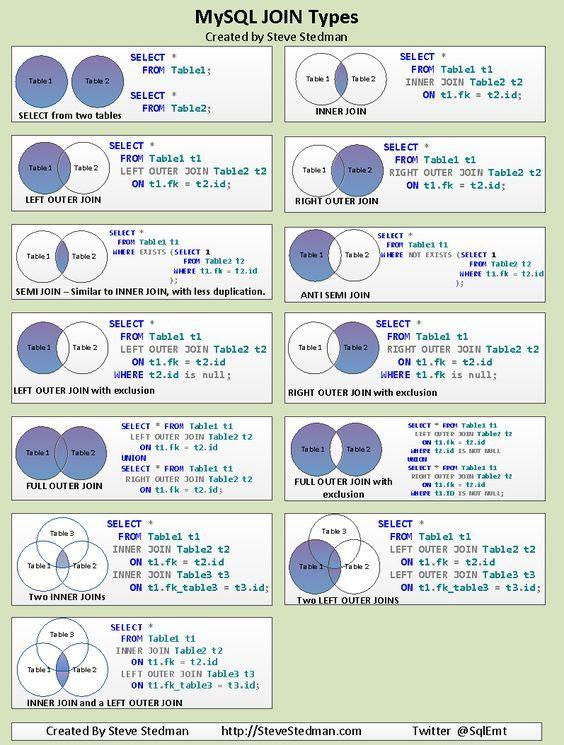

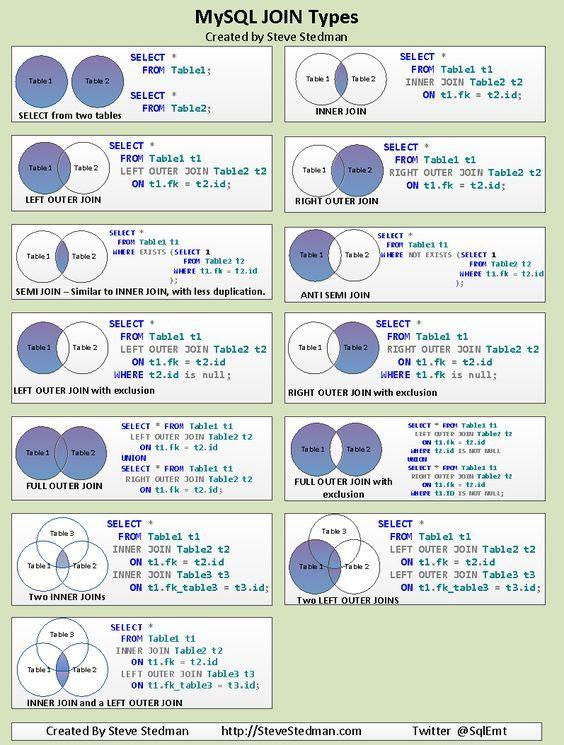

SQL manipulating sets.

The SQL usage should be easy.

In practice it is so difficult that you need a specialist to achieve acceptable performance.

Even simple queries need a template for how to solve a logical question. It is not as easy as it is claimed to be, quite apart from the many dialects and different implementations.
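Two such templates, sketched with Python's built-in `sqlite3` module against an in-memory database. The tables `customer` and `orders` and their rows are hypothetical sample data, and other SQL dialects may need small changes. The first query answers "which rows have no match" with an outer join, the second answers "a total per group" with an aggregate.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customer VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cho');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 3, 15.0);
""")

# Template 1: rows in one set without a match in the other (anti-join).
without_orders = con.execute("""
    SELECT c.name
    FROM customer AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    WHERE o.id IS NULL
""").fetchall()

# Template 2: one total per group.
totals = con.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customer AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

print(without_orders)  # [('Bob',)]
print(totals)          # [('Ann', 65.0), ('Cho', 15.0)]
```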

⚠ What is missing is a format structure for representing variables. This adds unnecessary complexity to DBMS systems.

🎯 simplified data models (lean).

Similar to programming and lean manufacturing, the basics are:

- Correctness. The data models should work without uncontrolled failures.

- Adequacy. The data-model should be a good fit for the intended job.

- Elegance. A simple understandable data-model, avoiding unnecessary complexity, for the fittest.

Risk evaluation.

The risks are usually classified as non-functionals, as if they were not important for the functional owner.

That is a conceptual mistake, because these are basic requirements that fall under the responsibility of the functional owner.

Risk guidelines.

Risk Assessment

The level of risk can be estimated by using statistical analysis and calculations combining impact and likelihood.

Any formulas and methods for combining them must be consistent with the criteria defined when establishing the Risk Management context.
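One common way of combining the two is a simple product on ordinal scales. The sketch below assumes that approach; the 1 to 5 scales, the thresholds and the function names `risk_score` and `risk_level` are hypothetical and would in practice come from the criteria set in the risk management context.

```python
def risk_score(impact, likelihood):
    """Combine impact and likelihood, each rated 1 (low) to 5 (high)."""
    for name, value in (("impact", impact), ("likelihood", likelihood)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    return impact * likelihood


def risk_level(score):
    # Hypothetical thresholds; real ones belong to the agreed criteria.
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


print(risk_level(risk_score(impact=4, likelihood=5)))  # high
print(risk_level(risk_score(impact=2, likelihood=3)))  # medium
```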

CIA temporal context with BIA.

CIA (Confidentiality, Integrity, Availability) is related to the BIA (Business Impact Analysis) for a known list of risks.

The standard assumption is that the risk information is stable over time and that, as a result, the mitigations and their implementations are stable over time as well.

With deadlines for delivering information to authorities, the risks vary over time.

When information is prepared for public release, there is an important difference in risk before and after publication.

Timelines and the differences in risk must be documented as well.
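A time-dependent rating can be written down very compactly. In this sketch the function `confidentiality_on`, the two rating labels and the publication date are hypothetical; it only shows that the rating is a function of the date, not a fixed property of the information.

```python
from datetime import date


def confidentiality_on(day, publication_day):
    """Return the confidentiality rating that applies on `day`."""
    return "confidential" if day < publication_day else "public"


publication = date(2024, 3, 1)  # hypothetical release date
timeline = [date(2024, 2, 28), date(2024, 3, 1), date(2024, 3, 2)]
ratings = [confidentiality_on(day, publication) for day in timeline]
print(ratings)  # ['confidential', 'public', 'public']
```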

CIA conversion into CIAV.

CIA: Confidentiality, Integrity, Availability, and Verifiable. Risk management is not an activity that is done once; it should be verified and evaluated regularly. The PDCA cycle is an advised approach.

That implies that what is done once should be verifiable, adding the letter V.

BIO (Dutch)

🎯 simplified risk models (lean).

Similar to programming and lean manufacturing, the basics are:

- Correctness. Have a list of all relevant risks and their mitigations.

- Adequacy. Mitigations should also state their temporal dependencies correctly.

- Elegance. Easily verifiable risk documents and implementation, avoiding unnecessary complexity, for the fittest.

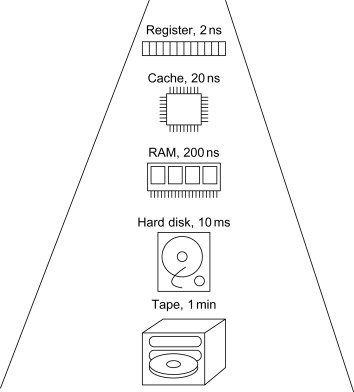

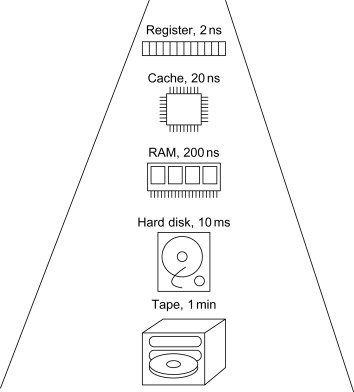

Hardware capacity.

Unlimited capacity and unlimited speed will never be reality.

Performance & Tuning.

Knowing how much time a request will cost, optimizing means making choices between:

- minimizing the use of the resources that take the longest time. ⌛

🎯 better algorithm

- trying to run processes in parallel instead of serially. ⏳

🎯 scheduling

- preventing overload 🚧 conditions in any resource used in the chain.

🎯 load balancing
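Two of these choices can be sketched with the standard library. The function `slow_lookup` is a hypothetical stand-in for a slow resource, and the worker count is an arbitrary assumption. Running the requests through a thread pool overlaps the waiting time, while the fixed pool size caps how many requests hit the resource at once, a crude form of overload prevention.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_lookup(key):
    time.sleep(0.05)  # stands in for waiting on disk or network
    return key * 2


def run_serial(keys):
    # One request after the other: total time is the sum of all waits.
    return [slow_lookup(key) for key in keys]


def run_parallel(keys, workers=4):
    # At most `workers` requests are in flight at the same time.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(slow_lookup, keys))  # keeps input order


keys = [1, 2, 3, 4]
print(run_serial(keys) == run_parallel(keys))  # True
```

Both versions return the same results; only the elapsed time differs, and by how much depends on the resource being waited on.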

Choices made P&T.

Many choices made in a generic design phase have an impact on P&T. The common idea, however, is that because the realisation is technical engineering, none of that is relevant.

That ignores technical limitations and technical possibilities in a way that would not be acceptable for physical structures.

In those physical environments there are continuous feedback loops between what is possible to realise and what can be asked for in a plan.

Summarizing the history of information technology.

There is a lot of history with very good foundations to reuse and to learn from.

⚠ Reusing knowledge derived from others sounds sensible and reasonable, but there are many pitfalls.

Using knowledge of the founders.

Dijkstra left many notes. He had a great distrust of whether things were being done correctly.

Edsger W. Dijkstra Archive (University of Texas)

Found some text, the essence:

In IT, as in no other sector, the adage holds:

"We don´t have time to do it right, so we will do it over later".

And so the laws of the economy have determined with unrelenting cruelty that until the day of his death Dijkstra had to stare at his own right in the form of a relentless stream of messages about faulty software and failed automation projects.
