Job Submit Tool, Test automation - JCL structuring

Making the work of ICT colleagues easier.

Work experiences, organised by a few controls. The SIAR cycle uses the corners 👓, with the topic cycle in the center.

SIAR:

S Situation

I Initiatives

A Actions

R Realisations

🔰 Lost here? Then go to:

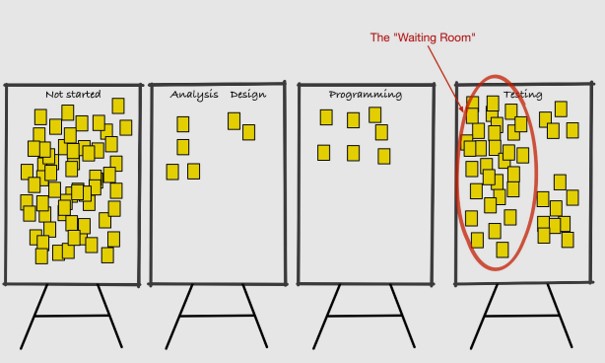

devops sdlc.

Progress

- 2020 week:11

- Split off the technical solution, which is still here.

More important are the analysis of the politics and the impact.

- 2019 week:12

- Started a conversion from the old site.

- 2019 week:16

- Adjusting to the finished frame.

Contents

For the processes, the limits of what is technically available at a given moment are important.

Although the technical situation does not matter for the concepts of optimising, it does define the options for realising them.

The mainframe era

It is still a nice example of analysing and solving an ICT problem in a software building process.

In this case: the way coding for testing was structured, and the organisational impact as it evolved.

It is not an isolated problem to solve; this rather simple question contains several: job submitting, the development life cycle and the availability of technical tools.

The IBM mainframe approach no longer leads ICT solution supply the way it did in the 1970s. Better, cheaper options have been introduced since those early years.

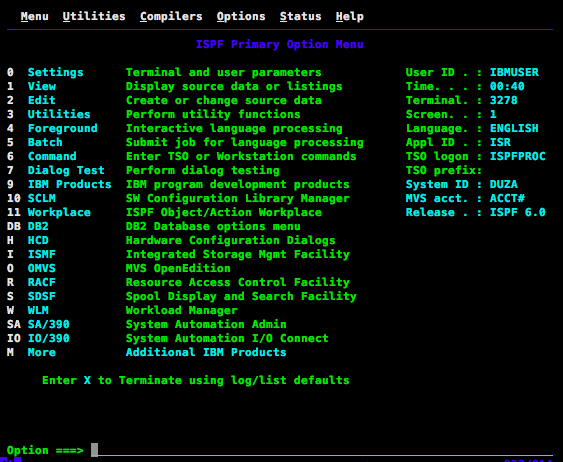

JCL (Job Control Language)

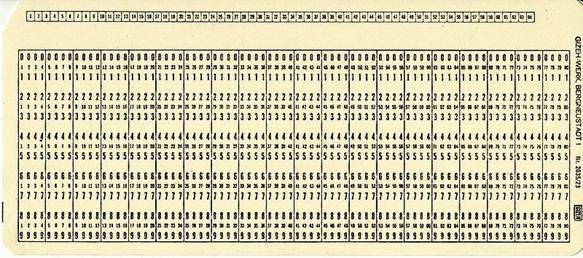

JCL is the scripting language on a classic IBM mainframe used to run processes for the business.

JCL can also be used to run many other kinds of logic; it is just a coding language.

Before you can do anything to optimise the JCL that runs business processes, you must structure the way JCL is coded.

Its huge advantage is that it moves the physical data references out of the program source code and into the JCL, which was originally stored on punch cards.

This means the data to be used is defined at the moment the job runs: JIT (Just In Time) definitions.

Any business software (a logical program) can therefore be reused across the DTAP (Development, Test, Acceptance, Production) setting without being modified.

Avoiding unnecessary modifications to business software (production, acceptance) is a quality and compliance requirement.
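To make the run-time binding concrete outside JCL, here is a minimal Python sketch; it is an illustration, not JCL. The program only knows logical DD names (INFILE, OUTFILE), and a per-environment table, playing the role of the DD statements, binds them to physical datasets when the job starts. AUTSO and PTG are the department qualifiers this document uses for D and T; the payroll program and the lower qualifiers are hypothetical.

```python
# Hypothetical DD-name bindings per DTAP environment. In a real job these
# would be DD statements in the JCL; here they are plain dictionaries.
BINDINGS = {
    "D": {"INFILE": "AUTSO.PAYROLL.INPUT", "OUTFILE": "AUTSO.PAYROLL.OUTPUT"},
    "T": {"INFILE": "PTG.PAYROLL.INPUT", "OUTFILE": "PTG.PAYROLL.OUTPUT"},
}


def payroll_program(dd):
    """Unchanged business logic: it only refers to logical DD names."""
    return f"read {dd['INFILE']}, write {dd['OUTFILE']}"


def run_job(program, environment):
    """Bind data 'just in time': the dataset names are chosen at job start."""
    return program(BINDINGS[environment])
```

The same `payroll_program` runs in D and T; only the binding table differs, which is the point of keeping data references out of the code.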

Situation found with JCL

In the old days everything was on Hollerith cards.

After those cards were migrated to DASD storage, eliminating the physical artifacts, nothing had really changed.

Only in some rare cases had a more modern approach been implemented.

Putting a deck of Hollerith cards into a mechanical reader to run something is hard manual work, and limited by the weight of the paper.

The next step was to leave the limitations of those old physical cards behind.

- Sequence numbering in columns 73-80 is no longer needed. The risk of physically dropping the deck is eliminated.

- There is no need to build a complete card deck, as JCL modules can easily be taken from multiple datasets.

Users of any kind, including ICT staff, were once not allowed to operate the machines. Operators had the exclusive right to do that.

This all changed when terminals became normal at the office.

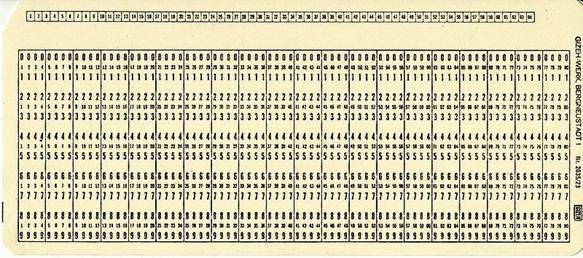

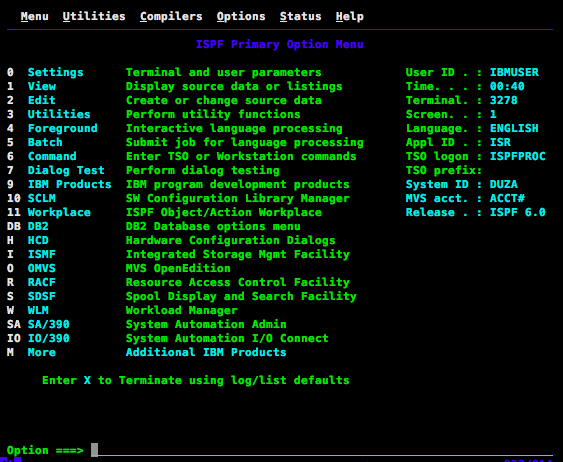

Terminal Interface, computer access

These terminals were coax-attached to a terminal control unit. They were big, clumsy and expensive, yet very much wanted.

Before this there was no way for users to access computer systems directly.

In those days, all involved departments and people gained access to the computer system this way.

The dark background has become fashionable again in recent years (2020).

The number of lines and columns was limited; commonly used sizes were 80x24 and 132x27 (columns x lines).

This screen is an example of what TSO looked like. The user interaction of every application had to follow the same approach, so that it felt intuitive to users.

Any modification to such a tailored menu had to be organised in the same way as installing an application.

Situation found: Roscoe, TSO, Rexx, JES2, IDMS

Roscoe was the multi-user, single-address-space product in place for coding and development, used by ICT staff including testing and operations support.

Its advantage was relatively limited system resource usage.

It had disadvantages:

- Security had to be implemented by analysing user commands.

Breaches in the interface (code injections) were continuously detected and needed continuous maintenance.

- It was an additional product alongside the standard TSO/ISPF available from IBM.

- Every required logical function needed additional (command) scripts to be built.

The decision was made to use TSO/ISPF (Time Sharing Option / Interactive System Productivity Facility) for all ICT staff while implementing central security using ACF2.

ISPF offers screen user interfaces, and REXX became available as a language to complete the options for user customisation.

One of the possible tasks in TSO is submitting JCL to background jobs.

In a well-tuned mainframe system, background jobs running in job classes and online systems started in job classes are no problem.

The workload manager is able to prioritise resource usage so that critical systems get the resources they need.

JES2 is the name of the subsystem that handles the job classes and the submitted JCL jobs.

The interactive business applications are built in IDMS, a network DBMS with an integrated data dictionary (IDD) and an application development system (ADS VVTS).

Testers reviewing these applications use terminals for that.

Checklist of tools required for automated job processing:

- ⚙ TSO/ISPF with REXX can submit jobs. A batch job can also start another batch job (with the same submit command, or by using the internal reader).

- 🎭 Output & log of jobs can be reviewed using TSO/ISPF with SDSF.

- 📚 Terminals accessing the computer system are present.

- ⚖ Authorisations for all needed actions can be organised.

All necessary functionality and user interfaces are present to optimise the daily floor work processes.

The only thing left is really doing it.

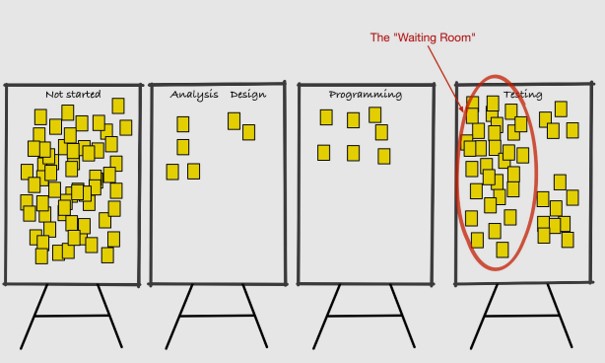

It all started with seeing all the manual work: the long waits until somebody completed their part of the work, and the same work being repeated over and over again.

That repetition had grown historically, because the work was done in several departments that did not think about cooperating.

JCL JOB guidance Form (JBF)

I found this form, used as guidance for workers, and it raised these questions:

- Why?

- Possible improvements?

- Who can help?

- What can I do?

- When to do it?

My own answers, to start the change by taking the initiative:

- It had grown historically.

- Remove the form by automating what is in it.

- Permission from managers and team leads in the involved departments, and cooperation with the workers in those departments for the changes.

- I could build a tool for submitting those jobs.

- Just start, and do what is acceptable to most of the people involved.

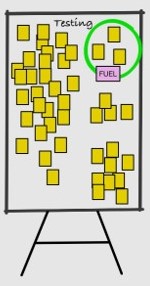

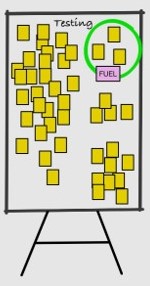

The often used jobs for testers (Pareto)

I received a limited list of frequently used test jobs.

✅ Built a front-end screen (ISPF panel) for each of them. PTG could enter the required data, with default settings to limit the amount of typing.

✅ The JCL for the jobs went into an ISPF skeleton dataset. IBM's ISPF documentation includes an example of generating JCL jobs this way.

---🤔--- Disadvantage: every job needed its own unique panel and skeleton.

🎯 The result was that PTG could do more than half of the jobs themselves, abandoning most of the hardcopy paperwork.
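Skeleton-based generation boils down to variable substitution. Below is a minimal Python sketch in the spirit of ISPF file tailoring, not a reimplementation of it: `&NAME` references are replaced by the values entered on the panel, blank fields fall back to defaults, and a period right after a name ends the reference. ISPF skeleton control statements such as )SEL are not modelled, and the variable names used here are hypothetical.

```python
import re

# &NAME with NAME 1-8 characters; an optional period right after the name
# ends the reference and is consumed ("&PREFIX..INPUT" -> "PTG.INPUT").
VARIABLE = re.compile(r"&([A-Z@#$][A-Z0-9@#$]{0,7})\.?")


def tailor(skeleton, entered, defaults):
    """Fill skeleton lines with panel input; blank fields use the defaults."""
    values = dict(defaults)
    values.update({name: value for name, value in entered.items() if value})

    def substitute(match):
        name = match.group(1)
        if name not in values:
            # Fail before submission rather than generate an incomplete job.
            raise KeyError(f"no value for &{name}")
        return values[name]

    return [VARIABLE.sub(substitute, line) for line in skeleton]
```

Raising an error for an unknown variable is a design choice of this sketch: a job with an empty dataset name is better caught before submission than after.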

Structuring JCL, a requirement along with operations (JCL2000)

We got time to convert all test jobs.

✅ Copied all existing jobs to a skeleton library, stripped the header (job name, program library search) and changed fixed dataset names into variable names.

✅ Moved IO (input/output definitions) into an "include lib".

✅ Made a new front end with panels (screens) allowing for the more generic approach, saving the input from PTG in an ISPF table.

✅ Added a trailer step that starts the next job when everything went right (cc=0).

🎯 The result was that PTG could do almost all of the jobs themselves.

Reruns became very easy, and the hardcopy paperwork was abandoned.
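The first conversion step (strip the header, turn a fixed dataset prefix into a variable) lends itself to a small script. A minimal Python sketch, assuming the old jobs use one fixed first-level qualifier and that the JOB statement fits on one line; continuation lines, moving IO into the include library and the trailer step are not handled. The sample job and dataset names are hypothetical.

```python
import re


def convert_job(jcl_lines, fixed_flq):
    """Strip the header and make the first-level qualifier a variable."""
    fixed_dsn = re.compile(rf"DSN={re.escape(fixed_flq)}\.")
    converted = []
    for line in jcl_lines:
        fields = line[2:].split()
        # Header lines: the JOB statement and the JOBLIB program search.
        # (Continuation lines of a JOB statement are not handled here.)
        if line.startswith("//") and len(fields) >= 2 and fields[1] == "JOB":
            continue
        if line.startswith("//JOBLIB "):
            continue
        # DSN=PTG.PAY.INPUT -> DSN=&PREFIX..PAY.INPUT (the first period ends
        # the variable name, the second one stays in the dataset name).
        converted.append(fixed_dsn.sub("DSN=&PREFIX..", line))
    return converted
```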

All kinds of batch jobs for testers, additional tools (compare)

The requests could all be solved with available tools and software:

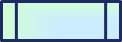

✅ Built the backup/restore program in at several points, and as a dedicated tool.

✅ Made it possible to store the results of several jobs in datasets.

✅ Built in compare options as a dedicated tool.

🎯 As a result, more test jobs could run without additional human constraints.

🎯 PB (OPS) could copy what had been done in test. The JBF paper form was abandoned.

The tools were described separately.

The TSO compare tool was advanced, with many options for what to include and what not.
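A compare that ignores volatile fields can be sketched in a few lines. This Python sketch, assuming fixed-column records, blanks out chosen column ranges (for example a run date) on both sides before comparing line by line; the record layout is invented for the example and says nothing about how the TSO compare tool works internally.

```python
from itertools import zip_longest


def masked(line, ignore_cols):
    """Blank out 1-based, inclusive column ranges (e.g. run dates)."""
    chars = list(line)
    for start, end in ignore_cols:
        for i in range(start - 1, min(end, len(chars))):
            chars[i] = " "
    return "".join(chars)


def compare_results(old, new, ignore_cols=()):
    """Return 1-based numbers of lines that still differ after masking."""
    return [
        number
        for number, (a, b) in enumerate(zip_longest(old, new, fillvalue=""), start=1)
        if masked(a, ignore_cols) != masked(b, ignore_cols)
    ]
```

With columns 8-17 ignored, a rerun on another date only reports lines whose amounts changed.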

Backup and restore needed to be aligned with the dataset naming conventions.

The DTAP structure was not that evident from the naming conventions: the FLQ (first-level qualifier) was based on department names.

- D AUTSO.- Development team

- T PTG.- Permanent Test group

- P PRDPB.- Operations support

- P SYSnm.- Company name
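A small helper can keep generated dataset names inside these conventions. A Python sketch, assuming three of the department prefixes listed above and hypothetical lower qualifiers; it checks the general z/OS rules (qualifiers of 1 to 8 characters starting with a letter or national character, at most 44 characters in total) but not any site-specific standard.

```python
import re

# Department-based first-level qualifiers (three of those listed above).
FLQ = {"development": "AUTSO", "test": "PTG", "operations_support": "PRDPB"}

# One qualifier: 1-8 characters, starting with a letter or national character.
QUALIFIER = re.compile(r"[A-Z@#$][A-Z0-9@#$-]{0,7}")


def dataset_name(department, *qualifiers):
    """Build FLQ.SLQ... and reject names that break the basic z/OS rules."""
    parts = [FLQ[department], *qualifiers]
    for part in parts:
        if not QUALIFIER.fullmatch(part):
            raise ValueError(f"invalid qualifier {part!r}")
    name = ".".join(parts)
    if len(name) > 44:
        raise ValueError(f"{name} is longer than 44 characters")
    return name
```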

The second-level qualifier distinguished business logic, data, input logic, etc.

The more was automated in test, operations and development, the more functionality was built in.

Educating people became an option within user acceptance testing, using the operational code with selected test data.

Technical software changes work in a similar way, with several versions of the tools and middleware on the same machine.

Online Transaction Processing (IDMS)

The request for OLTP support triggered another way of using the DBMS, including its front end (OLTP).

The education center also used those OLTP systems, with the difference that they ran production code.

Two series for starting OLTP systems were defined:

✅ Defined the database to be used and the OLTP system number, for parallel use of an OLTP interface.

✅ Had the OLTP system wait for database availability before continuing.

✅ Had the simulated terminal input for the OLTP system settings wait until the OLTP system was responsive.

✅ Had the simulated terminal input with business logic wait until the OLTP system settings were finished, then continue with saved user sessions and user interactions.

🎯 The result: self-service OLTP use for test and education.
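The start-up series above is essentially "wait until ready, then act", stage by stage. A minimal Python sketch, assuming the readiness checks and actions are supplied as functions; how a real job tested database availability or OLTP responsiveness is not shown, and the stage names are hypothetical. The clock and sleep functions are parameters so the waiting can be tested without real delays.

```python
import time


def wait_until(check, timeout_s, interval_s=5.0, clock=time.monotonic, sleep=time.sleep):
    """Poll check() until it returns True or the timeout expires."""
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


def start_in_order(stages, timeout_s=600.0, **poll_options):
    """Run (name, ready_check, action) stages strictly one after another.

    Each action starts only after its readiness check succeeds, e.g.
    database available -> OLTP responsive -> settings input -> business input.
    """
    started = []
    for name, ready, action in stages:
        if not wait_until(ready, timeout_s, **poll_options):
            raise TimeoutError(f"{name}: not ready within {timeout_s} seconds")
        action()
        started.append(name)
    return started
```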

Release management, parallel testing and development (Endevor)

The first versions of JST were aligned to the DBA (OLTP versions, IDD) and to the home-grown PB tools.

The IDMS IDD needed an additional plug-in that was not in common use.

All OLTP programs had to move from internal IDMS (DBMS) storage to external system storage.

The naming conventions of the datasets holding programs needed to change.

Many user modifications, named Endevor processes, had to be built.


✅ All names of program libraries changed.

✅ For parallel development, additional names, storage and IDDs were defined.

🎯 The mismatch in expectations led DEV to adopt the JST approach as well.

The full DTAP line was under the control of release management and able to run tests.

🎯 PB also started using it more for preproduction and acceptance testing.

Time travelling, unit conversions (Milo Euro)

An additional tool was added for easier time travelling:

✅ Added options for shifting the time interval.

🎯 Tests for milo and euro were done very extensively; there were no issues at those change moments.

Time travelling with data was a lot more complex than expected. After a shift, the day-of-week and holiday indications never line up.

Worse, some date fields held an invalid date on purpose, because functional management knew no better way to indicate a system change.
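The weekday problem is easy to reproduce. A minimal Python sketch, assuming a shift expressed in days: a plain shift keeps the distance in days but not the day of the week, while rounding to whole weeks keeps the weekday at the cost of a slightly different date. Neither variant keeps holiday indications aligned, which matches the experience described above.

```python
from datetime import date, timedelta


def shift_days(day, days):
    """Plain shift: keeps the distance in days, not the day of the week."""
    return day + timedelta(days=days)


def shift_whole_weeks(day, days):
    """Round the shift to whole weeks so the day of the week is preserved."""
    return day + timedelta(weeks=round(days / 7))
```

Shifting Tuesday 31 December 2019 by 366 days gives Thursday 31 December 2020; the whole-week variant gives Tuesday 29 December 2020 instead.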

Printing Services, IO guidelines

For printing using form layouts and signatures:

✅ Defined and maintained additional statements in the JCL header for the print technology in use.

🎯 The best option I could think of to isolate this special technology.

I would have wished for better security and more appropriate test data.

The main technical issue was structuring JCL so it could be automated and reused.

The tool itself was built with TSO/ISPF and REXX, at no additional cost, using those commonly available tools.

Naming conventions and a standard way of working are the key issue. They are not easily copied into another organisation.

Used concepts & practical knowledge

Looking back, this was a nice project because it has so many connections to a diverse set of activities. It serves to connect all those topics.

Details to be found at:

👓 Software Life Cycle (business)..

👓 Security ..

👓 Data modelling ..

👓 EIS Business Intelligence & Analytics ..

👓 Tools Life Cycle (infrastructure)..

👓 Low code data driven work as design..

The security connection may not be that obvious.

Simulating production with test accounts that behave like operational production usage is good practice.

Setting up the overall security and configuration so that a complete DTAP can run on a single box (iron), with everything containerised, is good practice.

Words of thanks to my former colleagues

We had a lot of fun in stressful times working this out on the mainframe in the nineties, while realising how unique it was and how difficult it would be to port it elsewhere.

A few names:

- 🙏 J.Pak co-designer of the common job approach.

- 🙏 D.Roekel inventing more proc-bodies faster than me.

- 🙏 J.Hofman, P.Grobbee rolling it out smoothly to testers

There are many more people to mention; sorry if I didn't. (notes: 2008)

Not always unhappy with what is going on

This topic ends here. Back to devops sdlc.

👓 🔰

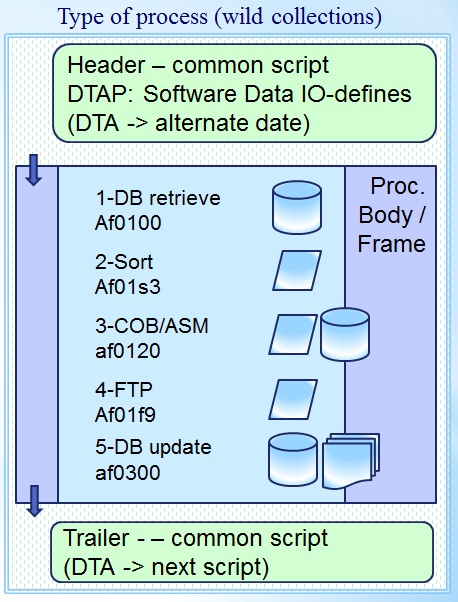

Implementing frames.

Standard JCL JOB procedures.

Goals of automated job processing:

- Automated testing: regression, new logic, and fixes for found issues

- preparing the data needed for tests

- preparing jobs / scripts in a low code approach

- Automated running: all the jobs / scripts

- Saving & archiving & comparing the results.

Not everything was done at the same moment; changes and functionality grew incrementally.

First basic conversion to automation

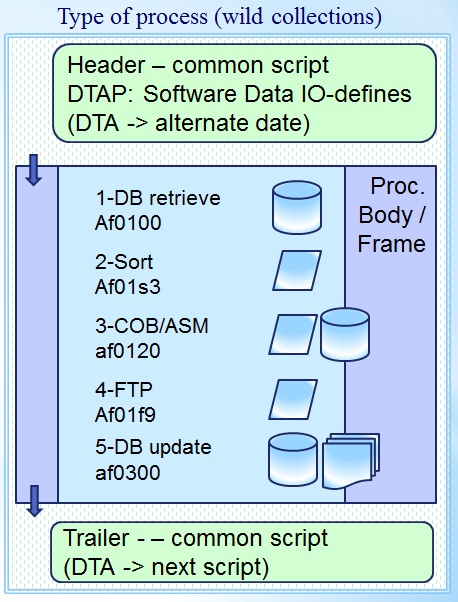

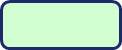

- Header: defining all globals and job environment settings.

- Bodies / frames: business logic software application programs.

- Data definitions: input, output, report and log for every step.

- Tail: defining actions and jobs after this one.

Head:

- JOBLIB statement: where to look for the business software logic.

- JCLLIB statement: where to look for data definitions and procedure bodies.

- Prefix setting: defines the first-level qualifiers of the data definitions. DTAP dependency.

- Output destinations (external): fail-safe alongside security. DTAP dependency.

- A dedicated alternate date for the business software logic.

IO

You need some way to define the datasets, output, print, mail, databases and transfers to be used.

These definitions must not be hard-coded in the business logic code. Why not?

- Managing them in a release process would become impossible.

- Development and acceptance testing would not be possible using the same artifacts.

Tail:

- For non-production (business data) use: triggers the next process and next actions.
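Putting head, bodies and tail together can be sketched as text generation. The Python below is illustrative only, assuming hypothetical library names (PROCLIB, LOADLIB, JST.SUBMIT), procedure names and job card operands; the original tool did this with ISPF skeletons and REXX. The tail uses a common technique, copying the next job with IEBGENER to the internal reader, guarded by COND=(0,NE) so it is bypassed when an earlier step ended with a non-zero return code. Check statement order and operands against the JCL reference for your system before relying on them.

```python
def build_job(jobname, prefix, procs, next_job=None):
    """Assemble JCL text from head, bodies and tail (illustrative only)."""
    # Head: job card, procedure search order, program search, DTAP prefix.
    lines = [
        f"//{jobname:<8} JOB (ACCT),'JST',CLASS=A,MSGCLASS=X",
        f"//         JCLLIB ORDER=({prefix}.PROCLIB)",
        f"//JOBLIB   DD DSN={prefix}.LOADLIB,DISP=SHR",
        f"//         SET PREFIX={prefix}",
    ]
    # Bodies: one EXEC per reusable procedure; the procedures use &PREFIX.
    for number, proc in enumerate(procs, start=1):
        stepname = f"STEP{number * 10:03d}"
        lines.append(f"//{stepname:<8} EXEC {proc}")
    # Tail: only if all earlier steps ended with return code 0, copy the
    # next job from the (hypothetical) submit library to the internal reader.
    if next_job:
        lines += [
            "//NEXTJOB  EXEC PGM=IEBGENER,COND=(0,NE)",
            "//SYSPRINT DD SYSOUT=*",
            "//SYSIN    DD DUMMY",
            f"//SYSUT1   DD DSN={prefix}.JST.SUBMIT({next_job}),DISP=SHR",
            "//SYSUT2   DD SYSOUT=(A,INTRDR)",
        ]
    return "\n".join(lines)
```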

Standard JCL JOB procedures (continued).

Bodies - Frames (Unstructured):

- The number of steps can vary between one (1) and many (*).

- Every frame can be tailor-made to fulfil a dedicated function.

This works and stays manageable as long as there are not that many jobs to run.

The next improvement is to structure this: define steps for the business logic using standard building patterns.

Support from the functional and technical owners of the infrastructure middleware components is needed.

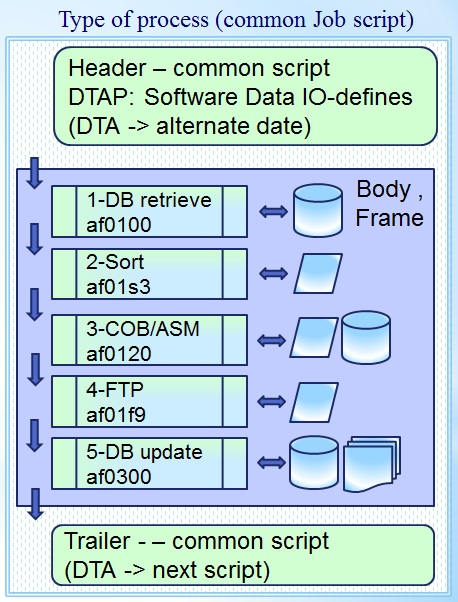

Normalised basic conversion to automation

- Common body / frame: used by the business functional owners.

- Common proc bodies: technical steps you can reuse very often.

These technical steps are a limited set of JCL proc steps, such as:

- run: database retrieve program

- run: program name .📚. using IO definitions: .📚.

- sort: using code: .📚. using IO definitions: .📚.

- sas: using code: .📚. using IO definitions: .📚.

- file transfer: ftp-name: .📚. using IO definitions: .📚.

- run: database update program

A modular proc step built this way offers API (program interface) options.
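The "API" of such a proc step is its set of symbolic parameters. A Python sketch, assuming a hypothetical catalogue of procedures (JSTRUN, JSTSORT, JSTSAS, JSTFTP) and parameter names: each step kind declares what it needs, and generation refuses a step with missing or unknown parameters. The program parameter is called PROG rather than PGM because EXEC statement keywords should not be used as symbolic parameter names.

```python
# Hypothetical catalogue: step kind -> (procedure name, required parameters).
COMMON_PROCS = {
    "run": ("JSTRUN", ("PROG", "IO")),
    "sort": ("JSTSORT", ("CODE", "IO")),
    "sas": ("JSTSAS", ("CODE", "IO")),
    "ftp": ("JSTFTP", ("FTPNAME", "IO")),
}


def step(stepname, kind, **params):
    """Render one EXEC statement for a common proc, checking its interface."""
    proc, required = COMMON_PROCS[kind]
    missing = [p for p in required if p not in params]
    unknown = [p for p in params if p not in required]
    if missing or unknown:
        raise ValueError(f"{kind}: missing {missing}, unknown {unknown}")
    overrides = ",".join(f"{name}={params[name]}" for name in required)
    return f"//{stepname:<8} EXEC {proc},{overrides}"
```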

Normalising the data into segregated components creates new artefacts.

New artefacts, object naming conventions:

- related DBMS data

- related local datasets

- related local outputs

Here "local" refers to the machine where the process executes, not necessarily the location of the human worker.

Required standard user-defined values:

- Unique data identification (database, datasets, jobs).

Reason: allows tests to run in parallel.

- Data environment indicator.

One of the well-known DTAP stages (Development, Test, Acceptance, Production).

- Application code environment indicator.

One of the well-known DTAP stages (Development, Test, Acceptance, Production).

- Application code parallel development, when applicable.

Reason: allows development in parallel.

- Date indicator the application should use instead of the system date.

Rationale: although a process is designed around the current date, it should be able to run on another date.
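These values can be kept together as one record per run. A Python sketch with hypothetical field names: the environment indicators are validated against the DTAP letters, and the programs ask the record for the business date instead of reading the system date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

DTAP = ("D", "T", "A", "P")


@dataclass(frozen=True)
class RunSettings:
    run_id: str                           # unique id: lets tests run in parallel
    data_env: str                         # DTAP letter for the data
    code_env: str                         # DTAP letter for the application code
    parallel_dev: Optional[str] = None    # parallel development stream, if any
    business_date: Optional[date] = None  # used instead of the system date

    def __post_init__(self):
        for label, value in (("data_env", self.data_env), ("code_env", self.code_env)):
            if value not in DTAP:
                raise ValueError(f"{label} must be one of {DTAP}, got {value!r}")

    def effective_date(self, today=None):
        """Business date the programs should use for this run."""
        if self.business_date is not None:
            return self.business_date
        return today if today is not None else date.today()
```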

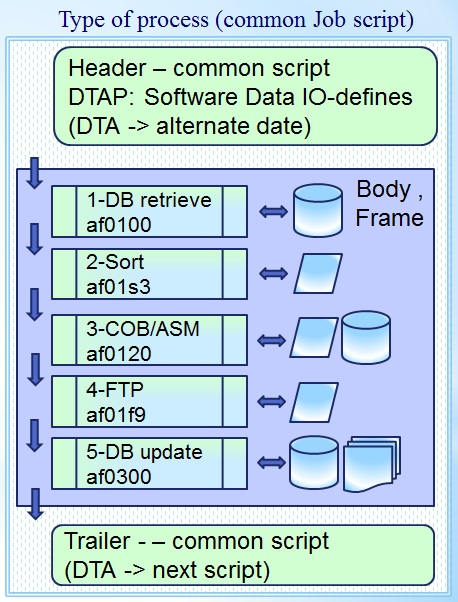

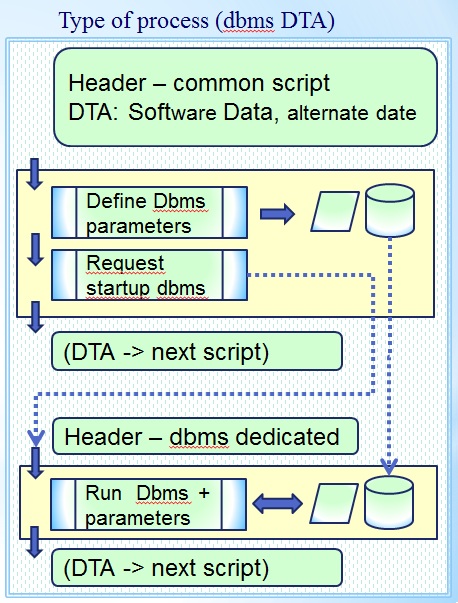

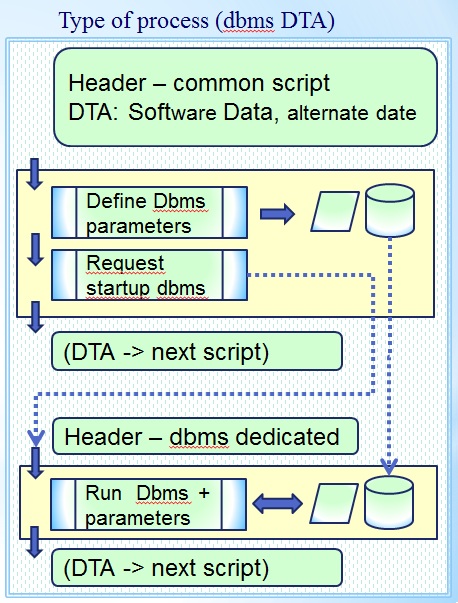

DTA support procedures.

Other procedures needed are:

- backup/restore of the test environment

- dedicated versions of the DBMS

- saving the results of processing

- compare & analyse results

- generate: define the structured steps

- etc.

Procedures as additional needs

Backup/restore script (D, T, A)

Backup & restore is essential to the D, T, A (Development, Test, Acceptance) environments. The test environment results are important business assets.

Reason:

Evidence of quality (auditable).

Infra-Process

Used by Testers.

To allow many testers to work in parallel, naming and environments must be segregated. It must be possible to verify the content of backups.

Support from the functional and technical owners of the infrastructure components.
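Segregation by name and verification of backups can be sketched together. A Python sketch, assuming datasets are represented in memory as name-to-bytes pairs and that one tester's datasets share a name prefix (the names are hypothetical); in practice the standard backup utilities did the copying, and the digest check here only illustrates the idea of verifying content.

```python
import hashlib


def select_for_backup(catalog, prefix):
    """Pick the datasets that belong to one tester's segregated name space."""
    return sorted(name for name in catalog if name.startswith(prefix + "."))


def make_manifest(catalog, names):
    """Record a SHA-256 digest per dataset at backup time."""
    return {name: hashlib.sha256(catalog[name]).hexdigest() for name in names}


def verify_restore(manifest, restored):
    """Return dataset names that are missing or differ after a restore."""
    return sorted(
        name
        for name, digest in manifest.items()
        if name not in restored
        or hashlib.sha256(restored[name]).hexdigest() != digest
    )
```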

A: Acceptance test differentiations

DTA (Development, Test, Acceptance) testing covers the phases before software goes into operations (Production).

Acceptance testing has more variations, as visible in the SDLC reference.

Activities can originate in project planning, for example the education of people.

Within operations, business continuity requirements can also find a solution here.

Within the A (acceptance) stage the business data can differ depending on its purpose:

- PSP Acceptance: of the Business Software

- PSL Education: acceptance test of people learning software

- PSR Pre production run: limited run: ... using data definitions: ...

- PST Technical Test: new infrastructure components

- PSQ Acceptance of data quality (not of the logic that created the data)

Education is easier using a selected (small) data set, as in Test (PTG).

Backup & Restore, Performance experiences

The backup and restore of a complete test plan used standard backup & restore utilities.

These are optimised for that work, collecting and compressing everything as a first stage.

The next stage writes the result, optimised for a tape device doing sequential IO.

💣 When we were forced not to use those tools, we found a big performance penalty, because lock queuing on shared DASD is much slower.

That is not what you are told, as DASD is promoted as being faster.

DTA support procedures advanced

Interactive Transactional Systems

Besides batch processing, interactive (transactional) systems are also part of the business information system.

Mainframe workloads: Batch and online transaction processing

Most workloads fall into one of two categories: Batch processing or online transaction processing, which includes Web-based applications.

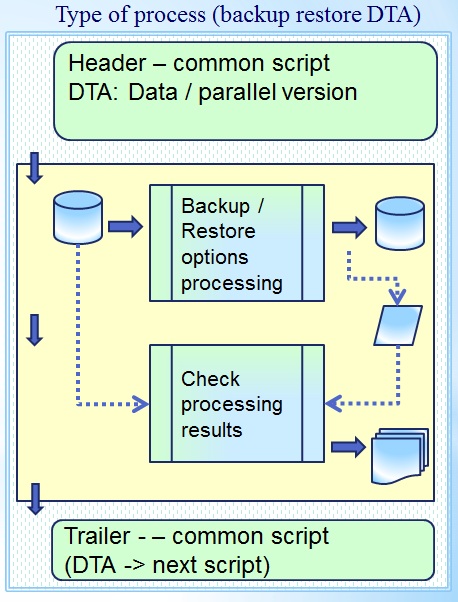

Integrated Data Dictionary (IDD IDMS Cullinet)

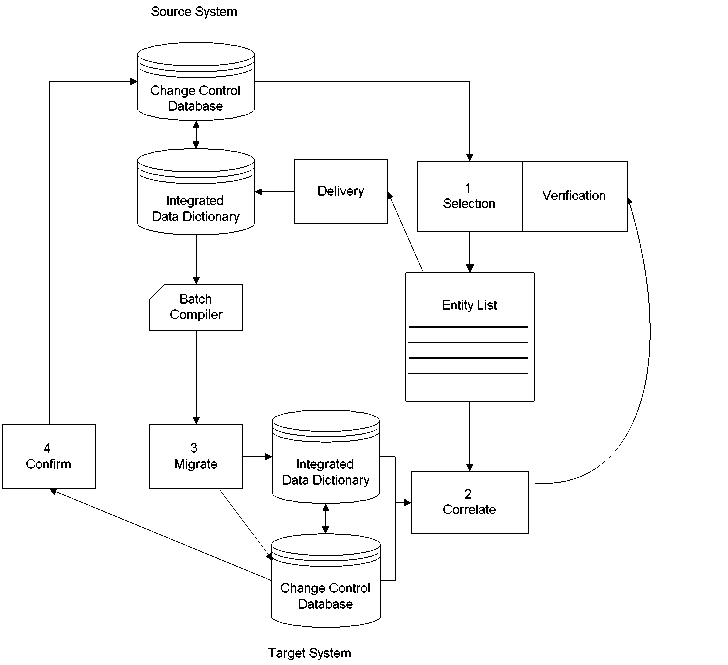

CA IDMS Reference - Software Management

Promotion Management is the process wherein entities are moved from environment to environment within the software development life cycle.

When these environments consist of multiple dictionaries, application development typically involves staged promotions of entities from one dictionary to another, such as Test to Quality Assurance to Production.

This movement can be in any direction, from a variety of sources.


Dedicated DBMS script (D, T, A)

This feature is essential to the D, T, A test environments when transactional systems are involved.

The difference from backup/restore support: this implementation can be much more challenging, as the complexity of starting up the DBMS demands.

Infra-Process transactional.

Used by Testers.

Support from the functional and technical owners of the infrastructure components.

Interactive Transactional Systems - Integrated Data Dictionary

These experiences were essential in shaping my thinking. The IDD concept is hot in data governance; the new word is "metadata".

The same concepts and approaches can be used with all those new tools.

Multiple IDDs were needed for the required DTAP segregation; we used:

- master system no 11: used to define the other systems.

- D system no 66: Develop & module tests.

- T system no 88: integration Testing, education.

- A system no 77: Acceptance testing.

- P system no 01: real Production usage.
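The system numbers and the usual forward promotion can be captured in a small table. A Python sketch, assuming the staged path D, T, A, P; as the CA text notes, real promotions can move in any direction, which this sketch does not model.

```python
# IDD system numbers per environment, as listed above (11 is the master).
IDD_SYSTEM = {"master": "11", "D": "66", "T": "88", "A": "77", "P": "01"}

# The usual staged promotion; real promotions may also go other ways.
PROMOTION_PATH = ["D", "T", "A", "P"]


def next_stage(environment):
    """Return (environment, IDD system number) of the next stage, or None."""
    position = PROMOTION_PATH.index(environment)
    if position == len(PROMOTION_PATH) - 1:
        return None  # already in production
    target = PROMOTION_PATH[position + 1]
    return target, IDD_SYSTEM[target]
```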

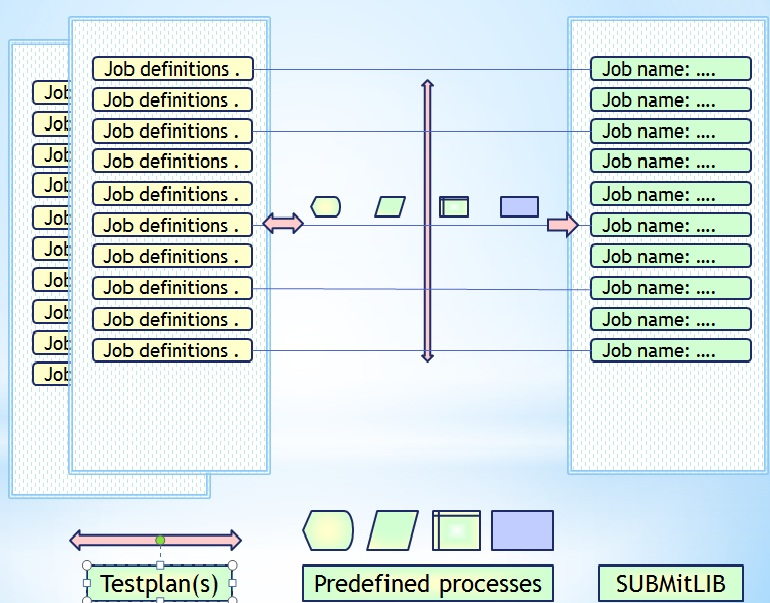

Running automated - hands free

Basic design

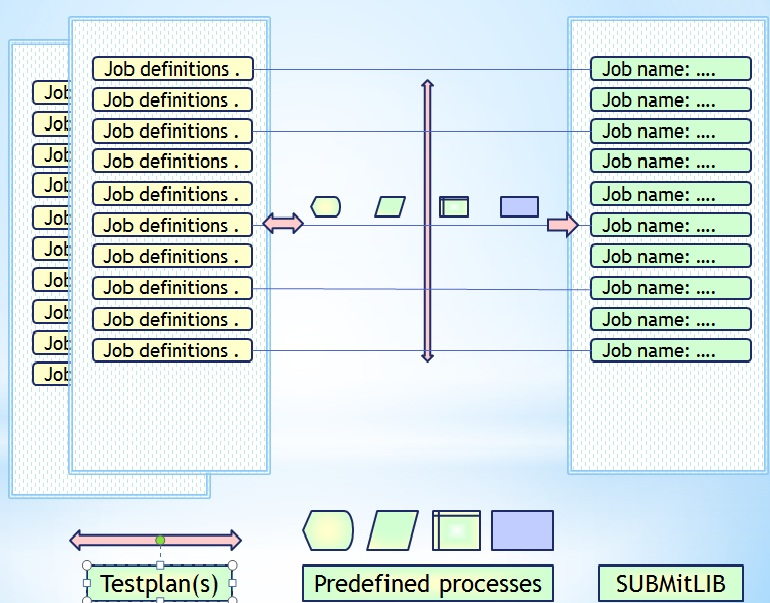

This looks a little complicated; the basics are:

- When all job scripts are generated, trigger the entire flow.

- Build jobs into <SUBMITLIB> out of <predefined processes>, selected by choices.

- Archive and restore all choices made, with the ability to rebuild completely.

There is an option to define a flow of processes to run.

Modelling the JST process

The process model has several dimensions:

- job names

- job bodies, step procedure definitions, dataset io definitions

- environments: code, data, IO definitions

- simulated run date

The red arrows in the figure are indicating the optional selections for:

- jobs: all the definitions to run them with predefined settings and values.

- testplans: the collection of jobs that make up a test run as a unit.

- In star-schema terms (ER relationships), the fact is the "job".
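The model can be written down as a few record types. A Python sketch with hypothetical names: a job carries its dimension values (body, environments, simulated date), a plan is an ordered list of jobs, and running the plan stops at the first job with a non-zero return code, like the trailer step that only starts the next job at cc=0. The submit function is a stand-in for real job submission.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass(frozen=True)
class Job:
    """The fact of the model: one job plus its dimension values."""
    name: str
    body: str        # predefined process / procedure body
    code_env: str    # D, T, A or P for the application code
    data_env: str    # D, T, A or P for the data
    run_date: date   # simulated business date


@dataclass
class Plan:
    """A test plan: the jobs that make up one test run, in order."""
    name: str
    jobs: List[Job] = field(default_factory=list)


def run_plan(plan, submit):
    """Submit jobs in order; stop after the first non-zero return code."""
    results = []
    for job in plan.jobs:
        rc = submit(job)
        results.append((job.name, rc))
        if rc != 0:
            break
    return results
```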

Running local batch, central version transactional, experiences

Running with a local version of the DBMS locks whole areas instead of every record (as OLTP does).

Locking every record, updating information transactionally, has these advantages and drawbacks:

- 💡 Multiple jobs could run in parallel on the same area

- 🤔 The same logic could be used in online, message-driven and batch processes.


- ⚠ The logic for what finished correctly and what did not is more complicated.

- 💣 A huge increase in resource usage (CPU, IO, memory) and elapsed time.

Evaluating realisations.

Life cycle JCL transition

The change is a structured approach to generating and using JCL.

I designed that in coöperation with others.

Split the old jobs into basic building blocks and reuse them in every job for every type of use.

⚙ When you can generate JCL, it is also possible to generate many different variants for segregated parallel testing.

Starting the next job when one is finished is as easy as a manual start.

💰 The cost saving in time is obvious: doing something once instead of the same thing over and over again by different people.

💣 It was difficult to get it accepted by ICT staff users. Remember: you are (I am) automating the daily work of one or more people (ICT staff).

What is missing for deploying JST as a service (JST-AAS)

⚠ Naming Conventions

- A well-defined set of names, associated with their types and meaning (metadata), makes a technical realisation easy.

- As those naming conventions aren't generic, porting the JST solution to another environment is almost impossible.

⚠ Life Cycle Management

- Every environment has its own interpretation of life cycle management for infrastructure, tools and business applications.

- Any testing tool must accommodate all those differences across Develop, Test, Accept and Production. Porting any solution is almost mission impossible.

⚠ Security (business: data / code)

- As business data and business logic are the real goal of all this, a lot of security-related issues come in.

Some of those questions relate to the data: pseudonymised, anonymised, minimised, but still representative of the production environment.

- Other questions are business-related: whether the logic itself is so sensitive that access to it must be constrained.

Because of these non-standard security approaches, porting a solution to another environment is almost impossible.
