Design Data - BI, Analytics

Information, data: improving organisations' BI and Analytics.

BI, Analytics reporting.

The data explosion. What has changed is the amount of data we collect by measuring processes, giving new information (edge).

📚 Information questions.

⚙ Measurements, data, figures.

🎭 What to do with new data?

⚖ Legally and ethically acceptable?

🔰 Most logical back reference.

Contents

| Reference | Topic | Squad |
|---|---|---|
| Intro | BI, Analytics reporting. | 01.01 |
| Goal BI | The goal of BI Analytics | 02.01 |
| dataprep | Preparing data for BI Analytics. | 03.01 |
| tech perform | Technology push focus BI tools. | 04.01 |
| BI omissions | Omissions in BI, Analytics reporting. | 05.01 |
| What next | Changing the way of informing. | 06.00 |
| | Combined pages as single topic. | 06.02 |

Combined pages as single topic.

👓 info types different types of data

👓 Value Stream of the data as product

👓 transform information data inventory

🚧 data silo - BI analytics, reporting

🕶 bi tech Business Intelligence, Analytics

Progress

- 2020 week:44

- Reordererd content with the other related data pages.

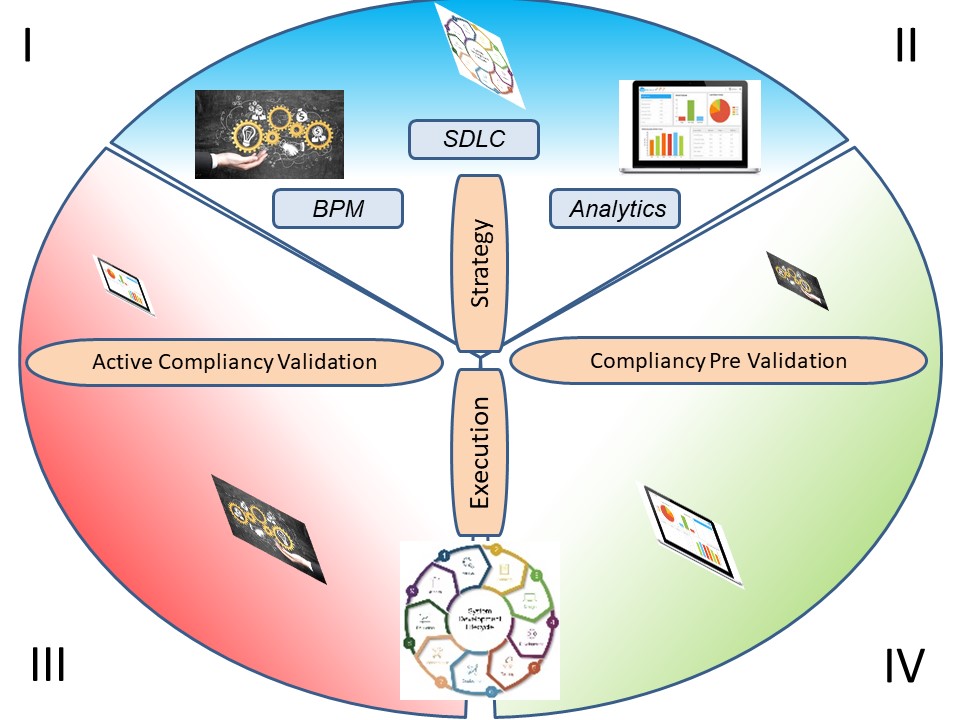

- Added Demo idea in four quadrants, getting many edges to fill for BI.

- 2019 week 45

- Changed by splitting up the location of dwh BI.

- Instead of being a main goal to achieve as BI, it is now a silo-ed approach blocking IT improvemetns as having reserved the warehouse for his own purpose.

The goal of BI Analytics.

The commonly understood goal of BI reporting and analytics reporting is rather limited, namely:

📚 Informing management with figures,

🤔 so they can make up their minds about actions and decisions.

Reporting Controls (BI).

When controlling something it is necessary to:

👓 Know where it is heading.

⚙ Be able to adjust speed and direction.

✅ Verify that everything is working correctly.

🎭 Discuss destinations, goals.

🎯 Verify achieved destinations, goals.

It is basically like driving a car.

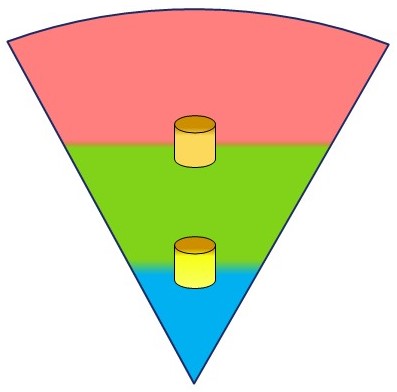

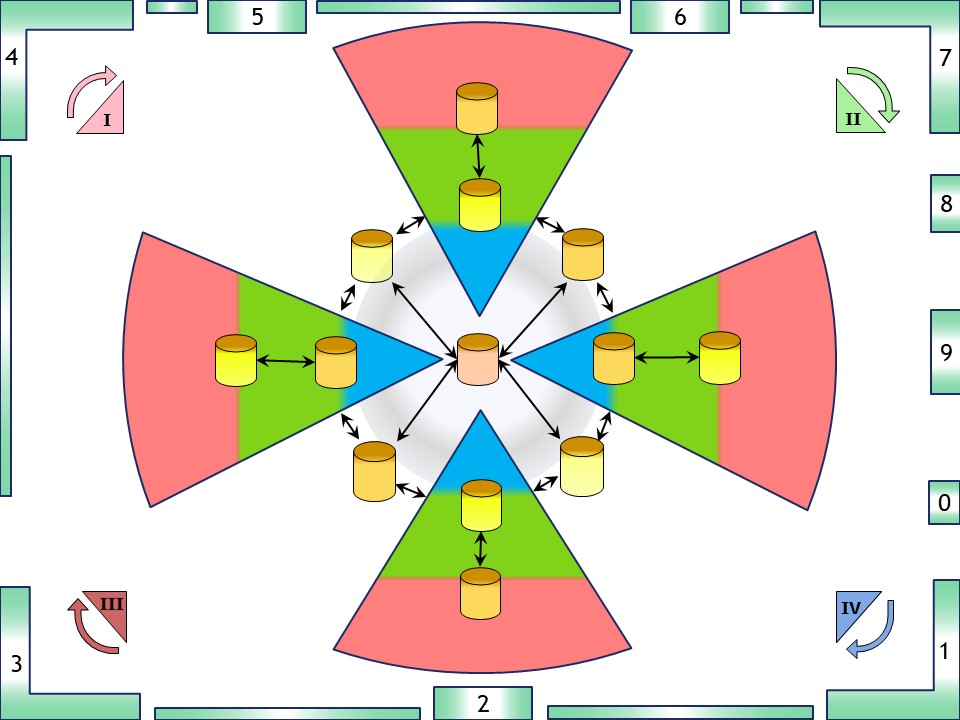

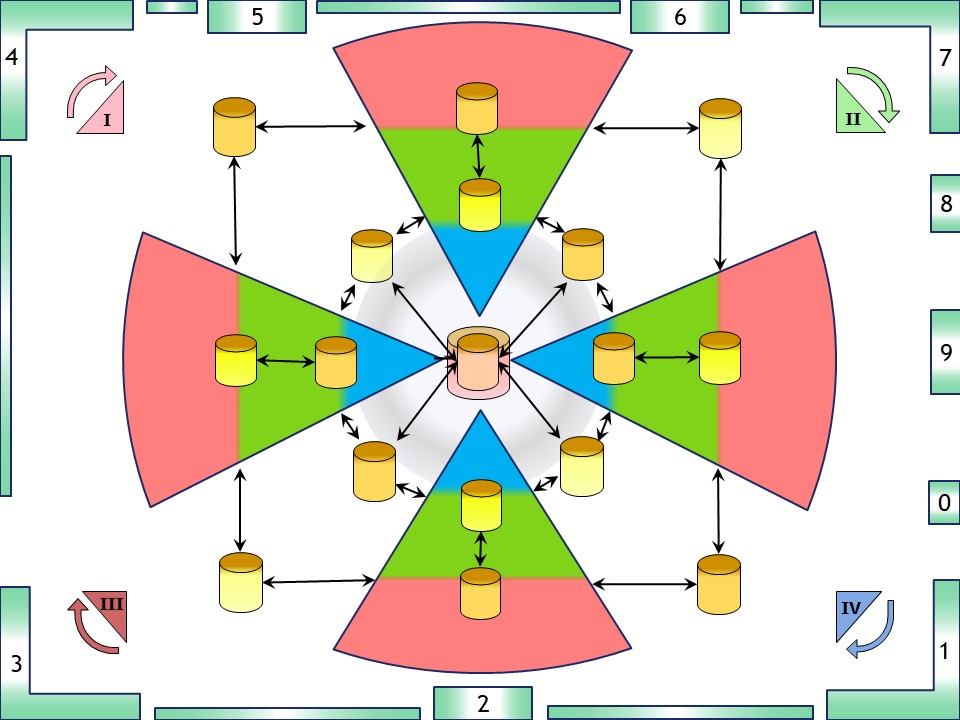

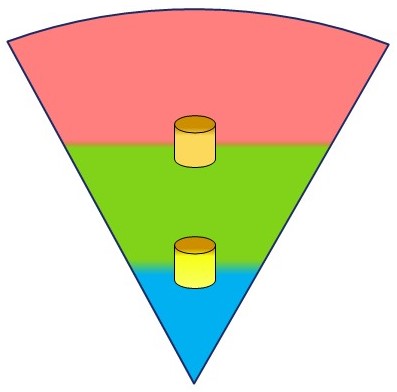

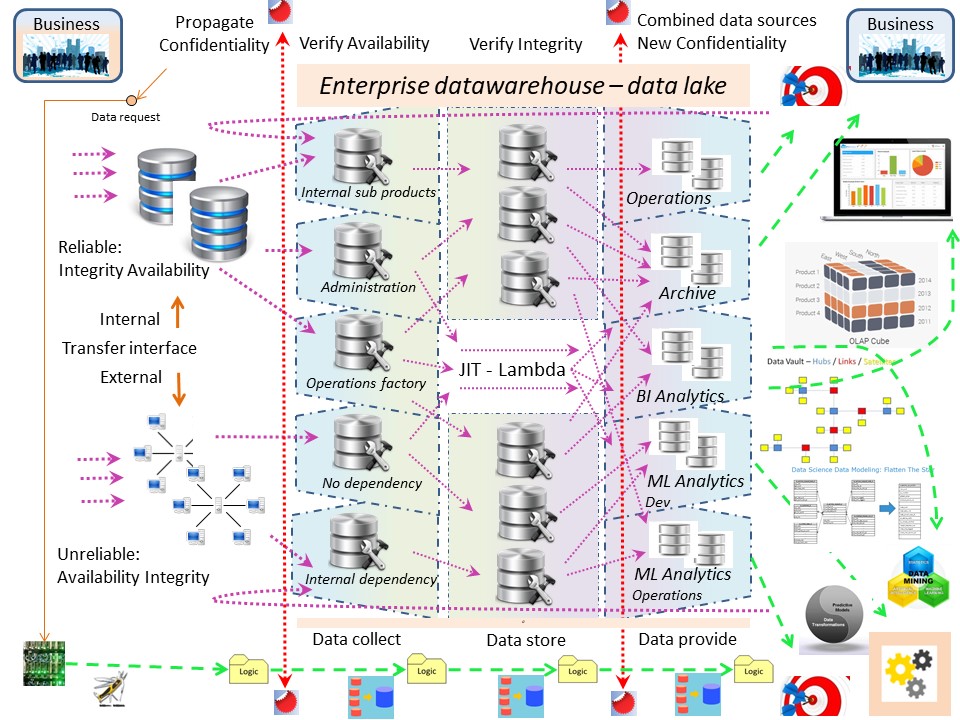

Adding BI (DWH) to layers of enterprise concerns.

There are three layers, as a separation of concerns:

- operations, business value stream (red)

- documentation (green)

  - part of the product, describing it for a longer period

  - related to the product for temporary flow reasons

- control strategy (blue)

At the edges of those layers inside the hierarchical pyramid, there is interesting information to collect for controlling and optimising the internal processes.

For strategic information control, the interaction with the documentation layer is the first one to become visible.

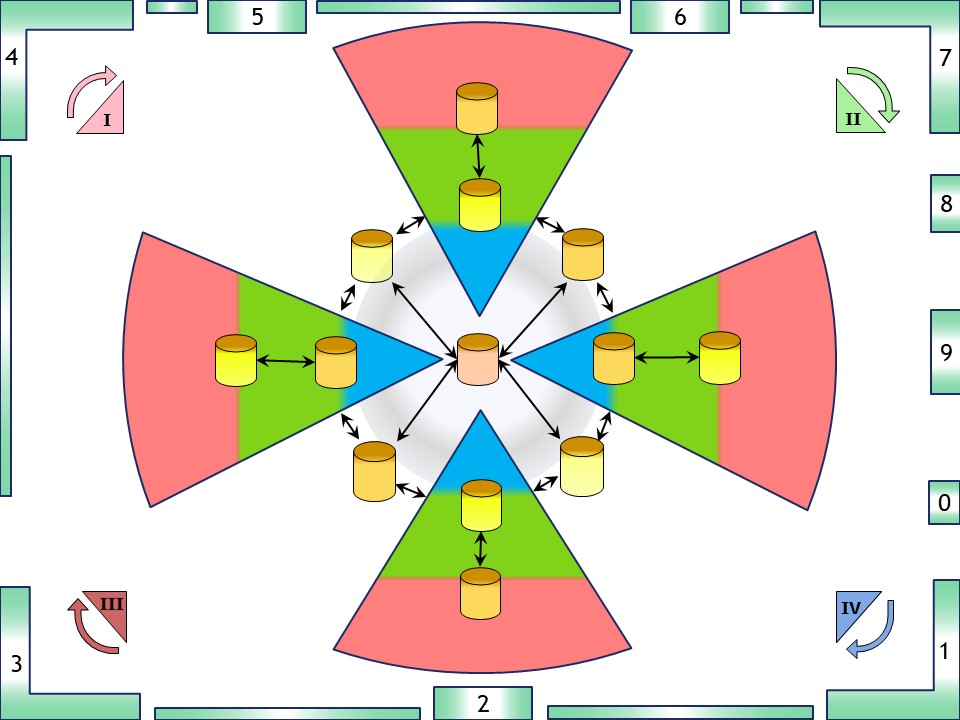

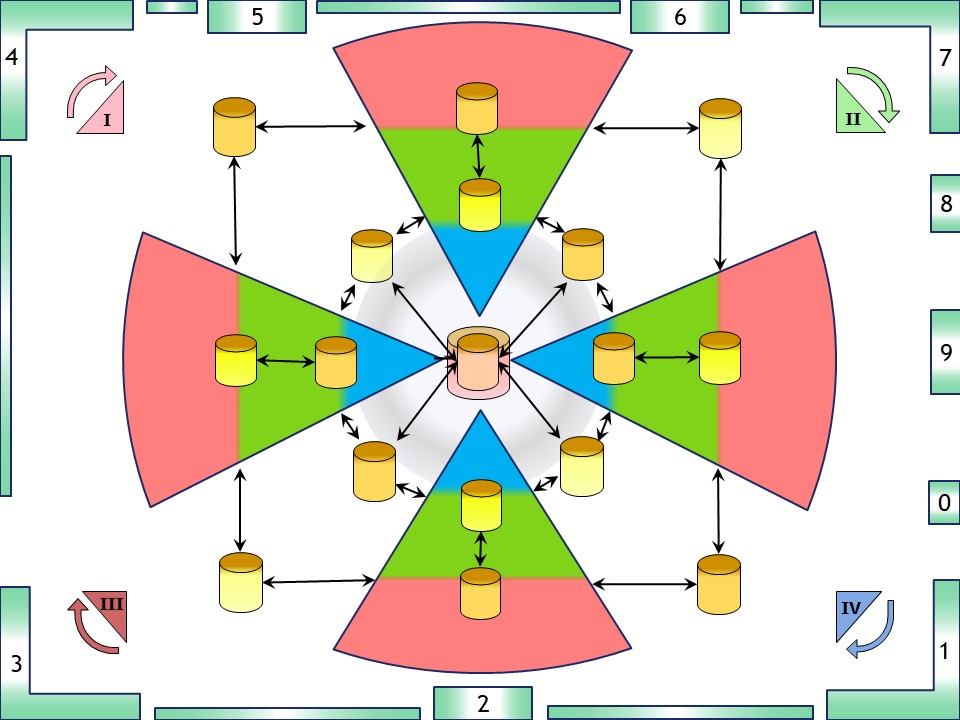

The four basic organisational lines are assumed to cooperate as a single enterprise in the operational product value stream circle, yet there are gaps between those pyramids.

Controlling them at a higher level uses information on which the involved parties, two by two, agree. This adds another four points of information.

Consolidating those four interaction points into one central point brings the total number of strategic information containers to nine.
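A quick sketch of the count of nine, assuming the four silos sit on a circle and that "two by two" means each silo agrees only with its neighbour along the value stream. The silo names are hypothetical placeholders.

```python
# Hypothetical names for the four organisational lines (silos) on the value stream circle.
SILOS = ["silo_a", "silo_b", "silo_c", "silo_d"]


def strategic_containers(silos):
    """Count strategic information containers: one inside every silo,
    one per gap between neighbouring silos on the circle, plus one central."""
    internal = len(silos)
    # On a circle every silo has a gap with the next one; the last wraps to the first.
    gaps = [(silos[i], silos[(i + 1) % len(silos)]) for i in range(len(silos))]
    central = 1
    return internal + len(gaps) + central, gaps


total, gaps = strategic_containers(SILOS)
print(total)  # 9
print(gaps)
```

If every silo had to agree with every other silo instead (six pairs), the count would grow to eleven; limiting agreements to neighbours along the value stream is what keeps it at nine.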

Too complicated and costly BI.

When trying to answer every possible question:

💰 It requires a lot of effort (costly).

❗ Every answer 👉🏾 leads to new questions ❓.

🚧 No real end situation: continuous construction and development.

The simple car dashboard could end up as an airplane cockpit and still miss the core business goals to improve.

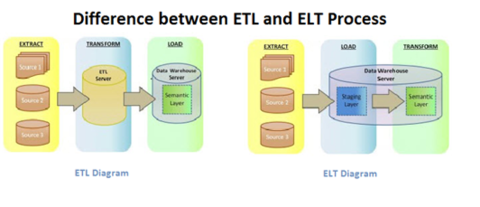

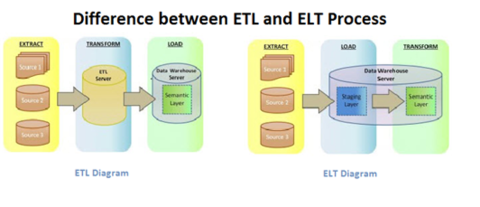

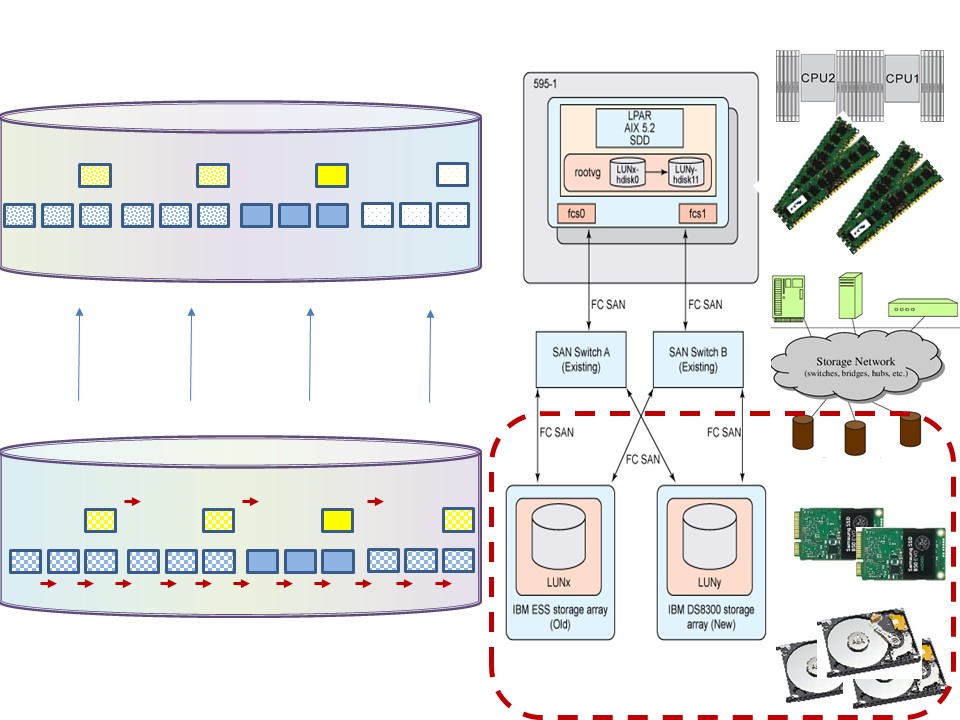

⚠ ETL ELT - No Transformation.

The classic processing order is:

⌛ Extract, ⌛ Transform, ⌛ Load.

To segregate the data from the operational flow, a technical copy is required.

Issues are:

- Every Transform adds logic that can become very complicated. Unnecessary complexity is waste to be avoided.

- The technical copy involves conversions between technical systems when they differ. It also introduces integrity questions through synchronisation. Unnecessary copies are waste to be avoided.

- Transforming (manufacturing) data should be avoided; the data-consumer process should do the logic processing.
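A minimal sketch of that principle, assuming an in-memory list stands in for the warehouse: rows are extracted and loaded unchanged, and the only business logic (here, reading text amounts as numbers and summing them per country) runs in the data-consumer process. All names and rows are hypothetical.

```python
# Hypothetical in-memory store: rows are kept exactly as extracted (E + L, no T).
raw_store = []


def extract_load(source_rows):
    """Copy source rows unchanged into the store."""
    for row in source_rows:
        raw_store.append(dict(row))  # shallow copy; values are not converted


def consumer_revenue_by_country(store):
    """Data-consumer process: the business logic lives here, at read time."""
    totals = {}
    for row in store:
        amount = float(row["amount"])  # interpretation is done by the consumer
        totals[row["country"]] = totals.get(row["country"], 0.0) + amount
    return totals


extract_load([
    {"order_id": 1, "country": "NL", "amount": "10.50"},
    {"order_id": 2, "country": "BE", "amount": "4.00"},
    {"order_id": 3, "country": "NL", "amount": "2.00"},
])
print(consumer_revenue_by_country(raw_store))  # {'NL': 12.5, 'BE': 4.0}
```

Another consumer can apply different logic to the same stored rows without a second copy or a second transformation step.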

Translating the physical warehouse to ICT.

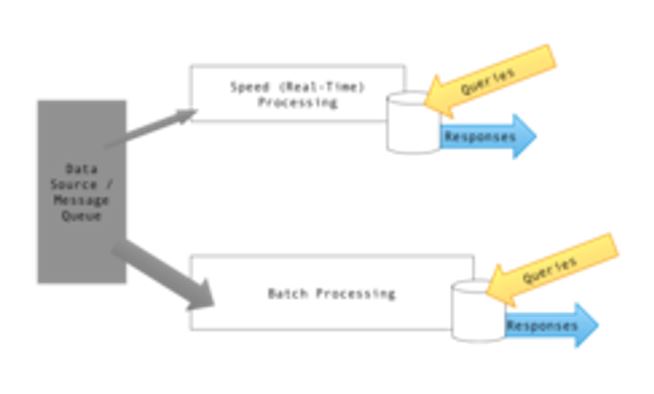

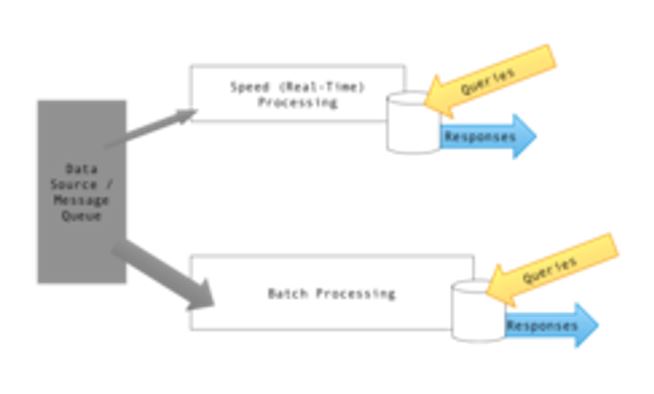

All kinds of data (technical) should be supported for all types of information (logical) at all speeds.

Speed (streaming) bypasses the store (batch) for the objects involved; duplication is allowed. This gives fast delivery (JIT, Just In Time).

💣 The figure shows what is called the lambda architecture in data warehousing.

Lambda architecture (Wikipedia).
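A minimal sketch of the lambda pattern, assuming page-view counting as the workload: a batch layer recomputes a complete view from the stored events, a speed layer counts events that arrived after the last batch run, and a serving step merges both. Event names and functions are hypothetical.

```python
from collections import Counter

# Master dataset: append-only events already covered by the last batch run.
master_events = ["page_a", "page_b", "page_a", "page_b"]


def batch_view(events):
    """Batch layer: recompute the complete view from all stored events (slow, complete)."""
    return Counter(events)


def speed_update(view, event):
    """Speed layer: update an incremental view for each arriving event (fast, JIT)."""
    view[event] += 1


def query(page, batch, speed):
    """Serving layer: merge the batch view and the speed view."""
    return batch[page] + speed[page]


batch = batch_view(master_events)
speed = Counter()
for event in ["page_a", "page_c"]:  # events arriving after the batch run
    speed_update(speed, event)

print(query("page_a", batch, speed))  # 3
print(query("page_c", batch, speed))  # 1
```

Once the next batch run includes the recent events, the speed view is reset; until then both views are needed to answer a query.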

In physical warehouse logistics, the question of a different architecture like this is never raised.

The warehouse is supposed to support the manufacturing process.

For some reason the data warehouse has become reserved for analytics instead of supporting the manufacturing process.

Preparing data for BI Analytics.

Once upon a time there were big successes using BI and Analytics. The successes were achieved by the good decisions made in those projects, not by best practices.

The best way to copy those successes would be to understand the decisions made. Unfortunately, these decisions and the reasons why they were made have not been published.

Instead, the focus for achieving success shifted to using the same tools as those successes.

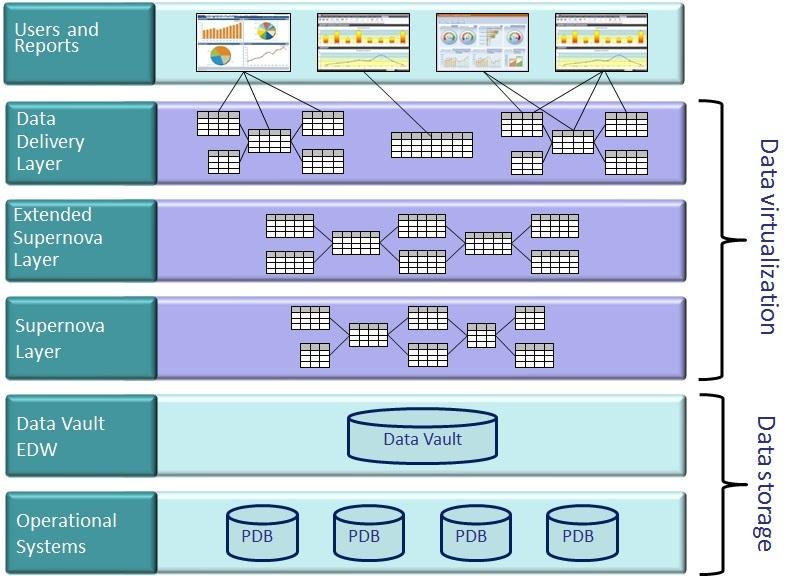

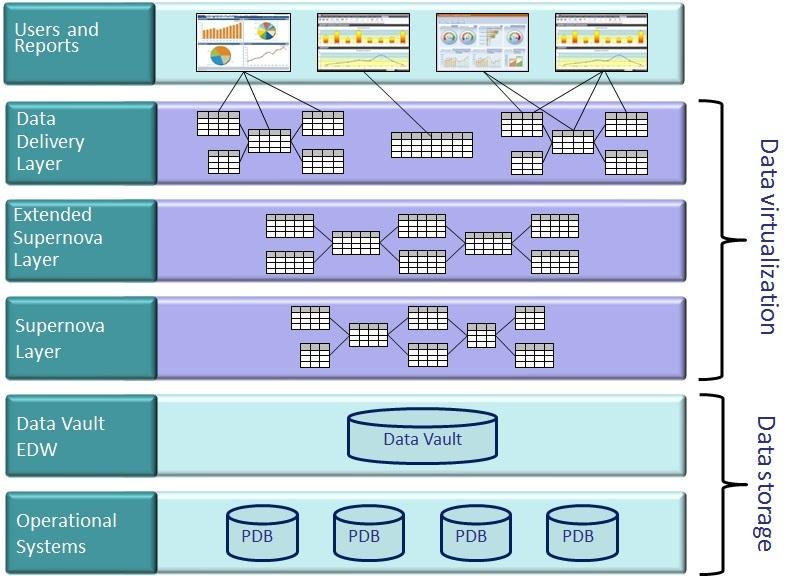

BI DWH, data virtualization.

BI (Business Intelligence) has long claimed to be the owner of the E-DWH.

Typical for BI is that almost all data is about periods. Adjusting data to match differences in periods is possible in a standard way.
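A sketch of one such standard period adjustment, assuming dated values that have to be reported per month or per quarter. The function names and the two supported grains are illustrative choices, not a fixed method.

```python
from collections import defaultdict
from datetime import date


def period_key(d: date, grain: str) -> str:
    """Map a calendar date to a period label at the requested grain."""
    if grain == "month":
        return f"{d.year}-{d.month:02d}"
    if grain == "quarter":
        return f"{d.year}-Q{(d.month - 1) // 3 + 1}"
    raise ValueError(f"unsupported grain: {grain}")


def aggregate(rows, grain):
    """Sum (date, value) rows per period so different sources line up on the same periods."""
    totals = defaultdict(float)
    for d, value in rows:
        totals[period_key(d, grain)] += value
    return dict(totals)


rows = [(date(2020, 1, 15), 100.0), (date(2020, 2, 3), 50.0), (date(2020, 4, 1), 25.0)]
print(aggregate(rows, "month"))    # {'2020-01': 100.0, '2020-02': 50.0, '2020-04': 25.0}
print(aggregate(rows, "quarter"))  # {'2020-Q1': 150.0, '2020-Q2': 25.0}
```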

The data virtualization is built on top of the "data vault" DWH 2.0, dedicated and built for BI reporting usage.

It is not virtualization on top of the ODS or original data sources (staging).

Presenting data using figures as BI.

Information for managers is commonly presented in easily understandable figures.

When figures are used to give satisfying messages or to escalate problems, there is a bias towards the satisfying ones over the ones alerting to possible problems.

😲 No testing and validation processes are considered necessary, as nothing is operational; it is just reporting to managers.

💡 The biggest change for a DWH 3.0 approach is a shared location of data and information used by the whole organisation, not only by BI.

Dimensional modelling and the Data Vault, used to build up dedicated storage, are seen as the design patterns that solve all issues.
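As an illustration of dimensional modelling only (the Data Vault pattern is not shown), a minimal star schema in SQLite using Python's standard `sqlite3` module: a fact table holds the measures plus a foreign key into a dimension table with descriptive attributes. Table and column names are hypothetical.

```python
import sqlite3

con = sqlite3.connect(":memory:")
cur = con.cursor()

# Dimension table: descriptive attributes, one row per product.
cur.execute("CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT)")
# Fact table: measures plus a foreign key to the dimension.
cur.execute(
    "CREATE TABLE fact_sales (product_key INTEGER REFERENCES dim_product(product_key), "
    "period TEXT, quantity INTEGER, amount REAL)"
)
cur.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                [(1, "bolt", "hardware"), (2, "nut", "hardware"), (3, "manual", "documentation")])
cur.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
                [(1, "2020-01", 10, 5.0), (2, "2020-01", 20, 2.0),
                 (3, "2020-02", 1, 15.0), (1, "2020-02", 5, 2.5)])

# Typical BI query: join facts to a dimension and aggregate by a dimension attribute.
result = cur.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f JOIN dim_product AS p ON f.product_key = p.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(result)  # [('documentation', 15.0), ('hardware', 9.5)]
con.close()
```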

OLAP modelling is used to overcome performance issues when reporting on production data to deliver new information to managers.
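A sketch of the OLAP idea, assuming a single additive measure: every combination of dimensions is aggregated up front, so a manager's question becomes a lookup instead of a scan of production data. `ALL` marks a rolled-up dimension; the data and names are made up for illustration.

```python
from collections import defaultdict
from itertools import combinations

DIMENSIONS = ("region", "product")
facts = [
    {"region": "north", "product": "bolt", "amount": 10},
    {"region": "north", "product": "nut", "amount": 5},
    {"region": "south", "product": "bolt", "amount": 7},
]


def build_cube(rows, dims):
    """Pre-aggregate the measure for every subset of dimensions (a full data cube).
    A dimension outside the current grouping is rolled up and labelled 'ALL'."""
    cube = defaultdict(int)
    for r in range(len(dims) + 1):
        for group in combinations(dims, r):
            for row in rows:
                key = tuple(row[d] if d in group else "ALL" for d in dims)
                cube[key] += row["amount"]
    return dict(cube)


cube = build_cube(facts, DIMENSIONS)
print(cube[("north", "ALL")])  # 15
print(cube[("ALL", "bolt")])   # 17
print(cube[("ALL", "ALL")])    # 22
```

The number of groupings doubles with every added dimension (2^n for n dimensions), which is one reason dedicated OLAP storage can become large.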

A more modern approach is in-memory analytics. In-memory analytics still needs a well-designed data structure (preparation).

😱 Archiving historical records so that they can be retrieved should be part of regular operations, not a DWH reporting solution.

The operations (value stream) process sometimes needs information from historical records.

That business question is a workaround for limitations in the operational systems. Those systems were never designed and built with archiving and historical information in mind.
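A sketch of history kept inside operations, assuming every record version carries a valid-from date, so the operational system itself can answer "what was the value on date X" without a detour through a DWH. The `History` class is hypothetical.

```python
import bisect
from datetime import date


class History:
    """Keep every version of a record with the date it became valid."""

    def __init__(self):
        self._dates = []   # valid-from dates, kept sorted
        self._values = []  # value valid from the date at the same index

    def record(self, valid_from: date, value):
        i = bisect.bisect_right(self._dates, valid_from)
        self._dates.insert(i, valid_from)
        self._values.insert(i, value)

    def as_of(self, when: date):
        """Return the value valid on `when`, or None if nothing was valid yet."""
        i = bisect.bisect_right(self._dates, when)
        return self._values[i - 1] if i else None


address = History()
address.record(date(2018, 1, 1), "Street 1")
address.record(date(2020, 6, 1), "Street 2")
print(address.as_of(date(2019, 3, 1)))  # Street 1
print(address.as_of(date(2021, 1, 1)))  # Street 2
print(address.as_of(date(2017, 1, 1)))  # None
```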

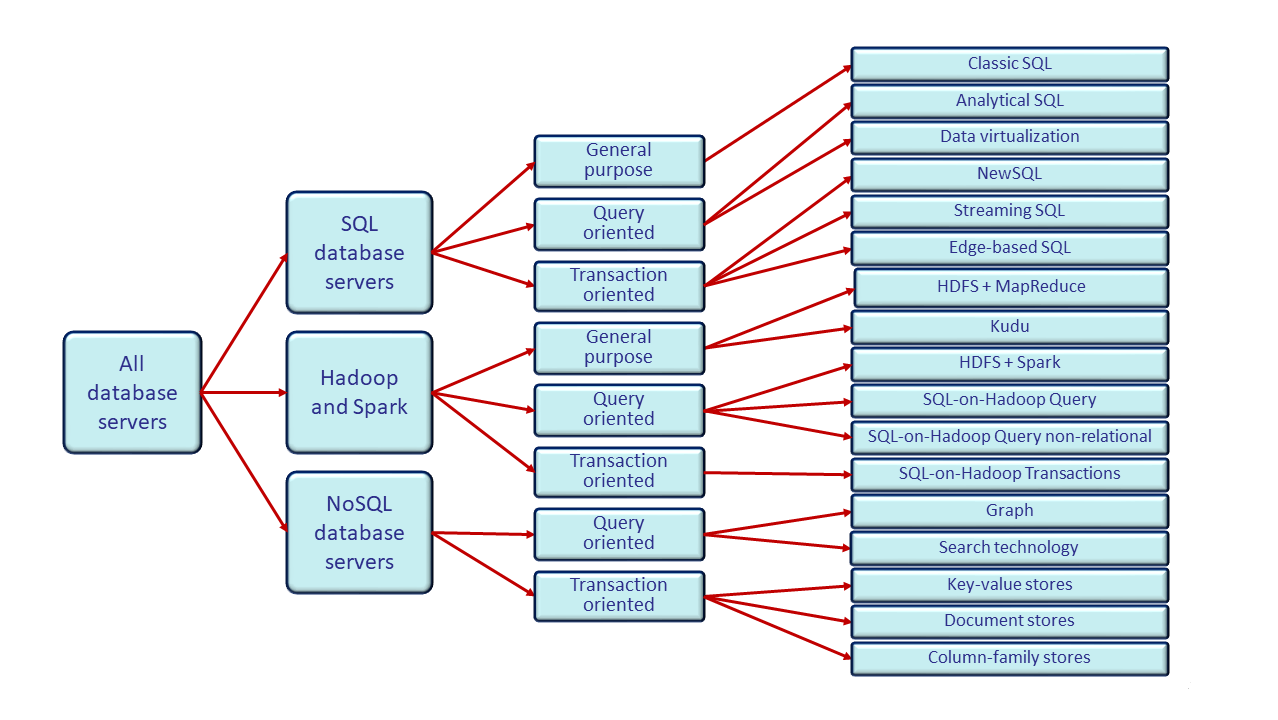

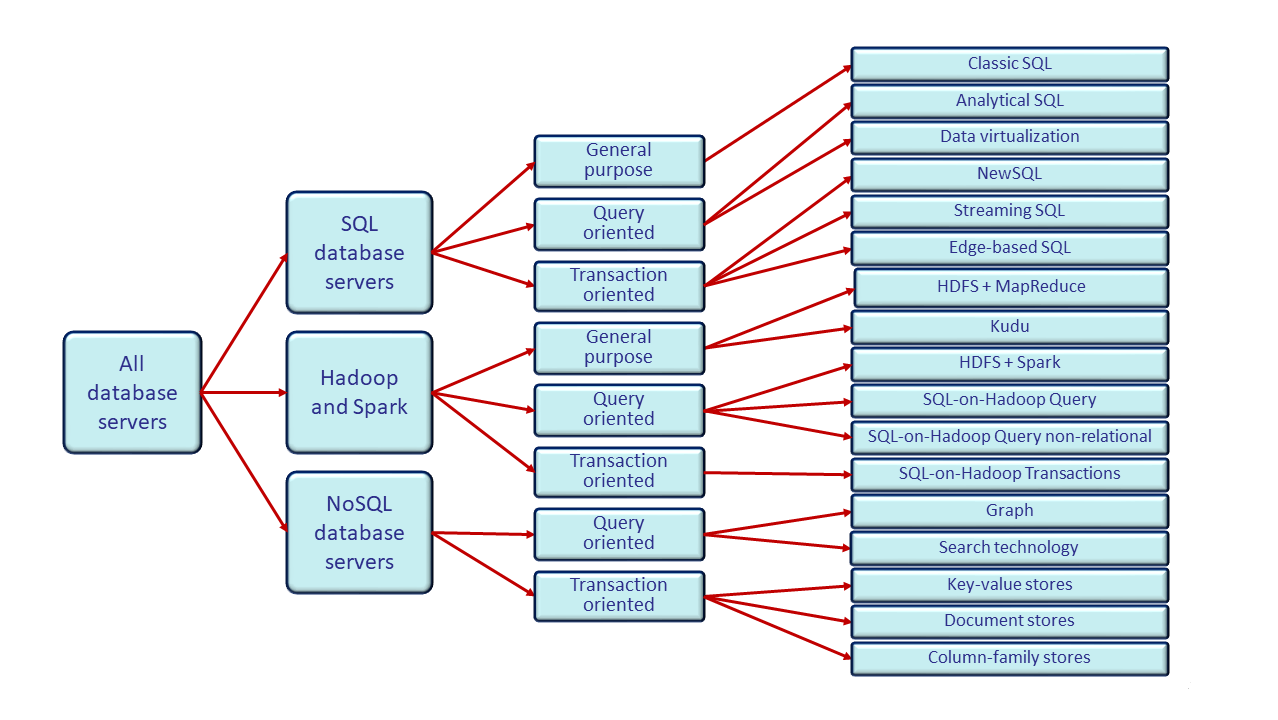

⚠ There are many possible ways of storing data in a DWH. The standard RDBMS dogma has been augmented with a lot of other options.

Limitations: technical implementations may not be well suited, because of the differences from an OLTP application system.

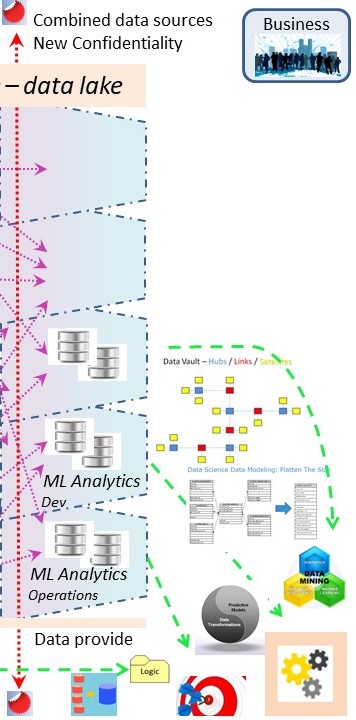

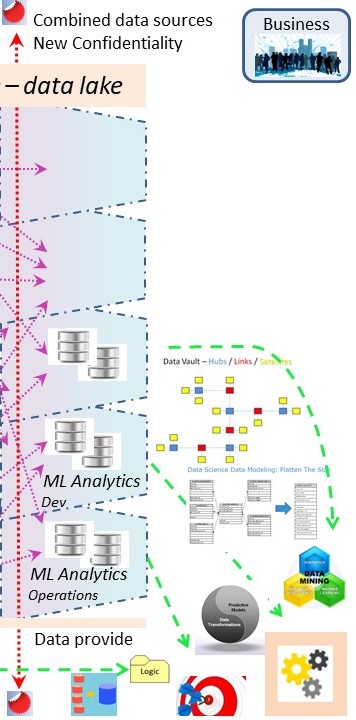

Applied Machine learning (AI), operations.

Analytics and Machine Learning are changing the way rules are invented: from rules invented only by humans to machines helping humans.

💡 The biggest change is the ALC type3 approach, which fundamentally changes how release management should be implemented.

ML exchanges some of the roles of code and data to achieve results during development, but not in the other life cycle stages.

When research is done only for a report that is made once, the long wait for data deliveries of the old DWH 2.0 methodology is acceptable.

⚠ In a (near) real-time operational process, the data has to be correct when the impact of the scoring is important.

With that approach, at least two data streams are needed:

- ML model development: delays in information delivery are accepted.

- ML exploitation (operations): no delay in deliveries.
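A sketch of the two streams, assuming a deliberately trivial stand-in for a model (the mean and standard deviation of one numeric feature, scored as a z-score). Training reads a historical batch that may arrive late; operations score each event immediately with the trained parameters. All names and values are illustrative.

```python
from statistics import mean, stdev


def train(values):
    """Development stream: fit a trivial stand-in 'model' on a historical batch."""
    return {"mean": mean(values), "std": stdev(values)}


def score(model, value):
    """Operational stream: score one event immediately (z-score distance)."""
    return abs(value - model["mean"]) / model["std"]


history = [100.0, 102.0, 98.0, 101.0, 99.0]  # may arrive days late; that is acceptable here
model = train(history)

for event in [100.5, 110.0]:  # live events: no delay allowed
    print(event, round(score(model, event), 2))
```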

🤔 Analytics, AI and machine learning have a duality in how the logic is defined.

The modelling stage (develop) uses data that is similar to, but not the same as, the data in the operational stage.

Development is done with operational production data. Its size can be much bigger than what is needed in operations, because of the history required.

The way of developing is ALC type3.

❗ What an operational model generates should be monitored closely, for many reasons. That is new information to process.
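One simple form of such monitoring, assumed here for illustration: compare the average model output in a recent window with the average at deployment and flag a relative change above a chosen tolerance. The 20% tolerance, the sample scores and the function name are arbitrary assumptions.

```python
from statistics import mean


def drift_alert(baseline_scores, recent_scores, tolerance=0.2):
    """Flag when the mean model output in a recent window moves more than
    `tolerance` (relative) away from the mean observed at deployment."""
    base = mean(baseline_scores)
    if base == 0:
        raise ValueError("baseline mean is zero; use an absolute threshold instead")
    change = abs(mean(recent_scores) - base) / base
    return change > tolerance, change


baseline = [0.10, 0.12, 0.11, 0.09]  # scores logged right after deployment
recent = [0.16, 0.15, 0.17, 0.14]    # scores from the latest window
alert, change = drift_alert(baseline, recent)
print(alert, round(change, 2))
```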

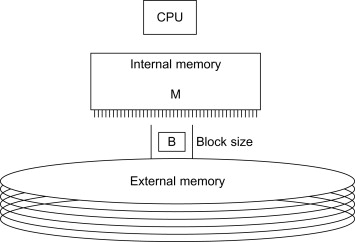

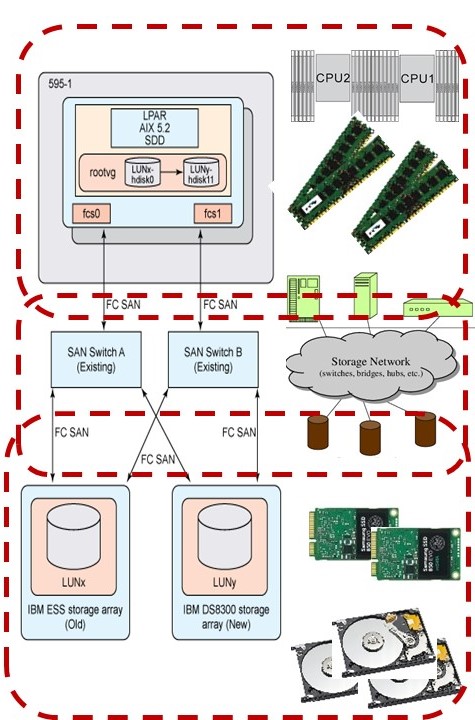

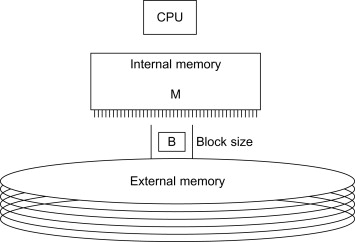

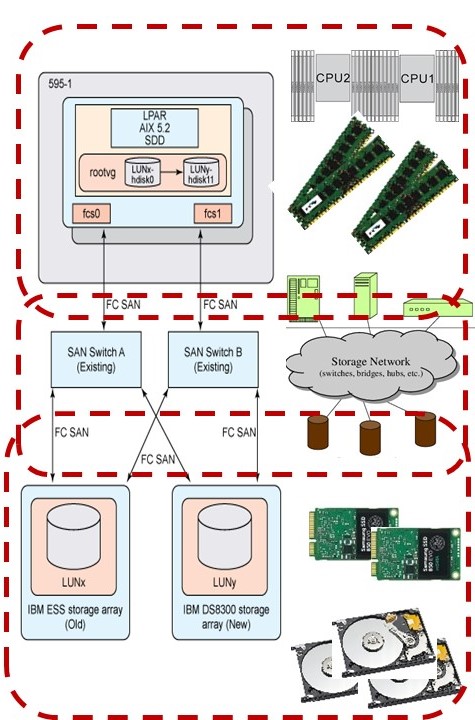

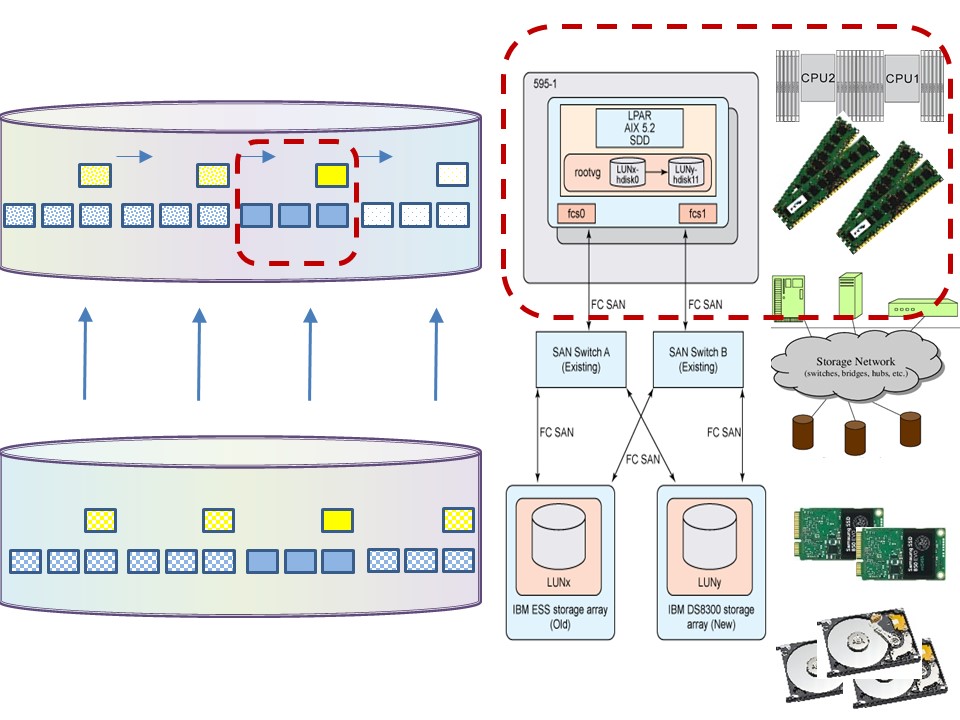

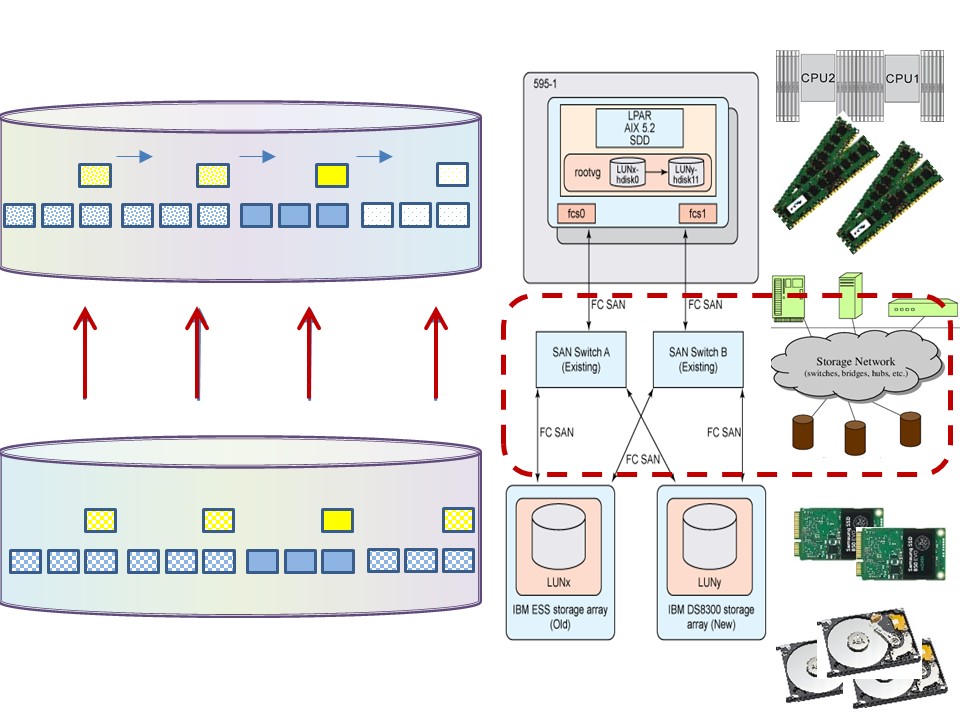

EDW performance challenges.

Solving performance problems requires understanding of the operating system and hardware.

That architecture was set by von Neumann (see design-math).

Tuning performance basics.

A single CPU, limited internal memory and external storage.

The access times of those resources differ by orders of magnitude (factors of 100 to 1000).

Optimizing is balancing between choosing the best algorithm and the effort needed to achieve that algorithm.

That concept has not changed. Advances in hardware made it affordable to ignore the knowledge of tuning.

The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software, by Herb Sutter.

If you haven't done so already, now is the time to take a hard look at the design of your application, determine what operations are CPU-sensitive now or are likely to become so soon, and identify how those places could benefit from concurrency. Now is also the time for you and your team to grok concurrent programming's requirements, pitfalls, styles, and idioms.
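A minimal sketch of the partitioning idea behind that advice: split the data, let independent workers process the parts without shared state, then combine the partial results. It uses Python's standard `concurrent.futures`; note that in CPython, threads do not run pure-Python CPU work in parallel, so for a real speed-up `ProcessPoolExecutor` (with an `if __name__ == "__main__":` guard) would be the swap.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk):
    """Independent work on one partition; no shared state."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    if not data:
        return 0
    # Split the data into roughly equal partitions, one per worker.
    size = -(-len(data) // workers)  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Combine the partial results after all partitions are processed.
        return sum(pool.map(partial_sum_of_squares, chunks))


data = list(range(1_000))
print(parallel_sum_of_squares(data) == sum(x * x for x in data))  # True
```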

An additional component is the network connecting the machine (multiple CPUs, several banks of internal memory) to multiple external storage boxes.

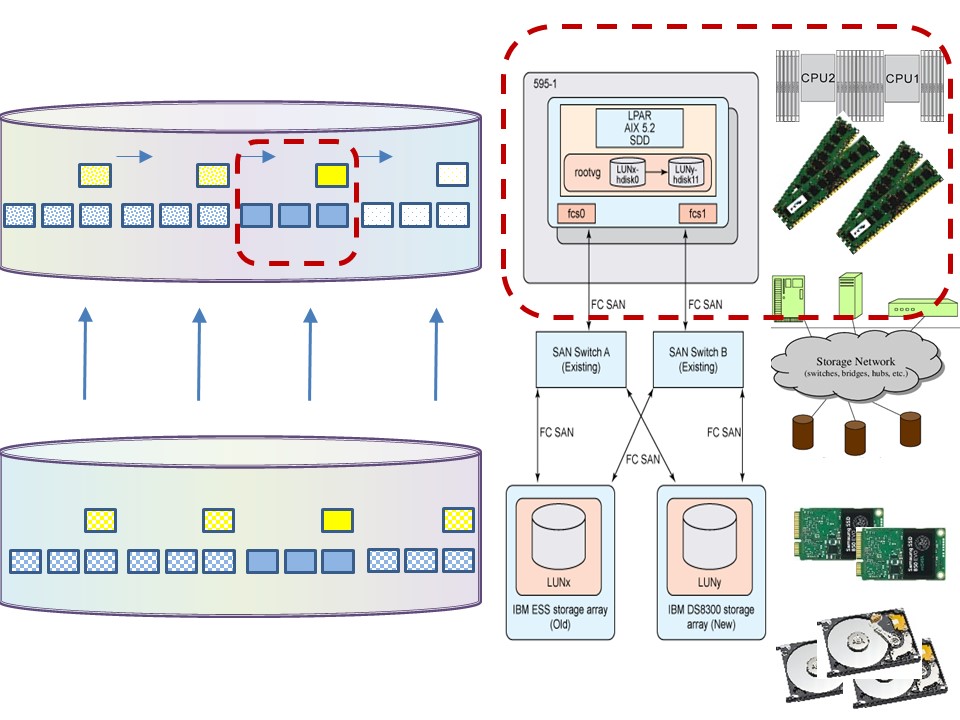

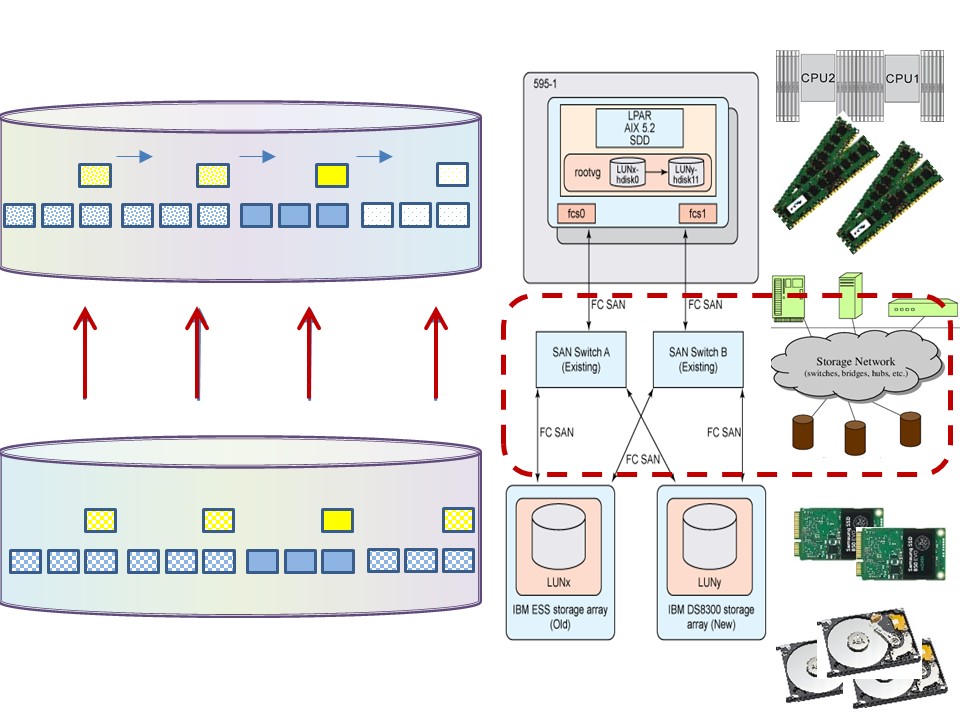

Tuning CPU and internal memory.

Minimize resource usage:

- Process data records in serial sequence (blue).

- Bundle indexes (yellow).

- Allocate the correct size and the correct number of buffers.

- Balance buffers between the operating system (OS) and the DBMS. A DBMS is normally optimal without OS buffering (direct IO, DIO).

❗ The "balance line" algorithm is the best.

A DBMS will do that when possible.
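Our reading of the "balance line" algorithm is the classic sequential master-file update: master records and transactions are both sorted on the same key and processed in a single pass, like a merge. The sketch assumes at most one transaction per key and the actions `add`, `update` and `delete`; these names are illustrative. The merge join a DBMS can use on sorted inputs follows the same single-pass idea.

```python
def balance_line(master, transactions):
    """Apply sorted transactions to a sorted master file in one sequential pass.

    master: list of (key, value), sorted by key, unique keys.
    transactions: list of (key, action, value), sorted by key,
        at most one per key; action is 'add', 'update' or 'delete'.
    Returns (new_master, rejected).
    """
    new_master, rejected = [], []
    i = j = 0
    while i < len(master) or j < len(transactions):
        m_key = master[i][0] if i < len(master) else None
        t_key = transactions[j][0] if j < len(transactions) else None
        if t_key is None or (m_key is not None and m_key < t_key):
            # Master record without a transaction: copy it unchanged.
            new_master.append(master[i])
            i += 1
        elif m_key is None or t_key < m_key:
            # Transaction for a key absent from the master: only 'add' is valid.
            key, action, value = transactions[j]
            if action == "add":
                new_master.append((key, value))
            else:
                rejected.append(transactions[j])
            j += 1
        else:
            # Matching keys: 'update' replaces the record.
            key, action, value = transactions[j]
            if action == "update":
                new_master.append((key, value))
            elif action != "delete":
                # A duplicate 'add' (or unknown action) is rejected; keep the master record.
                rejected.append(transactions[j])
                new_master.append(master[i])
            # On 'delete' the master record is simply not written out.
            i += 1
            j += 1
    return new_master, rejected


master = [(1, "a"), (3, "c"), (5, "e")]
transactions = [(2, "add", "b"), (3, "update", "C"), (5, "delete", None), (6, "update", "x")]
new_master, rejected = balance_line(master, transactions)
print(new_master)  # [(1, 'a'), (2, 'b'), (3, 'C')]
print(rejected)    # [(6, 'update', 'x')]
```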

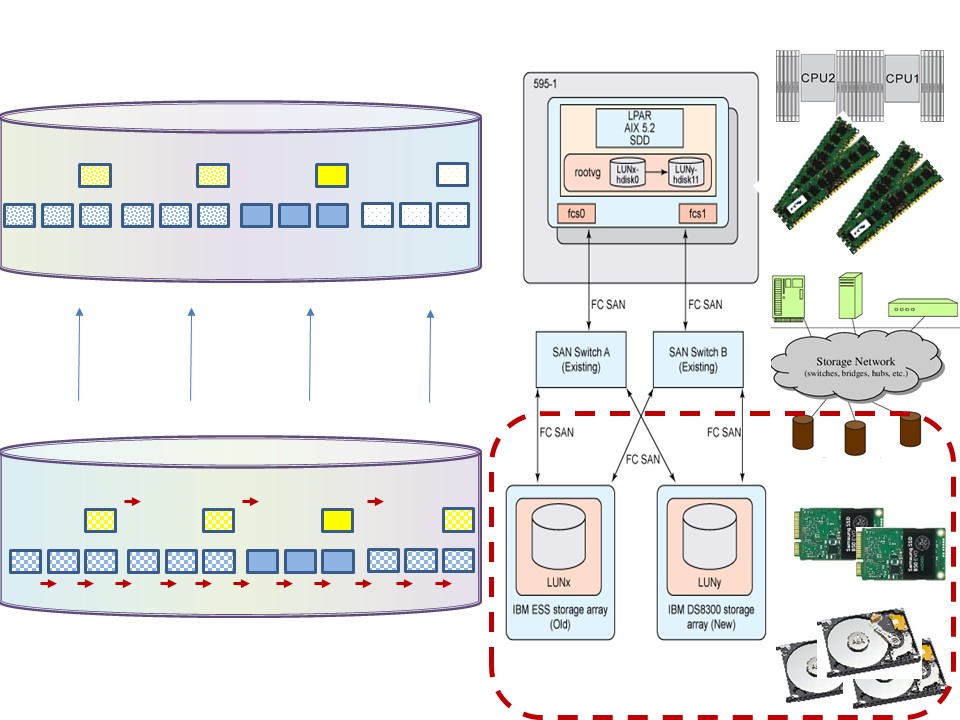

Network throughput.

Minimize delays, use parallelization:

- Stripe logical volumes (OS).

- Parallelize IO, transport lines.

- Optimize buffer transport size.

- Compressing and decompressing data at the CPU can decrease elapsed time.

- Avoid locking caused by shared storage and clustered machines.
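A sketch of the compression trade-off, assuming elapsed time can be modelled as CPU time for compressing and decompressing plus transferred bytes divided by an assumed constant bandwidth. It uses the standard `zlib` module; the 10 MB/s figure and the sample payload are illustrative, not measurements.

```python
import time
import zlib


def transfer_estimate(payload: bytes, bandwidth_bytes_per_s: float, level: int = 6):
    """Estimate elapsed time with and without compression.
    Network time is modelled as bytes / bandwidth (an assumed, constant figure)."""
    plain_time = len(payload) / bandwidth_bytes_per_s
    start = time.perf_counter()
    packed = zlib.compress(payload, level)
    if zlib.decompress(packed) != payload:  # a lossless round trip is expected
        raise RuntimeError("compression round trip failed")
    cpu_time = time.perf_counter() - start
    compressed_time = cpu_time + len(packed) / bandwidth_bytes_per_s
    return plain_time, compressed_time, len(packed)


# Repetitive, record-like sample data; random or already compressed data gains little.
payload = b"2020-11-01;NL;order;10.50\n" * 20_000
plain, compressed, size = transfer_estimate(payload, bandwidth_bytes_per_s=10_000_000)
print(f"{len(payload)} -> {size} bytes, {plain:.3f} s plain vs {compressed:.3f} s compressed")
```

Whether compression pays off depends on the data type and on how much spare CPU there is, so the estimate is worth making per data stream.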

⚠ Transport buffer size is settled in cooperation between the remote server and the local driver. The locally optimal buffer size can be different.

Resizing data between buffers is a cause of performance problems.

Minimize delays in the storage system.

- Multi-tier choice: SSD, hard disk, tape; local unshared or remote shared.

- Preferred: sequential or skip-sequential access.

- Tuning for analytics means big-block, bulk sequential access instead of random, small-block transactional access.
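A back-of-the-envelope cost model for that difference, assuming one fixed positioning (seek) cost per random request and a constant sustained bandwidth. The 5 ms and 200 MB/s figures are rough, hard-disk-like assumptions, not measurements; both cases read the same 819.2 MB.

```python
def read_time_seconds(n_blocks, block_bytes, seek_s, bandwidth_bytes_per_s, sequential):
    """Simple disk cost model: every positioning pays a fixed cost and every byte
    pays transfer time. Sequential access positions once; random access once per block."""
    seeks = 1 if sequential else n_blocks
    return seeks * seek_s + n_blocks * block_bytes / bandwidth_bytes_per_s


# Assumed figures for illustration only.
SEEK = 0.005       # 5 ms per positioning
BANDWIDTH = 200e6  # 200 MB/s sustained transfer

small_random = read_time_seconds(100_000, 8_192, SEEK, BANDWIDTH, sequential=False)
big_sequential = read_time_seconds(100, 8_192_000, SEEK, BANDWIDTH, sequential=True)
print(f"random 8 KB blocks: {small_random:.1f} s, sequential 8 MB blocks: {big_sequential:.1f} s")
```

Under these assumptions the random case takes about 504 s and the sequential case about 4.1 s: the transfer time is identical, and the difference is almost entirely positioning cost.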

⚠ For analytics, tuning IO is quite different from transactional DBMS usage.

💣 This different, non-standard approach must be in scope for service management. Sizing for capacity is better understood there than striping for IO performance.

⚠ Changing DBMS types

A mix of several DBMSs is allowed in an EDWH 3.0. Transport speed and retention periods are important considerations.

Technical engineering is needed for the details, the limitations of the state of the art, and cost factors.

Omissions in BI, Analytics reporting.

As the goal of BI and analytics was delivering reports to managers, securing information and runtime performance were not considered relevant.

⚠ Securing information is too often an omission.

⚠ ETL ELT - No Transformation.

Transforming data should be avoided.

The data-consumer process should do the logic processing.

Offloading data and doing the logic in Cobol before loading is an ancient practice that should be abandoned.

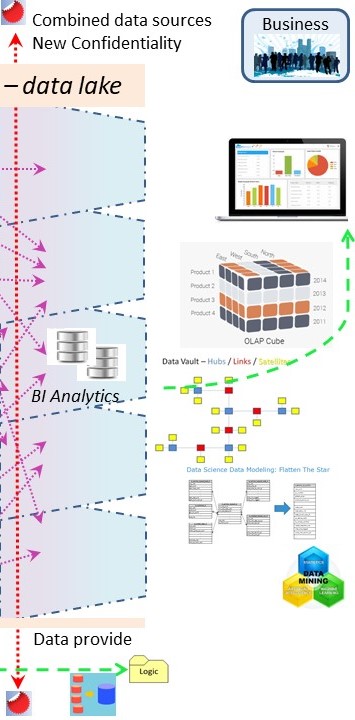

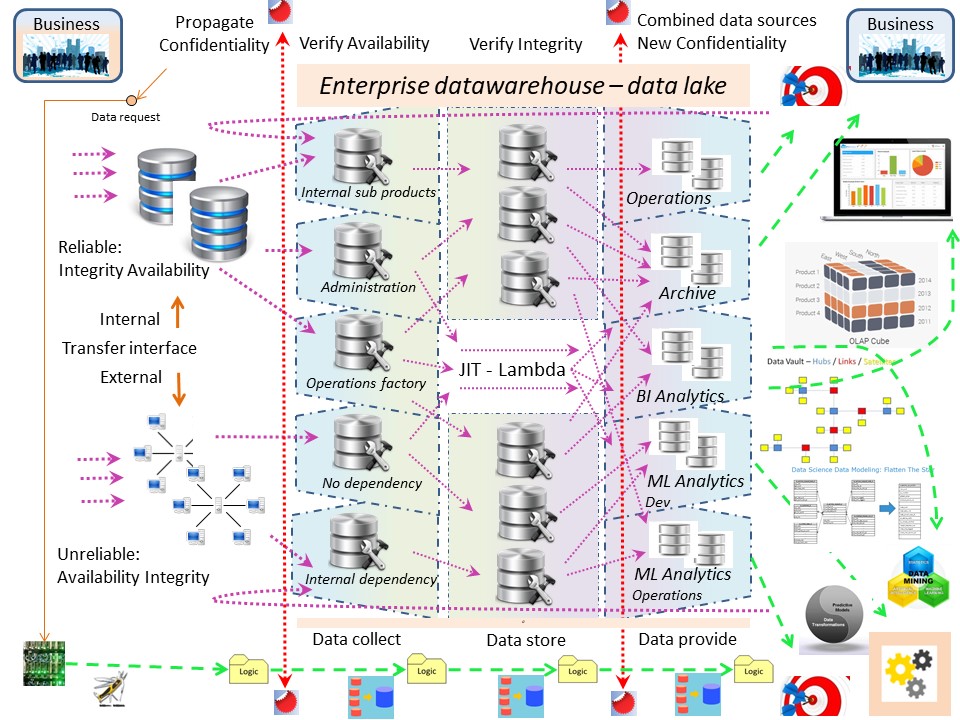

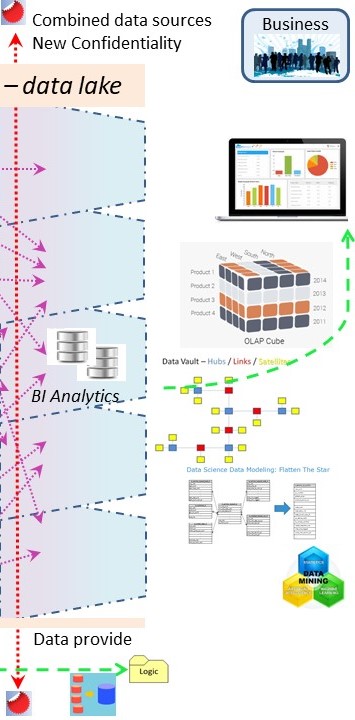

💡 Logistics of the EDWH - Data Lake. EDWH 3.0

Processing objects and information goes along with responsibilities.

❗ A data warehouse is allowed to receive semi-finished product for the business process.

✅ A data warehouse knows who is responsible for the inventory being serviced.

❗ A data warehouse has processes in place for delivering and receiving verified inventory.

In a picture:

The two vertical lines manage who has access to what kind of data: authorized by the data owner, for registered data consumers, monitored and controlled.

The confidentiality and integrity steps are not bypassed with JIT (lambda).

CIA: Confidentiality, Integrity, Availability. Activities:

- Confidentiality check at collect.

- Integrity verified before stored.

- Availability - on stock, in store.

- Availability - "just in time".

- Confidentiality at delivery.

- Integrity at delivery.

CSD: Collect, Store, Deliver. Actions on objects:

- Collect: check confidentiality.

- Store: verify integrity before storing.

- Stored: mark availability.

- Collect JIT: mark availability.

- Deliver: check confidentiality.

- Deliver: verify integrity.
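A minimal sketch of Collect, Store, Deliver with the CIA checks listed above, assuming a SHA-256 checksum supplied by the sender as integrity evidence and a per-dataset set of consumers authorised by the data owner. The `DataWarehouse` class and its methods are hypothetical; a JIT path would have to call the same checks rather than bypass them.

```python
import hashlib


class DataWarehouse:
    """Collect, Store, Deliver with confidentiality and integrity checks."""

    def __init__(self):
        self.stock = {}        # dataset -> (payload, checksum)
        self.owner = {}        # dataset -> responsible data owner
        self.authorised = {}   # dataset -> consumers registered by the owner
        self.available = set()

    def collect(self, dataset, payload: bytes, owner, checksum):
        # Confidentiality at collect: only accept data with a responsible owner.
        if not owner:
            raise PermissionError("no responsible data owner")
        # Integrity verified before storing: the supplier's checksum must match.
        if hashlib.sha256(payload).hexdigest() != checksum:
            raise ValueError("integrity check failed at collect")
        self.stock[dataset] = (payload, checksum)
        self.owner[dataset] = owner
        self.authorised.setdefault(dataset, set())
        self.available.add(dataset)  # availability: on stock, in store

    def authorise(self, dataset, owner, consumer):
        if self.owner.get(dataset) != owner:
            raise PermissionError("only the data owner can authorise consumers")
        self.authorised[dataset].add(consumer)

    def deliver(self, dataset, consumer) -> bytes:
        if dataset not in self.available:
            raise LookupError("dataset not available")
        # Confidentiality at delivery: the consumer must be registered by the owner.
        if consumer not in self.authorised[dataset]:
            raise PermissionError("consumer not authorised")
        payload, checksum = self.stock[dataset]
        # Integrity at delivery: re-verify the stored content.
        if hashlib.sha256(payload).hexdigest() != checksum:
            raise ValueError("integrity check failed at delivery")
        return payload


data = b"id;amount\n1;10.50\n"
dwh = DataWarehouse()
dwh.collect("orders", data, owner="sales", checksum=hashlib.sha256(data).hexdigest())
dwh.authorise("orders", owner="sales", consumer="finance")
print(dwh.deliver("orders", "finance") == data)  # True
```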

There is no good reason not to do this for the data warehouse as well, when it is positioned as a generic business service (EDWH 3.0).

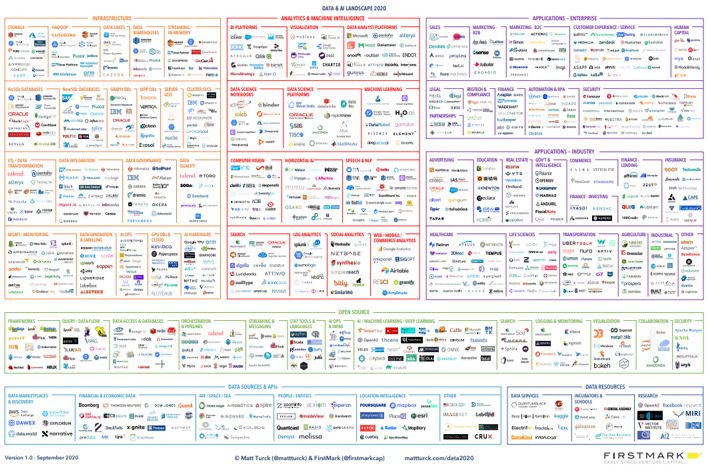

Technology push focus BI tools.

Technology offerings have been changing rapidly in recent years (as of 2020). Hardware is no longer a problematic cost factor; functionality is.

Choosing a tool, or having several of them, goes with personal preferences.

Different responsible parties have their own opinions on how conflicts should be solved. In a technology push, the organisational goal is no longer what drives the choice.

It shows the personal position inside the organisation.

🤔 The expectation of lower cost and better quality is a promise without warranties.

🤔 Without alignment between the silos, there is a question of which version of the truth holds.

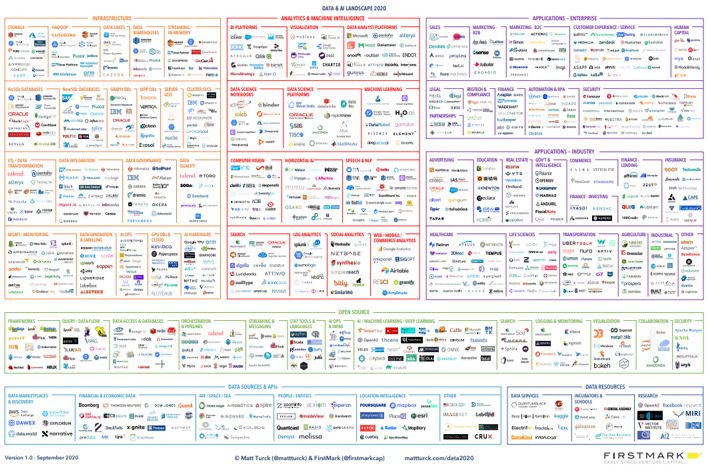

Just an inventory of the tools and the dedicated areas they are used in:

Matt Turck on 2020: bigdata 2020, an amazing list of all kinds of big data tools on the market.

Changing the way of informing.

Combining data transfer, microservices, archive requirements and security requirements, and doing it with the maturity of physical logistics.

It moves in the direction of a centrally managed approach while doing as much as possible decentrally.

Decouple activities where possible, so that problems popping up stay small enough for humans to manage.

Combining information connections.

There are a lot of ideas that, when combined, give a different situation:

💡 Solving gaps between silos supporting the value stream.

These are the rectangular containers connecting the red and green layers (eight in total, internal intermediates).

💡 Solving management information within the green/blue layers inside every silo.

These are the second containers in every silo (four, more centralised).

💡 Solving management information gaps between the silos following the value stream at a higher level.

These are the containers on the circle (four intermediates).

Consolidate that content into a central one.

🎭 The result is that management information is supported in nine (9) containers following the product flow at the strategic level. It is not a monolithic central management information system but one that is decentralised and delegates as much as possible to satellites.

💡 The outer operational information rectangle holds a lot of detailed information that is useful for other purposes, one of which is the integrity processes.

A SOC (Security Operations Centre) is an example of adding another centralised one.

🎭 The result is that management information is supported in nine (9) containers following the product flow at the strategic level, another eight (8) at the operational level, and possibly more.

It is not a monolithic central management information system but one that is decentralised and delegates as much as possible to satellites.

🤔 Small is beautiful: instead of big, monolithic, costly systems, many smaller ones can do the job better and more efficiently. The goal: repeating a pattern instead of a one-off project shop.

The duality: when making a change, it will be like a project shop.

Containerization.

We are used to the containers used these days for all kinds of transport.

The biggest container ships sail the world reliably, predictably and affordably.

Normal economical usage: load, reload, return, many predictable and reliable journeys.

The first container ships were these Liberty ships: fast and cheap to build. The high loss rate was not a problem; it was solved by building many of them.

They were built as project shops, but at many locations, with the advantage of a known design that could be built over and over again.

They were not designed for many journeys; they were designed for deliveries in war conditions.

project shop.

To cite:

This approach is most often used for very large and difficult to move products in small quantities.

...

There are cases where it is still useful, but most production is done using job shops or, even better, flow shops.

💣 The idea is that everything should become a flow shop, even where that is not applicable. In ICT, delivering software at high speed is seen as the goal; that idea misses the data value stream as the goal.

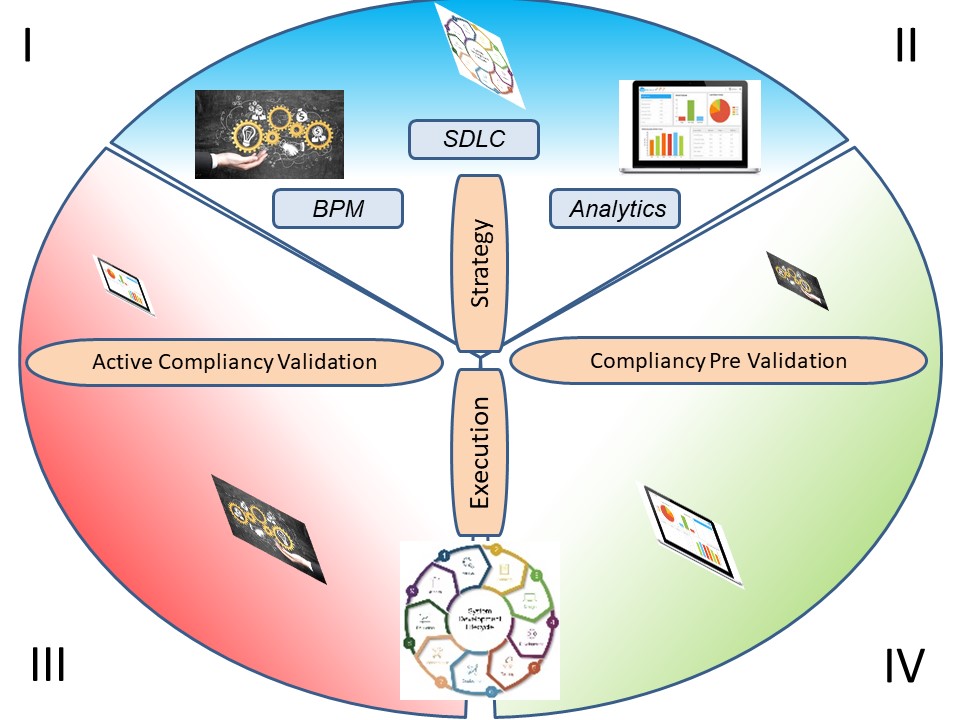

BI Analytics helping in the business layers.

Positioned in a small segment with the goal of quality and compliance improvements related to others.

The distance to the shop floor and possibly breaking compliance rules are some of the challenges in the cycle with a triad of relationships.
