Design SDLC - Software Development Life Cycle

ALC type3 Low code Analytics, Business

Automatization of decisions using ICT.

Changing an organization can have a big impact, and can even change the core business during transitions.

At the end of the day, there is no obligation to do things as they have always been done. Switching back to small research is an option.

🔰 Most logical

back reference.

Contents

| Reference | Topic | Squad |
|---|---|---|
| Intro | Automatization of decisions using ICT. | 01.01 |
| V3onV2 | Building on the ALC-v2 into an ALC-v3 process. | 02.01 |
| alcv3_1 | ALC-v3, 1 data provide. | 03.01 |
| alcv3_2-3 | ALC-v3, 2 - 3 ABT & model. | 04.01 |
| alcv3_4-5 | ALC-v3, 4 - 5 Delivery & model information. | 05.01 |
| What next | Step by step, Travelling the unexplored. | 06.00 |
| | Following steps | 06.02 |

Combined pages as single topic.

👓 deep dive

layers , VMAP

👓 Multiple

Dimensions by layers

👓 ALC type2 Business Applications - 3GL

🚧 ALC type3 Low code Analytics, Business

🕶 ALC type3 Security Access (Meta)

Progress Conversion

- 2019 week:17

- This is a fresh page, set up from the experiences of recent years (2015-2018).

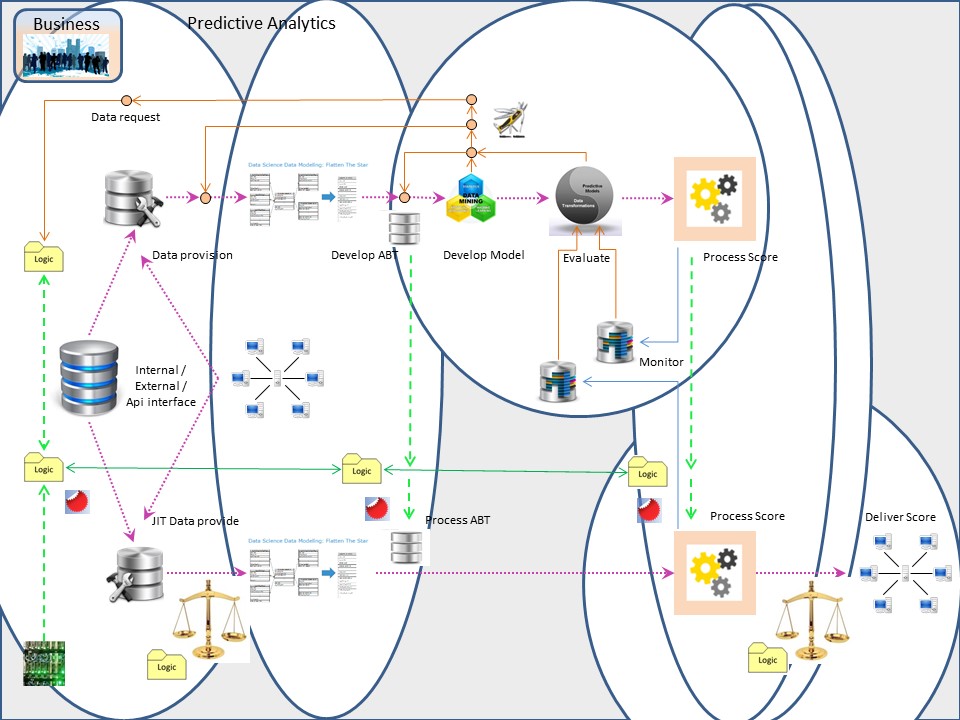

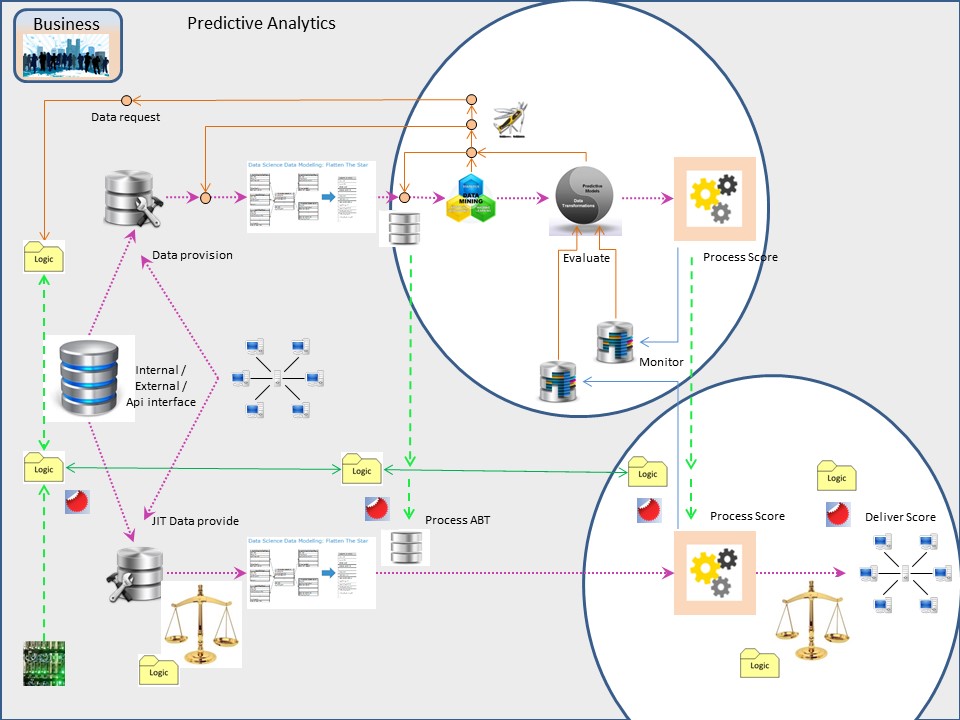

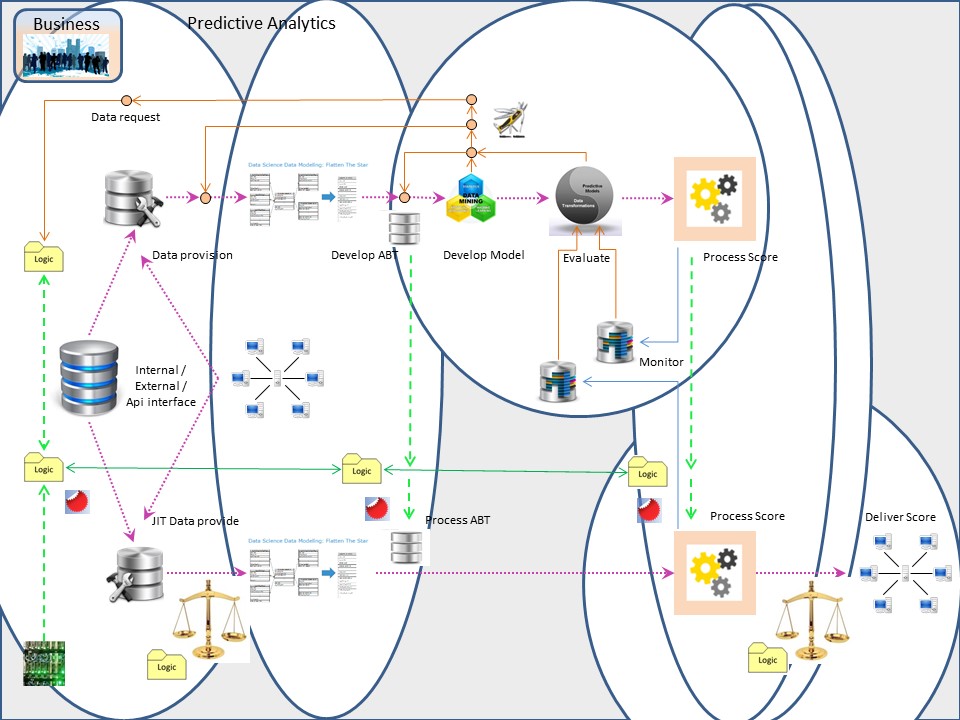

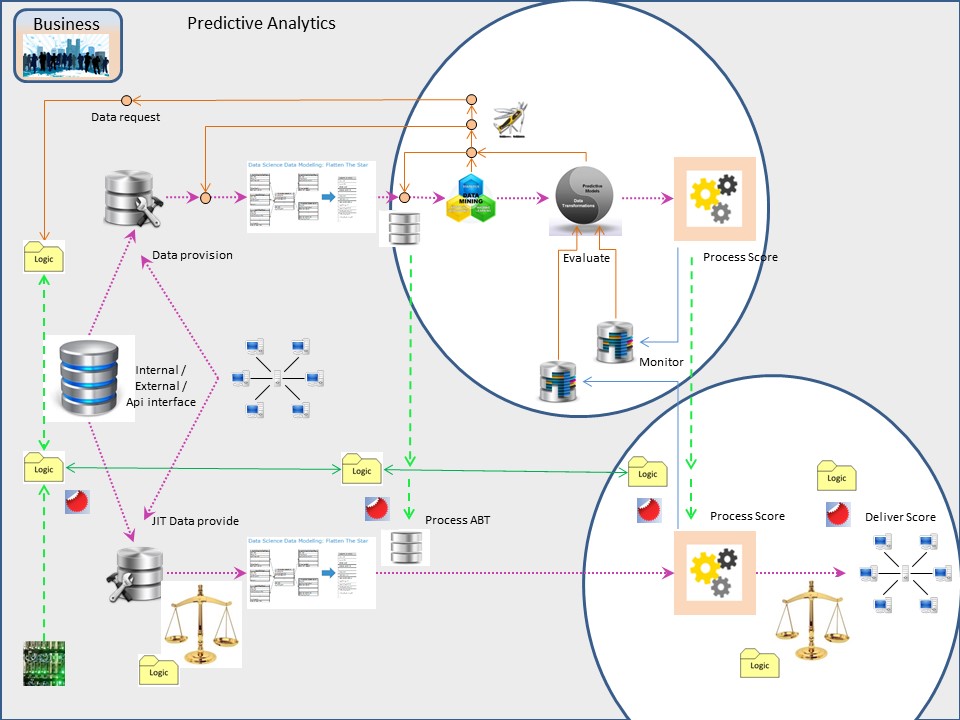

Building on the ALC-v2 into an ALC-v3 process.

The business process life cycle has three moments where releases are implemented:

- 🕒 IT ⚙ input: data prepare (DS), releasing to DS 🎭

- 🕕 DS 🎭 process: releasing ABT and models to IT, approved by BU ⚙ (⚖)

- 🕘 IT ⚙ score: deliver (BU), operational to BU, informing DS ⚖ 📚 🎭

The moving circle with four stages.

The difference between ALC-v2 and ALC-v3 is the move from a single code line to maintain and release to multiple ones.

Release management according to the ALC type2 3GL software development life cycle applies to all promotion lines and release lines.

Just doing the same thing more often.

There are several release lines of artifacts, each with all the life cycle challenges.

Promotion lines, marked by dashed vertical green lines:

- input data. Getting the information transformed into a processable format.

- abt (analytic base table). Transforming the processable format into one usable for analytics tools.

- score model. The model is the business logic, code for business.

Release lines to add for:

- report score. Generation of results.

- delivery score. Delivery of results.

- * validations. Don't let wrong results go out to others.

- * monitoring. Don't get hindered by wrong behavior of machines.

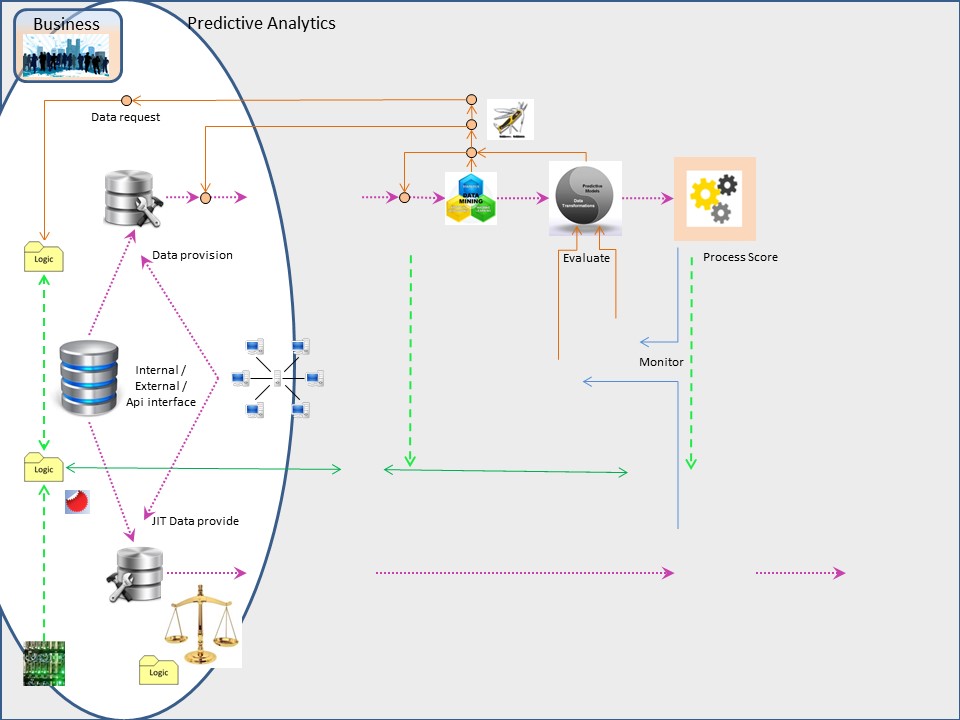

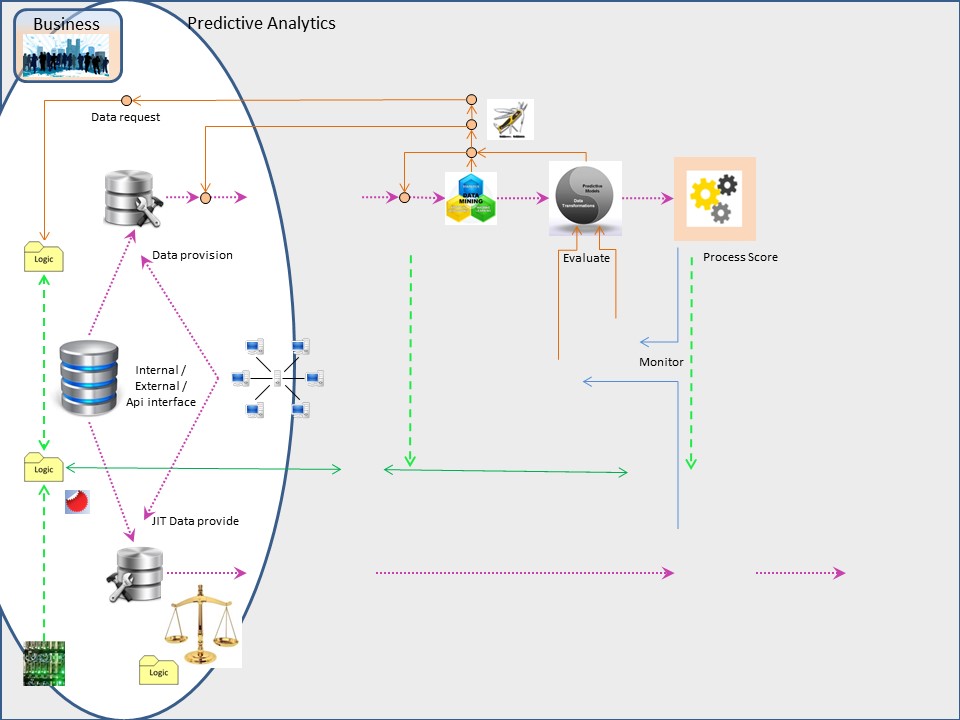

ALC-v3, 1 data provide.

This is not quite classical data preparation (ETL/ELT). The data doesn't need to be in a 3NF star diagram (one fact).

An ER star that allows non-relevant data in the dimensions is sufficient.

retrieve & verify

The process steps involved are:

-00- ⚙ Input data from the DWH can be mass data.

Attention: watch for performance issues while processing it.

-10- ⚙ Input data from direct sources (streaming lambda) should be processed fast enough without using too many resources.

The performance is to be aligned with mass data processing.

-80- ⚙ Validation of the input data (ER relations star):

- A logical number of changes, within expectations.

- Differences with the original source systems.

Notes:

- The number -80- is out of logical order at this place.

It follows the logical order of the validations on result deliveries.

- When an update process on changed facts is implemented:

a working list of the changed facts is additional input for the ABT scoring.
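The -80- input validation and the working list of changed facts can be sketched as follows. This is a minimal illustration, assuming each load is a mapping from a record key to its values. The names `validate_input_load`, `ValidationReport` and the `max_change_ratio` threshold are hypothetical and not part of the process described here.

```python
from dataclasses import dataclass

_MISSING = object()  # sentinel: the key did not exist in the previous load


@dataclass
class ValidationReport:
    changed_keys: list     # working list of changed facts (extra input for the ABT scoring)
    change_ratio: float    # share of current records that are new or changed
    missing_in_load: list  # keys known to the source system but absent from the load
    ok: bool               # True when both checks pass


def validate_input_load(previous, current, source_keys, max_change_ratio=0.10):
    """Compare a new load with the previous load and with the source system keys.

    previous, current: dict mapping record key -> record values (assumed shape).
    source_keys: keys reported by the original source system.
    max_change_ratio: hypothetical threshold for "within expectations".
    """
    changed = sorted(k for k, v in current.items() if previous.get(k, _MISSING) != v)
    ratio = len(changed) / len(current) if current else 0.0
    missing = sorted(set(source_keys) - set(current))
    ok = ratio <= max_change_ratio and not missing
    return ValidationReport(changed, ratio, missing, ok)


previous = {1: "a", 2: "b", 3: "c", 4: "d"}
current = {1: "a", 2: "B", 3: "c", 4: "d"}
report = validate_input_load(previous, current, source_keys={1, 2, 3, 4}, max_change_ratio=0.5)
print(report.changed_keys, report.change_ratio, report.ok)
```

The threshold is a business choice. A load that changes far more records than usual is more likely a technical fault than a real change in the facts.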

The first bundle for release management:

Parallel development - test

The development of the input data connections, data mart - score mart can connect to test (fake) business data.

The technical connectivity and data descriptions (metadata) are functionalities in scope.

Building models (data mart) is only possible using real production data extracts. As a result, an analytics environment needs parallel development and parallel testing.

DS

🎭 DS models (D) on delivered, IT-validated data (datamart - score mart = T).

IT

⚙ IT develops "score reporting" and delivery using the ABT, model code and data (score mart = T) handed over from DS.

The score delivery is developed and tested with a test business data connection.

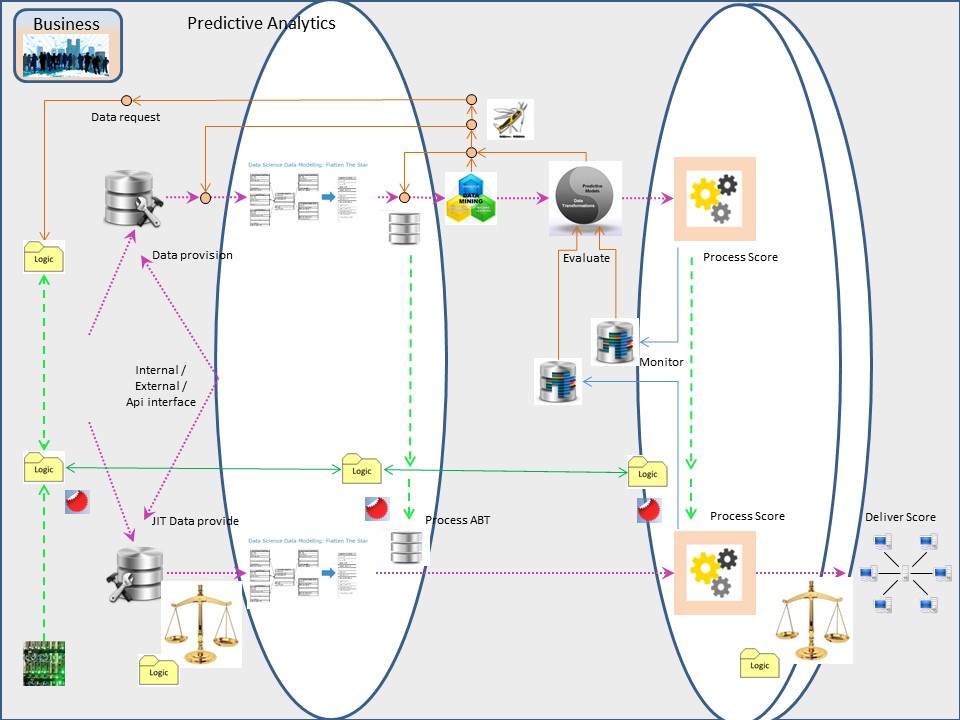

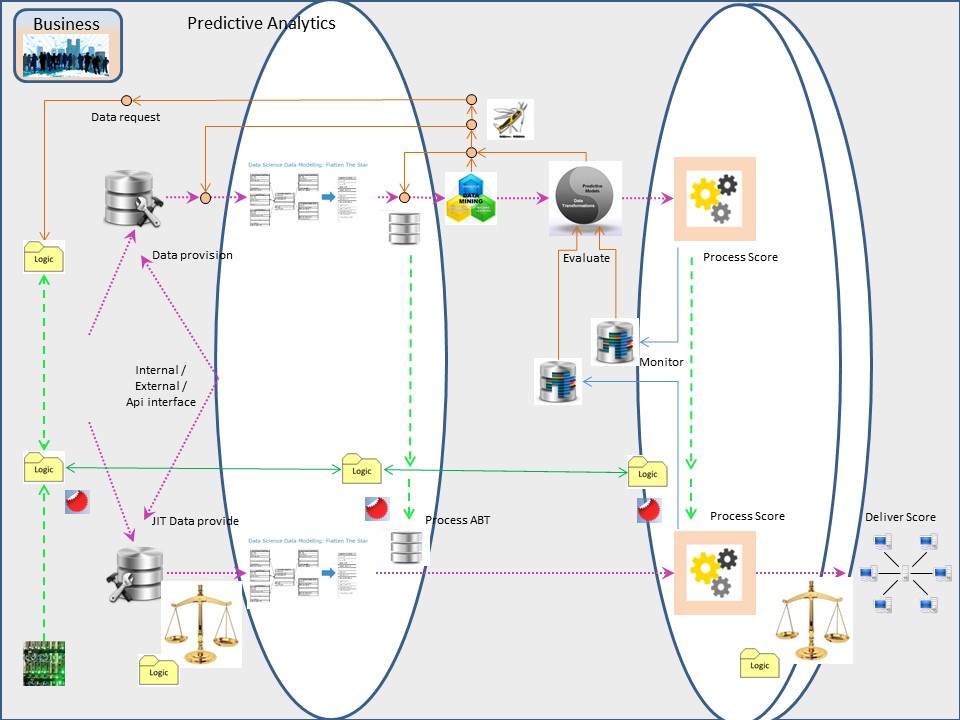

ALC-v3, 2 - 3 ABT & model.

Model development & releasing:

- The scoring, running the analytical model operational, is the commonly accepted release management line.

- Doing release management assumes that the same tools and unchanged runnable code are applicable for building the model and running it.

- Having multiple analytical iterations active on the same data is possible and makes sense for score variations.

The process steps involved are:

-20- 🎭 Optional: split iteration start.

-30- 🎭 Data preparation, features. (first vertical line)

-40- 🎭 Scorings (decision logic).

-50- 🎭 Optional: split iteration end. (second vertical line)

The second release management bundle:

The process steps involved are (continued):

-60- ⚙ Prepare data, documents. Change detection current - previous results.

-70- ⚙ Archive changed score results by accumulating cases.

Marked with timestamps and score-model versions.

-80- ⚙ Validate results: logical value expectations, number of changes.

These are not visible as green vertical lines but as marked activities (bottom right).
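Steps -60- and -70- can be sketched as below. This is a minimal illustration, assuming score results are held as a mapping from a case identifier to a score. The function names, the record layout and the sample values are hypothetical.

```python
from datetime import datetime, timezone


def detect_changes(previous_scores, current_scores):
    """Return the cases whose score is new or differs from the previous run."""
    return {
        case: score
        for case, score in current_scores.items()
        if case not in previous_scores or previous_scores[case] != score
    }


def archive_changes(archive, changes, model_version, run_time=None):
    """Append changed scores to the archive, marked with timestamp and model version."""
    run_time = run_time or datetime.now(timezone.utc)
    for case, score in sorted(changes.items()):
        archive.append(
            {
                "case": case,
                "score": score,
                "model_version": model_version,
                "run_time": run_time.isoformat(),
            }
        )
    return archive


previous_run = {"c1": 0.2, "c2": 0.7}
current_run = {"c1": 0.2, "c2": 0.9, "c3": 0.5}
archive = archive_changes([], detect_changes(previous_run, current_run), model_version="v3.1")
print(len(archive), [row["case"] for row in archive])
```

Only changed cases are appended, so the archive accumulates a history per case without storing every full run.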

Parallel development - test

The metadata for "data provide" and for model development is to be segregated.

cooperation:

parallel development

parallel test

An easy way to do that is within the shared development: duplicating those data definitions and connecting them by dummy copy jobs, which completes the data lineage.

A good collaboration between (analytics operations) and (analytics development) is a prerequisite for planning the scheduling of the processes.

ALC-v3, 4 - 5 Delivery & model information.

Model deployment & running:

- Delivering scores to an operational environment should not be possible in the development - test of the operational environment.

- A DR (Disaster Recovery) environment, shadow production acceptance, should be capable of easily switching the external delivery on and off.
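The two delivery rules above can be sketched as a guard in front of the delivery step. This is a minimal illustration under assumptions: the `Environment` names, the `external_delivery_enabled` switch and the `send` callback are hypothetical and stand in for whatever the real environment configuration provides.

```python
from enum import Enum


class Environment(Enum):
    DEVELOPMENT = "development"
    TEST = "test"
    DR = "dr"  # disaster recovery, shadow production acceptance
    PRODUCTION = "production"


def may_deliver(environment, external_delivery_enabled):
    """Decide whether scores may leave for the operational environment."""
    if environment in (Environment.DEVELOPMENT, Environment.TEST):
        return False  # delivery from development - test is never allowed
    # DR and production honour an explicit on/off switch
    return bool(external_delivery_enabled)


def deliver_scores(scores, environment, external_delivery_enabled, send):
    """Call `send` only when delivery is allowed; return the number of scores sent."""
    if not may_deliver(environment, external_delivery_enabled):
        return 0
    send(scores)
    return len(scores)


outbox = []
print(deliver_scores({"c1": 0.5}, Environment.TEST, True, outbox.append))
print(deliver_scores({"c1": 0.5}, Environment.DR, True, outbox.append))
```

Keeping the switch outside the scoring code means the same released code can run in DR and in production, with only the configuration differing.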

The process steps involved are:

-80- ⚙ Validation of results (iteration optional).

-90- ⚙ ⚖ 📚 🎭 Preparing datasets and documents extracted from score results and previous run results. Change detection.

Delivering operational score results is a shared responsibility.

These are not visible as green vertical lines but as marked activities inside circles.

The third release management bundle:

Model explanation

The production data (datamart) used for the modelling should be archived with the modelling tool version and with all intermediate steps.

💣 The size of the data makes that impossible. It is easily 10-60 GB or far higher.

Release management tools used with code artifacts are not able to support this. A backup/restore approach is feasible.
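One way to tie a backup to a model release is a small manifest stored next to the code, while the large files themselves go to backup storage. The sketch below is an assumption, not a method described on this page: `build_manifest` and its fields are hypothetical, and only the Python standard library is used.

```python
import hashlib
from pathlib import Path


def build_manifest(files, tool_version, model_version):
    """Describe archived modelling files so that a restored backup can be verified."""
    entries = []
    for path in map(Path, files):
        digest = hashlib.sha256()
        with path.open("rb") as handle:
            # read in chunks, because the extracts can be tens of gigabytes
            for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                digest.update(chunk)
        entries.append(
            {
                "file": path.name,
                "bytes": path.stat().st_size,
                "sha256": digest.hexdigest(),
            }
        )
    return {
        "tool_version": tool_version,
        "model_version": model_version,
        "files": entries,
    }
```

The manifest is small enough for a release management tool, and after a restore the checksums show whether the data still matches what the model was built on.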

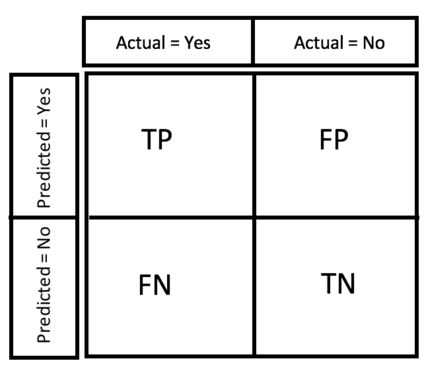

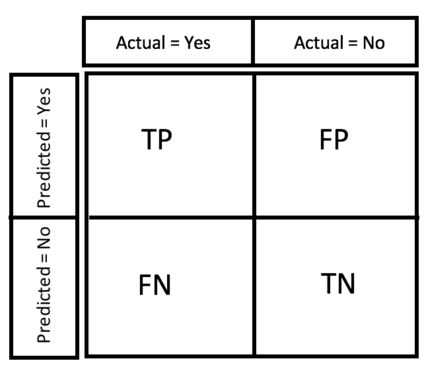

Basic model performance:

right-metric-evaluating ML (kdnuggets confusion matrix).

There are many metrics to explain what the balance is. The same kind of numbers should be produced from the operational score results to measure the real performance (BI, Business Intelligence).
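A few of these metrics follow directly from the confusion matrix. The sketch below assumes binary outcomes (True is the positive class). The function names and the sample labels are hypothetical, and the same functions could be fed with operational score results once the real outcomes are known.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)
    tn = sum(1 for a, p in pairs if not a and not p)
    fp = sum(1 for a, p in pairs if not a and p)
    fn = sum(1 for a, p in pairs if a and not p)
    return tp, tn, fp, fn


def basic_metrics(tp, tn, fp, fn):
    """Accuracy, precision and recall; 0.0 when a denominator is empty."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


actual = [True, True, False, False, True]
predicted = [True, False, False, True, True]
print(basic_metrics(*confusion_counts(actual, predicted)))
```

Which metric matters most depends on the business cost of a false positive versus a false negative, which is the balance mentioned above.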

Alternatives:

dmg org

dmg org (4.3 model explanation.)

PMML provides applications a vendor-independent method of defining models so that proprietary issues and incompatibilities are no longer a barrier to the exchange of models between applications.

It allows users to develop models within one vendor's application, and use other vendors' applications to visualize, analyze, evaluate or otherwise use the models.

MLmatters (wagstaff)

This paper identifies six examples of Impact Challenges and several real obstacles in the hope of inspiring a lively discussion of how ML can best make a difference.

Aiming for real impact does not just increase our job satisfaction (though it may well do that); it is the only way to get the rest of the world to notice, recognize, value, and adopt ML solutions.

Step by step, Travelling the unexplored.

⚠ Challenge: remaining with decisions invented only by humans.

The ALC-v3 is more complicated than giving an order to create or change code (software). The decision on simple, required business logic is moving away from human decision makers.

Those changes are disturbing for decision makers.

⚠ Challenge: human understandable explanations of ML decisions.

The underpinning of human-guided machine learning decisions is not well settled: not by ICT, not by the business, and not by data scientists.

There is a general public aversion to profiling. Legal guidelines for cases where natural persons are involved are given, e.g., in the GDPR.

⚠ Challenge: change in sizing computer resources, technical performance & tuning.

The old paradigm was that machines for operational production are better than the ones used at development - test.

That was true when hardware was much more expensive than human working hours and software was coded by humans.

The machines used for modelling need far more computer resources than those at the production layers. As hardware has become cheap, the cost argument is gone.

The issue: technical design paradigms have not been adjusted to modern requirements.

Transforming processes.

Change is the only constant factor of a journey. Never knowing for sure what is next. Changing fast is exploring where no one has gone before.

The people around ICT are a different kind of species than the ones running the business.

Understanding the business during fast transitions is another world.

