Devops Data - practical data cases

Lineage, value stream, governance, operations

Lineage Patterns as operational constructs.

Data lineage usage with XML and JSON: why is it critical?

There are a lot of questions to answer:

📚 What information is the data describing?

⚙ What are the relationships between data elements?

🎭 Who is using the data, and for which process?

⚖ Is there an inventory of the information being used?

🔰 Somewhere in a loop of patterns ..

Most logical back reference:

previous.

Contents

| Reference | Topic | Squad |
| --- | --- | --- |
| Intro | Lineage Patterns as operational constructs. | 01.01 |
| process lineage | Value stream events - lineage. | 02.01 |
| pull events | Value stream events, intake up to picking. | 03.01 |
| push events | Value stream events, produce & delivery. | 05.01 |
| BI lineage | Generic Analytical services - BI transformations. | 05.01 |
| What next | Events Lineage - Executing & Optimisations. | 06.00 |
| | Dependencies other patterns | 06.02 |

Progress

- 2020 week 06

- Page filled with new content. Only some of the data lineage is old.

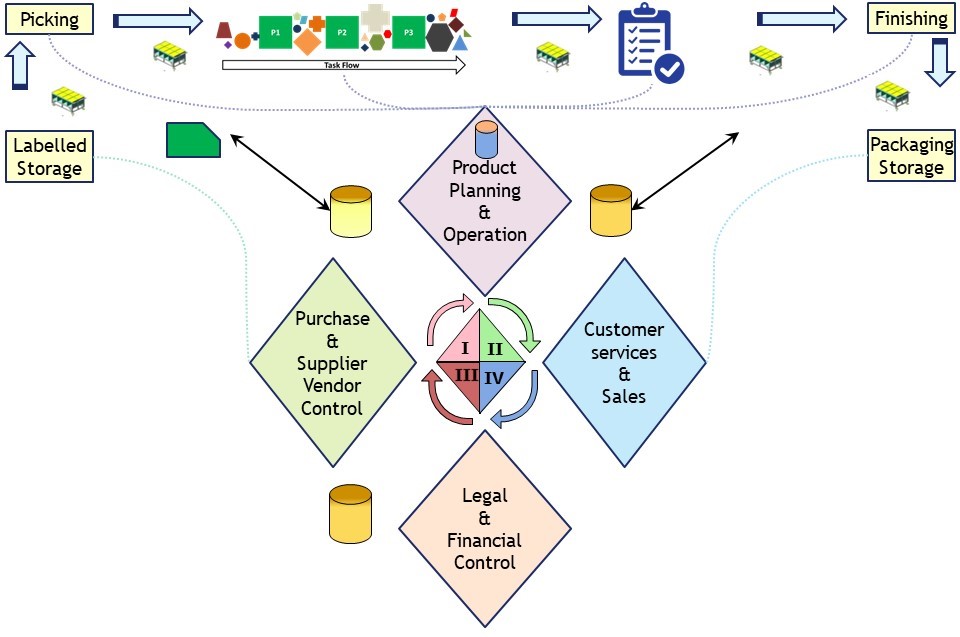

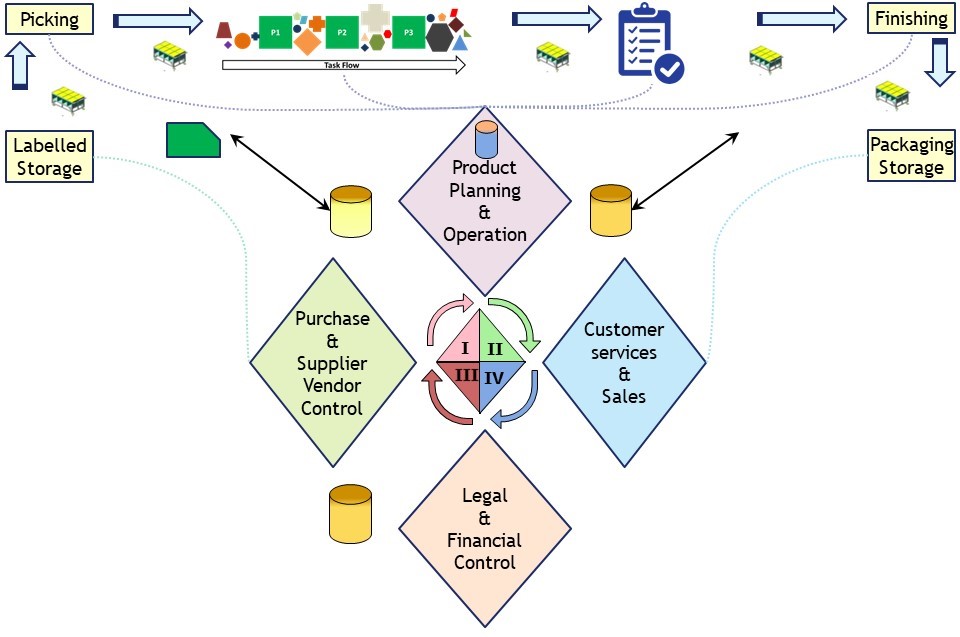

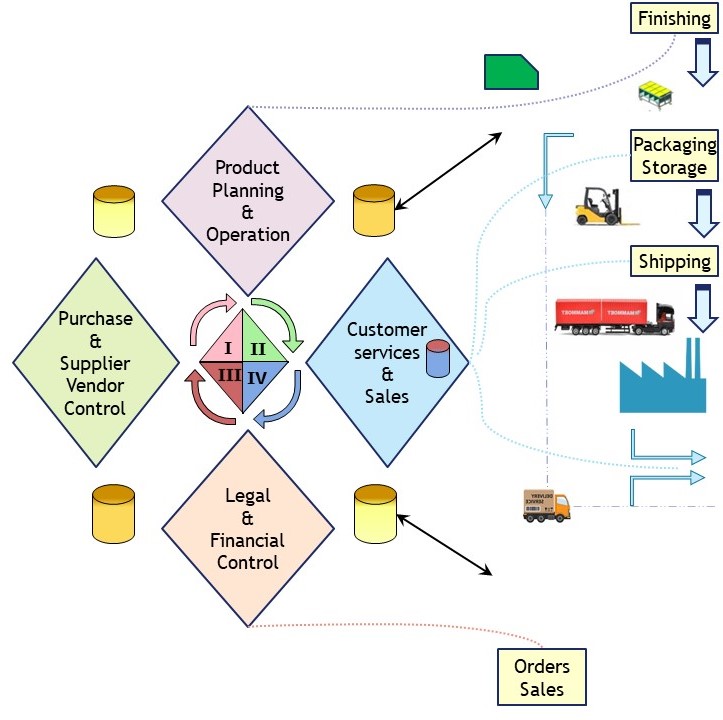

Duality service requests

sdlc: The value stream is linear and has several responsible parties.

Each party can have its own administration but needs alignment with its two neighbours.

bianl: Each party can have its own way of optimisation. Those details are appropriate for process mining.

There are four decoupling lines for cooperation; they serve communication and analytics. Consolidation is the fifth.

bpm tiny: In a small organisation there is no need to split up into smaller units.

There is also no requirement to make the processes very detailed with strictly assigned responsibilities.

bpm big: Splitting up big environments into smaller ones will make the units more manageable but will require more managed cooperation.

Generic services as a new phenomenon.

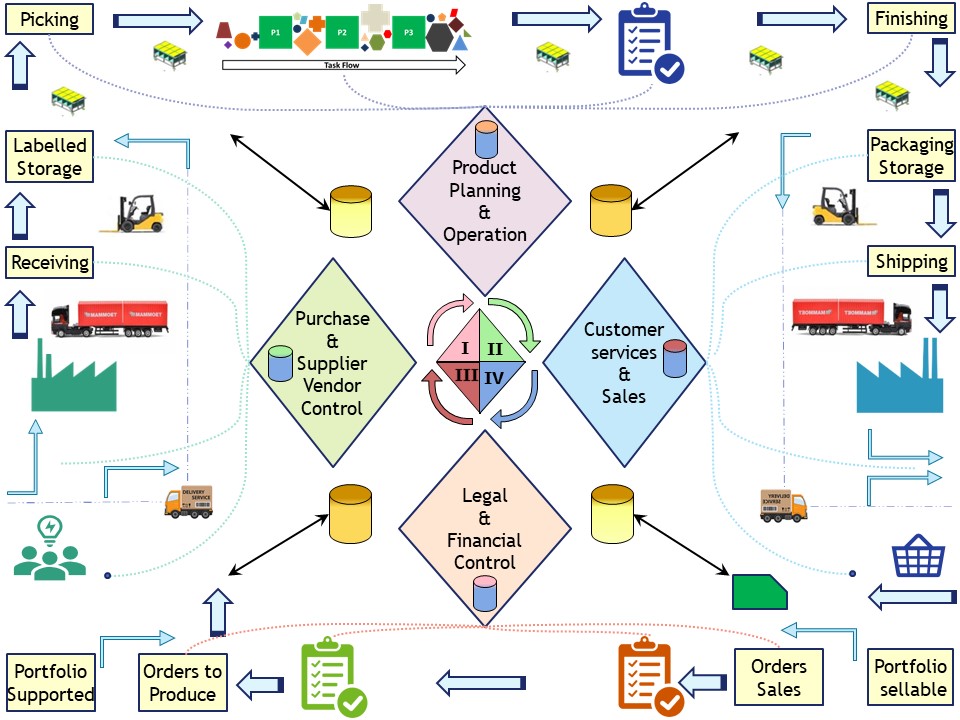

Value stream events - lineage.

The organisational process from "service request, initiator" to "delivery, actor" is linear.

Some events cannot be started before the previous one is completed. The delivery often happens at a single moment: the handover of the product.

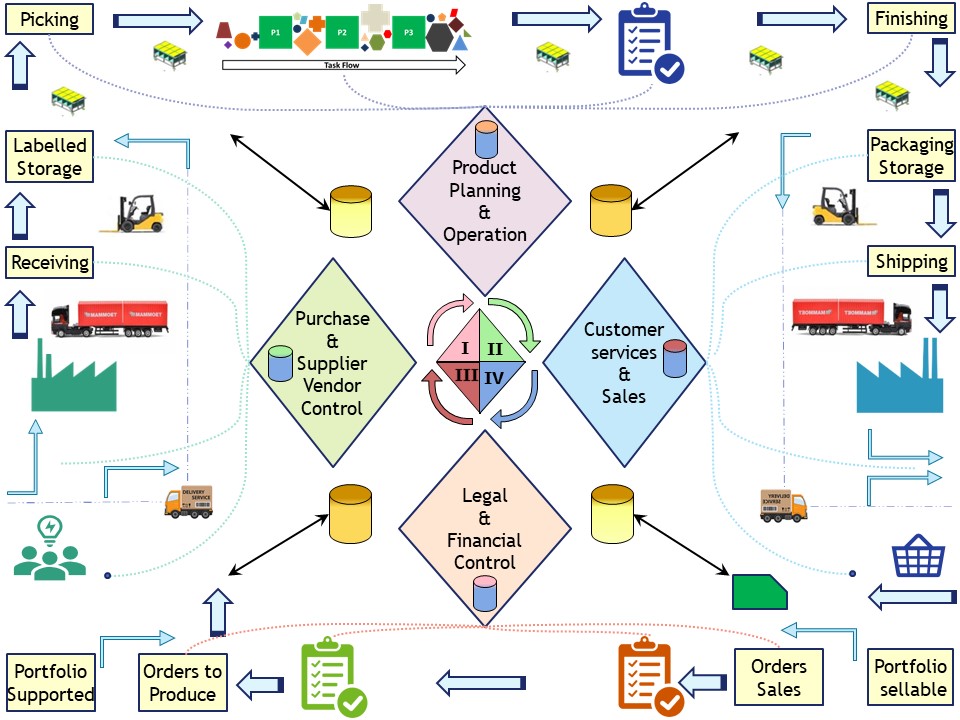

Events in the value stream process (WMS).

The abstraction of this statement is very generic: it is applicable to any product, physical or administrative, not just to software systems.

Events are marked by a date-time stamp at the most detailed level. At a summarisation level for management information a date stamp is sufficient.

Issues:

- The technical representation as data is not standardized.

- The goal and use of an event is not standardized.

- What events are important in the value stream is not always clearly defined.

- Stakeholders in partial processes do not speak the same language about artefacts and/or events.
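
As an illustration of what a standardized event representation could look like, here is a minimal sketch. The class name `ValueStreamEvent`, its fields and the sample orders are assumptions made for this example, not an existing standard. It keeps a full date-time stamp at the detailed level and reduces it to a date stamp for management summaries.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class ValueStreamEvent:
    """One event in the value stream (hypothetical field names)."""
    order_id: str          # assumed identifier of the order
    event_no: int          # position of the event in the value stream
    name: str              # agreed name of the event
    occurred_at: datetime  # detailed level: full date-time stamp

    def to_json(self) -> str:
        # Serialise with an ISO 8601 timestamp so every party reads it the same way.
        record = asdict(self)
        record["occurred_at"] = self.occurred_at.isoformat()
        return json.dumps(record, sort_keys=True)


def events_per_day(events):
    """Summarise to date level, which is enough for management information."""
    return Counter(event.occurred_at.date() for event in events)


events = [
    ValueStreamEvent("A-1", 1, "order received", datetime(2020, 2, 3, 9, 15)),
    ValueStreamEvent("A-2", 1, "order received", datetime(2020, 2, 3, 16, 40)),
    ValueStreamEvent("A-1", 2, "sales order verified", datetime(2020, 2, 4, 11, 5)),
]
daily = events_per_day(events)
```

Agreeing on one record layout like this addresses the first issue in the list; the goal and use of each event still have to be agreed between the stakeholders.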

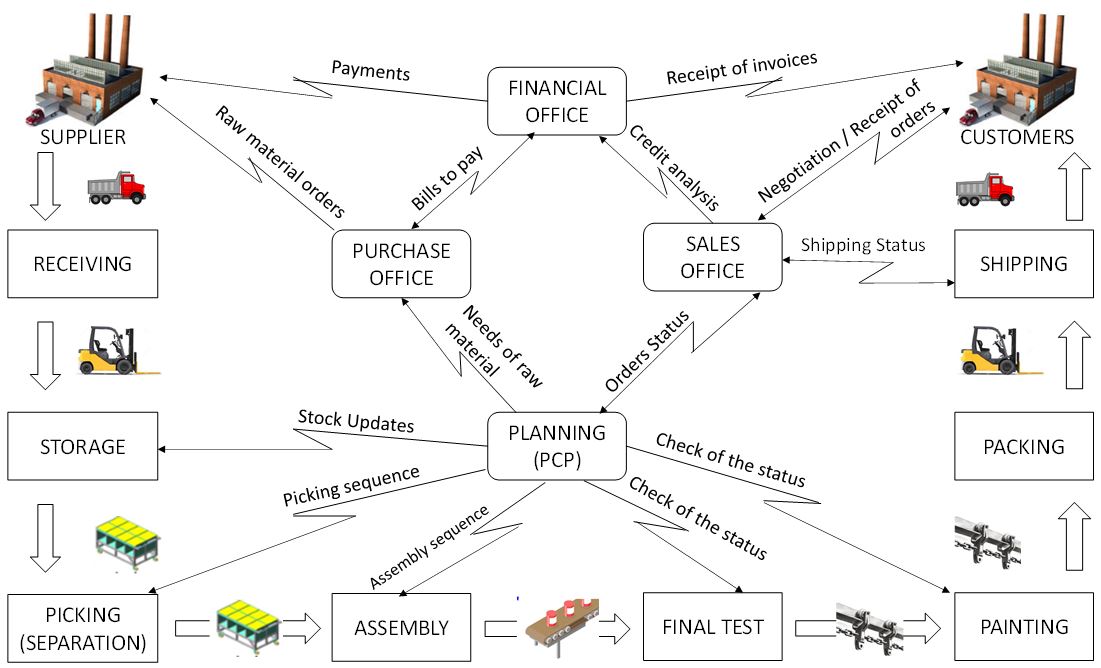

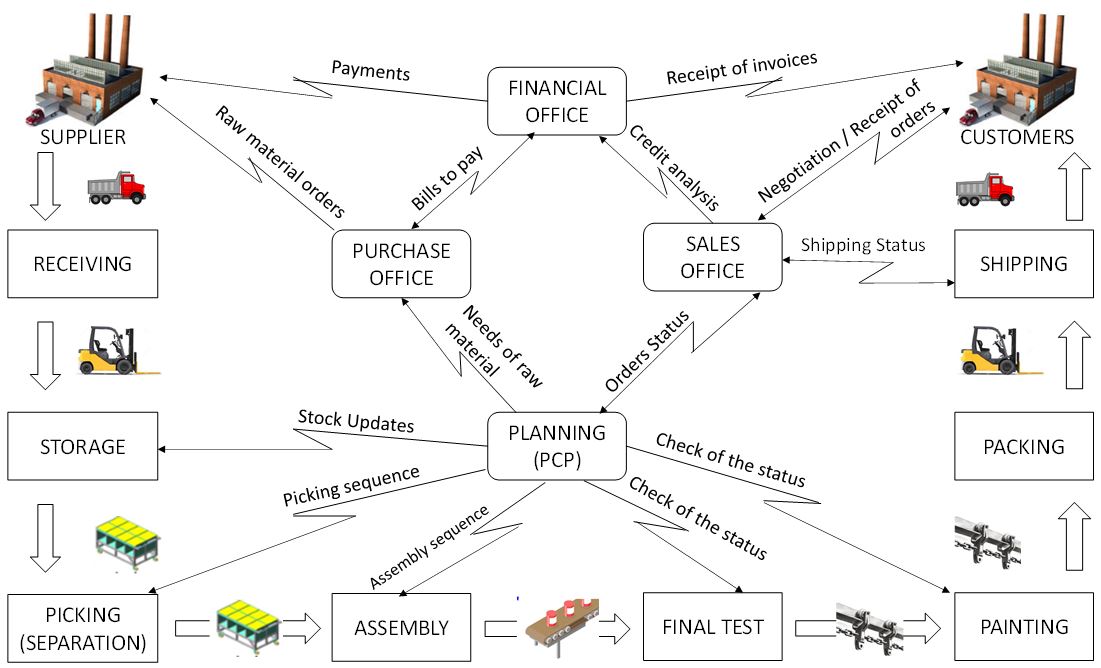

Searching for what has already been done on this, the following figure was found: 👓

"Assessment of the implementation of a Warehouse Management System in a multinational company of industrial gears and drives" by Rafael de Assis, Juliana Keiko Sagawa (2018)

The focus of that paper is the WMS (software system), not the manufacturing or sales flow. It nicely shows the events and linear steps controlled by four different departments (stakeholders).

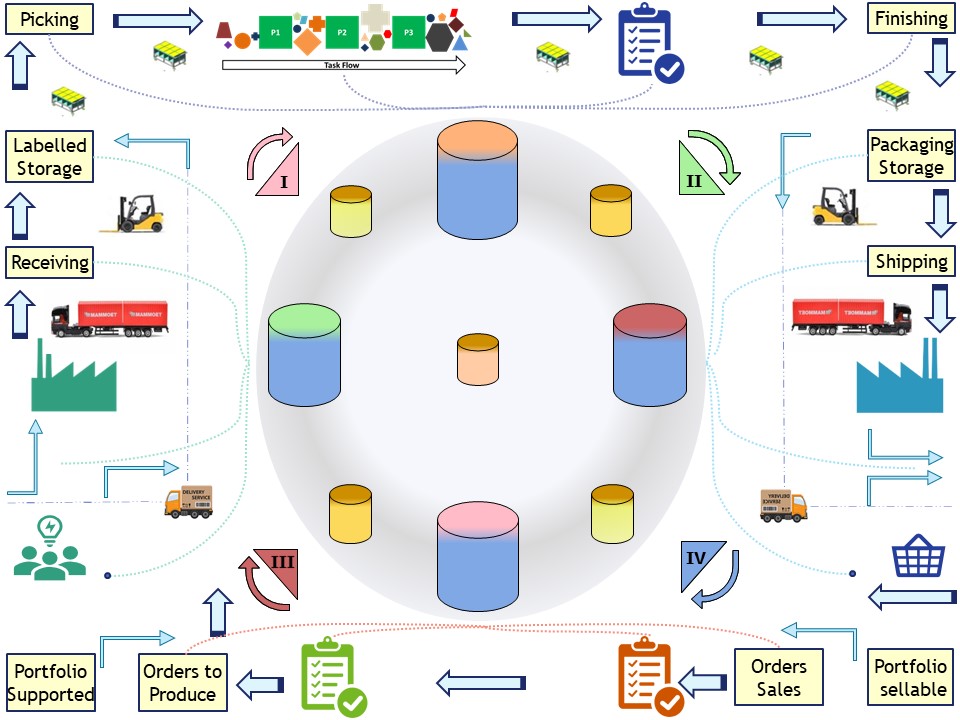

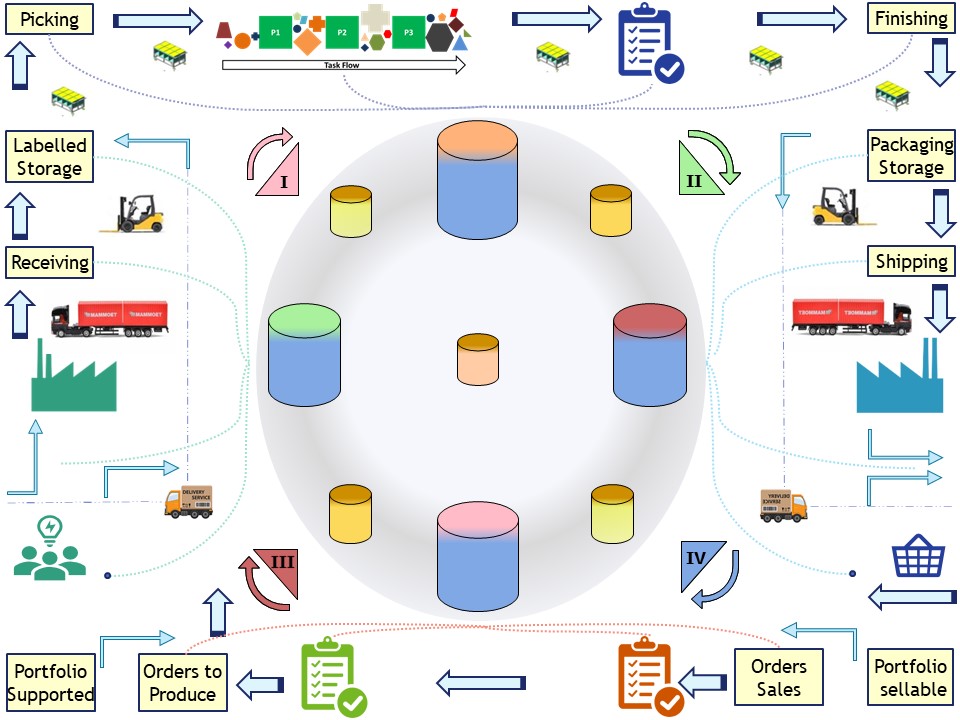

Events in the value stream process (full abstract level).

I made my personal version with a clockwise process, adding the pull sales and intake process.

There are horizontal, vertical and diagonal lines. The clockwise lineage is:

- IV The pull request, initiated at the right (basket pull)

- IV-III Verifying requests on conditions (reject) before producing, at the bottom

- III-I Inbound warehouse preparing the collection of external & internal materials

- I-II Manufacturing with quality check (push) at the top

- II-IV Delivering through possibly multiple channels at the right, outbound warehouse

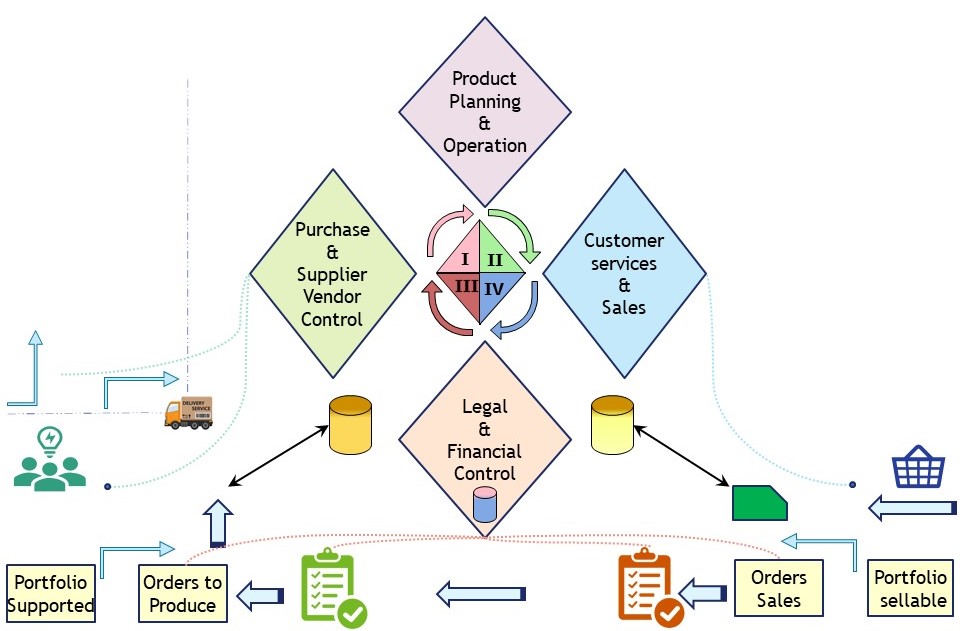

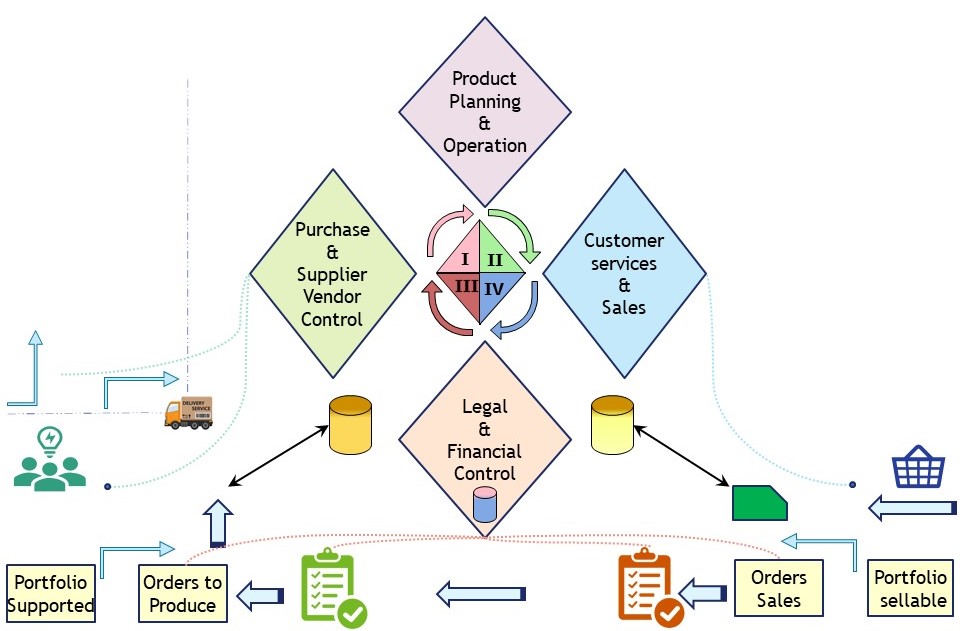

Value stream events, intake up to picking.

The full circle is split: this half covers getting orders and everything done before "manufacturing the product".

In a pull process the start is the customer demand. Marketing can influence that demand. The demand is for products that are in the (sellable) portfolio.

Getting demand requests, verifying for acceptance.

Starting (event 1 ✅): An order is coming in.

Sales is expected to communicate its sales expectations for a longer period into the future to the others. Results from the past are historical facts; there may be some closing actions with adjusted results to communicate.

Assuming there is some relationship with the customers requesting a service, the first question is whether you want to work on that request and under what conditions.

Creditworthiness, reliability and trustworthiness in the customer relationship are reviewed.

Conditions may be recorded, such as paying in advance, payments spread over successive deliveries, the quality of the product and the expected delivery moment.

Goal (event 2 ✅): the sales order is verified.

👓 see figure. The second question is whether it is possible to deliver that product under the conditions stated in the demand request.

Materials needed for the assembly could be missing. Those may need to be purchased, or they are something the customer has to supply.

Support for a previously sold product is a valid request in the support portfolio. Including something from the customer, like a passport photo, could be a requirement of the product.

Goal (event 3 ✅): order has clearance to produce.

Continuation clearance preparation

Before the product can be completely manufactured, all component materials must be available in time.

Bill of Materials (Wikipedia)

It is the responsibility of "Supplier, Supplier vendor control" to fulfil these requirements.

Just-in-time delivery of all materials versus having enough in house to feed the line efficiently is a balance that must be sought continuously.

There are external vendors to manage, but also internal inventory and information and/or materials from the customer intended for the product.

When there are unsolvable issues or the needed time is too long, communication with the other stakeholders is required.

What counts as "collection completed" is to be agreed on with "product planning".

Goal (event 4 ✅): order has clearance to manufacture.
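
The "collection completed" check can be sketched as a comparison of the bill of materials with what is available. This is a minimal illustration only: the function name `missing_materials`, the material names and the quantities are hypothetical, and a real agreement with product planning may use different rules.

```python
def missing_materials(bill_of_materials, available):
    """Return the shortfall per material; an empty dict means the collection is complete.

    Both arguments map a material name to a quantity (assumed units: pieces).
    """
    shortfall = {}
    for material, needed in bill_of_materials.items():
        in_house = available.get(material, 0)
        if in_house < needed:
            shortfall[material] = needed - in_house
    return shortfall


# Hypothetical bill of materials: vendor parts plus an item supplied by the customer.
bom = {"gear": 4, "housing": 1, "customer_photo": 1}
stock = {"gear": 10, "housing": 0}

shortfall = missing_materials(bom, stock)
cleared_to_manufacture = not shortfall  # event 4 is reached only when nothing is missing
```

With the sample data the order is not cleared: one housing must still be purchased and the customer item has not arrived.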

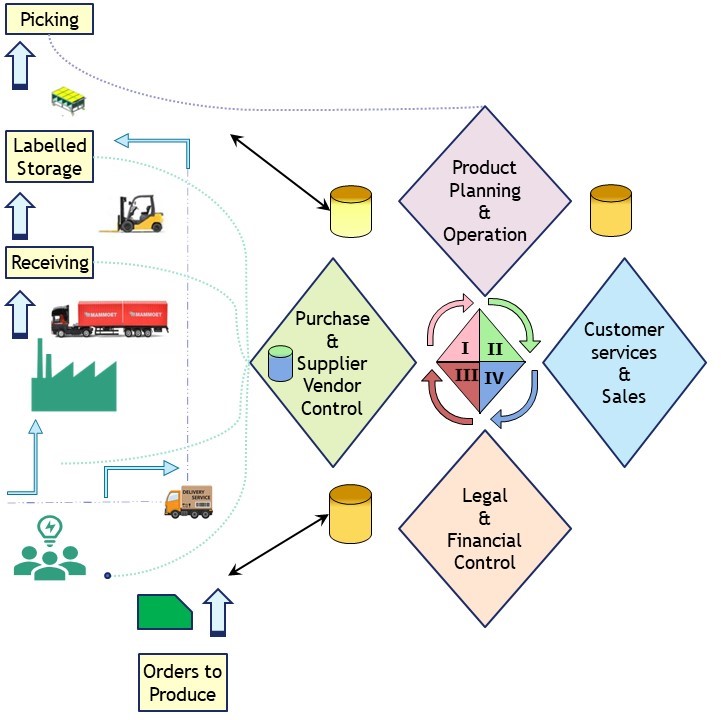

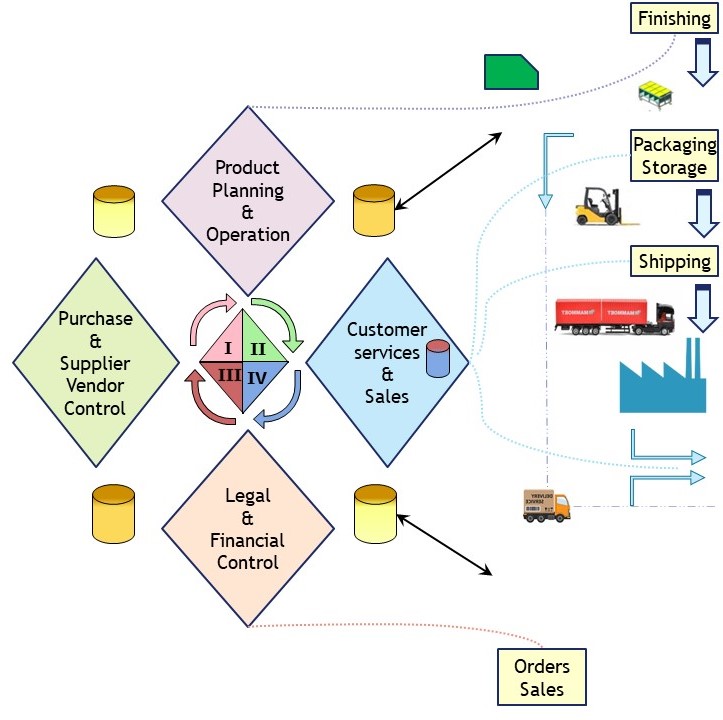

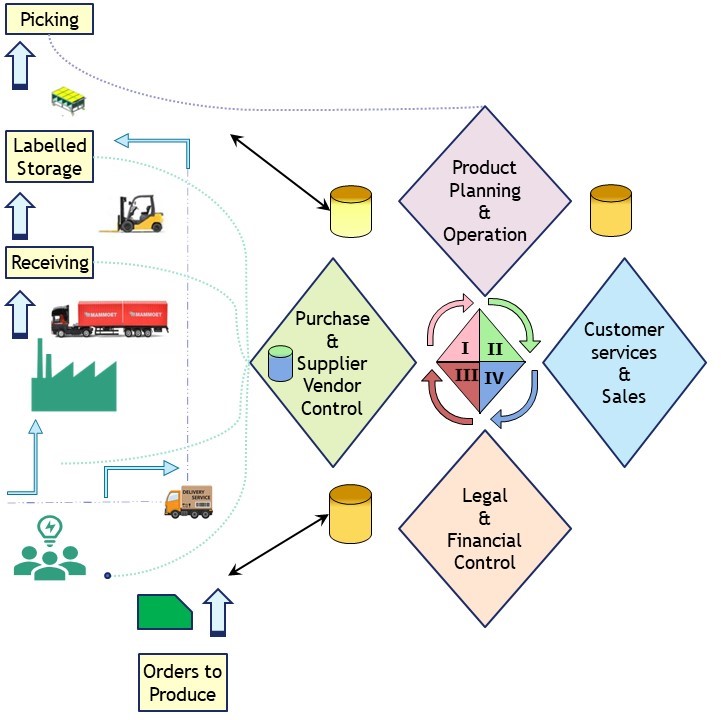

Value stream events, produce & delivery.

The full circle is split: this half covers "manufacturing the product" and delivery.

The push delivery is the manufacturing with the available materials, working from a list of what is requested to be delivered at agreed moments.

Manufacturing push fulfilment

Production planning is continuously seeking a balance between available production capacity and the demand for delivered products.

The production capacity may vary over time due to maintenance of machines (robots) and/or available workers (human resources).

This small part of the whole product cycle has got the most attention in lean manufacturing. The agile manifesto for creating software has an even more limited focus.

The assembly line is creating products. The variation and the number of steps in the assembly line are undefined.

The products should have no defects, but that is not certain at this phase.

One of the goals of lean manufacturing is to decrease loss by decreasing the number of products with defects.

Goal (event 5 ✅): start assembly of product.

👓 see figure. The second question is whether the products are really without defects. Non-destructive testing, when applicable, verifies all products.

Quality control with destructive effects is only possible on products that are not going to be delivered (sampling). It is required for product quality purposes.

Goal (event 6 ✅): Verification product without defects (correct or reproduce).

Goal (event 7 ✅): Certificated products having the finishing touch completed.

Delivering product

When the product has got the finishing touch, the delivery phase can start.

Goal (event 8 ✅): Initiate product delivery according to the order definitions.

Delivering a product does not necessarily have a fixed relationship with an address associated with customer attributes.

Delivery is possible through multiple channels. It all depends on what is applicable in a specific situation.

Communication with finance closes the internal business financial cycle.

Goal (event 9 ✅): Deliver to the customer conforming to the sales order conditions.

Goal (event 0 ✅): Verify customer satisfaction with the delivered product.

Payments are events that may be delayed or done in advance. Financial results therefore have other dates than the product deliveries.

Product types match on the abstracted cycle with events

Wondering whether this abstracted approach would be applicable in other situations? Just try it with "products" like:

- desktop services including a ticket system

- collecting business figures from audit reports to build new reports (stock evaluations for trade decisions)

- insurance policies: selling those requires calculating expectations for the future.

- insurance claims: these override the expectations with real experiences, adding a complex of adjusted new expectations.

- mortgages: the duration is many years, while the agreement is made at one moment.

- emergency assistance, e.g. for health.

Car assistance is another option.

Generic Analytical services - BI transformations.

The business process also needs Confidentiality, Integrity, Availability (CIA) and Business Continuity Management (BCM).

These are generic pillars, not for one of the departments in the value stream flow but shared by all.

CIA Confidentiality Integrity Availability

With a BIA (Business Impact Analysis) going through the CIA:

- Confidentiality - Information: consumers, workers, trade and product details.

ICT security is important. Computers are used to store and use information.

A Security Operations Centre (SOC) needs its own analytics environment.

- Integrity - the quality of the product

- Availability - Managing the production assets (machines), which will include computers and software. SAM: Software Asset Management.

Planning maintenance and replacements needs its own analytics environment. The production capacity prediction is important to align.

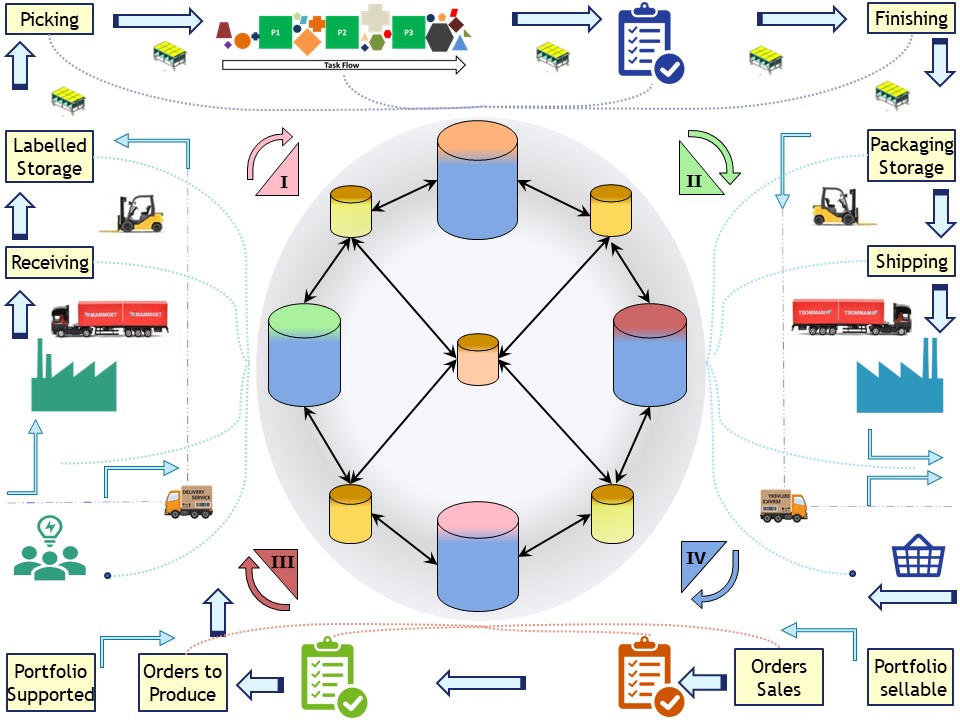

The four stages covering the interactions (yellow containers) are aggregated into a central one for the shared vision.

For all containers, endpoint access to assets must be managed. SOC data is not made visible.

Maintenance, updates and replacements of assets will break the running flow.

Data for the management of assets is not made visible.

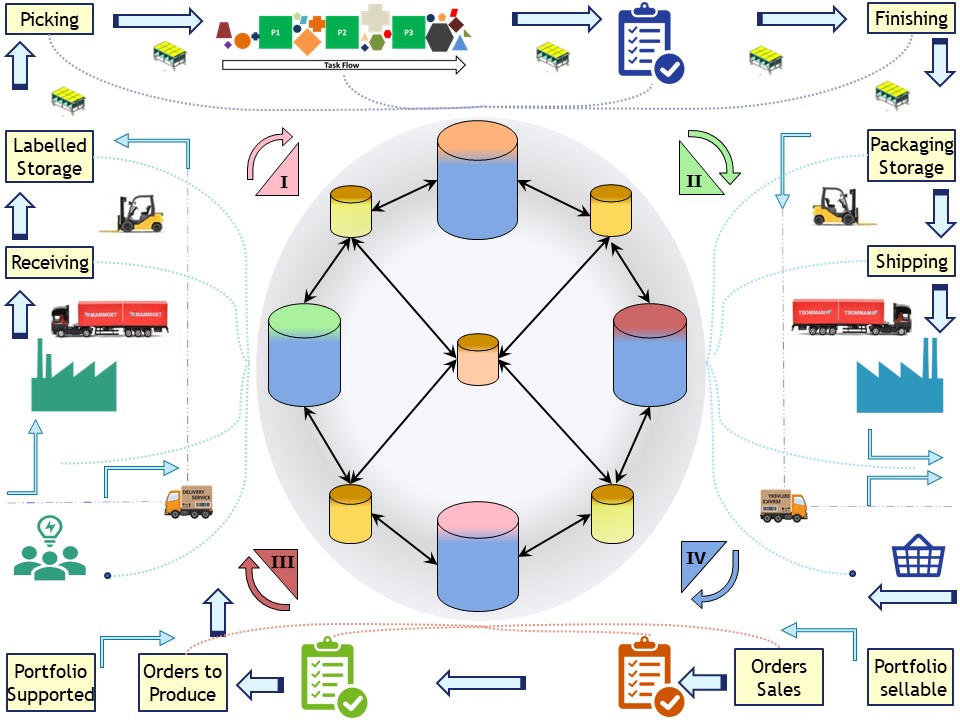

XML/JSON usage or DBMS connections.

All data containers have to exchange data when they are on separated systems. System separation has the advantage that components are more easily replaced.

Information exchange is possible by a vendor-specific DBMS connection or by a more rudimentary file transfer in a format like XML or JSON.

Connections are between:

- the dedicated areas of responsible parties and the shared edges with their counterparts.

- the shared edges of responsible parties and the one central consolidation.
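
A file-based exchange in JSON or XML can be sketched with the Python standard library alone. The record layout and the element name `event` below are assumptions for this example; any real exchange needs a layout agreed between the connected parties.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical record handed over between two responsible parties.
# All values are kept as text so both formats round-trip identically.
event = {
    "order_id": "A-1",
    "event_no": "2",
    "name": "sales order verified",
    "occurred_at": "2020-02-04T11:05:00",
}


def to_json(record):
    return json.dumps(record, sort_keys=True)


def from_json(text):
    return json.loads(text)


def to_xml(record):
    # One child element per field under an assumed root element <event>.
    root = ET.Element("event")
    for key, value in record.items():
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


def from_xml(text):
    root = ET.fromstring(text)
    return {child.tag: child.text for child in root}


json_text = to_json(event)
xml_text = to_xml(event)
```

Because neither format depends on a vendor-specific connection, the sending or receiving system can be replaced without touching its counterpart, as long as the agreed layout stays the same.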

BI ETL, Business Intelligence processes

Extract, Transform, Load (ETL) was for a long time isolated from operational processes.

Keeping all history was an escape when the operational administrative process did not keep it for capacity reasons.

Keeping all history only in the analytical environment could bring new challenges. The data retention policy applies to all information originating anywhere in the organisation.

ETL tooling got nicer options to follow information when used to build processes, while at the same time operational administration software was increasingly realised with standard commercial packages.

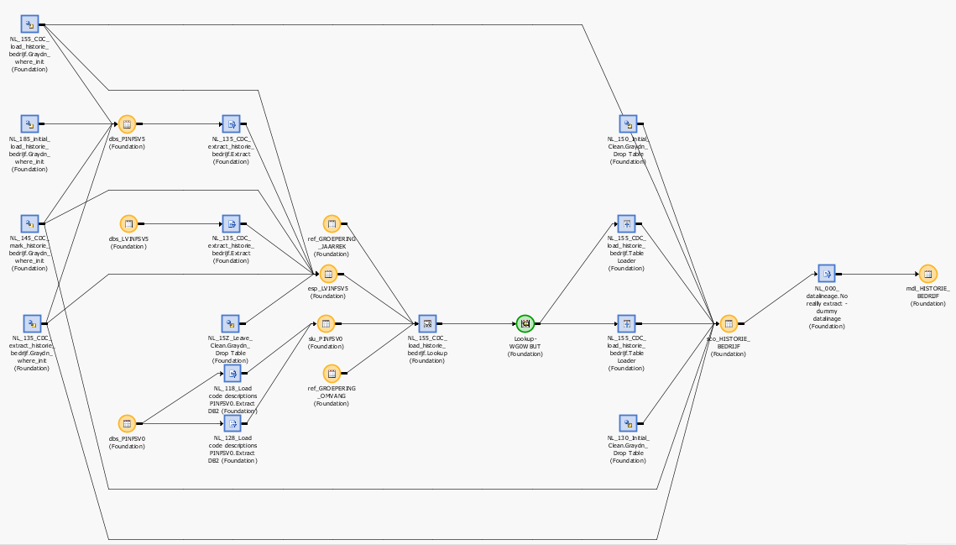

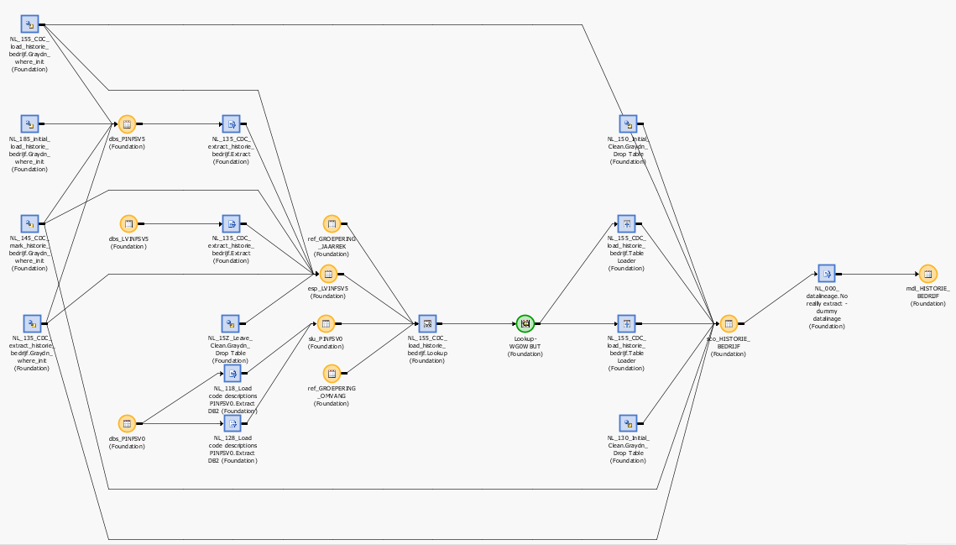

Using ETL tools to build data flows could look like:

When doing data transformations, following the flow is important metadata. It is needed to understand the impact of changing something.

Blue squares represent processes, orange circles data tables. In this flow (monolithic job/program) many tables are processed by transformations.

Using the table registrations it is possible to follow how elements are processed.

It is called 👓 "data lineage".
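
Following the flow from table registrations can be sketched as a walk over a small graph. The process and table names below are invented for the illustration; an ETL tool would supply its own registrations, usually in its own metadata format.

```python
from collections import defaultdict

# Hypothetical registrations: (process, input tables, output tables).
REGISTRATIONS = [
    ("load_orders", ["src_orders"], ["stg_orders"]),
    ("load_customers", ["src_customers"], ["stg_customers"]),
    ("join_sales", ["stg_orders", "stg_customers"], ["dm_sales"]),
    ("aggregate", ["dm_sales"], ["rpt_monthly_sales"]),
]


def build_edges(registrations):
    """Index which tables feed which, in both directions."""
    upstream = defaultdict(set)
    downstream = defaultdict(set)
    for _process, inputs, outputs in registrations:
        for source in inputs:
            for target in outputs:
                upstream[target].add(source)
                downstream[source].add(target)
    return upstream, downstream


def trace(table, edges):
    """Collect every table reachable from `table` along the given edges."""
    seen = set()
    stack = [table]
    while stack:
        for neighbour in edges.get(stack.pop(), ()):
            if neighbour not in seen:
                seen.add(neighbour)
                stack.append(neighbour)
    return seen


upstream, downstream = build_edges(REGISTRATIONS)
origins = trace("rpt_monthly_sales", upstream)   # where does the report come from?
impacted = trace("src_orders", downstream)       # what is affected by a source change?
```

The upstream walk answers the lineage question, the downstream walk answers the impact question for a planned change.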

Events Lineage - Executing & Optimisations.

Pattern: Have 5 business analytics data environments

The lineage of all steps of the operational life cycle of products & services for customers of the organisation consists of those 4 phases, each with a different focus, different interests and different names for details.

Those 4 have a decoupled, shared handover of responsibilities. An additional one for the central consolidated view makes 5 analytics data environments.

Pattern: Any dedicated analytical environment will use central MDM

Master Data Management (MDM) standardises the way elements of information are realised in their data representation.

Which data goes in what technical representation, including numbers, characters, text, temporal and geospatial data, should be subject to strict data governance guidelines.

Trying to adjust this later, when technical conflicts and information loss are happening, is a mission impossible.
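
A central definition of technical representations could be checked in every environment before data is accepted. The sketch below is only an assumption of how such a check might look: the element names, the rules and the name `MASTER_DEFINITIONS` are invented for this example.

```python
from datetime import datetime


def is_iso_datetime(value):
    """Temporal values are assumed to be exchanged as ISO 8601 text."""
    if not isinstance(value, str):
        return False
    try:
        datetime.fromisoformat(value)
    except ValueError:
        return False
    return True


# Hypothetical central definitions: element name -> rule for its representation.
MASTER_DEFINITIONS = {
    "order_id": lambda v: isinstance(v, str) and v != "",
    "quantity": lambda v: isinstance(v, int) and not isinstance(v, bool) and v >= 0,
    "occurred_at": is_iso_datetime,
}


def violations(record):
    """List every way a record deviates from the central definitions."""
    problems = []
    for element, is_valid in MASTER_DEFINITIONS.items():
        if element not in record:
            problems.append(f"{element}: missing")
        elif not is_valid(record[element]):
            problems.append(f"{element}: invalid value {record[element]!r}")
    for element in record:
        if element not in MASTER_DEFINITIONS:
            problems.append(f"{element}: not defined in master data")
    return problems


good = {"order_id": "A-1", "quantity": 3, "occurred_at": "2020-02-04T11:05:00"}
bad = {"order_id": "A-1", "quantity": "3", "occurred_at": "04/02/2020"}
```

Rejecting `bad` at the point of exchange is far cheaper than repairing mixed representations after they have spread over several environments.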

Pattern: Define events in the production cycle important for the organisation.

Those events should be the most important anchor for measurements to get an idea what is going on.

For example:

- Event 1 ✅: An order is coming in.

- Event 2 ✅: the sales order is verified.

- Event 3 ✅: order has clearance to produce.

- Event 4 ✅: order has clearance to manufacture.

- Event 5 ✅: start assembly of product.

- Event 6 ✅: Verification product without defects (correct or reproduce).

- Event 7 ✅: Certificated products having the finishing touch completed.

- Event 8 ✅: Initiate product delivery according to the order definitions.

- Event 9 ✅: Deliver to the customer conforming to the sales order conditions.

- Event 0 ✅: Verify customer satisfaction with the delivered product.
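
Using the events as anchors for measurement can be sketched as computing the time between consecutive events of one order. The timestamps are made up for the example, and treating event 0 as the closing event after event 9 is an assumption based on the order of the list above.

```python
from datetime import datetime

# Order of the events in the value stream; event 0 is assumed to close the cycle.
EVENT_ORDER = [1, 2, 3, 4, 5, 6, 7, 8, 9, 0]

# Hypothetical timestamps recorded so far for one order, keyed by event number.
order_events = {
    1: datetime(2020, 2, 3, 9, 0),
    2: datetime(2020, 2, 3, 15, 0),
    3: datetime(2020, 2, 4, 10, 0),
    4: datetime(2020, 2, 6, 10, 0),
    5: datetime(2020, 2, 7, 8, 0),
}


def lead_times(events):
    """Hours between consecutive recorded events, in value stream order.

    Raises ValueError when an event is stamped before its predecessor,
    because an event cannot start before the previous one is completed.
    """
    recorded = [number for number in EVENT_ORDER if number in events]
    result = {}
    for previous, current in zip(recorded, recorded[1:]):
        delta = events[current] - events[previous]
        if delta.total_seconds() < 0:
            raise ValueError(f"event {current} is stamped before event {previous}")
        result[(previous, current)] = delta.total_seconds() / 3600
    return result


hours = lead_times(order_events)
slowest_step = max(hours, key=hours.get)
```

In the sample data the step from event 3 to event 4 (collecting materials) takes the longest, which is the kind of signal these anchors are meant to give.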

Pattern: Have generic analytics environments for asset management

- 🎯 Asset management, SAM Software Asset Management for measuring & predicting availability, capacity.

- 🎯 Security of information for the confidentiality of information.

Pattern: An easy standard for information exchange between insulated systems

Insulated systems are the standard in realisations. Information is more useful when shared.

Make the sharing of what is really needed simple to implement, safe, monitored and easy to replace.

Pattern: Use data lineage when building software if possible

Following which element goes into which record is very informative. 🎭 Many vendors support this in their ETL tools.

History as memories.

Processing information was done in old times within the technical limitations of those times. There is no reason to keep doing things as they have always been done.

🚧 Computers once had very limited capacity; this has all changed.

- Smarter machines 👓 Industry 4.0 can take advantage of this.

- A management information system can take advantage of this.

🚧 Assuming the management information system must be a central enterprise data warehouse (EDWH) is not in accordance with the warehousing or value stream process flow.

🚧 Additional in-house services based on analytics are needed that are not directly visible when only following the value stream with the main events. They once were so simple that they got no defined place as a key activity.

Dependencies other patterns

This page is a pattern on data lineage with XML and JSON.

Within the scope of metadata there are more patterns, like exchanging information (data), building private metadata and securing a complete environment.

