Design Data - BI, Analytics

RO-1 Introductions for functional details at realisations

RO-1.1 Contents

⚙ RO-1.1.1 Looking forward - paths by seeing directions

A reference frame in mediation innovation

When the image link fails, 🔰 click here.

A link back to the main topic is available in a shifting frame.

Contexts:

◎ r-steer the business

↖ r-shape mediations change

↗ r-serve split origin

↙ technical details

↘ data value stream

Fractal focus for functionality by technology

The cosmos is full of systems and we are not good at understanding what is going on.

In an ever more complex and fast-changing world we search for more certainty and predictability, where we would be better off understanding the choices we face under uncertainty and unpredictability.

Combining:

- Systems Thinking, decisions, ViSM (Viable System Model), good regulator

- Lean as the instantiation of identification systems

- The Zachman 6*6 reference frame principles

- Information processing, the third wave

- Value Stream (VaSM) Pull-Push cycle

- Improvement cycles: PDCA, DMAIC, SIAR, OODA

- Risks and uncertainties for decisions in the now, the near and the far future: VUCA, BANI

The additional challenge with all these complexities is that they are full of dualities (dichotomies).

⚙ RO-1.1.2 Local content

⚖ RO-1.1.3 Guide reading this page

The position of this page in the whole

This page is positioned as the functional details split off from the concepts in the Zarf JAbes technology idea for enabling a realisation.

The technology concepts page is a split from the generic technology page (r-serve). That page is part of the generic 6*6 reference frame.

There is no intention to have all chapters completely filled or to achieve a balanced load in the content.

The goal is to collect what I have in a more understandable structure than having it spread over many pages.

| | | | Details |
|---|---|---|---|
| | | | Technology |
| Context | r-serve: SDLC DevOps | Concepts | 🕳 |
| | | | Functional |
| | | | Details |

The entry anchor will be the RO-2 chapters.

An introduction, when applicable, in RO-1.

The impact, when applicable, in RO-3.

The quest for methodologies and practices

⚒ RO-1.1.4 Progress

Done and currently working on:

- 2026 week 4

- Starting to refill this page in a new structure

The topics that are unique on this page

RO-1.2 The technological approach in performance

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

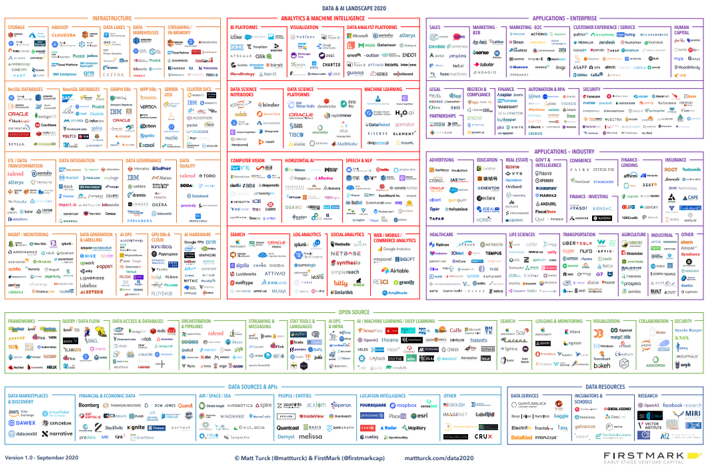

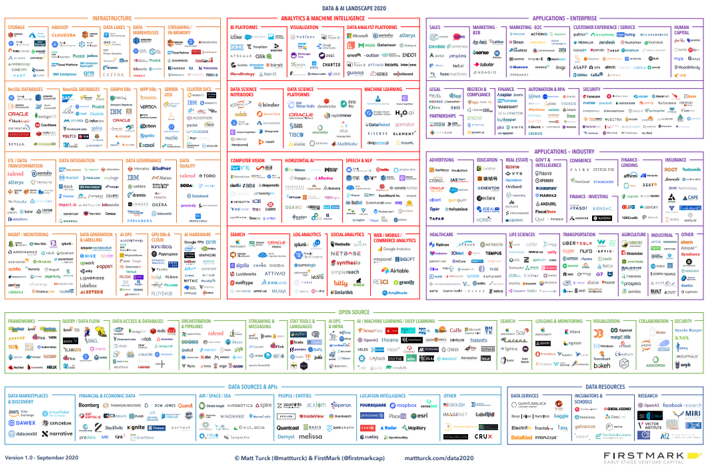

⟲ RO-1.2.1 Info

butics

Technology push: a focus on BI tools.

Technology offerings have been changing rapidly in recent years (as of 2020). Hardware is no longer a problematic cost factor; functionality is.

Choosing a tool, or having several of them, comes down to personal preferences.

Different responsible parties have their own opinion on how conflicts should be solved. In a technology push, the organisational goal is no longer what drives the choice.

What shows instead is each party's personal position inside the organisation.

🤔 The expectation of lower cost and better quality is a promise without guarantees.

🤔 Without alignment between the silos, it is unclear which version of the truth holds.

An inventory of the tools and the dedicated areas they are used in:

Matt Turck, 2020: bigdata 2020, an amazing list of all kinds of big data tools on the market.

RO-1.3 Competing functionality vs safety to realisation

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-1.3.1 Info

Safety first by design, a practical case II

The question:

Why spend capital on hiring while their best people walk out the door?

This is about culture: trust, ethics, conflicts, commitment, accountability and team results for the service outcome.

- A limited list of culture killers - (LI: P.Evans 2025)

That's because a high-performing culture isn't built, it's engineered.

And most leaders don't realise they've hard-coded failure into their system.

I've seen it happen across startups, scale-ups, and global giants...

- Great people

- Great products

- Slowly pulled apart by how they operate internally.

Because culture isn't built through slogans on the wall.

It's the unseen behaviours that either build trust or break it.

Here are 8 hidden culture killers that quietly drain performance:

- "We're a family" ➡ Sounds warm, but it blurs boundaries and excuses.

👉🏾 Instead: Build a team, not a family. Clear roles and fair expectations create psychological safety, not forced intimacy.

- Micromanagement ➡ Kills initiative, grows dependence on leaders, and destroys creative confidence.

👉🏾 Instead: Replace control with clarity. Define outcomes, not tasks, and let people own how they get there.

- Too many managers, not enough doers ➡ Suddenly, meetings multiply, progress slows, and still, no one's held accountable.

👉🏾 Instead: Flatten decision-making. Reward action over alignment.

- Ignoring feedback ➡ If people stop speaking up, you've already lost them.

👉🏾 Instead: Build feedback loops into your system. Retros, pulse surveys, open channels. But remember, listening is only powerful if it leads to visible change.

- Decisions behind closed doors ➡ Secrecy leads to suspicion faster than any pay gap.

👉🏾 Instead: Default to transparency. Share the "why" behind decisions, not just the "what." It builds trust and alignment faster than any "all-hands" speech.

- Overloading top performers ➡ You don't reward excellence by exhausting it.

👉🏾 Instead: Scale their impact, not their workload. Automate, delegate, and invest in systems that protect your best people from burnout.

- No work-life boundaries ➡ If rest feels like guilt, performance will collapse.

👉🏾 Instead: Treat recovery as performance infrastructure. Model it yourself, when leaders rest, permission follows.

- Silent meetings ➡ When the same voices dominate, innovation slowly dies.

👉🏾 Instead: Engineer participation. Rotate facilitators, ask for written input. Inclusion is a design choice.

You can't just "hope" your culture into being. You have to engineer it.

Every system either builds trust or breaks it, and if you don't fix it, someone else will leave because of it.

Construction: existing systems that are hard to change

Construction regulations for 2025 focus heavily on sustainability, safety, and digitalization, with key changes including stricter energy performance, new Digital Product Passports (DPP) for materials in the EU, updated health & safety roles (like registered safety managers), and a push for greener building methods (heat pumps, solar). In the UK, the Building Safety Levy and new protocols for remediation orders are emerging, while globally, there's a trend towards clearer, faster permitting and greater accountability in construction.

Key Themes & Regulations

- Sustainability & Energy (EU & UK Focus):

  - Digital Product Passports (DPP): Mandatory digital IDs for construction products under the EU's Ecodesign Regulation, tracking materials, performance, and recyclability.

  - Energy Efficiency: Stricter standards for new builds, pushing low-carbon heating (heat pumps) and better insulation.

  - Embodied Carbon: Increasing focus on calculating and reducing the carbon footprint of materials.

- Health & Safety (Global Updates):

  - Professional Registration: Introduction of registered Construction Health & Safety Managers (CHSM) in some regions (e.g., South Africa draft regs) to elevate standards.

  - Ergonomics: Greater emphasis on worker well-being and preventing musculoskeletal disorders.

  - Notification Changes: Some areas are expanding notification requirements to all construction work, not just high-risk activities.

- Building Safety (UK Specific):

  - Building Safety Levy: A new levy on new homes in England to fund remediation of building safety defects.

  - Legal Protocols: New court guidance expected for building safety remediation orders and liability orders.

- Permitting & Process (EU Trend):

  - One-Stop Shops: Calls for simplified, digital, single-permit systems with clearer timelines for approvals.


What it Means for You (General)

- Design for Green: Incorporate heat pumps, solar, and high insulation from the start.

- Track Materials: Be ready for DPP requirements and provide detailed environmental data.

- Elevate Safety: Expect new training and potentially registered safety roles.

- Expect More Scrutiny: Authorities are increasing oversight on safety, sustainability, and permit compliance.

Note: Regulations vary significantly by country.

Guide to Construction Products Regulation (CPR)

The Construction Products Regulation (CPR) is a pivotal EU legislation that sets standardized safety, performance, and environmental impact requirements for construction products across the EU. Originally established in 2011 to streamline the circulation of construction products within the Single Market through standardized guidelines, the CPR was updated in 2024 to address modern environmental challenges, advancing sustainability and transparency in the construction sector.

Health:

CDISC

In July 2022, the FDA published, in Appendix D of its Technical Conformance Guide (TCG), a description of additional variables it wants in a Subject Visits dataset. A dataset constructed to meet these requirements would depart from the standard, so validation software would create warnings and/or errors for the dataset. Such validation findings can be explained in PHUSE's Clinical Study Data Reviewer's Guide (cSDRG) Package.
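A minimal Python sketch of why such a dataset draws validation findings: a conformance check compares the dataset's variables with the ones the standard expects and reports anything outside that set. The listed SV variables are an assumed subset of the SDTM Subject Visits domain, `SVEXTRA` is a hypothetical stand-in for an added variable, and `check_conformance` is an illustrative helper, not the API of any real validation tool. Treating every listed variable as required is a simplification.

```python
# Assumed subset of standard SDTM SV (Subject Visits) variables; illustrative only.
STANDARD_SV_VARIABLES = {
    "STUDYID", "DOMAIN", "USUBJID", "VISITNUM", "VISIT", "SVSTDTC", "SVENDTC",
}


def check_conformance(columns, standard=STANDARD_SV_VARIABLES):
    """Return (level, message) findings for a dataset's column list (hypothetical helper)."""
    findings = []
    for col in columns:
        if col not in standard:
            # A variable outside the standard: a validator flags it, and the
            # sponsor explains the finding in the reviewer's guide.
            findings.append(("WARNING", f"non-standard variable {col}"))
    # Simplification: every listed standard variable is treated as required.
    for col in sorted(standard - set(columns)):
        findings.append(("ERROR", f"missing standard variable {col}"))
    return findings


# A dataset extended with a hypothetical extra variable requested by a regulator.
dataset_columns = ["STUDYID", "DOMAIN", "USUBJID", "VISITNUM", "VISIT",
                   "SVSTDTC", "SVENDTC", "SVEXTRA"]
for level, message in check_conformance(dataset_columns):
    print(level, message)
```

The extra variable is exactly what the regulator asked for, yet it still surfaces as a finding; that is why the explanation belongs in the reviewer's guide rather than in the dataset.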

PHUSE

The Global Healthcare Data Science Community: sharing ideas, tools and standards around data, statistical and reporting technologies.

PHUSE

PHUSE Working Groups bring together volunteers from diverse stakeholders to collaborate on projects addressing key topics in data science and clinical research, with participation open to all.

RO-1.4 Defining taxonomies - concepts - ontology

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-1.4.1 Info

The 4 leadership behaviors that drive transformation

An email promotion:

"Many leaders discover: they are the problem." (email: lean.org. 2025)

Tools account for 20% of success. Leadership behaviors account for 80%.

David Mann, in his research on lean management systems, found that "implementing tools represents at most 20% of the effort in lean transformations; the other 80% is expended on changing leaders' practices and behaviors, and ultimately their mindset."

Yet most organizations invest heavily in tool training while treating leadership development as optional.

Four behaviors that drive transformation:

- Go See (Gemba)

Regular presence where value is created. To understand, not inspect.

- Ask Why (Coach)

Develop capability through questions. Build scientific thinking.

- Show Respect (Safety)

Create environments where problems surface early.

- Connect to Strategy (Hoshin)

Ensure every level understands how daily work supports objectives.

These aren't separate activities. They're interconnected behaviors that create the management system for sustained performance.

Daily management boards drive problem-solving (not just tracking). Teams catch issues early because they understand targets and feel safe surfacing problems.

From "Managing on Purpose" (book): "Hoshin kanri is an excellent opportunity for leaders to learn to lead by responsibility as opposed to authority."

butics

Moral Complexity of Organisational Design (LI:R.Claydon 2025)

Buurtzorg has become a kind of organisational Rorschach test. In his original essay, Stefan Norrvall reads it through a lens of organisational physics:

- complexity is conserved,

- work is stratified,

and Buurtzorg works because it relocates integrative load from managers into small whole-task teams, architecture, and an unusually supportive Dutch welfare ecosystem.

In response, Otti Vogt argues that this frame is ontologically and morally too thin: Buurtzorg is not just a clever cybernetic design, but a solidaristic, post-neoliberal project grounded in care ethics, widening moral circles, and a refusal to treat nursing as timed piecework.

Certainty and uncertainty in the Theory of Constraints

A continuation of the LI article on TOC, which claims that TOC felt incomplete; the question is what exactly is missing.

The Illusion of Certainty (LI: Eli Schragenheim Bill Dettmer 2025)

❶

A typical example of ignoring uncertainty is widespread reliance on single-number discrete forecasts of future sales.

Any rational forecast should include not just the quantitative average (a single number), but also a reasonable deviation from that number.

The fact that most organizations use just single-number forecasts is evidence of the illusion of certainty.
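As a sketch of what a forecast with a deviation could look like, the Python below reports a range around the average instead of only the single number. It assumes the history has no trend or seasonality and uses plus or minus `k` sample standard deviations as the "reasonable deviation"; the sales figures and the helper `forecast_with_range` are hypothetical.

```python
from statistics import mean, stdev


def forecast_with_range(history, k=2.0):
    """Return (low, point, high): the average plus/minus k sample standard deviations."""
    point = mean(history)
    spread = stdev(history)  # sample standard deviation; needs at least two values
    return point - k * spread, point, point + k * spread


# Hypothetical monthly sales figures, for illustration only.
sales = [100, 120, 90, 110, 130]
low, point, high = forecast_with_range(sales)
print(f"single number: {point:.1f}")
print(f"range:         {low:.1f} .. {high:.1f}")
```

A real forecast would model trend and seasonality as well; the point here is only that the output is a band to plan against, not a single number.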

Organizations typically plan for long-term objectives as well as for the short-term.

A plan requires many individual decisions regarding different stages, inputs or ingredients.

All such decisions together are expected to lead to the achievement of the objective.

But uncertainty typically crops up in the execution of every detail in the plan.

This forces the employees in charge of the execution to re-evaluate the situation and introduce changes, which may well affect the timeliness and quality of the desired objective.

What motivates people to make the decisions that they do?

Many readers will be familiar with Abraham Maslow's hierarchy of needs.

Maslow theorized that humans have needs that they strive to satisfy.

Further, Maslow suggested that it's unsatisfied needs that motivate people to action.

Maslow also suggested that human needs are hierarchical.

This means that satisfying needs lower in the hierarchy pyramid captures a person's attention until they are largely (though not necessarily completely) satisfied.

At that point, these lower-level needs become less of a motivator than unsatisfied higher-level needs.

The person in question will then bend most of his or her efforts to fulfilling those needs.

The DoD strategy statement on knowledge management: data safety

DoD data strategy (2020) Problem Statement

Make Data Secure

As per the DoD Cyber Risk Reduction Strategy, protecting DoD data while at rest, in motion, and in use (within applications, with analytics, etc.) is a minimum barrier to entry for future combat and weapon systems.

Using a disciplined approach to data protection, such as attribute-based access control, across the enterprise allows DoD to maximize the use of data while, at the same time, employing the most stringent security standards to protect the American people.

DoD will know it has made progress toward making data secure when:

| # | Objective | Information safety |
|---|---|---|
| 1 | Platform access control | Granular privilege management (identity, attributes, permissions, etc.) is implemented to govern the access to, use of, and disposition of data. |
| 2 | BIA&CIA PDCA cycle | Data stewards regularly assess classification criteria and test compliance to prevent security issues resulting from data aggregation. |
| 3 | Best/good practices | DoD implements approved standards for security markings, handling restrictions, and records management. |
| 4 | Retention policies | Classification and control markings are defined and implemented; content and record retention rules are developed and implemented. |
| 5 | Continuity, availability | DoD implements data loss prevention technology to prevent unintended release and disclosure of data. |
| 6 | Application access control | Only authorized users are able to access and share data. |
| 7 | Information integrity control | Access and handling restriction metadata are bound to data in an immutable manner. |
| 8 | Information confidentiality | Access, use, and disposition of data are fully audited. |
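A minimal Python sketch of attribute-based access control, as named in the strategy text, combined with an audit record for every decision (objectives 6 and 8 in the table). The attributes (`clearance`, `classification`, `department`), the single rule and the in-memory audit list are hypothetical; a real platform would use a policy engine and tamper-evident logging.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Subject:
    user_id: str
    clearance: int   # hypothetical ordered clearance level
    department: str


@dataclass(frozen=True)
class Resource:
    name: str
    classification: int   # hypothetical ordered classification level
    owner_department: str


@dataclass
class Policy:
    audit_log: list = field(default_factory=list)

    def can_read(self, subject: Subject, resource: Resource) -> bool:
        # Attribute-based rule: the clearance must cover the classification,
        # and the subject must belong to the owning department.
        allowed = (subject.clearance >= resource.classification
                   and subject.department == resource.owner_department)
        # Every decision, allowed or denied, is recorded for audit.
        self.audit_log.append((subject.user_id, resource.name, "read", allowed))
        return allowed


policy = Policy()
analyst = Subject("u1", clearance=2, department="logistics")
report = Resource("fuel-report", classification=2, owner_department="logistics")
plan = Resource("ops-plan", classification=3, owner_department="logistics")
print(policy.can_read(analyst, report))  # True
print(policy.can_read(analyst, plan))    # False
```

The decision depends on attributes of both the user and the data rather than on a fixed list of named users, which is what lets access rules follow the data as it is shared.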

Retrospective on applying collective intelligence to policy.

Ideas into action (Geoff Mulgan)

What's still missing is a serious approach to policy.

I wrote two pieces on this: one for the Oxford University Press Handbook on Happiness (published in 2013), and another for a Nef/Sitra publication.

I argued that although there is strong evidence at a very macro level (for example, on the relationship between democracy and well-being), in terms of analysis of issues like unemployment, commuting and relationships, and at the micro level of individual interventions, what's missing is good evidence at the middle level where most policy takes place.

This remains broadly true in the mid 2020s.

I remain convinced that governments badly need help in serving the long-term, and that there are many options for doing this better, from new structures and institutions, through better processes and tools to change cultures.

Much of this has to be led from the top.

But it can be embedded into the daily life of a department or Cabinet.

One of the disappointments of recent years is that, since the financial crisis, most of the requests to me for advice on how to do long-term strategy well come from governments in non-democracies.

There are a few exceptions - and my recent work on how governments can better 'steer' their society, prompted by the government in Finland, can be seen in this report from Demos Helsinki.

During the late 2000s I developed a set of ideas under the label of 'the relational state'.

This brought together a lot of previous work on shifting the mode of government from doing things to people and for people to doing things with them.

I thought there were lessons to learn from the greater emphasis on relationships in business, and from strong evidence on the importance of relationships in high quality education and healthcare.

An early summary of the ideas was published by the Young Foundation in 2009.

The ideas were further worked on with government agencies in Singapore and Australia, and presented to other governments including Hong Kong and China.

An IPPR collection on the relational state, which included an updated version of my piece and some comments, was published in late 2012.

I started work on collective intelligence in the mid-2000s, with a lecture series in Adelaide in 2007 on 'collective intelligence about collective intelligence'.

The term had been used quite narrowly by computer scientists, and in an important book by Pierre Levy.

I tried to broaden it to all aspects of intelligence: from observation and cognition to creativity, memory, judgement and wisdom. A short Nesta paper set out some of the early thinking, and a piece for Philosophy and Technology Journal (published in early 2014) set out my ideas in more depth.

My book Big Mind: how collective intelligence can change our world from Princeton University Press in 2017 brought the arguments together.

RO-1.5 Defining temporal boundaries dependencies

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-1.5.1 Info

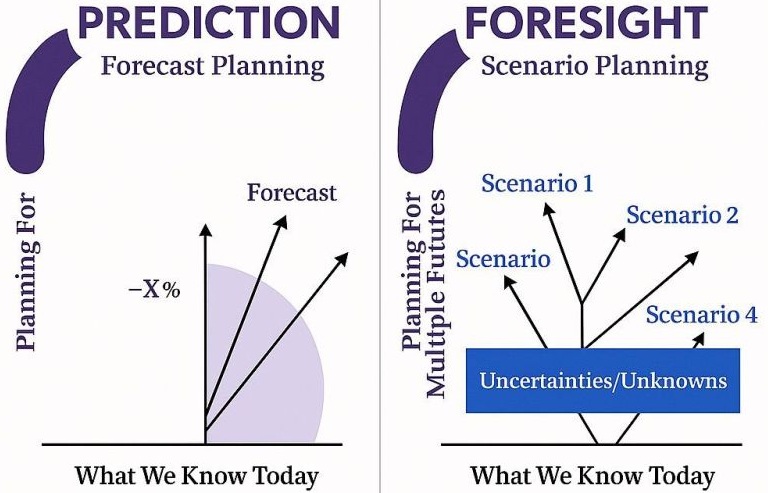

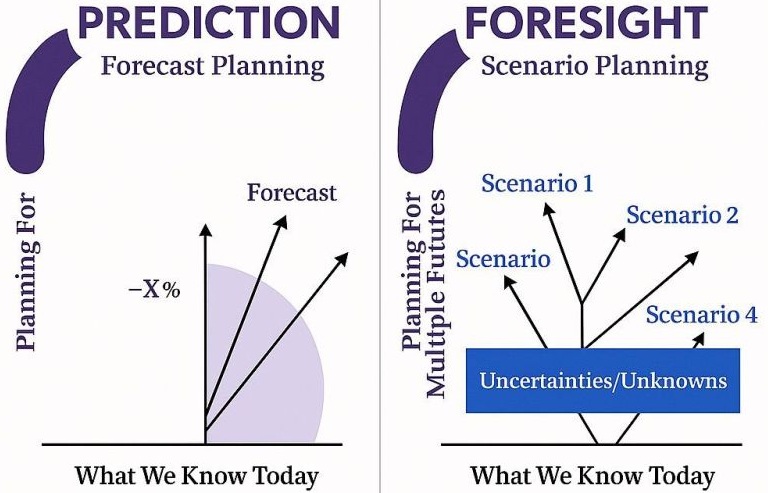

The Certainty loophole in wanting predictability

(LI: A.Constable 2025)

In strategy, understanding the distinction between scenarios and forecasts can be crucial to achieving long-term success.

The distinction is this:

- Forecasts ➡ What is likely to happen:

  - Based on historical data, trends, and expected developments

  - Provides a single probable outcome

  - Often used for budgeting and short-term planning

- Scenarios ➡ What could happen:

  - Considers multiple possible futures based on key uncertainties

  - Helps organisations prepare for different potential outcomes

  - Critical for long-term strategic resilience and stress testing

While forecasts help navigate the near future, scenario planning equips organisations to anticipate shifts, adapt strategies, and stay ahead in an unpredictable world.
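To make the distinction concrete, a small Python sketch: one expected growth rate yields a single forecast, while a set of scenario growth rates yields a range of possible futures to prepare for. The baseline, the rates and the scenario names are hypothetical, and real scenario planning builds narratives around key uncertainties rather than only varying one number.

```python
# Hypothetical baseline, for illustration only.
BASELINE_REVENUE = 1000.0


def project(baseline, annual_growth, years):
    """Compound the baseline over a number of years at a fixed growth rate."""
    return baseline * (1 + annual_growth) ** years


# Forecast: one expected growth rate gives one probable outcome.
forecast = project(BASELINE_REVENUE, 0.03, years=5)

# Scenarios: each key uncertainty gets its own assumed growth rate.
scenarios = {
    "downturn": -0.05,
    "steady": 0.03,
    "breakthrough": 0.12,
}
outcomes = {name: project(BASELINE_REVENUE, g, years=5) for name, g in scenarios.items()}

print(f"forecast: {forecast:.0f}")
for name, value in outcomes.items():
    print(f"{name:>12}: {value:.0f}")
```

The forecast coincides with one scenario; the other scenarios are what the stress test is for.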

Lean accounting removing certainty constraints

The Danaher Business System (DBS), developed by Mark DeLuzio, is a comprehensive Lean-based operating model that transformed Danaher Corporation into one of the most successful industrial conglomerates in the world.

It integrates strategy deployment, continuous improvement, and cultural alignment into a unified system for operational excellence.

| Element | Description |
|---|---|
| Lean foundation | Built on Toyota Production System principles, emphasizing waste elimination, flow, and value creation. |
| Policy Deployment (Hoshin Kanri) | Strategic alignment tool that cascades goals from top leadership to frontline teams. |
| Kaizen culture | Continuous improvement through structured problem-solving and employee engagement. |
| Visual management | Dashboards, metrics boards, and process visibility tools to drive accountability and transparency. |
| Standard work | Codified best practices for consistency, training, and performance measurement. |
| Lean accounting | Developed by DeLuzio to align financial systems with Lean operations, focusing on value streams rather than traditional cost centers. |

Mark DeLuzio's Role and Philosophy

- Architect of DBS: As VP of DBS, DeLuzio led its global deployment and helped Danaher become a benchmark for Lean transformation.

- Lean Accounting Pioneer: He introduced the first Lean accounting system in the U.S. at Danaher's Jake Brake Division.

- Strategic Integrator: DeLuzio emphasized that Lean must be tied to business strategy, not just operational tools.

- Respect for People: A core tenet of DBS, ensuring that transformation is sustainable and human-centric.

| Activity | Description |
|---|---|
| Eliminating waste in accounting processes | Traditional month-end closes and cost allocations often involved redundant steps. Lean Accounting applies value-stream mapping to streamline closing cycles, freeing finance teams to focus on strategic analysis. |
| Value-stream based reporting | Instead of tracking costs by department, Lean Accounting organizes them by value stream: the end-to-end activities that deliver customer value. This provides clearer insight into profitability tied to actual products or services. |
| Real-time decision support | Lean Accounting emphasizes timely, actionable data rather than lagging reports. This enables leaders to make faster, more informed investment and governance decisions. |
| Continuous improvement in finance | Just as Lean manufacturing fosters kaizen, Lean Accounting embeds continuous improvement into financial governance, ensuring reporting evolves with operational needs. |
| Integration with agile governance | Lean financial governance adapts investment tracking to modern delivery methods (agile, hybrid, waterfall), ensuring funding and prioritization align with how initiatives are actually executed. |
| Transparency and cultural alignment | By eliminating complex cost allocations and focusing on value creation, Lean Accounting fosters a culture of openness and accountability across departments. |
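A minimal Python sketch of value-stream based reporting: the same cost entries are summed per department (the traditional cost-centre view) and per value stream (the Lean accounting view), so margin can be read per product family. It assumes every cost entry can be traced directly to one value stream, which is what removing cost allocations relies on; the departments, streams, amounts and revenue are invented for illustration.

```python
from collections import defaultdict

# Hypothetical cost entries: (department, value stream, amount).
cost_entries = [
    ("assembly", "pumps", 400.0),
    ("assembly", "valves", 250.0),
    ("purchasing", "pumps", 150.0),
    ("quality", "valves", 100.0),
    ("quality", "pumps", 50.0),
]


def total_by(entries, key_index):
    """Sum the amounts, grouped by the tuple field at key_index."""
    totals = defaultdict(float)
    for entry in entries:
        totals[entry[key_index]] += entry[2]
    return dict(totals)


by_department = total_by(cost_entries, 0)    # traditional cost-centre view
by_value_stream = total_by(cost_entries, 1)  # Lean accounting view

# Hypothetical revenue per value stream, to show profitability per stream.
revenue = {"pumps": 900.0, "valves": 300.0}
for stream, cost in by_value_stream.items():
    print(f"{stream}: cost {cost:.0f}, margin {revenue[stream] - cost:.0f}")
```

Both views add up to the same total cost; only the value-stream view shows which product family actually earns money.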

Why This Matters for Governance

Traditional accounting often obscured the link between operations and financial outcomes. Lean Accounting reshaped governance by:

- Making financial metrics operationally relevant.

- Aligning investment decisions with customer value creation.

- Enabling adaptive governance models that support agile and Lean transformations.

This is why companies like Danaher, GE, and others used Lean Accounting as a cornerstone of their governance systems: it provided clarity, speed, and alignment between finance and operations.

RO-1.6 Defining what is learned for systems maturity

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-1.6.1 Info

butics

⟲ RO-1.6.2 Info

butics

⟲ RO-1.6.3 Info

butics

⟲ RO-1.6.4 Info

butics

RO-2 Anchorpoints for functional details at realisations

RO-2.1 Using standard patterns for component in lines

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.1.1 Info

butics

RO-2.2 Performance of the processing for flow

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.2.1 Info

butics

RO-2.3 Tradeoffs in achieving functionality vs safety

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.3.1 Info

butics

RO-2.4 Understanding taxonomies - concepts - ontology

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.4.1 Info

butics

RO-2.5 Understanding temporal boundaries dependencies

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.5.1 Info

butics

RO-2.6 Understanding what drives systems maturity

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-2.6.1 Info

butics

RO-3 Impacts consequences for functional details at realisations

RO-3.1 Analytics reporting.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-3.1.1 Info

butics

RO-3.2 The goal of BI Analytics.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-3.2.1 Info

butics

RO-3.3 Preparing data for BI Analytics.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-3.3.1 Info

butics

RO-3.4 EDW performance challenges.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-3.4.1 Info

butics

RO-3.5 Omissions in BI, Analytics reporting.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⚠ RO-3.5.1 ETL ELT - No Transformation.

butics

RO-3.6 Omissions in BI, Analytics reporting.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

⟲ RO-3.6.1 Info

butics

© 2012,2020,2026 J.A.Karman
