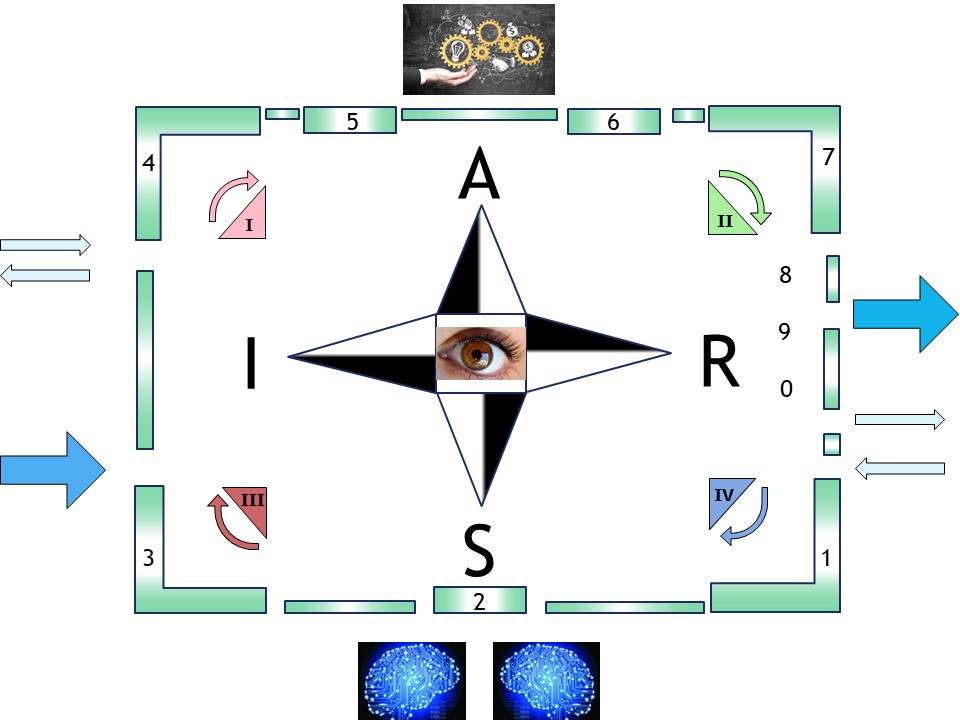

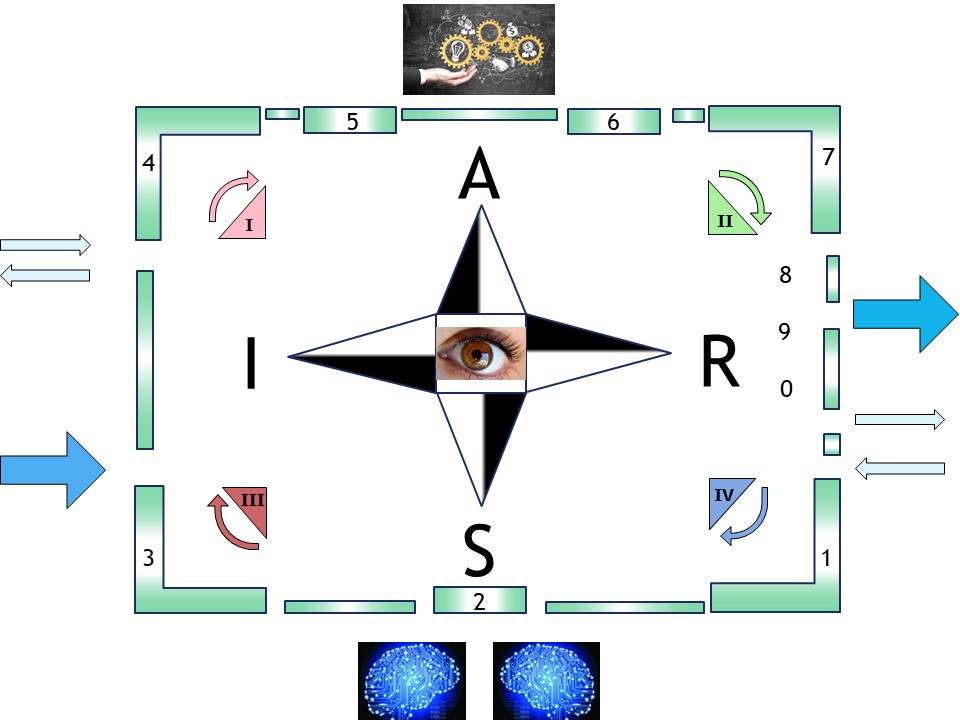

Design Data - Information flow

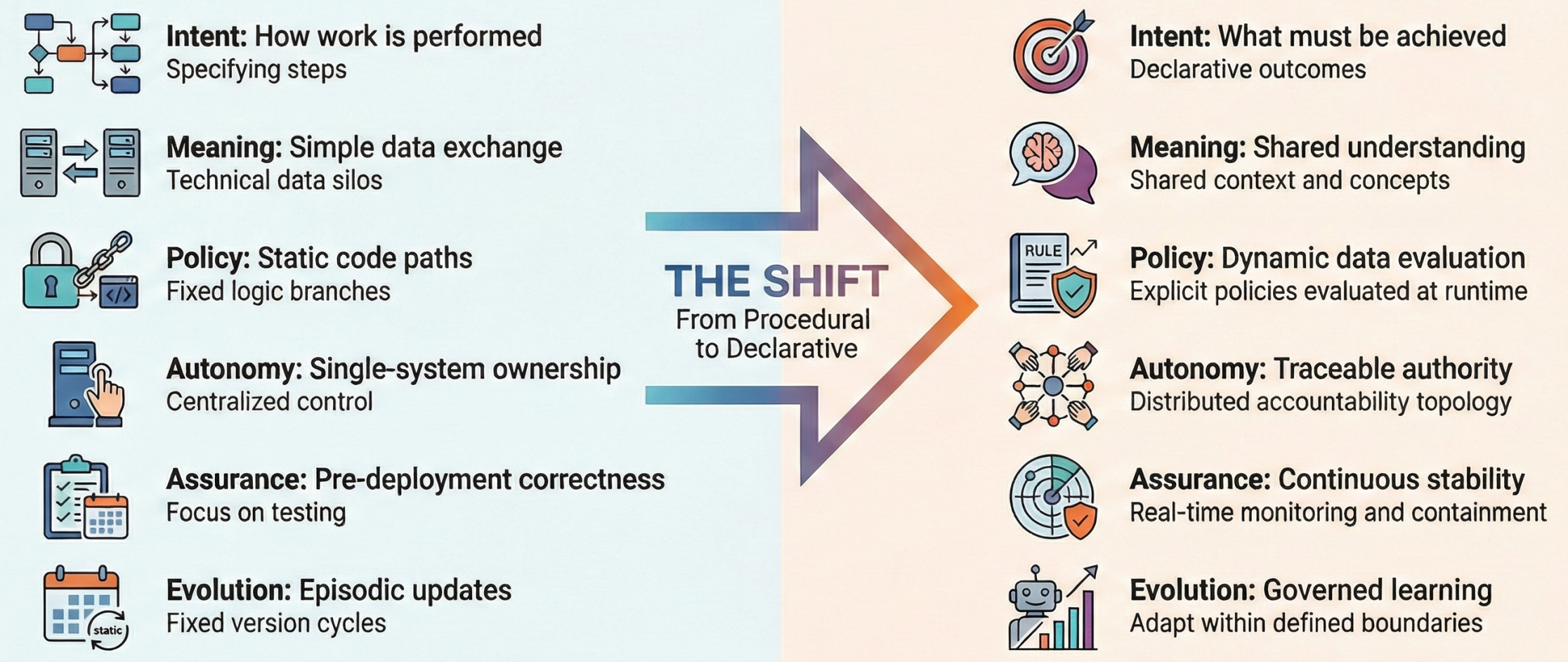

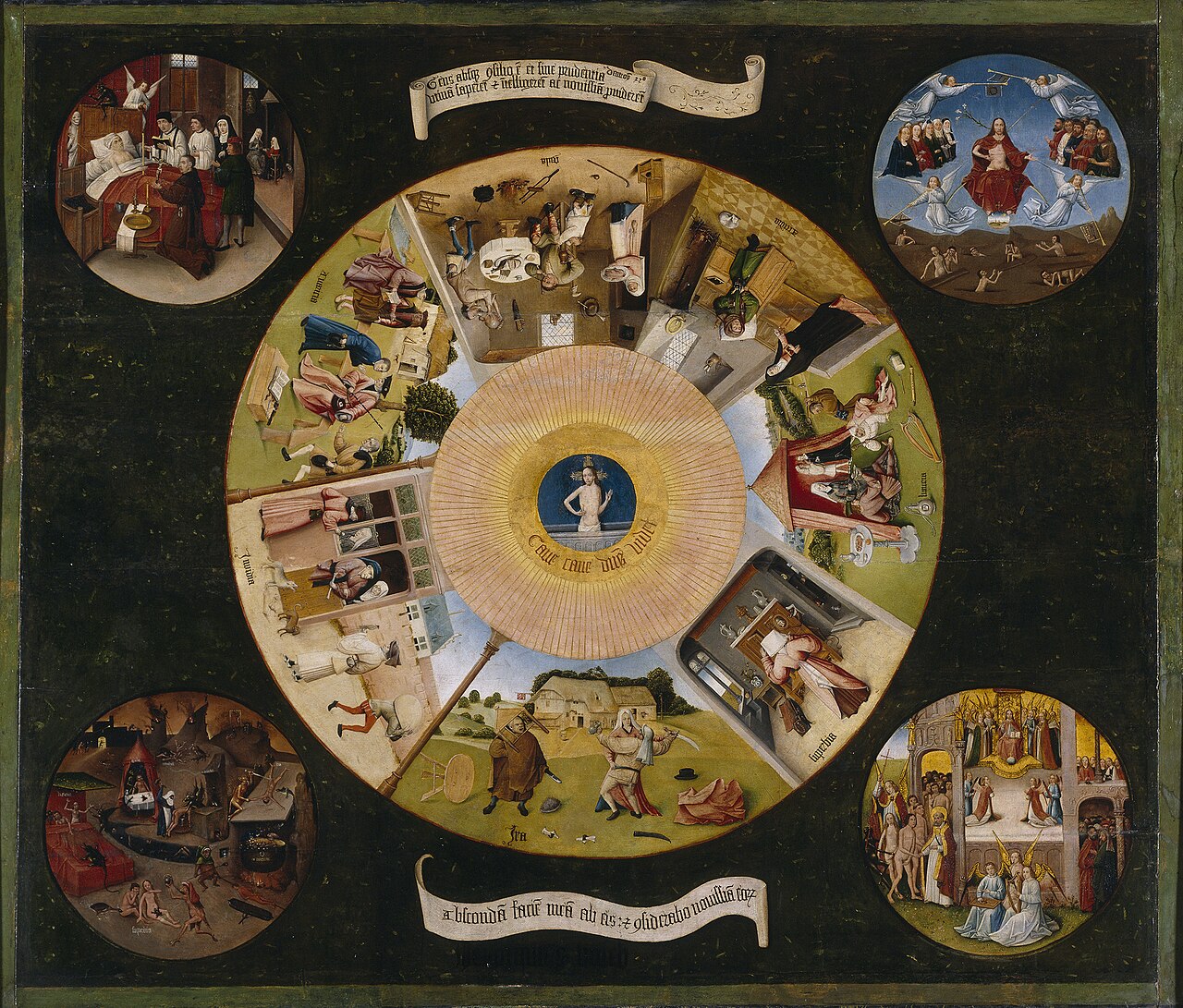

RN-1 Changing the classic technological perspective

RN-1.1 Contents

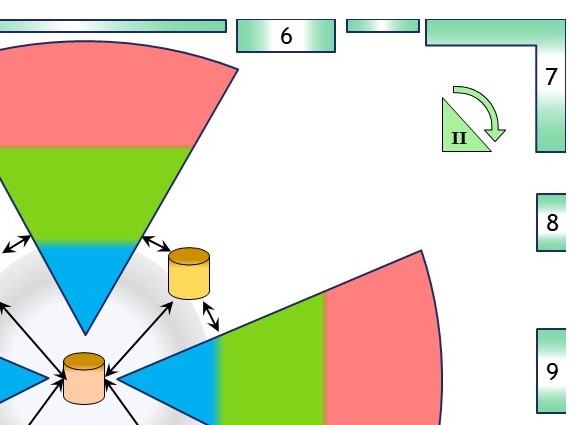

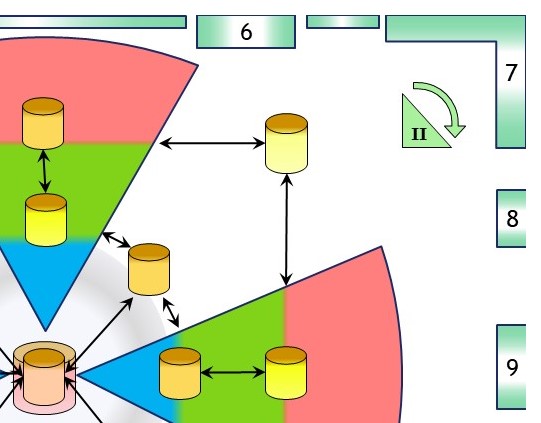

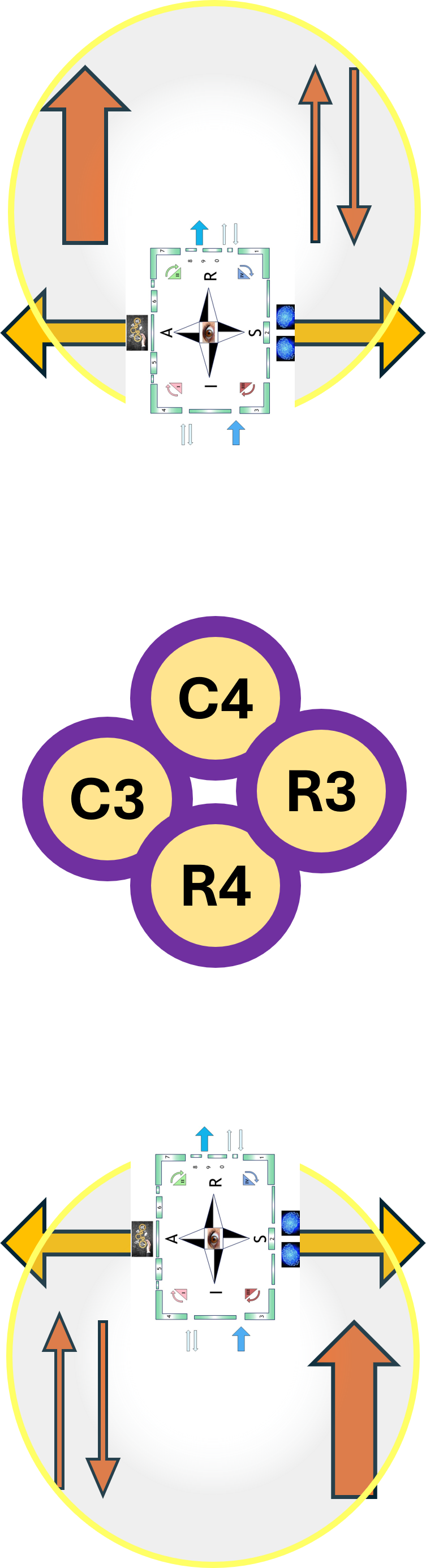

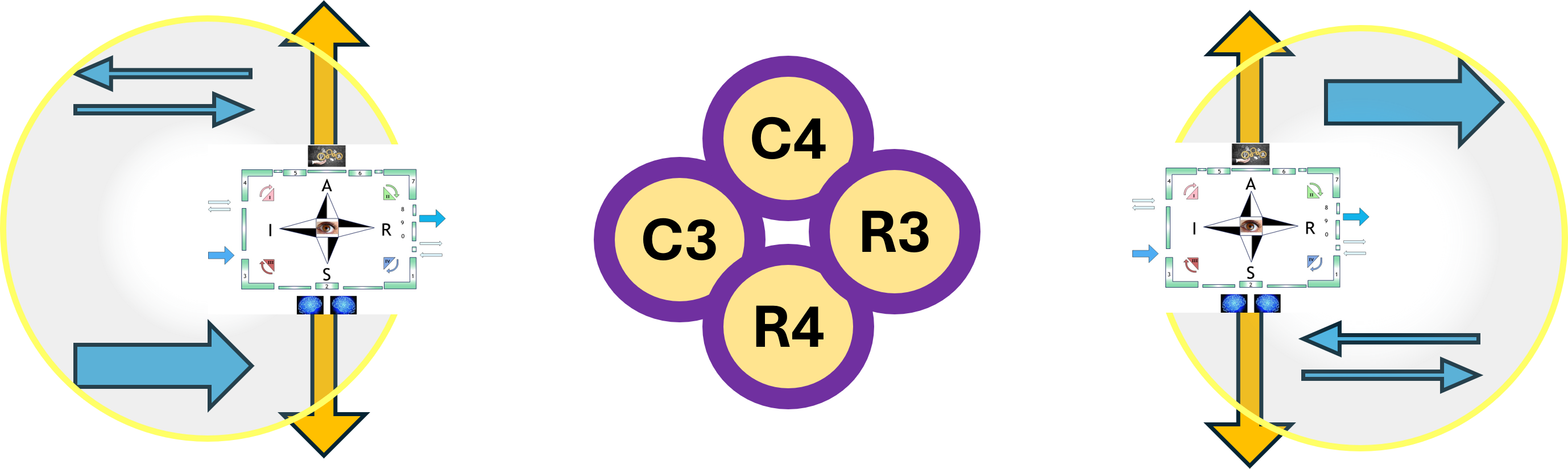

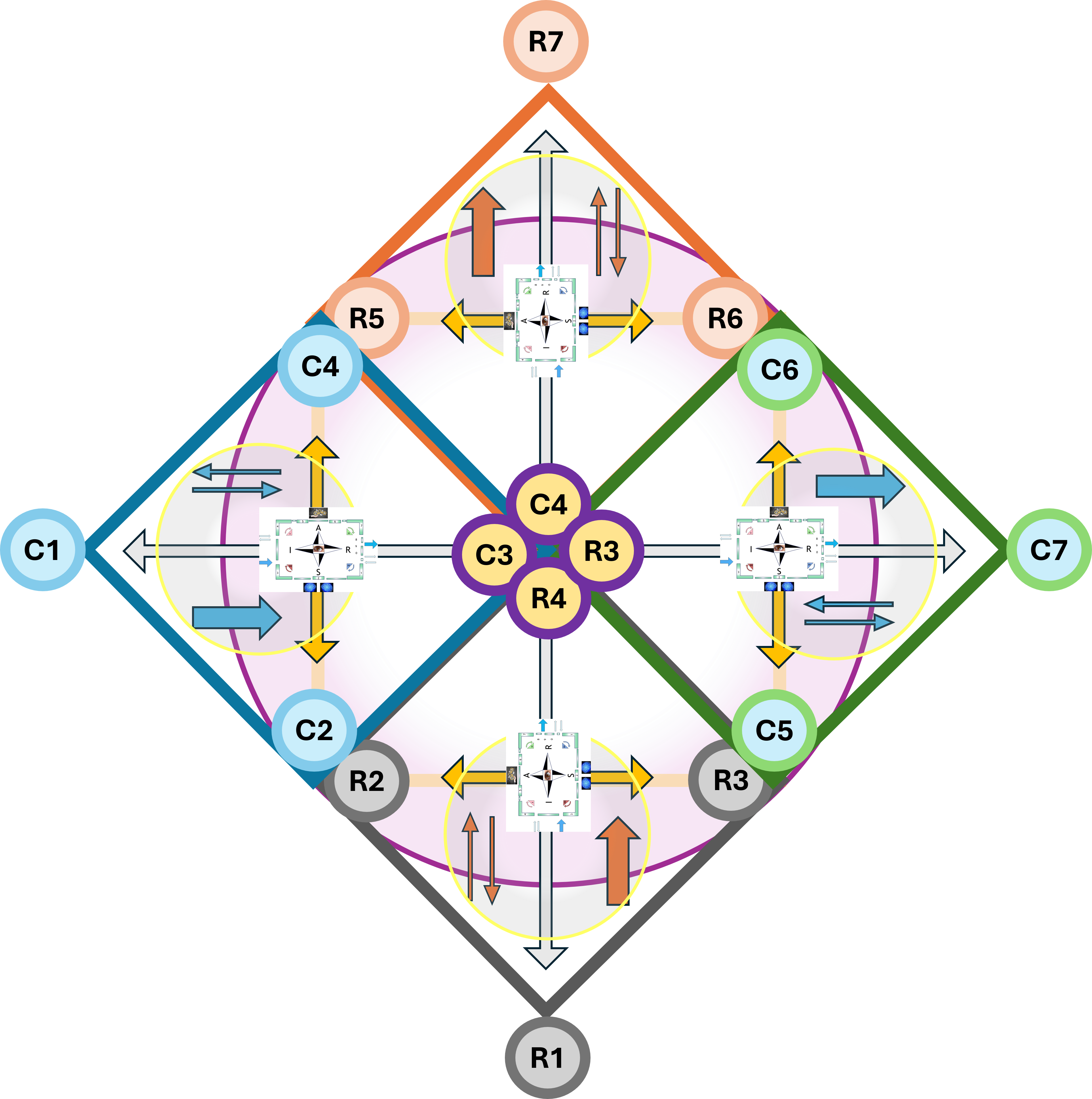

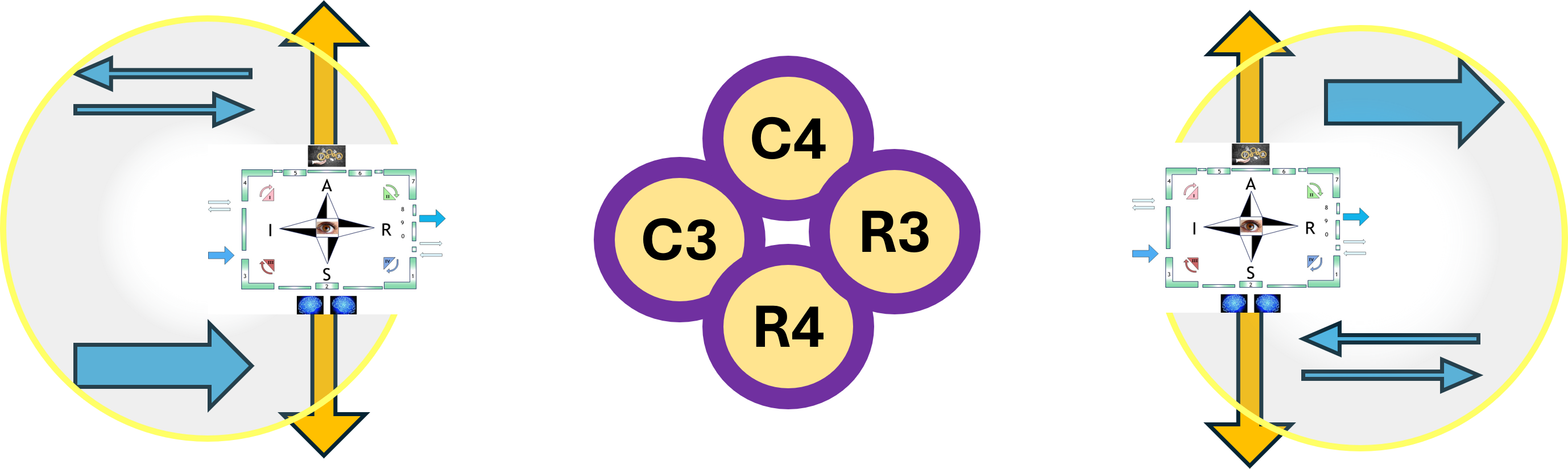

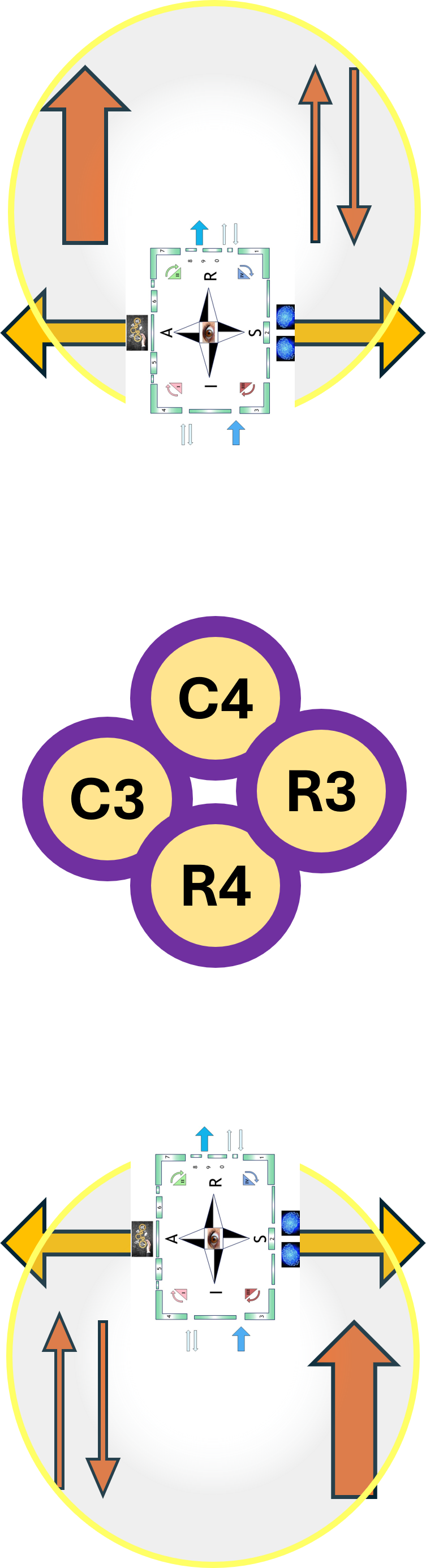

⚙ RN-1.1.1 Looking forward - paths by seeing directions

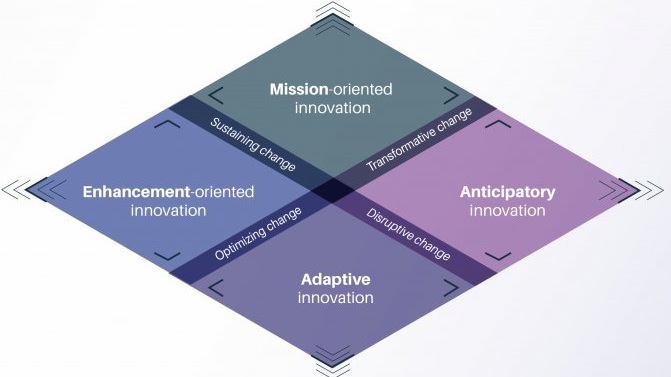

A reference frame in mediation innovation

When the image link fails, 🔰 click here for the most logical higher fractal in a shifting frame.

Contexts:

◎ r-steer the business

↖ r-shape mediation change

↗ r-serve split origin

↙ technical details

↘ functional details

There is a counterpart: 💠 click here for the impracticable diagonal shift to shaping change.

The quest for methodologies and practices, dialectical closure

This page is about a mindset framework for understanding and managing complex systems.

The type of complex systems in focus are the ones where humans are part of the systems and build the systems they are part of.

The phase shift from classic linear and binary thinking into non-linear dialectical thinking is brought to completion by aligning with the counterpart of this page.

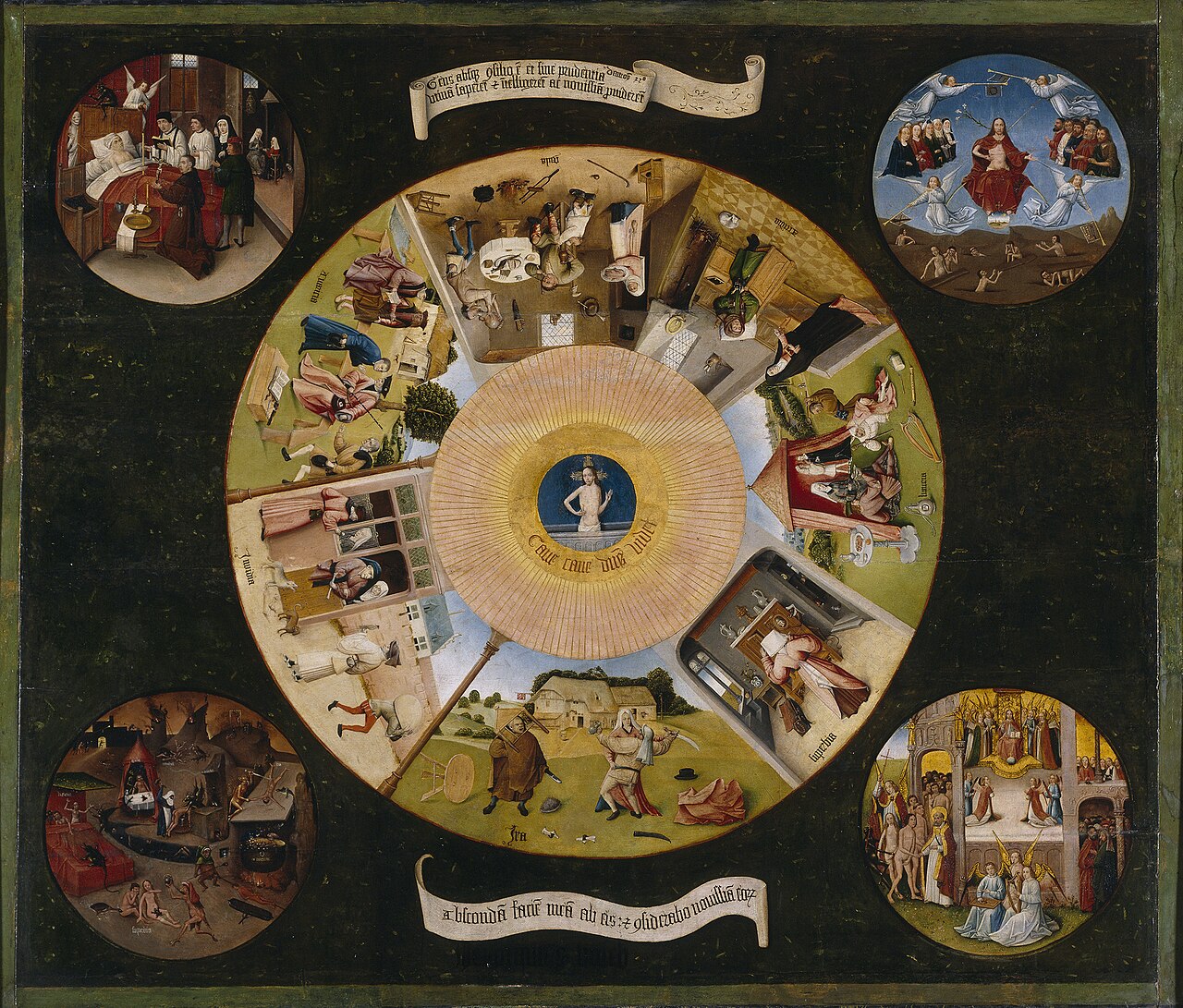

A key concept is "dialectical closure", a term that is not understandable without a simple explanation.

👁️

Dialectical closure means:

- You have looked at something from all the necessary sides, and

- nothing essential is missing anymore.

When closure is reached:

- tensions are recognized, opposites are connected, action and meaning make sense together

It does not mean:

- agreement, perfection, the end of change

It means the picture is whole enough to act responsibly.

Dialectical closure is when all three views are taken together before deciding the next move.

| ✅ Steering Closure | ❌ Skipped to binary |
| --- | --- |
| Look ahead ➡ where am I going? | only looking ahead ➡ fantasy |
| Look around ➡ what is happening now? | only looking around ➡ drifting |
| Look back ➡ did my last move work? | only looking back ➡ paralysis |

This is a simple list of 3 tensions, for awareness.

🎭

Using the 3*3 matrix, the cycle is seen as the flow around "execution".

| ✅ in 3*3 terms | ❌ any is missing: |
| --- | --- |
| Problem is seen (Context * Sense) | no real learning occurs |
| Execution happens (Process * Act) | decisions feel arbitrary |
| Purpose is reflected (Outcome * Reflect) | people get confused or resist |

This is a simple list of 3 tensions, in activities.

Without closure: frameworks feel abstract, discussions go in circles, people talk past each other

With closure: disagreements become productive, roles become clear, action becomes legitimate.

Dialectical closure is reached when context, action, and consequences are considered together, allowing meaningful action without ignoring tensions.

Although only 7 items are mentioned, each as a tension along two axes, it is about 3*3 items.

The quest for methodologies and practices, the search toward STEM

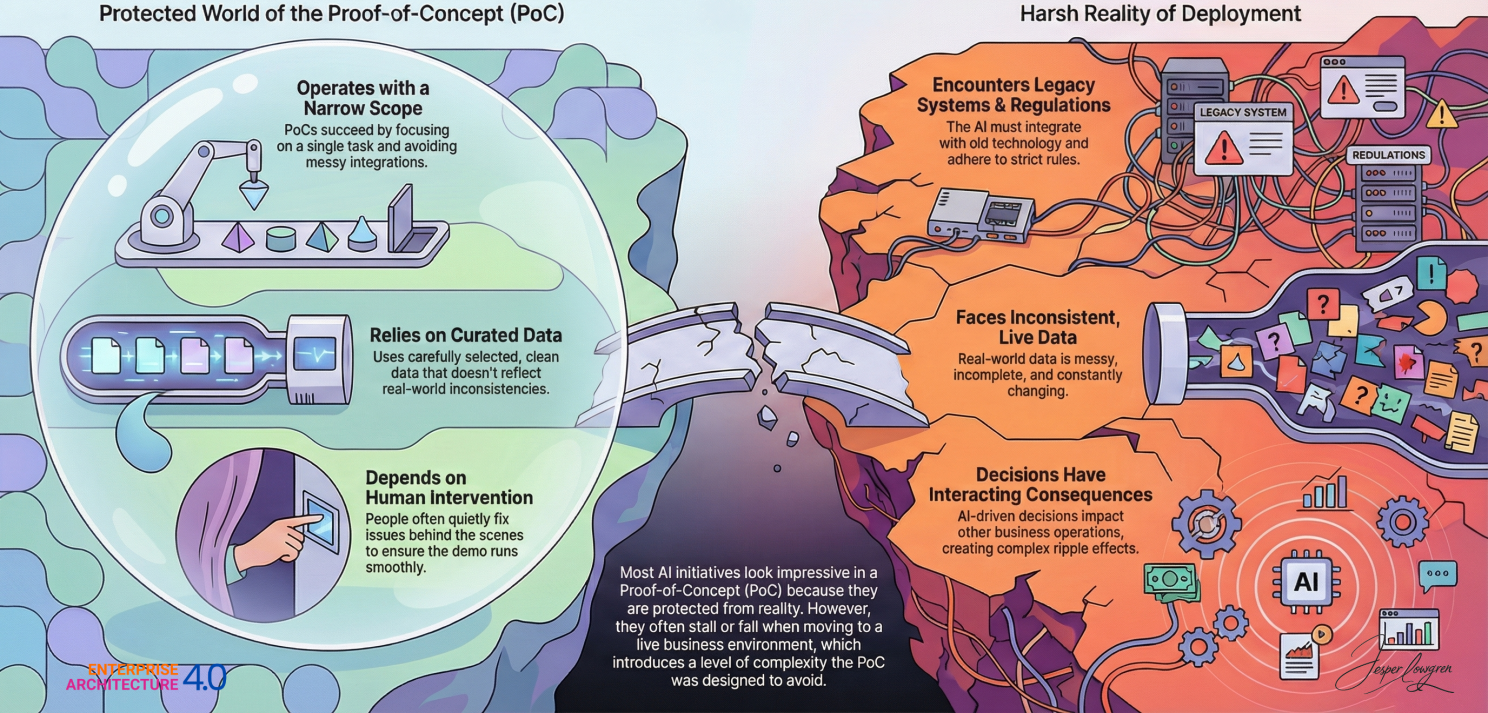

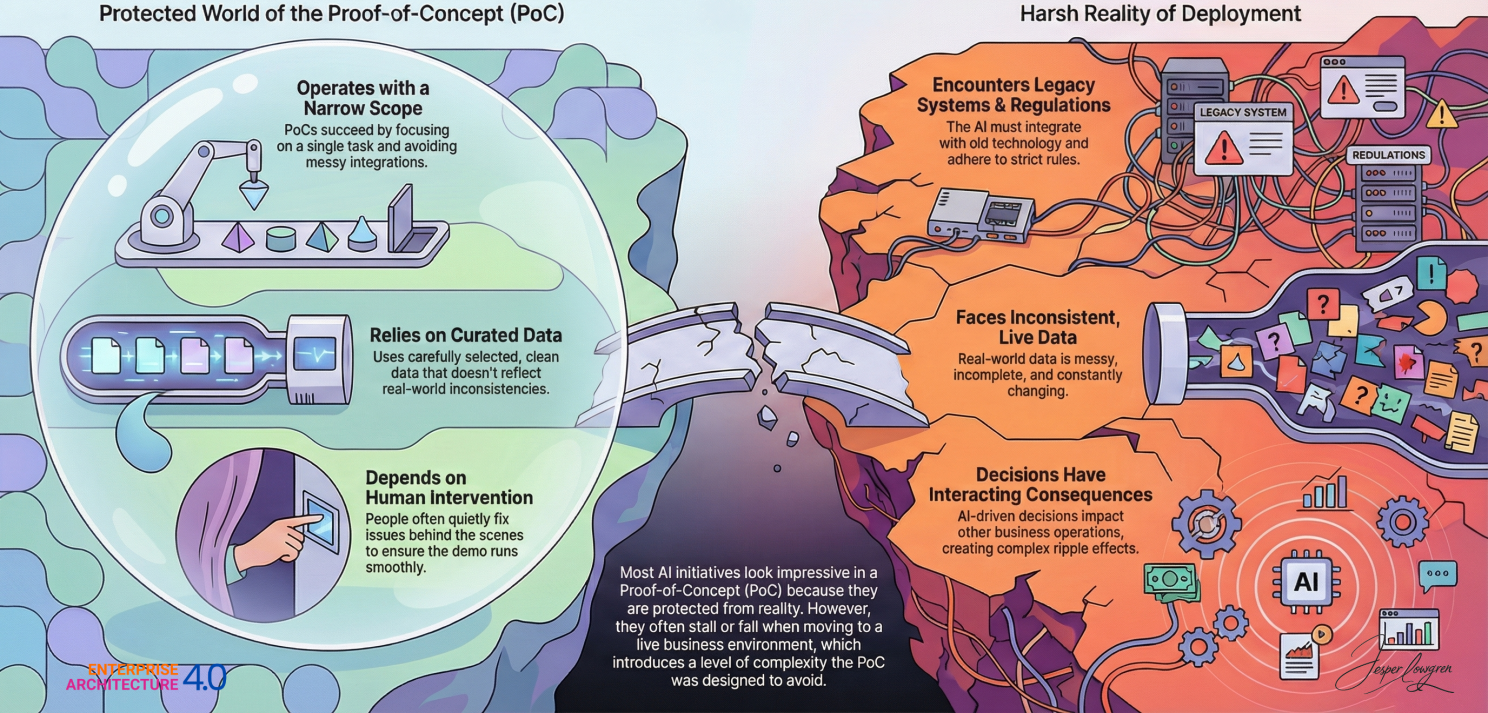

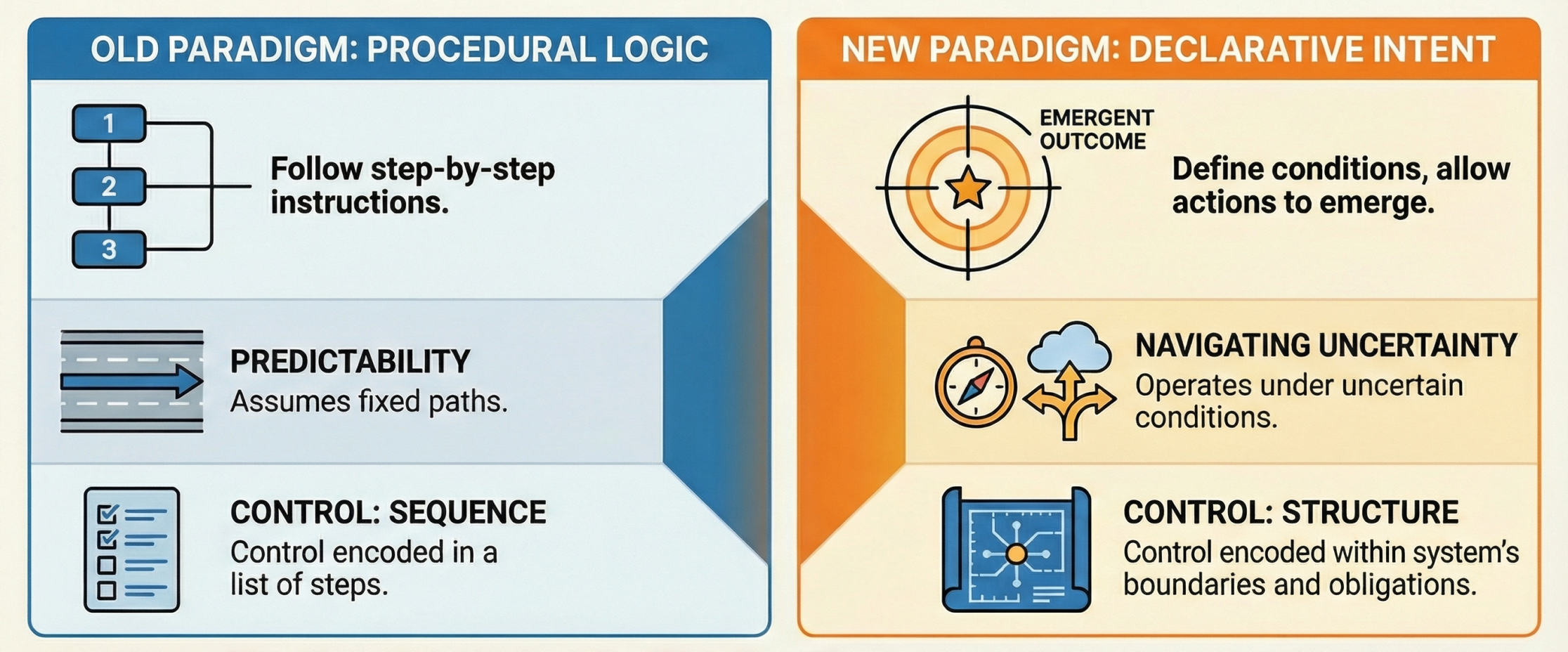

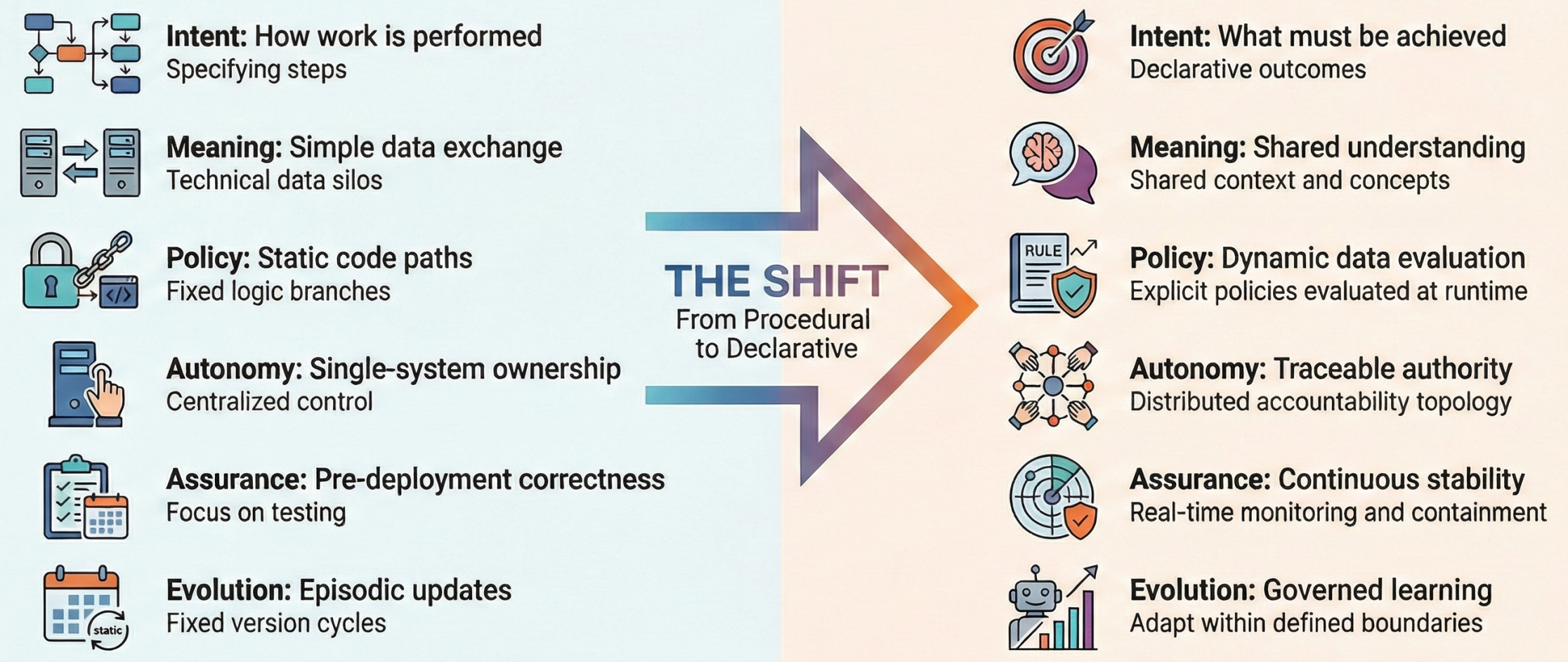

👉🏾 This is far from a technology-tools mindset, but it is very well possible to treat it as a technology-relationship mindset.

Seeing it as a relationship, there are approaches in science, technology, engineering, and mathematics (STEM) that make it possible to handle those.

- In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space, such as in a parametric curve.

- In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event.

- System dynamics (SD) is an approach to understanding the nonlinear behaviour of complex systems over time using stocks, flows, internal feedback loops, table functions and time delays.
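The Markov property from the list above can be made concrete with a minimal sketch. The two states and the transition probabilities below are hypothetical values chosen only for illustration; they are not taken from this page.

```python
def step(dist, P):
    """One Markov step: the next distribution depends only on the current one."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def evolve(dist, P, steps):
    """Apply the transition matrix P repeatedly to a starting distribution."""
    for _ in range(steps):
        dist = step(dist, P)
    return dist


# Hypothetical two-state chain: state 0 = "stable", state 1 = "changing".
# Row i holds the probabilities of moving from state i to each state.
P = [
    [0.9, 0.1],
    [0.5, 0.5],
]

start = [1.0, 0.0]                 # start fully in the "stable" state
after_one = evolve(start, P, 1)    # distribution after one step
after_many = evolve(start, P, 50)  # approaches the long-run distribution
```

After many steps the distribution no longer depends on the starting state, which is one way a stochastic model can describe behaviour over time without a fixed linear plan.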

A problem arises when evaluating things that do not have a scale, like worth in value ethics Te

⚙ RN-1.1.2 Local content

⚖ RN-1.1.3 Guide reading this page

A manifesto for Systemic Wisdom.

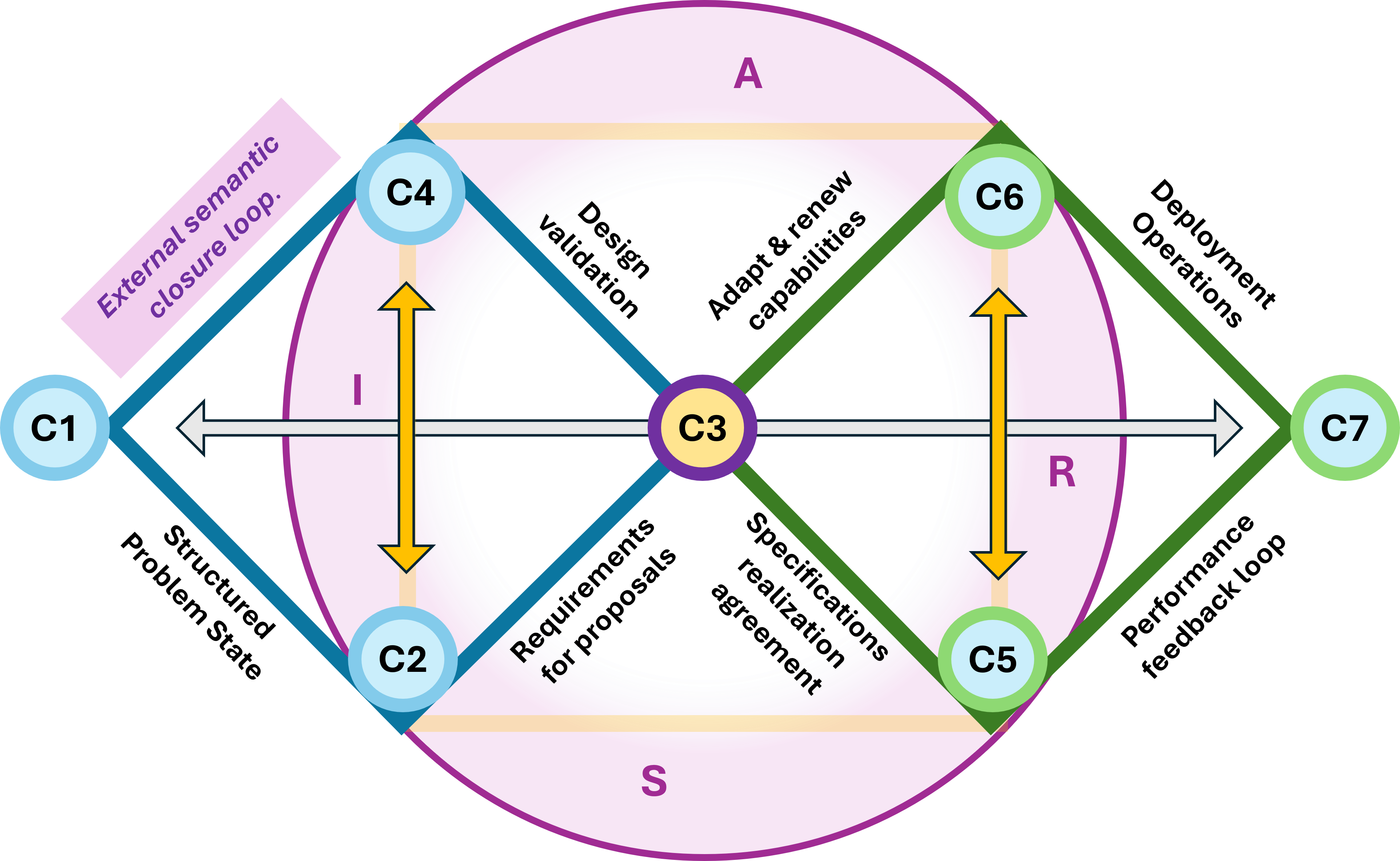

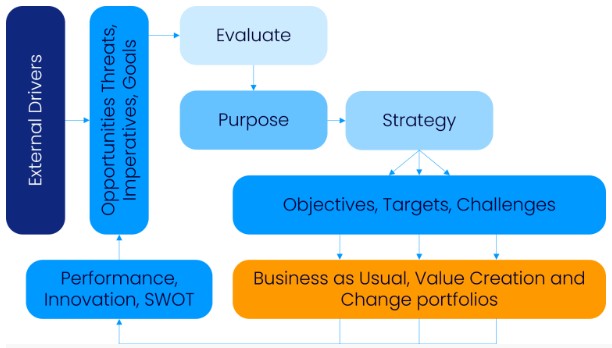

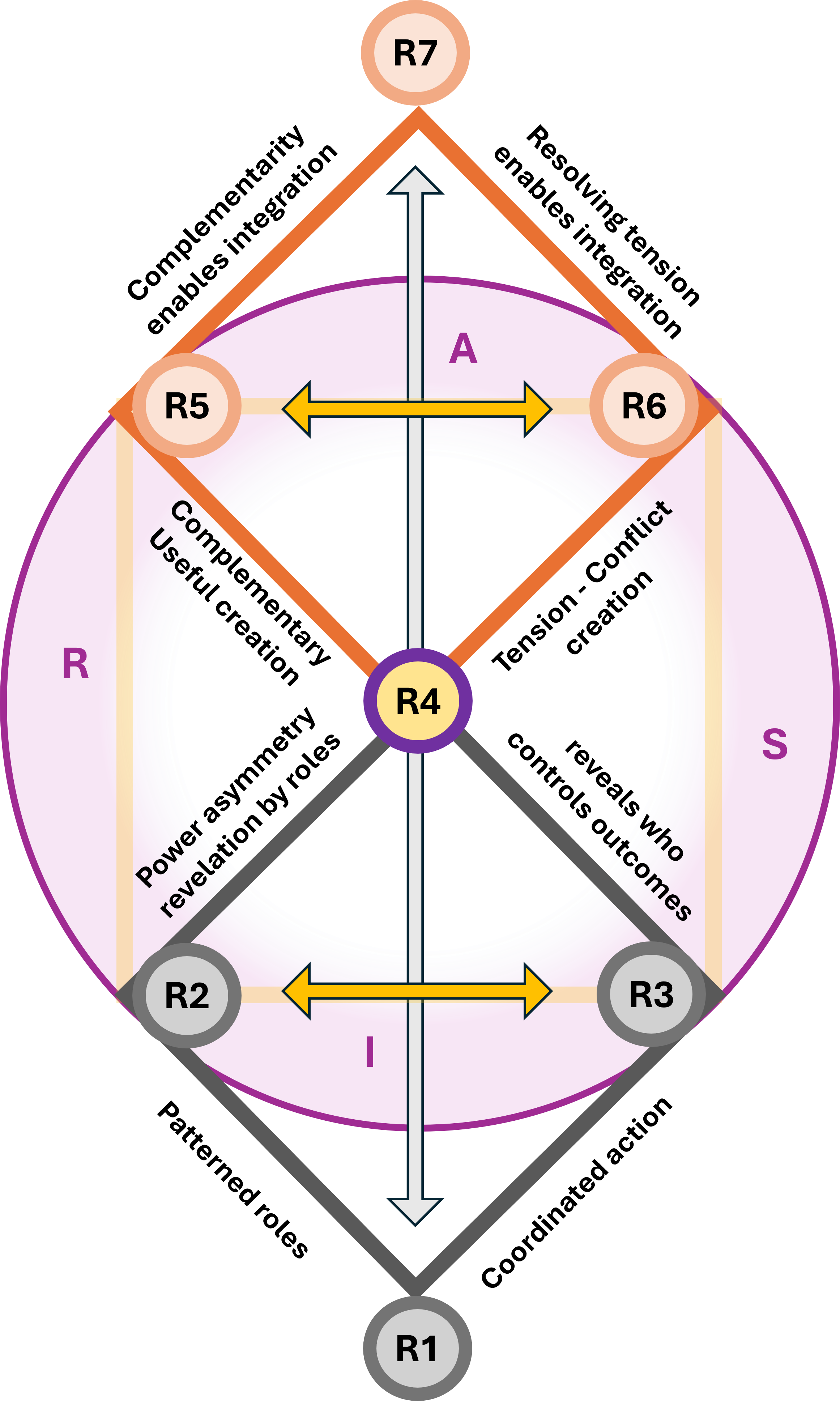

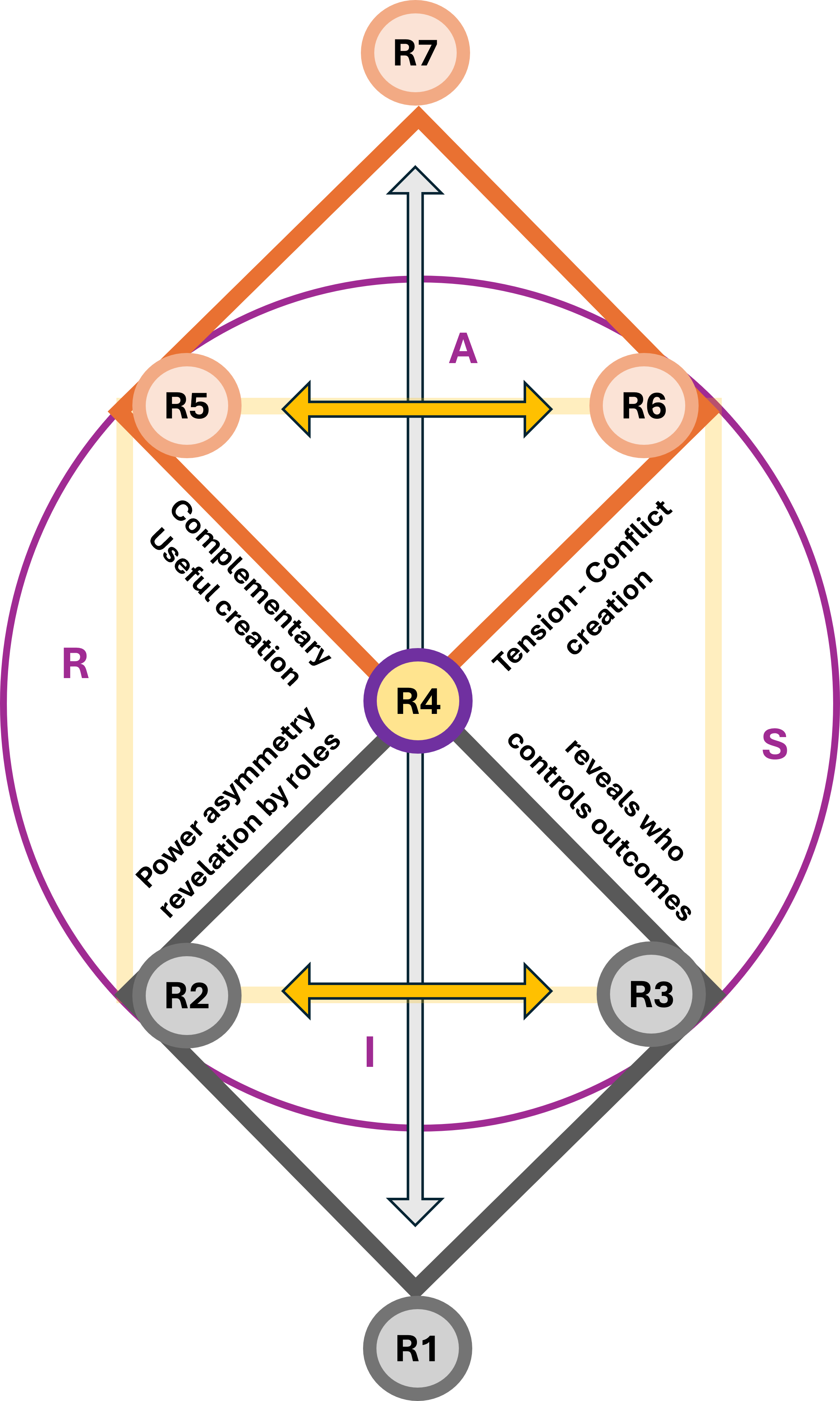

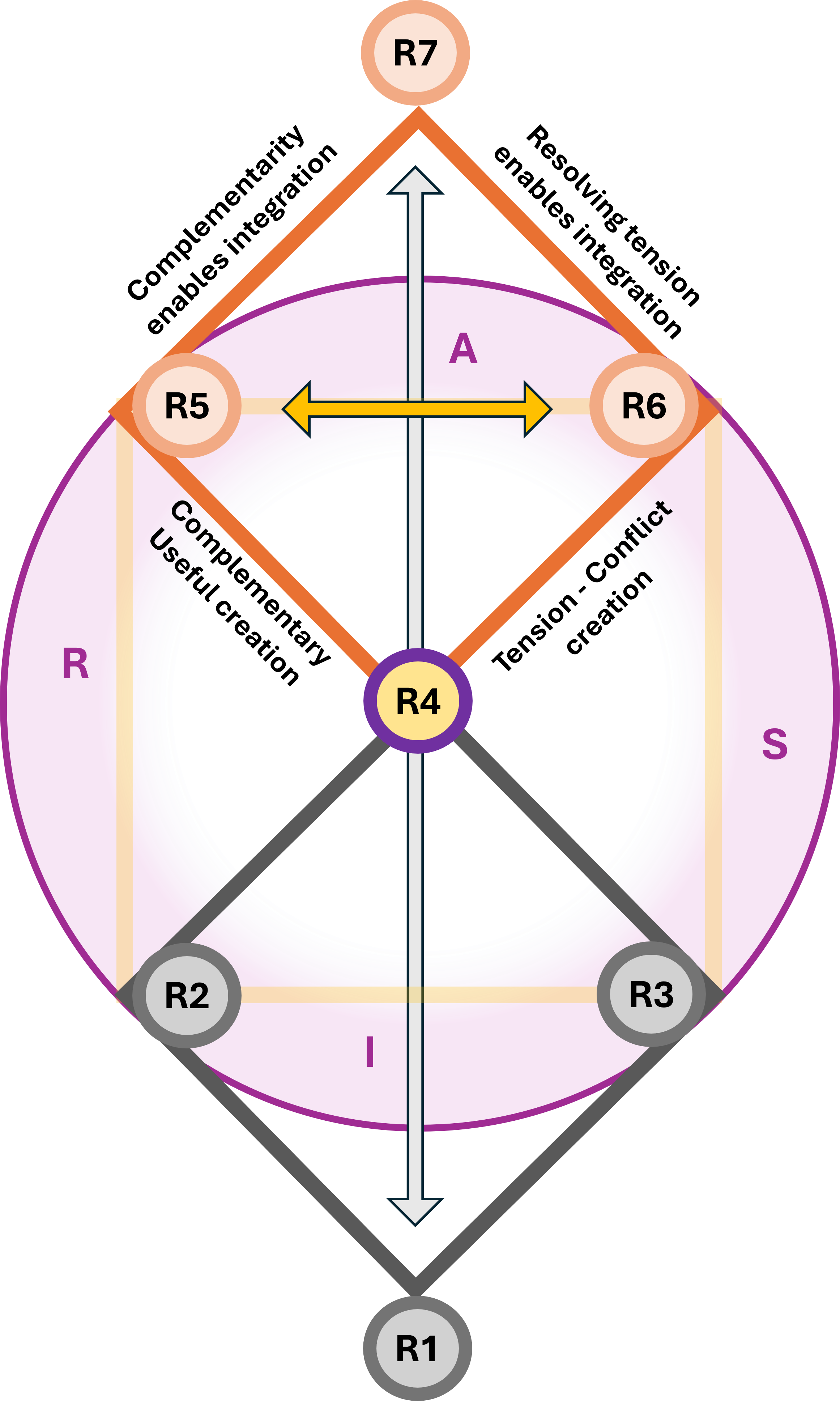

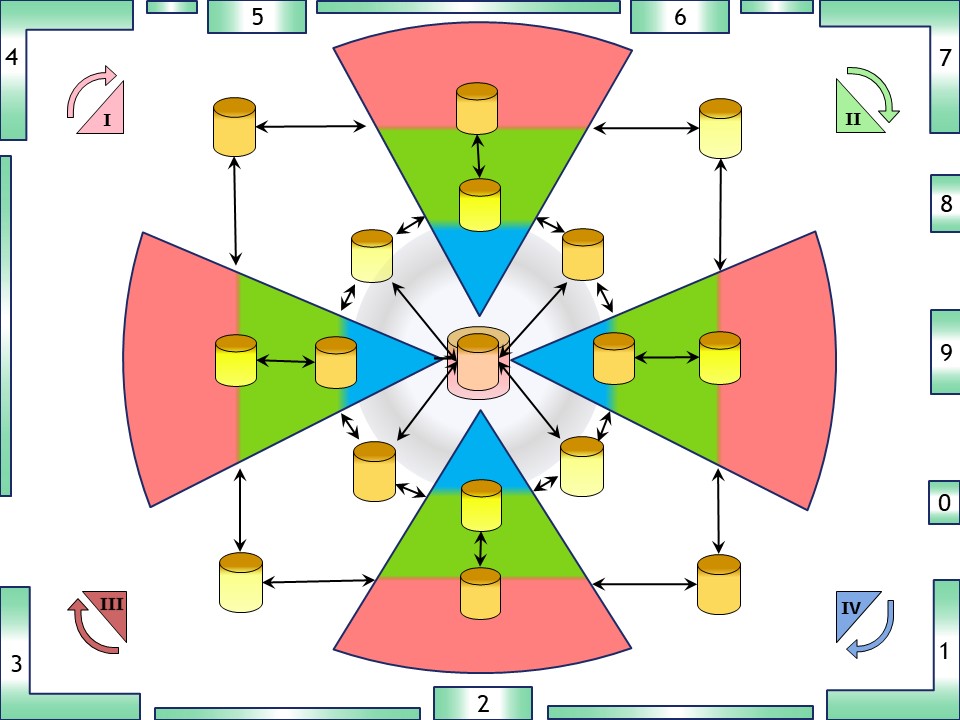

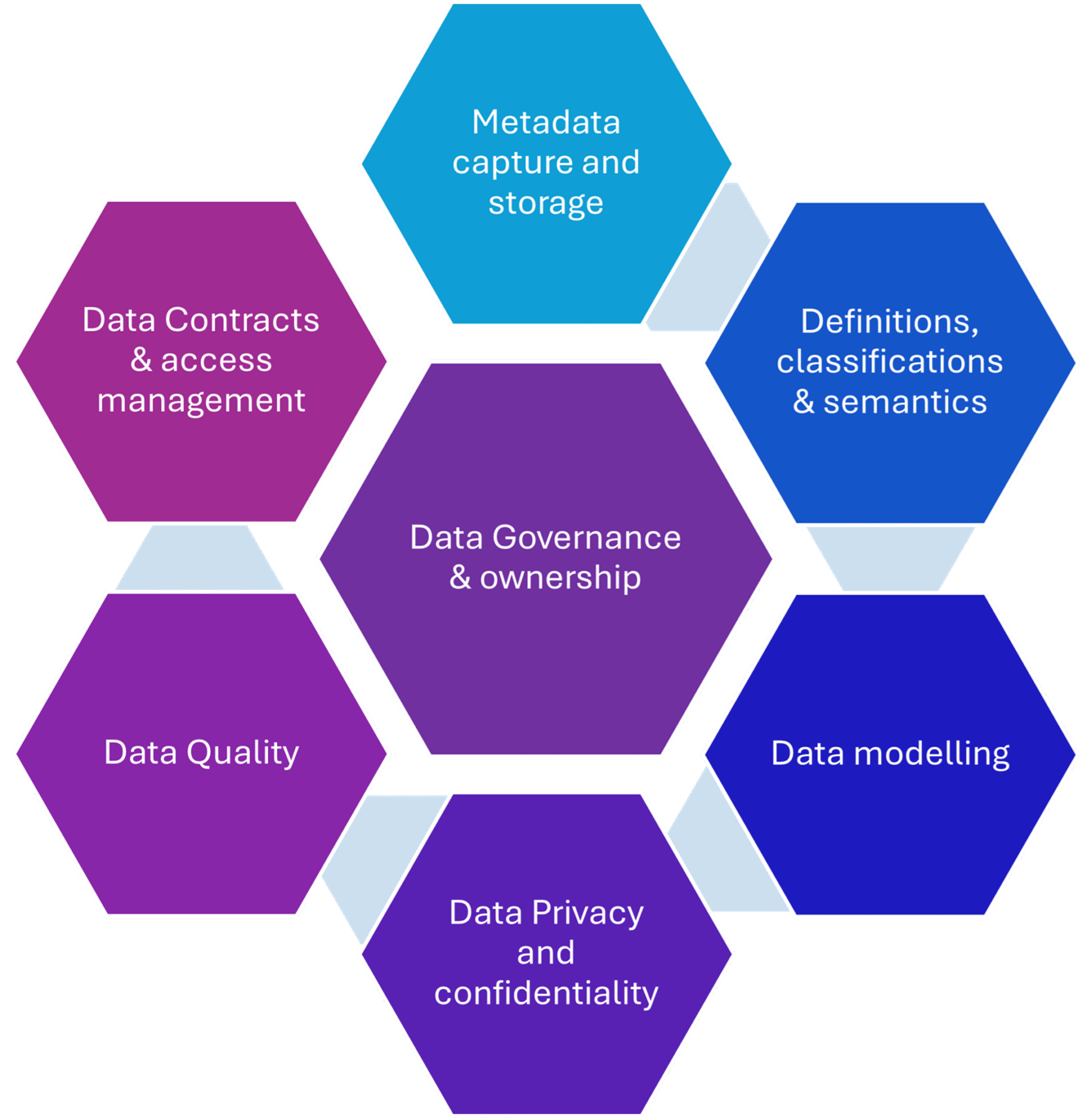

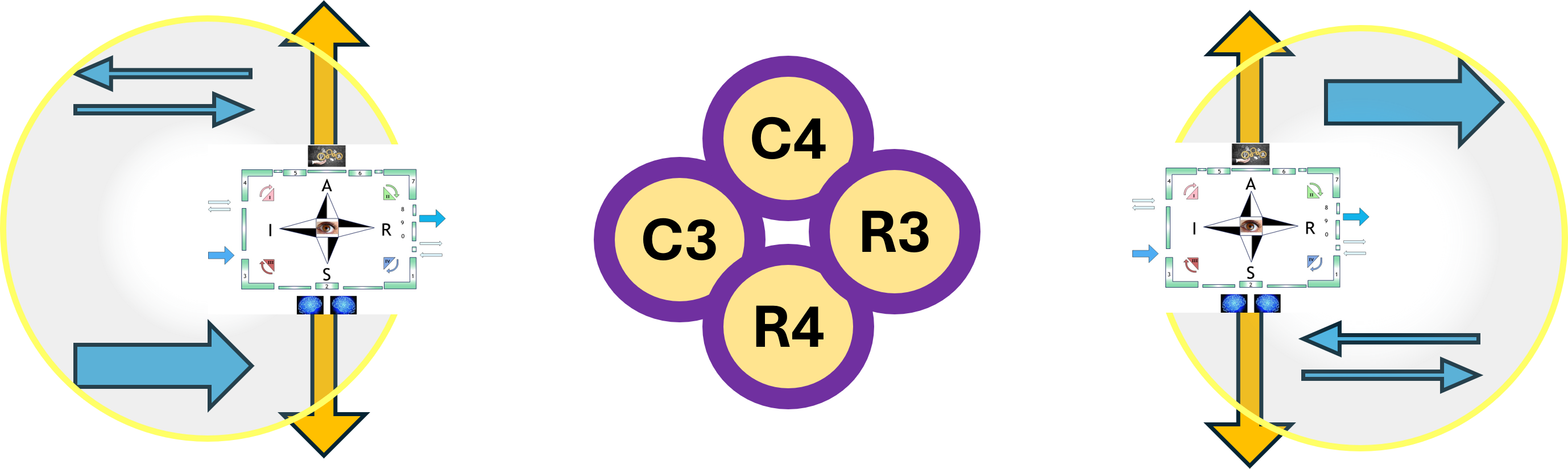

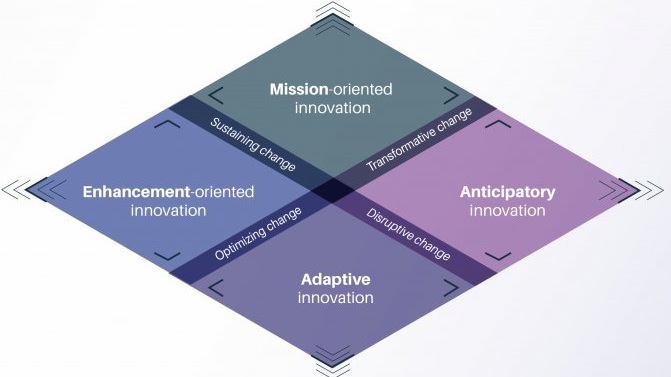

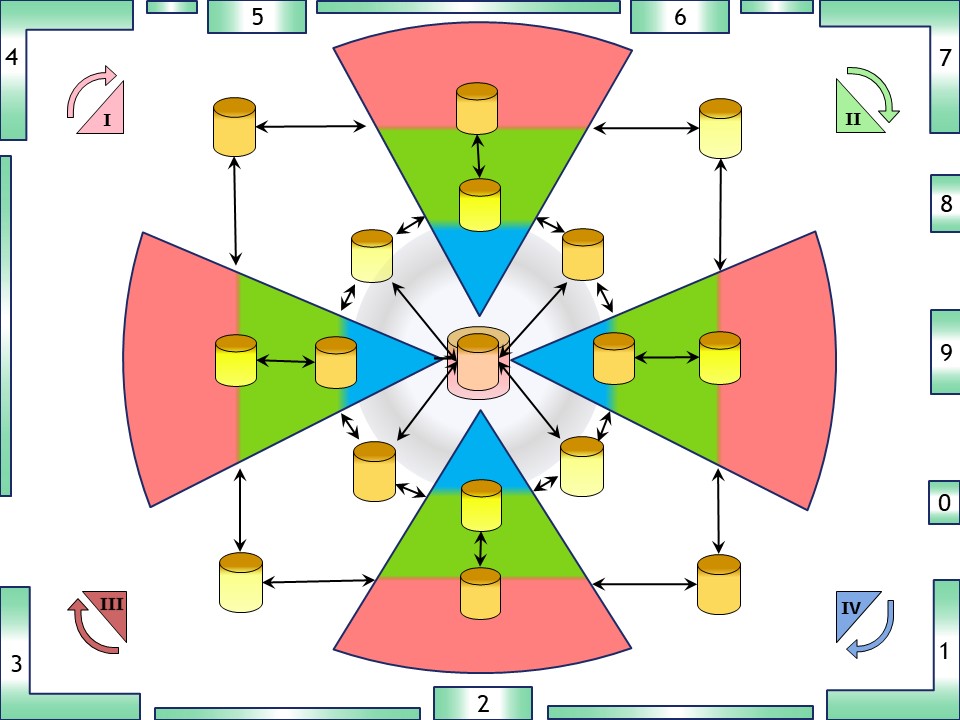

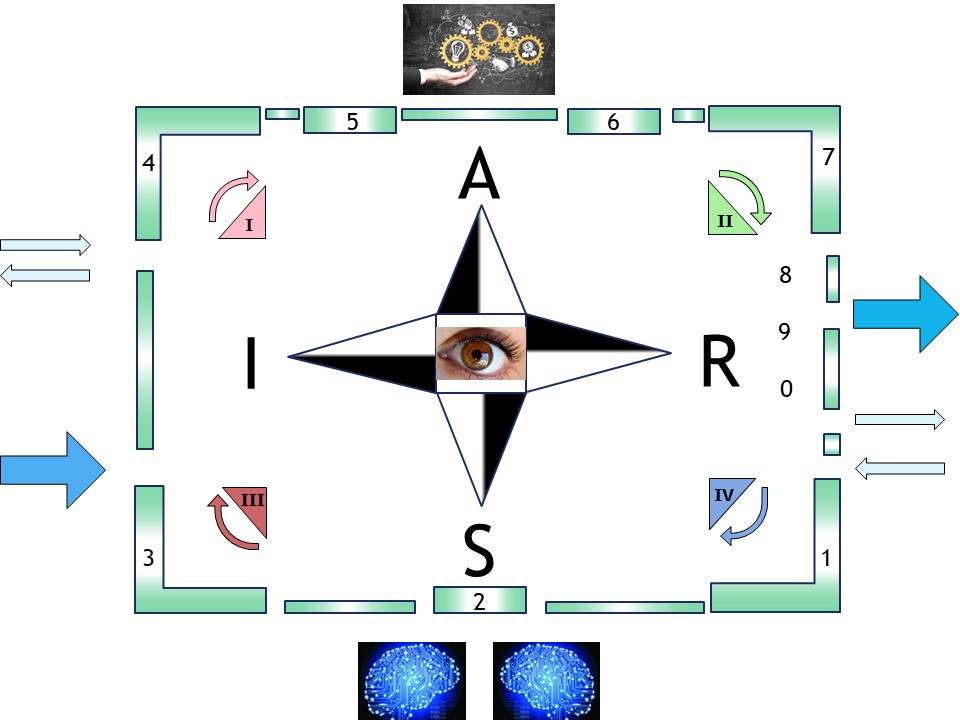

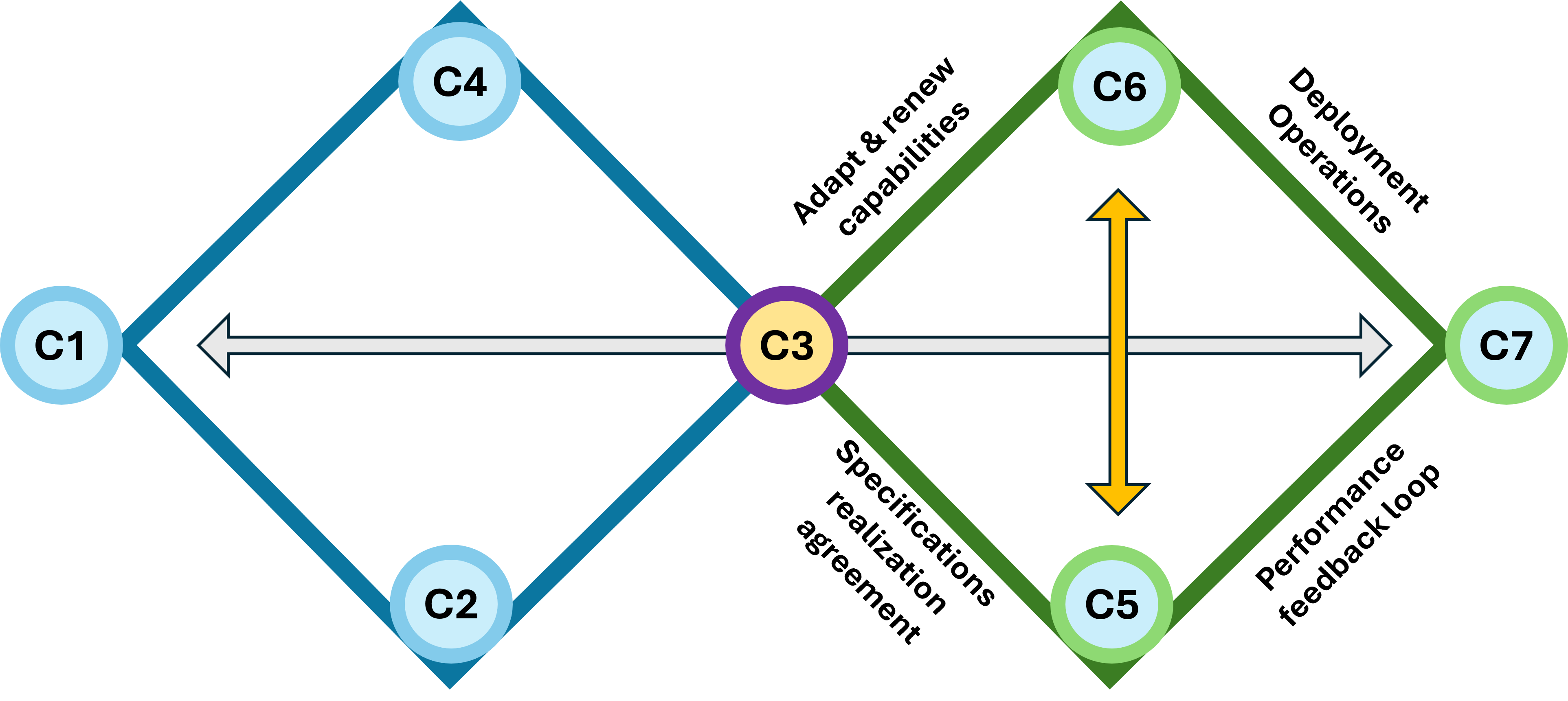

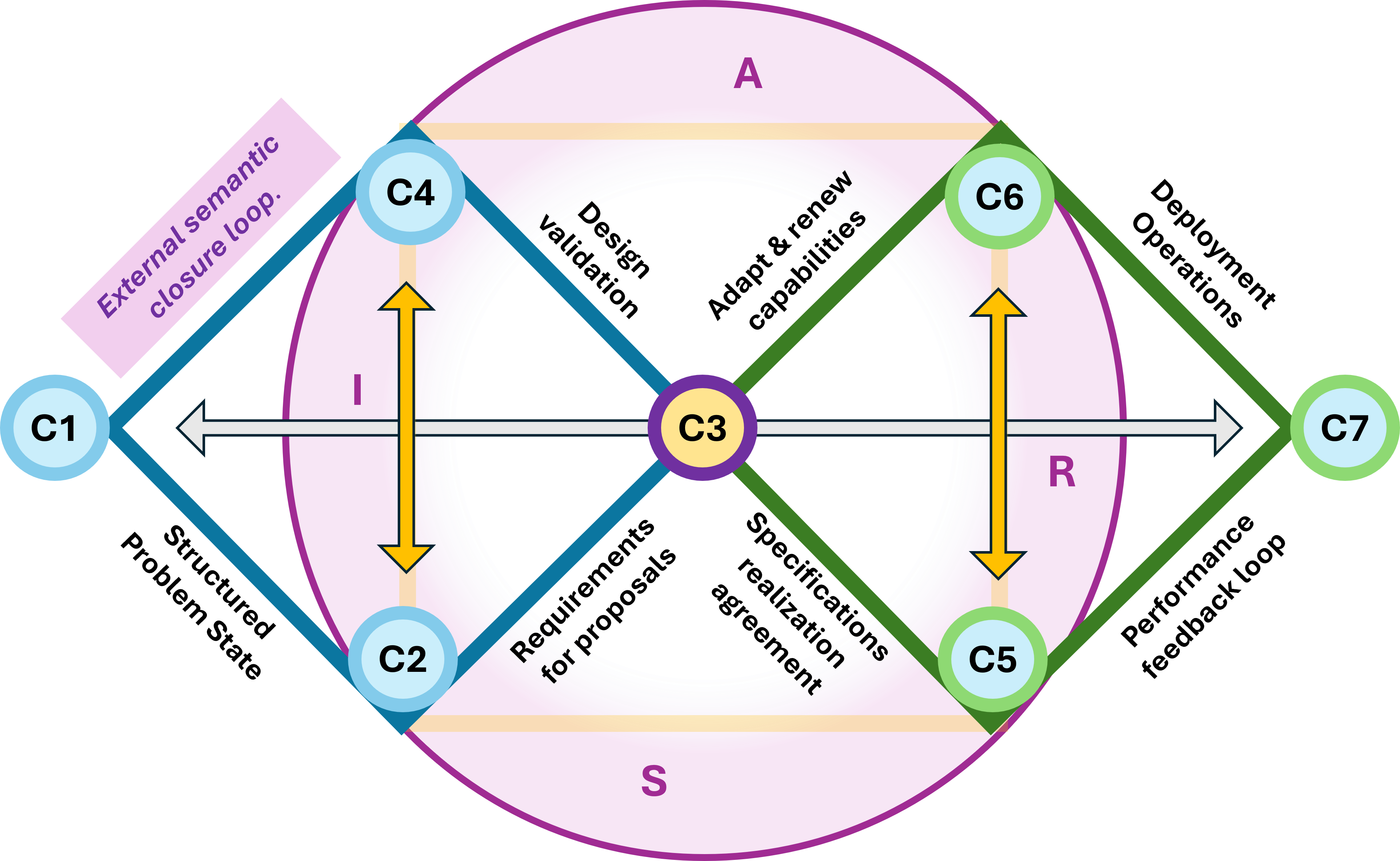

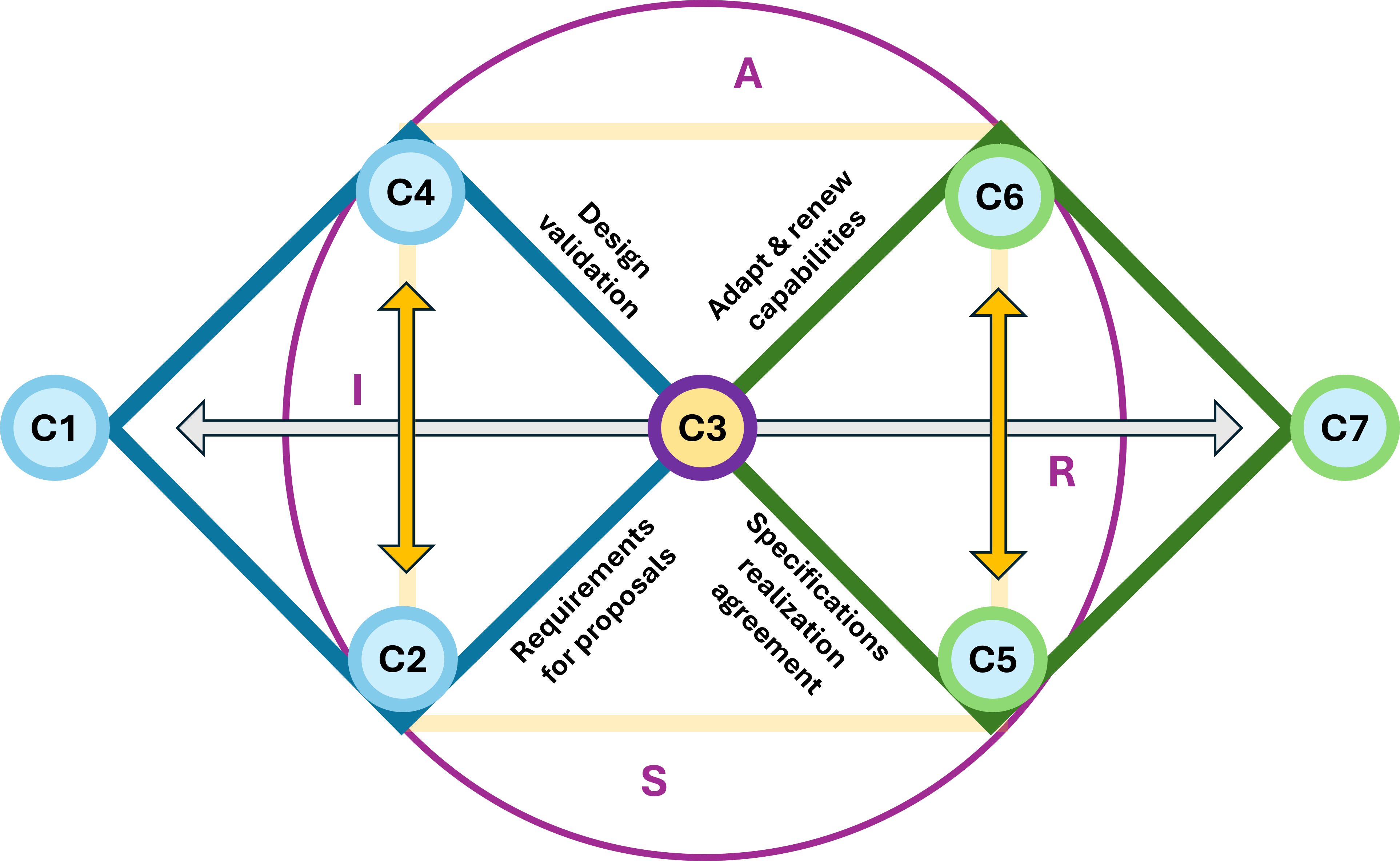

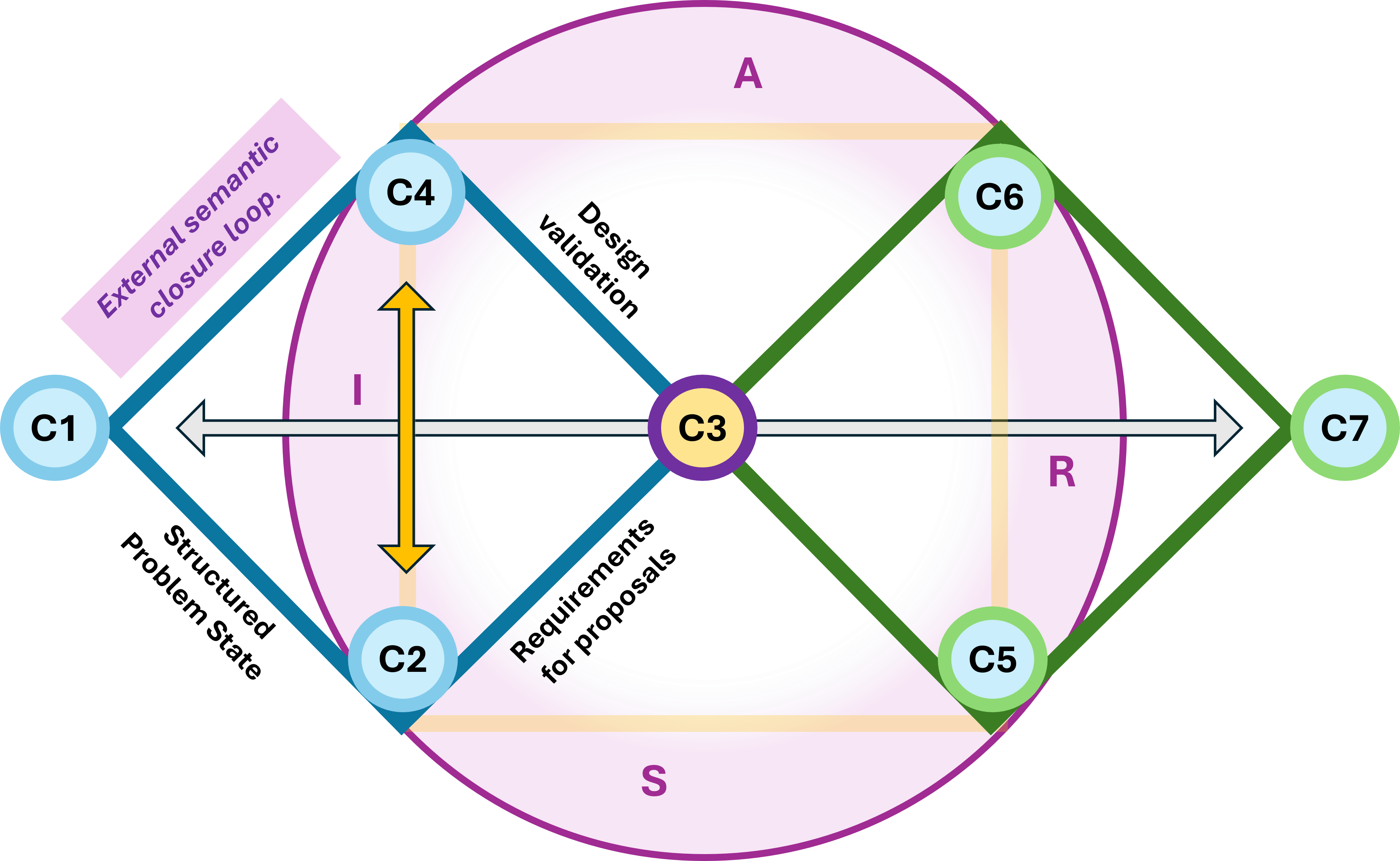

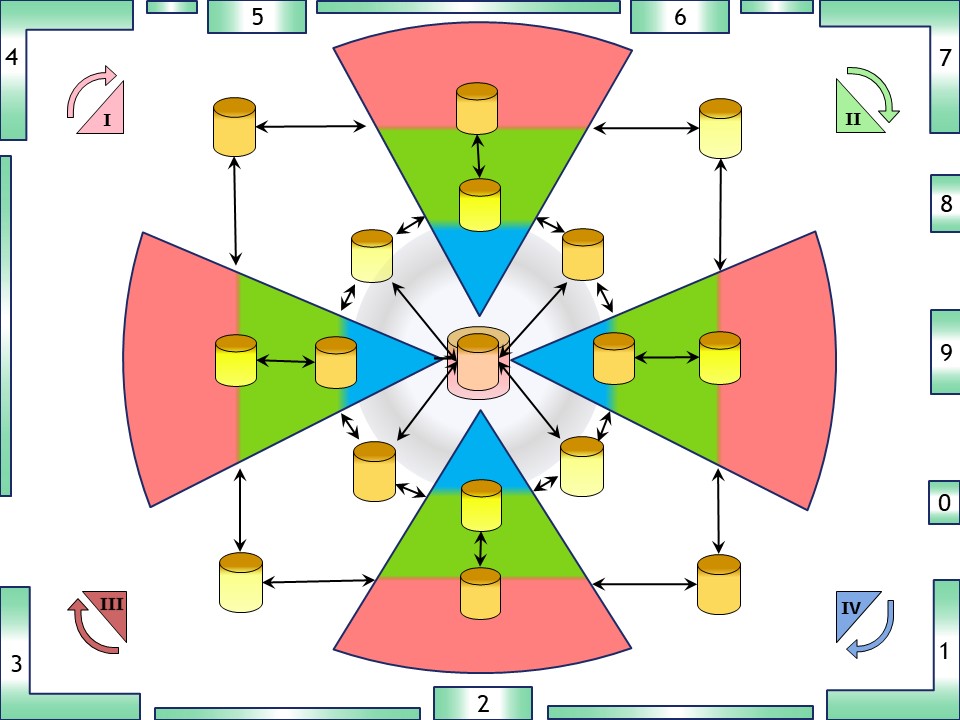

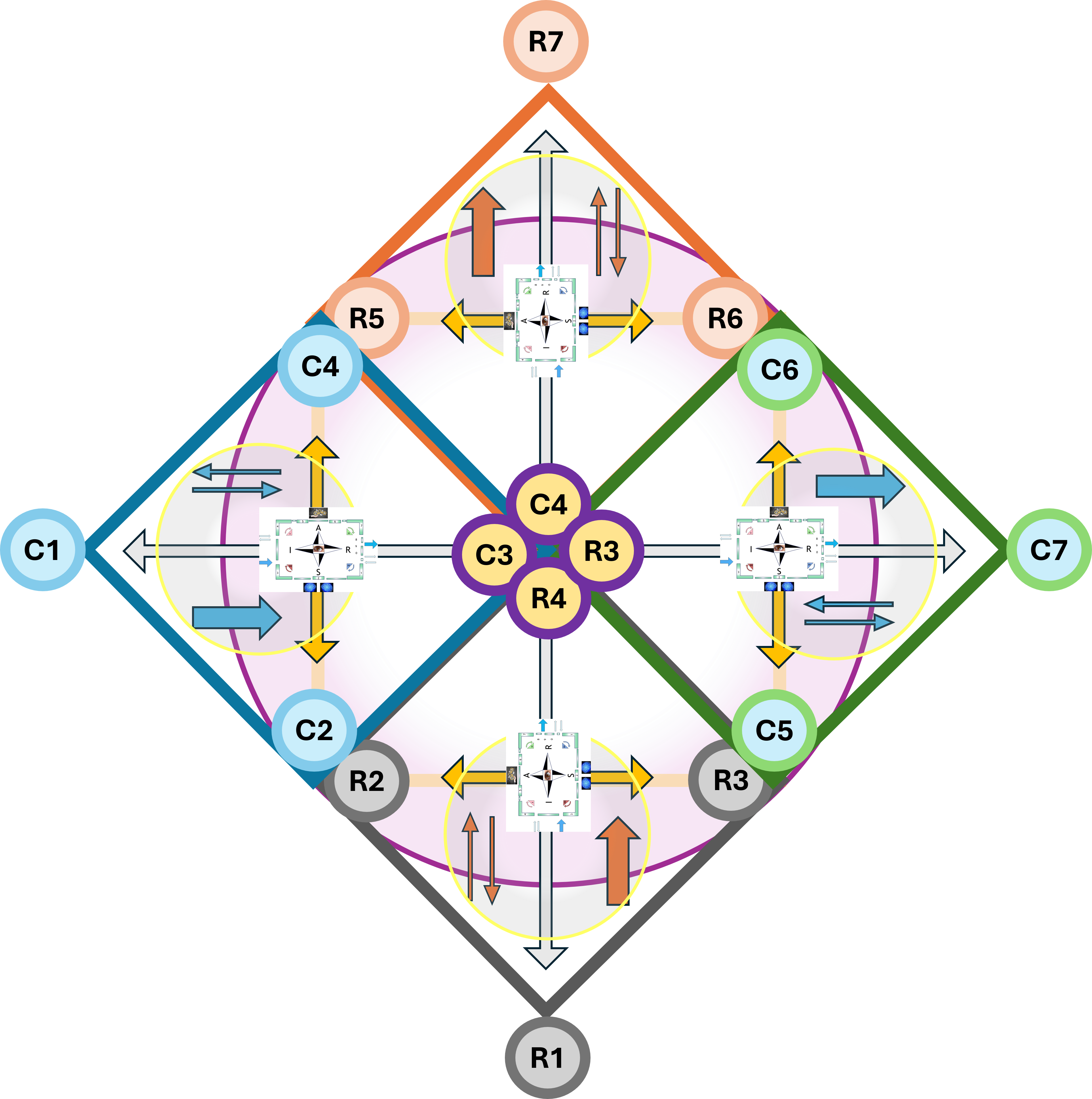

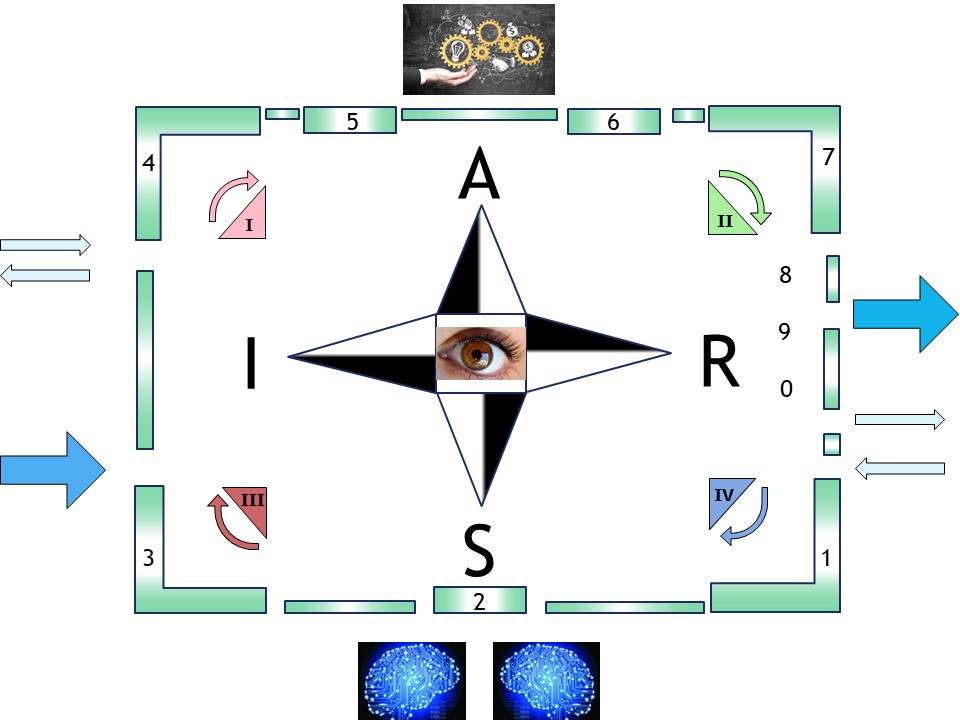

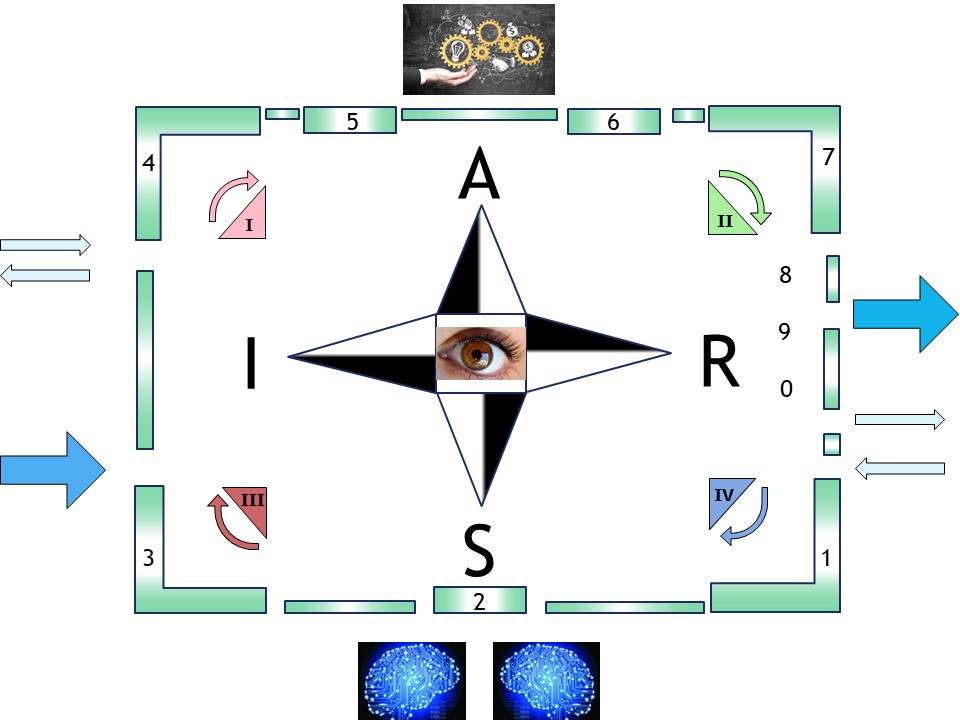

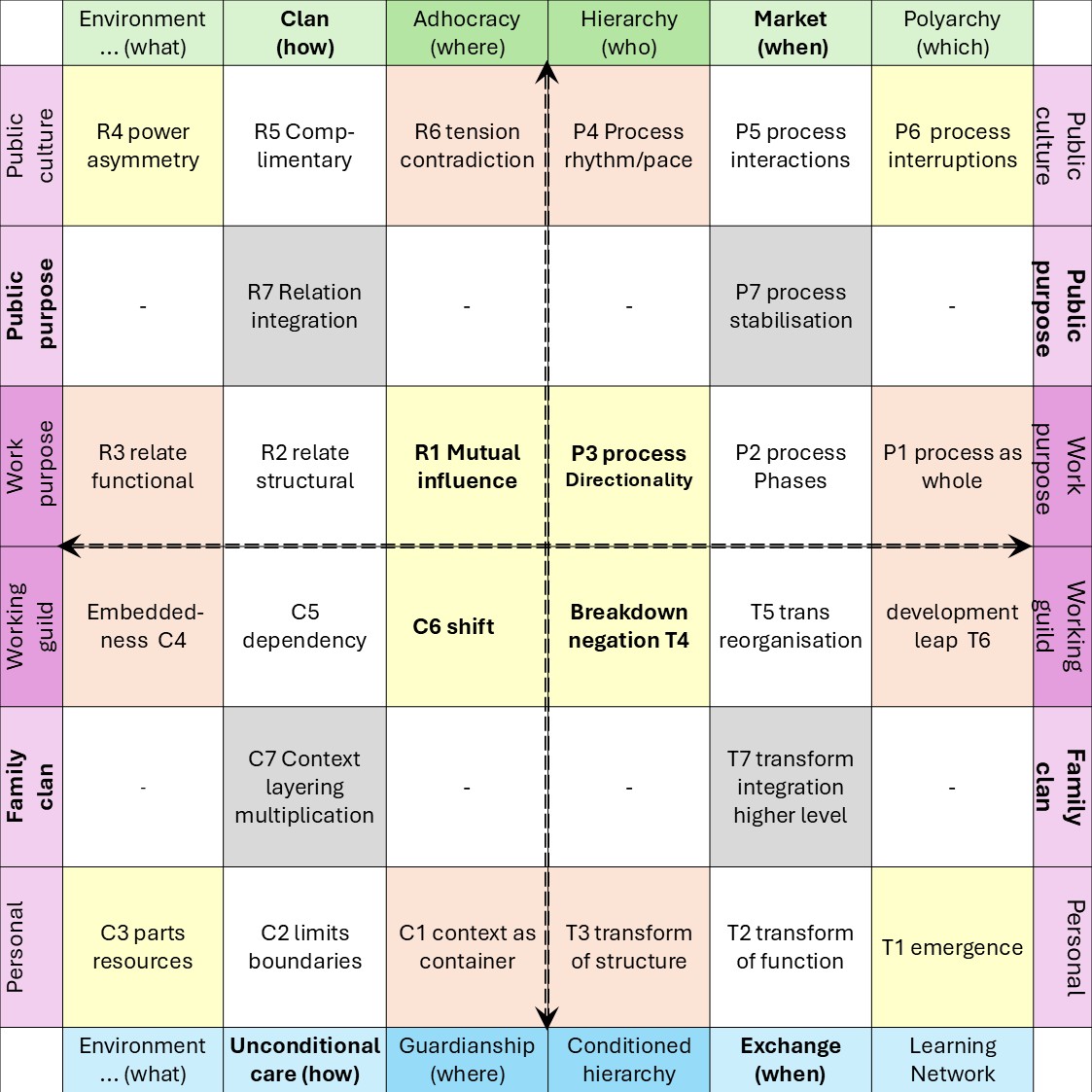

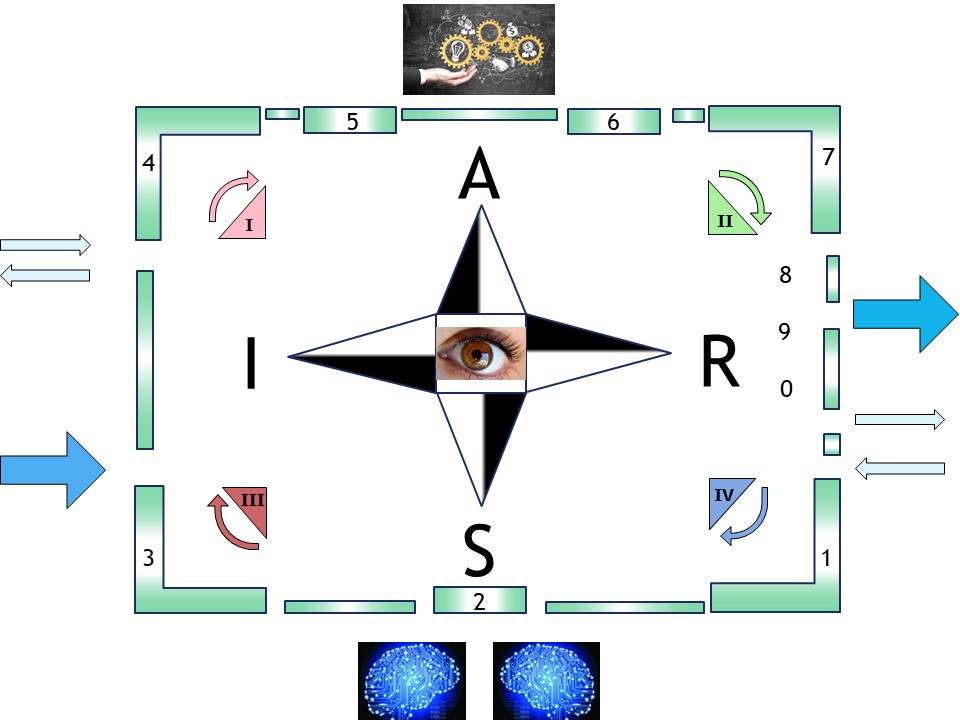

The foundation is a fractal model used to organize thought and action. It maps two dimensions:

- Horizontal (Relational Scales) : Sense ➡ Act ➡ Reflect.

- Vertical (Contextual Scales): Context ➡ Process ➡ Outcome.

⚖️

By intersecting these, the framework identifies specific "cells" for organizational health (e.g., "Problem" is the intersection of Context and Sense; "Execution" is Process and Act).

In situations where a 3*3 approach is not rich enough, extending it to a 6*6 approach solves that.
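As an illustration, the 3*3 model can be sketched as a small lookup grid. Only the three cells that this page names ("Problem", "Execution", "Purpose") are labelled; the other six cells are left empty on purpose, and the function name `missing_for_closure` is a hypothetical helper, not part of the framework.

```python
from itertools import product

HORIZONTAL = ("Sense", "Act", "Reflect")        # relational scale
VERTICAL = ("Context", "Process", "Outcome")    # contextual scale

# Only the cells named in the text get a label; the rest stay None.
NAMED_CELLS = {
    ("Context", "Sense"): "Problem",
    ("Process", "Act"): "Execution",
    ("Outcome", "Reflect"): "Purpose",
}


def build_grid():
    """Return all 9 (vertical, horizontal) cells with their label, if any."""
    return {
        (v, h): NAMED_CELLS.get((v, h))
        for v, h in product(VERTICAL, HORIZONTAL)
    }


def missing_for_closure(covered):
    """List the named cells that have not been covered yet."""
    return sorted(name for name in NAMED_CELLS.values() if name not in covered)


grid = build_grid()
gaps = missing_for_closure({"Problem", "Execution"})  # "Purpose" is still open
```

A 6*6 variant would follow the same pattern with longer axis tuples.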

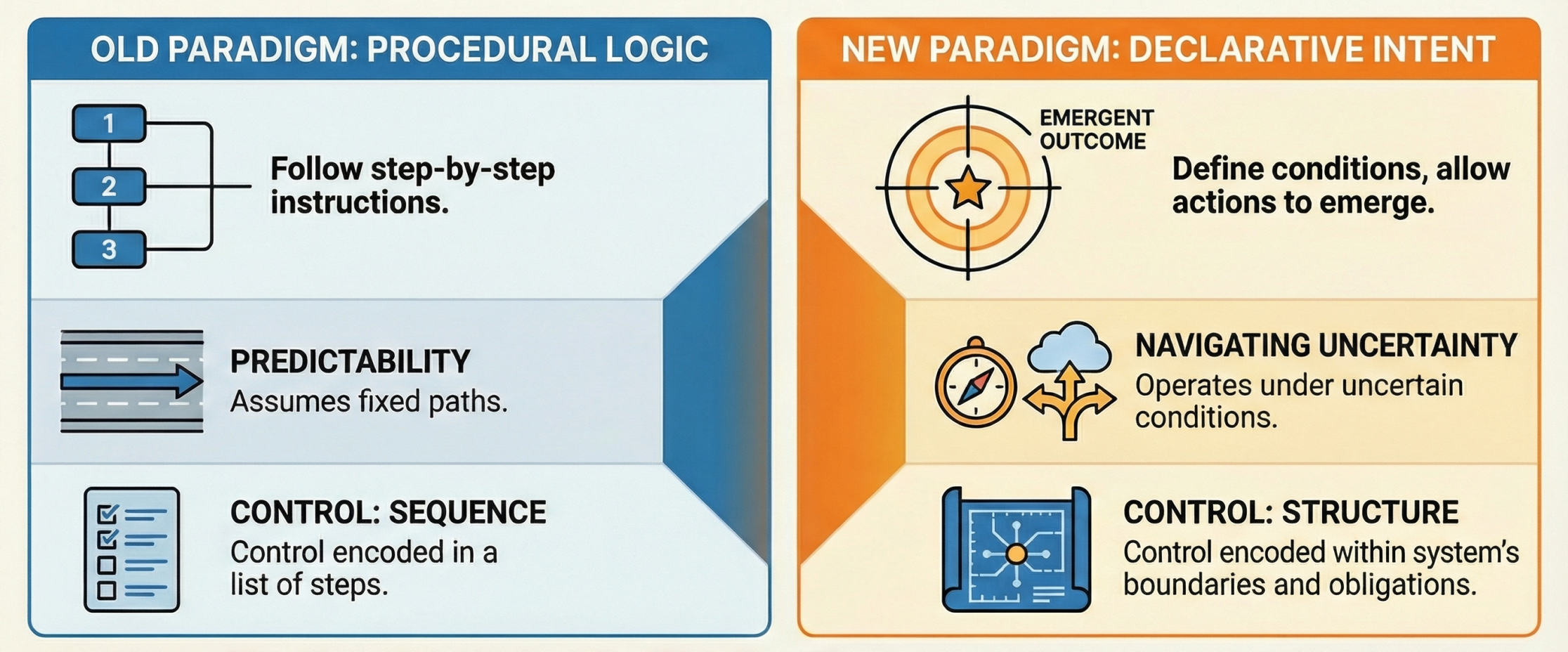

In complex environments (where humans are part of the system), traditional management fails because it ignores uncertainty and human bias.

The change proposal uses a rigorous "grammar" of distinctions to ensure that governance is recursive, fractal, and responsive to reality rather than just "fantasy" plans.

An approach to understanding complex problems:

- Actor Perspective: The narrative of the person closest to the problem.

- Human Factors: Forces shaping behavior (incentives, power, norms).

- Ecosystem View: How other actors experience the same situation.

- Restated Problem: A synthesis that includes the history of failed prior attempts.

- Feasible Influence: Determining what capabilities are actually needed to effect

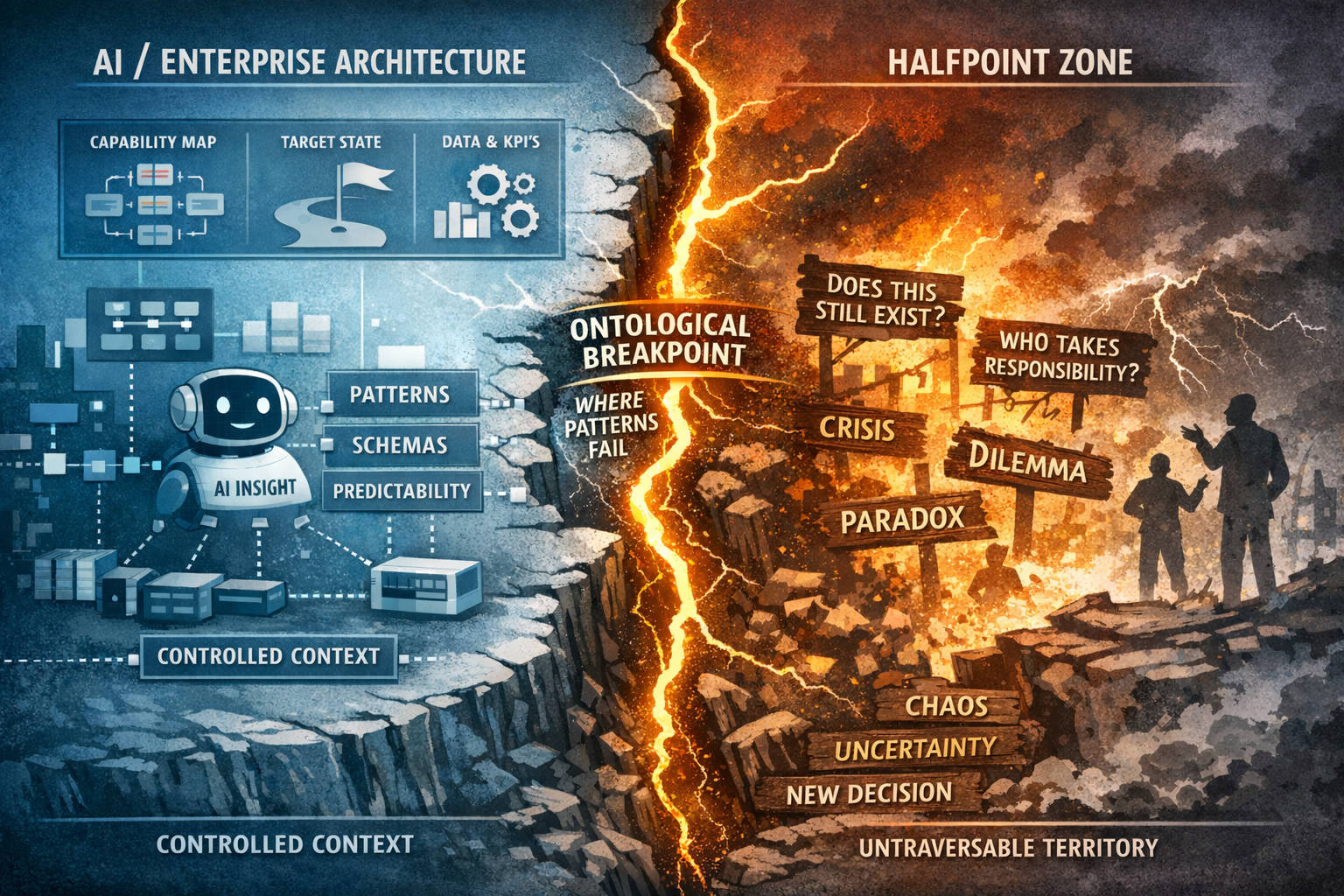

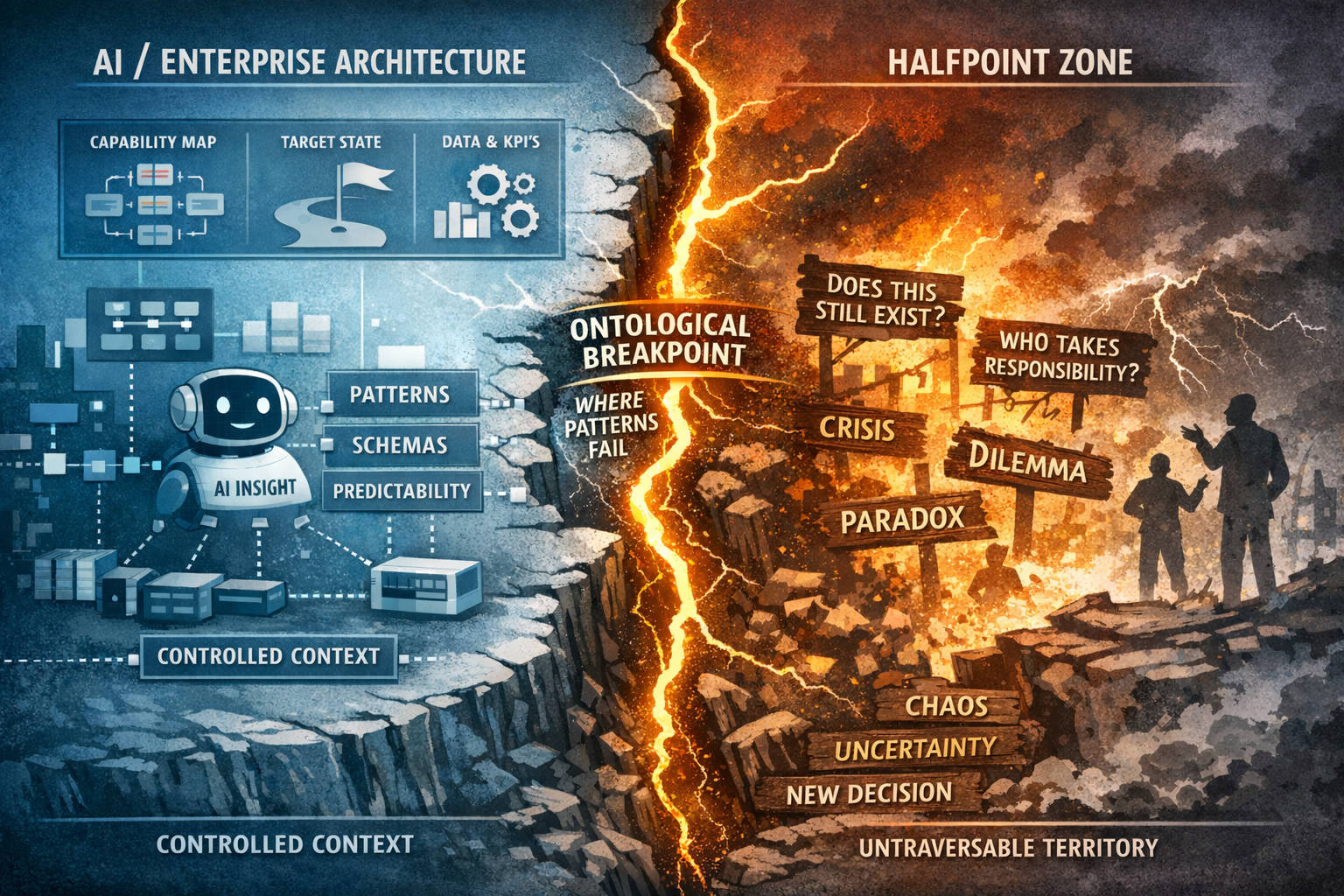

Moving away from just asking for AI results (appeasing) and toward requiring AI reasoning.

Transitioning EA from a static descriptive role into an active participant in "integrated governance."

Diagnosing "broken systems" by looking at where the "sense-act-reflect" loop is interrupted (e.g., "drifting" happens if you only look around but never back).
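The diagnosis of an interrupted loop can be sketched as a small function. The three single-view outcomes (fantasy, drifting, paralysis) come from the steering table on this page; the label "incomplete" for zero or two views is an assumption added here, because the page does not name those cases.

```python
# Outcome when only one of the three views is used (from the steering table).
ONLY_ONE_VIEW = {
    "ahead": "fantasy",
    "around": "drifting",
    "back": "paralysis",
}


def diagnose(views):
    """Classify a steering situation by the views that were actually taken."""
    views = set(views)
    unknown = views - set(ONLY_ONE_VIEW)
    if unknown:
        raise ValueError(f"unknown views: {sorted(unknown)}")
    if views == set(ONLY_ONE_VIEW):
        return "closure"            # all three views taken together
    if len(views) == 1:
        return ONLY_ONE_VIEW[next(iter(views))]
    return "incomplete"             # assumption: not named in the text


result = diagnose({"around"})       # only looking around
```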

🎭

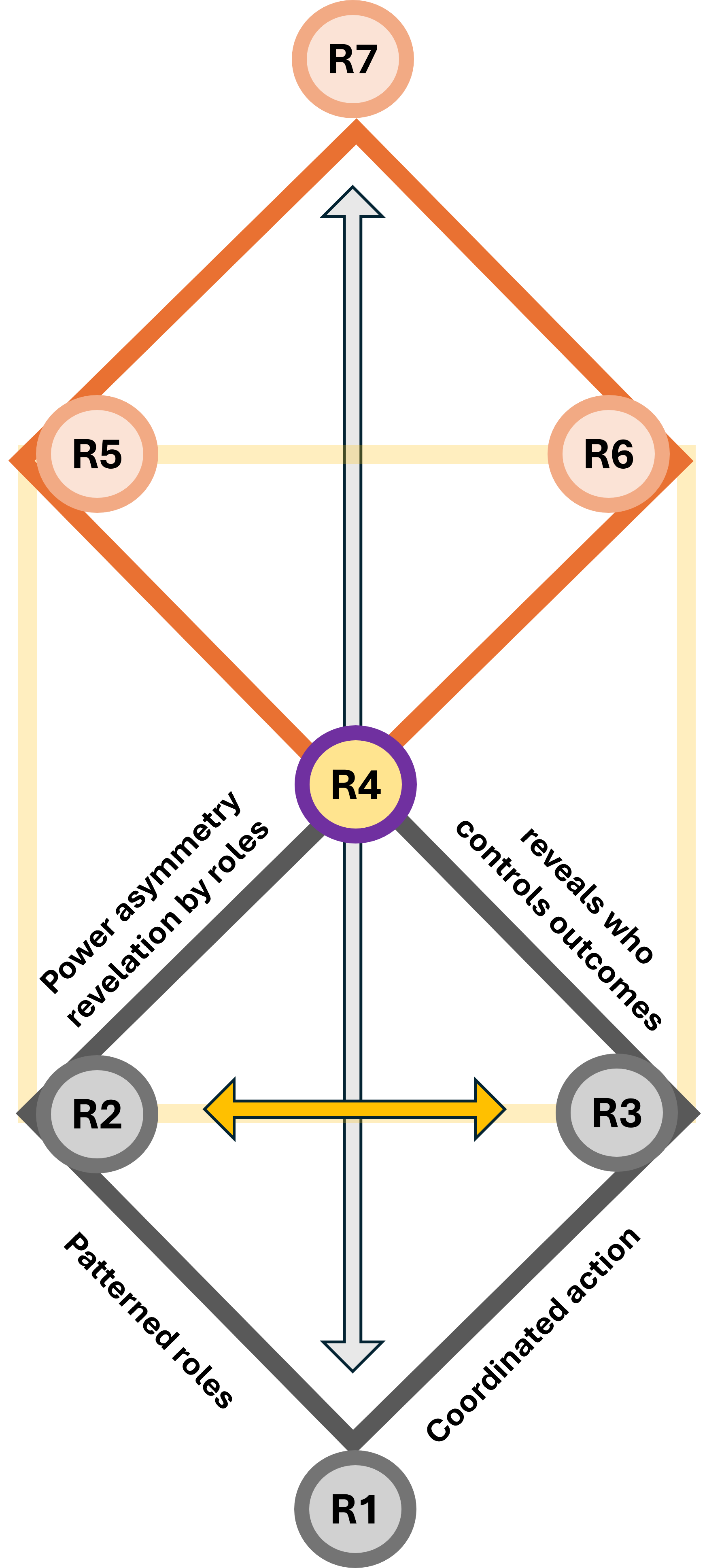

The distinction between State Points (referred to as "Semantic Stable Cells") and Halfway Points is about the difference between being somewhere and transitioning between stages of understanding.

State Points are where we are competent and consistent.

Halfway Points are where we are stretching and pretending.

🔰

A goal among others is to help in seeing when you are "stuck" in a halfway point so you can move toward a new stable state.

This requires seeing when the language of progress is used to hide a lack of actual change.

State Points and Halfway Points, a recursive problem in understanding

The first chapters are technological, they deal with the "Serve" mindset.

🔰

Technology is not just about computers; it is the "Grammar" of the system.

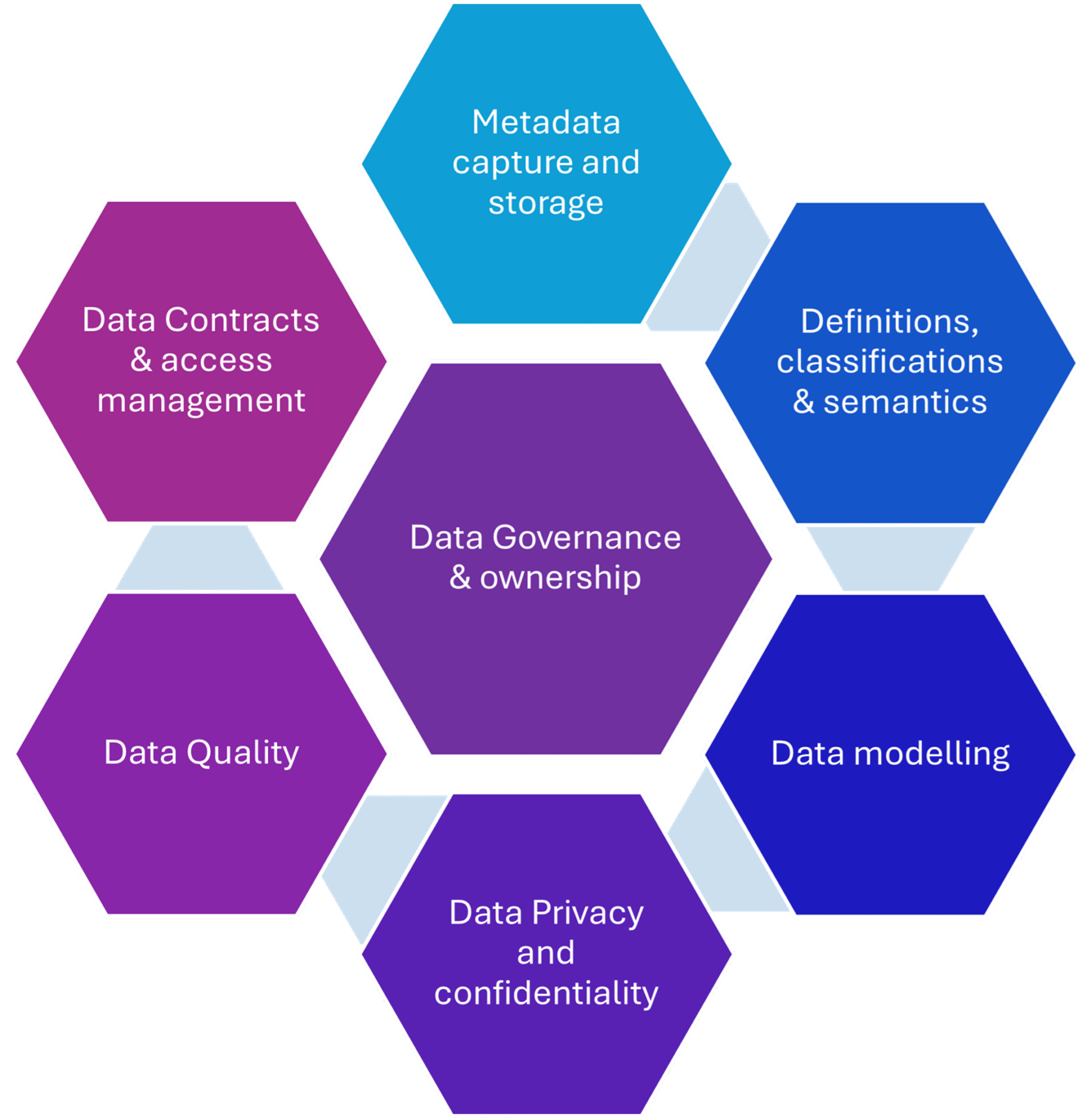

The focus is on Ontology (how we define things), Taxonomies (how we categorize things), and Data Topology (where things are).

The Goal: It aims to build the "Reference Frame." Before you can think about complex "Halfway Points," you need a stable technological language.

If the "State Points" aren't defined correctly here, the transitions later will be pure chaos.

- Technology is "Semantic Blind": Technology alone cannot "close" a system.

You can have the best technology structure in the world, but it is "incomplete" until a human applies Sense-Making to it.

- Focus on the "How," not the "What": The chapter describes the geometry of information (how it flows) but leaves the specific technical "dimensions" empty.

Every organization has different tools (AI, SQL, SAP, etc.) and they change in time.

The chapter is a "blank map" meant to be filled with your specific technical reality at a moment.

- Invoke Harold Leavitt and Talcott Parsons: Bridging the gap between "Management Science" (the technical engine) and "Sociology" (the social system).

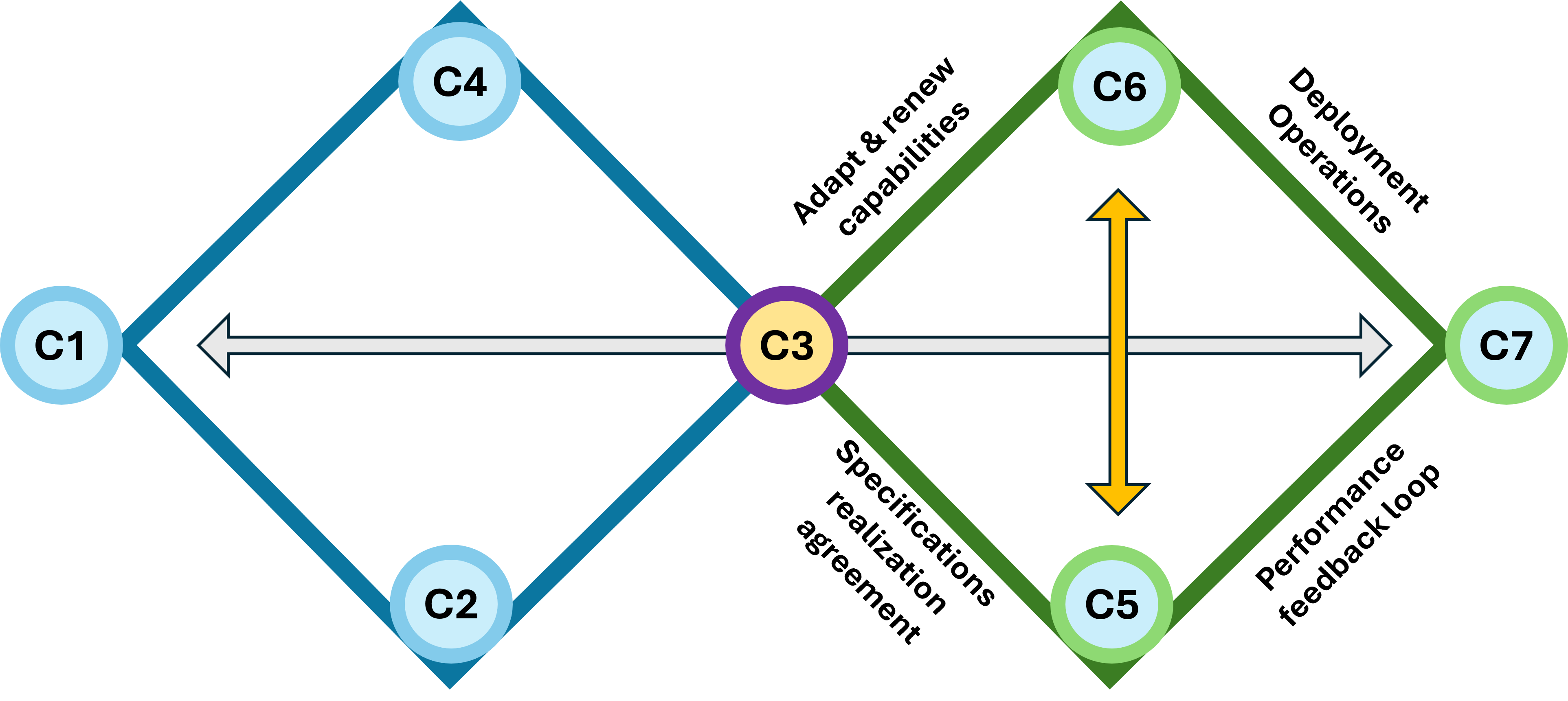

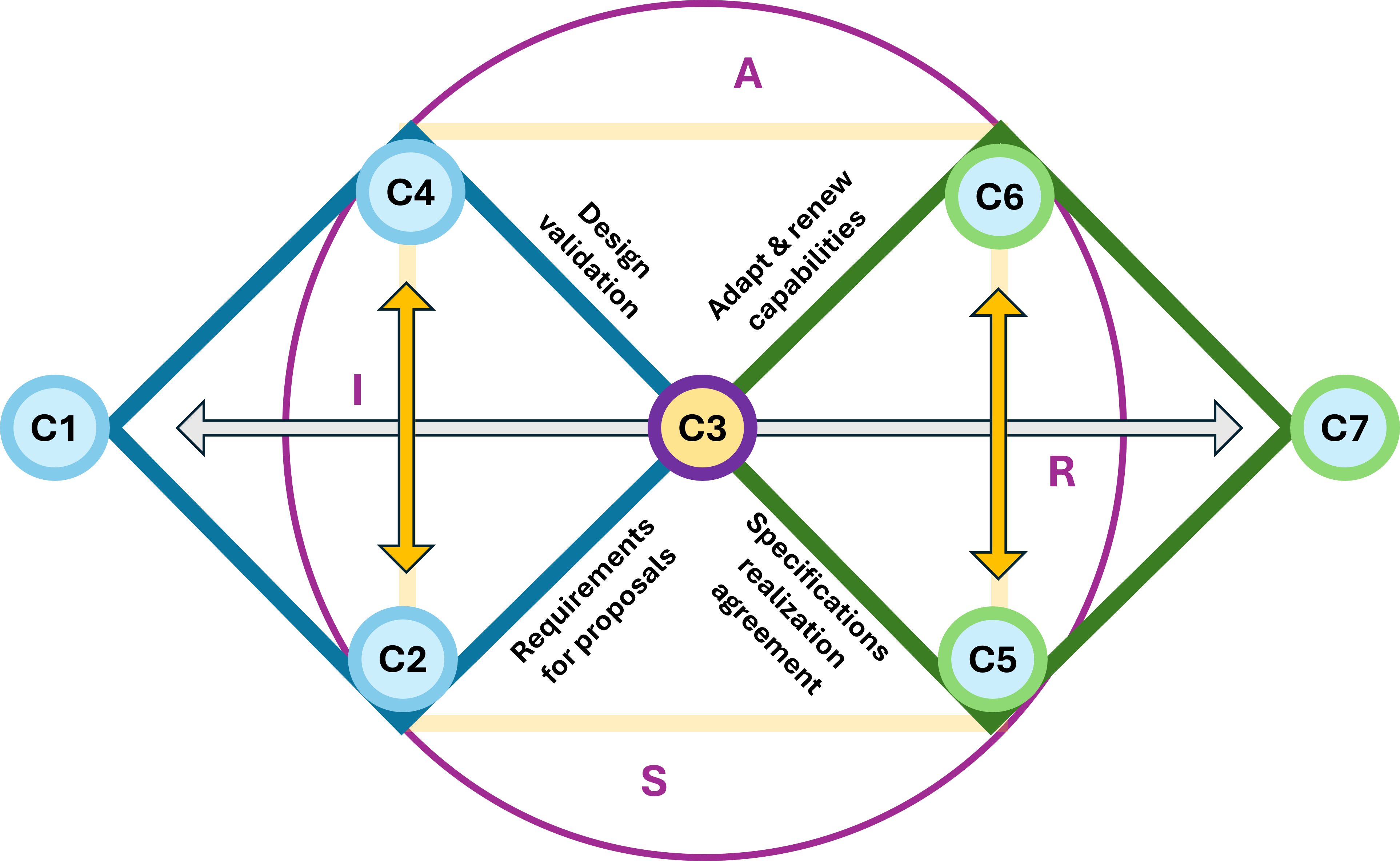

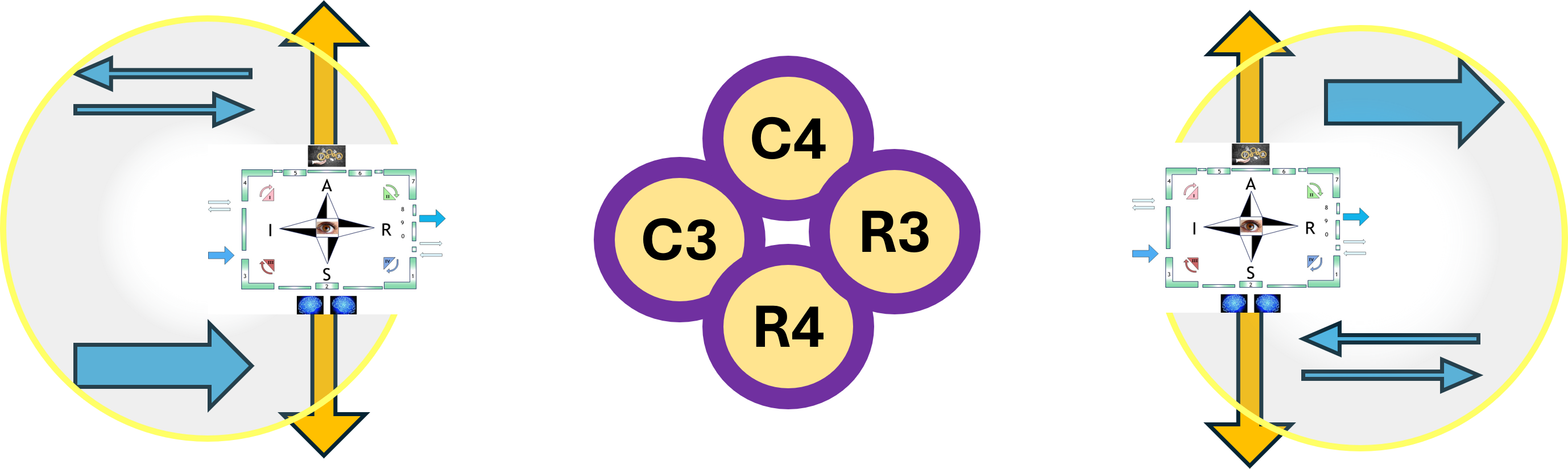

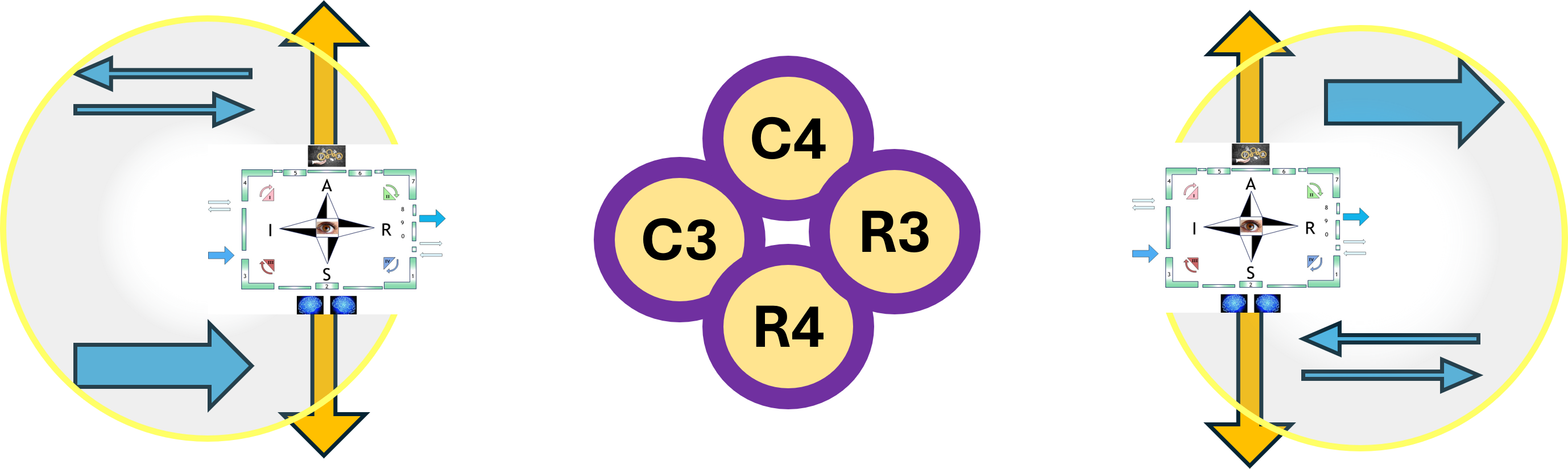

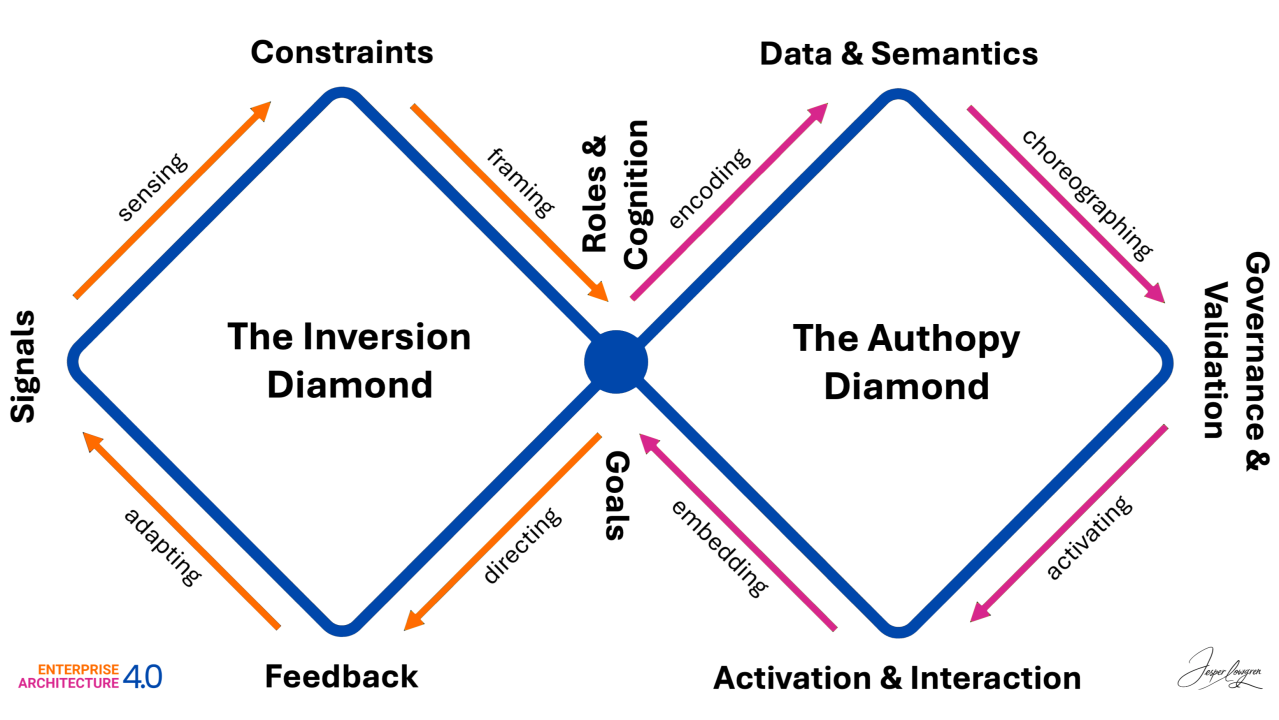

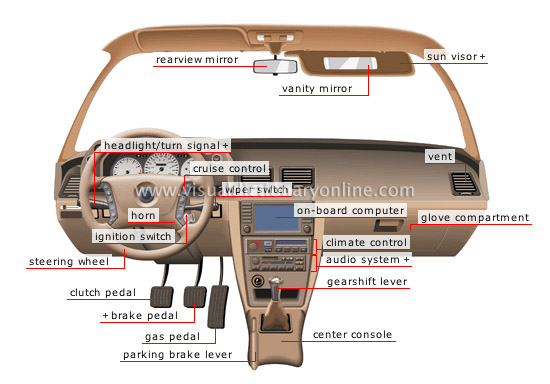

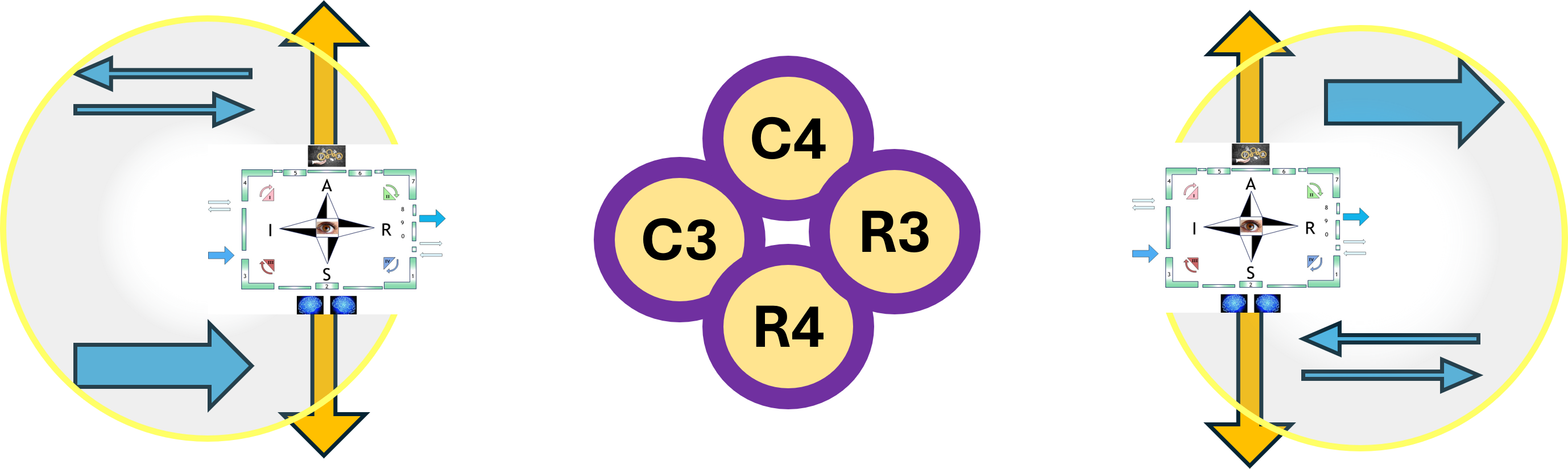

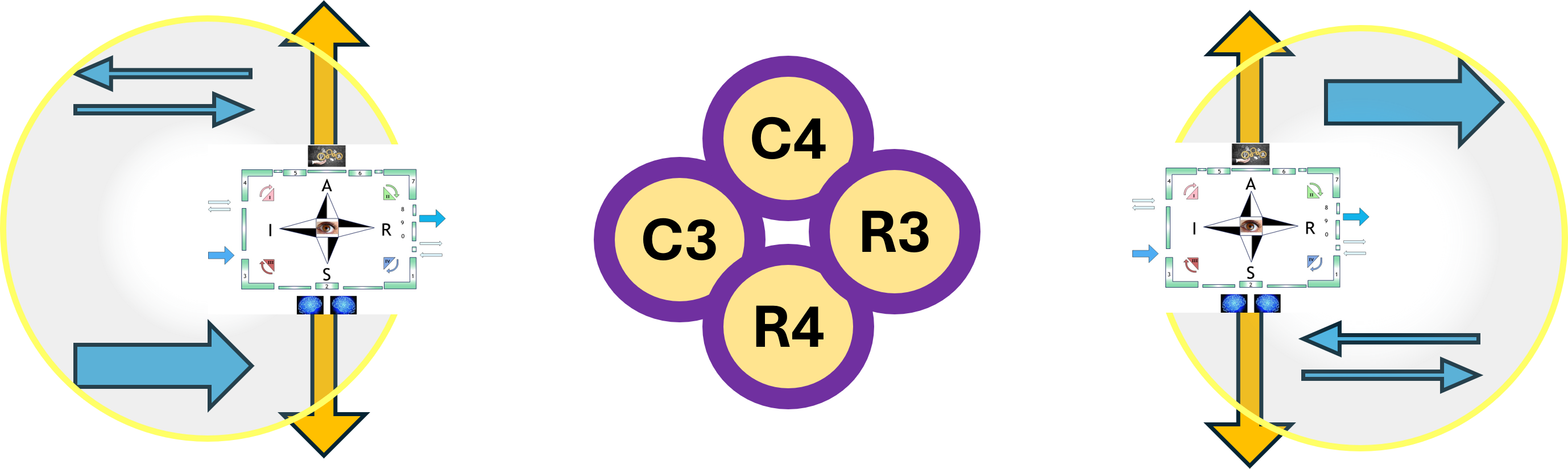

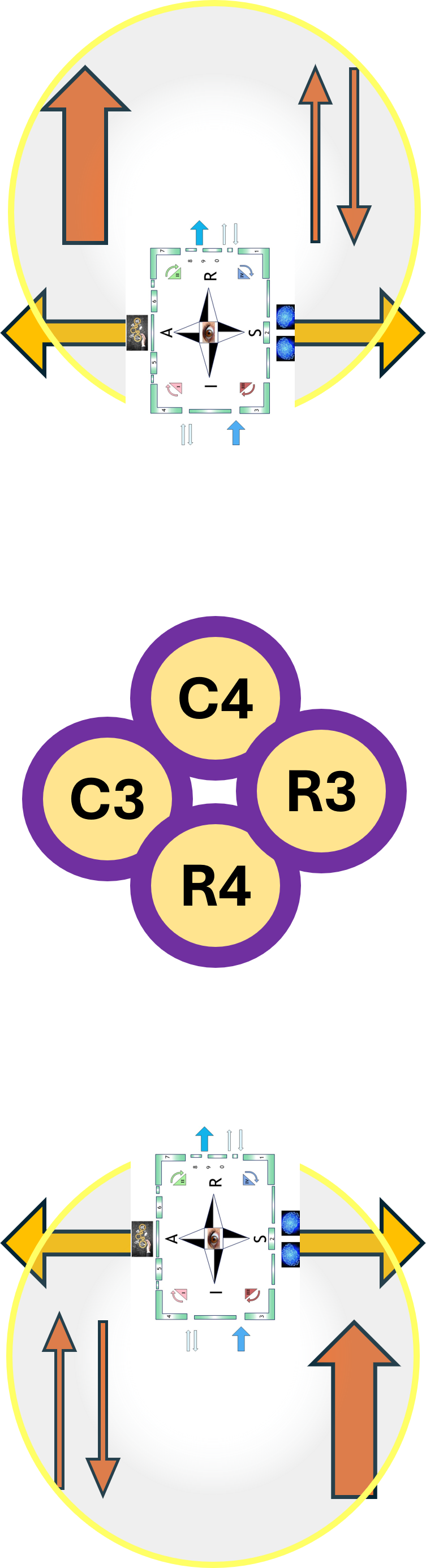

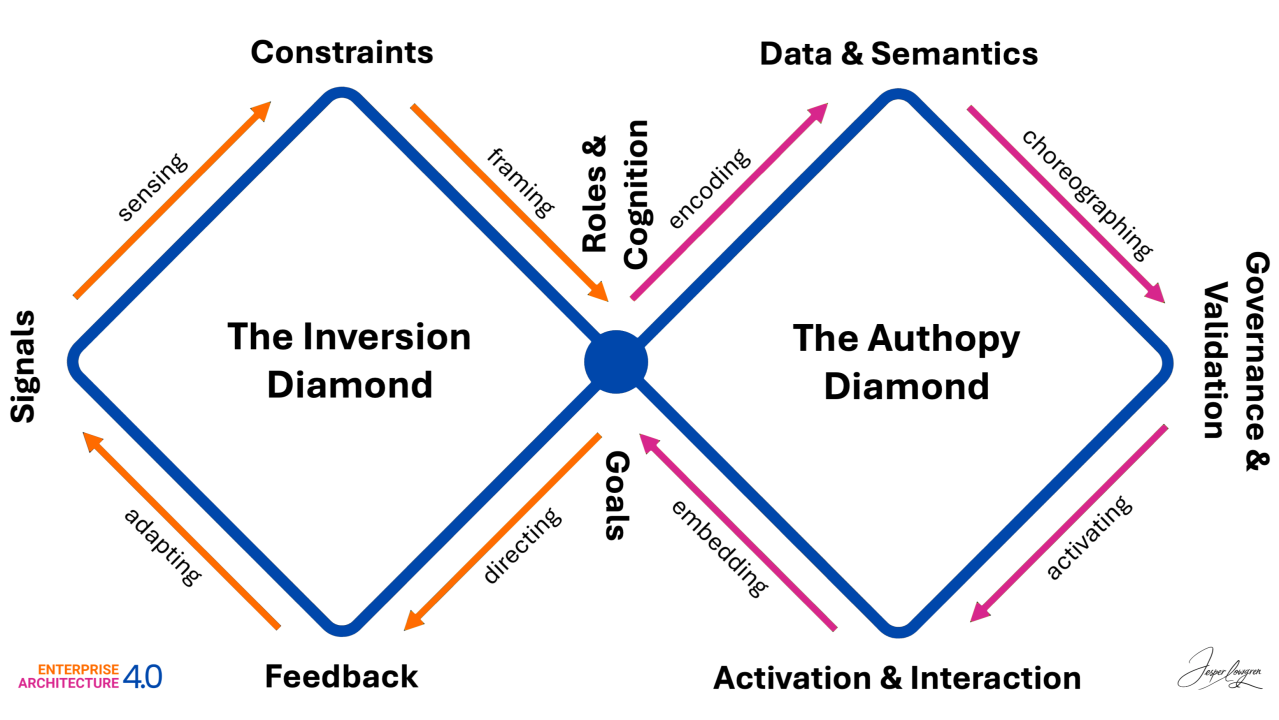

By overlaying these two diamonds, one horizontal and one vertical, you are creating a 3D Navigation System for a value stream.

- Leavitt's Diamond (Technology, Tasks, Structure, People) is the Engine.

Perspective: It focuses on Efficiency.

The Dashboard: This measures how fast we are moving and how much force we are applying. It is the "Horizontal" axis of execution.

- Parsons' AGIL (Adaptation, Goal Attainment, Integration, Latency) is the Compass.

Perspective: It focuses on Effectiveness and survival within an environment.

The Dashboard: This tells us where we are in the landscape and why we are going there. It is the "Vertical" axis of ideology and purpose.

🎭

You can have all the "Power and Speed" (Leavitt) in the world, but if your "Location and Directions" (Parsons) are wrong, you are just accelerating toward a cliff.

When Leavitt is set horizontally and Parsons vertically, the area where they overlap creates a third emergent diamond at the center: the Value Stream Nexus.

By reaching back to the 1950s and 60s, it is saying that our modern "DevOps" or "Digital Transformation" problems aren't actually new.

They are the same friction points between social systems and technical systems that Parsons and Leavitt identified decades ago.

🤔

The problem is that the theory does not unfold linearly, so practices supported by theory are problematic until the theory is closed.

This chapter starts these dashboards but leaves them "incomplete" because the intersection has not happened yet.

We can see the Leavitt components, but the "New Diamond" only appears when you move into the later chapters and drop the Parsons framework on top of it.

This is itself a halfway point for the human dialectic that is required to make the technology meaningful.

⚒ RN-1.1.4 Progress

done and currently working on:

- 2012 week:44

- Moved the legal references list to the new inventory page.

- Added possible mismatches in the value stream with a BISL reference demand supply.

- 2019 week:48

- Page converted, extended with all lean and value stream ideas.

- Besides the value stream and EDWH 3.0 approach, links are added to the building block patterns SDLC and Meta.

- The technical improvements external on the market are the options for internal improvements.

- 2025 week 49

- Start to rebuild these pages as a split off of the Serve devops.

- There was too much content and no way to consider what should come, resulting in leaving it open at the serve devops page.

- When the split-off happened at the shape design, the door opened to see how to connect fractals.

- Old content to categorize, evaluate and relocate: choosing three pages inherited at this location, other pages to archive.

- 2025 week 50,51, ...

- Extensive reflections on DTF using ChatGPT, with surprising answers to reflect on.

- A different perspective in using DTF than for persons: using text artifacts.

- Two visions on the connections: one of DTF and the other of Zarf Jabes Cynefin.

- 2026 week 1,2,3, ...

- Chapters RN-2.1 to RN-2.6 draft finished, a full range of evolution from cognitive grammar to boundary governance.

- The first three set the methods for observation.

- The next three are details for governance, the issues of failing EA and the structural split between operations and administration.

👓 Highly related to this is information processing mindset, Jabes Jabsa Zarf how it started:

- I-Jabes The technological idea of knowledge management that is bothering me.

The topics that are unique on this page RN-1

These focus more on technology-related topics.

👉🏾 The challenge in ICT of applying useful practices in ontologies and taxonomies.

There is no well-defined history in ontologies and taxonomies.

If there were well-known generic taxonomies:

- A standard naming convention for elements is in place

- The standard naming convention for elements supports:

- a wide possibility in change (velocity)

- a wide possibility in variety (volume)

- easy exchange in technology (vitality)

👉🏾 The maturity difference for OT and IT in safety.

The bold claim is embedded by "security by design".

The reasoning:

- OT: "Security by design" is included in OT from design to implementation. Security measures are taken beforehand.

Of course, things sometimes go wrong, and action is taken to address them.

The mindset: preventing fires

- IT: "Security by design" is an illusion in IT; it's not a standard part of the design.

They try to make things presentable afterward with tools, caught up in the buzz and hypes of published new threats.

Penetration tests are the guiding principle for the implementation result.

What prevails is doing security after the fact to achieve a point of something that's considered workable.

The mindset: putting out fires.

What is old-fashioned and should be replaced by something more modern?

- Old-fashioned: fighting fires, putting them out.

- Modern: preventing fires.

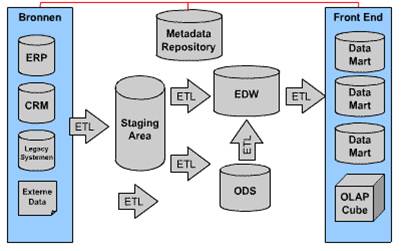

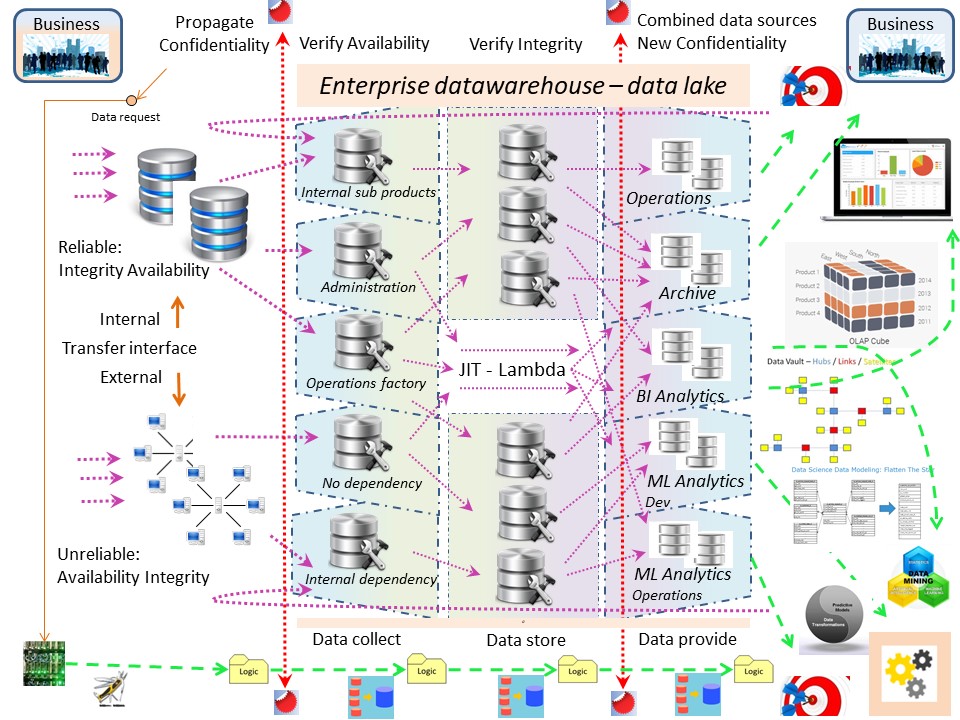

👉🏾 A generalised view how the information flows.

In the intangible setting of information flows there are different flows: one of a product/service and another of the descriptive knowledge that enables that product/service.

The property of being intangible is the root cause of a lot of confusion:

- The perspective of information that is similar to OT is the product/service flow.

- The perspective of information that describes the product/service flow is the IM (information management) flow.

- Defining the domains OT and IM and using layers enables defining segments in full detail, each with a defined and isolated risk of damage.

👉🏾 The question of optimizing the functioning or optimizing the functionality.

In the intangible setting of information flows there are the different flows of a product/service and of the descriptive knowledge that enables that product/service.

The property of being intangible is a cause of a lot of confusion:

- Leavitt's Diamond (Technology, Tasks, Structure, People) is the Engine; it focuses on Efficiency.

- Parsons' AGIL (Adaptation, Goal Attainment, Integration, Latency) focuses on Effectiveness and survival within an environment.

- These two competing perspectives must be aligned and coordinated; they are a duality and a dichotomy. Neither can exist without the other, but they easily end up fighting each other.

The topics that are unique on this page RN-2

These are the core topics related to the diagonal counterpart, which deepens the social neural aspects and what is needed for a STEM mindset.

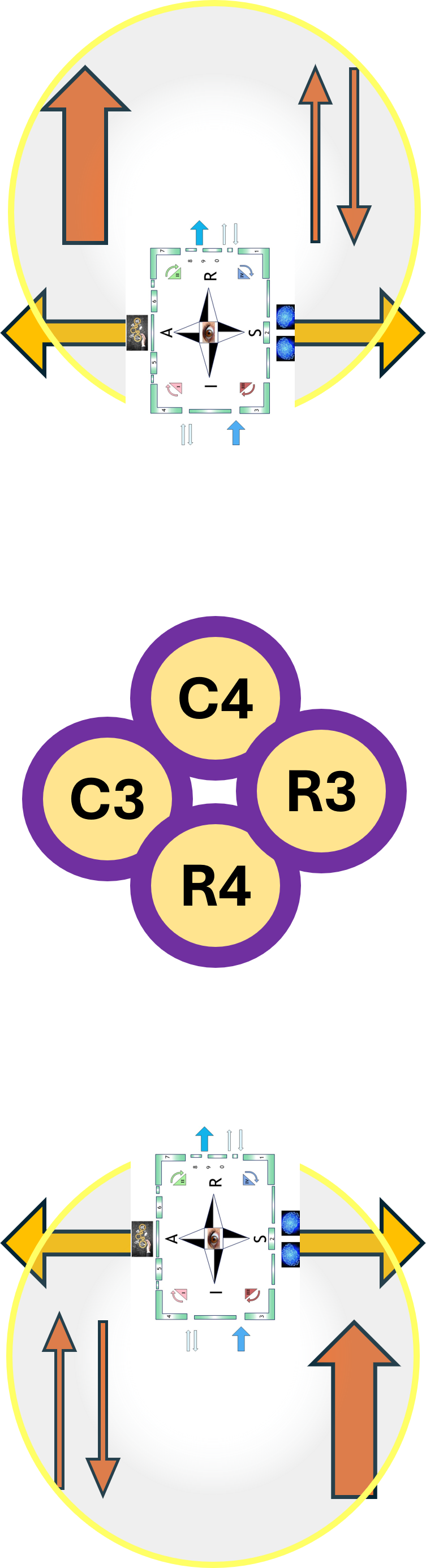

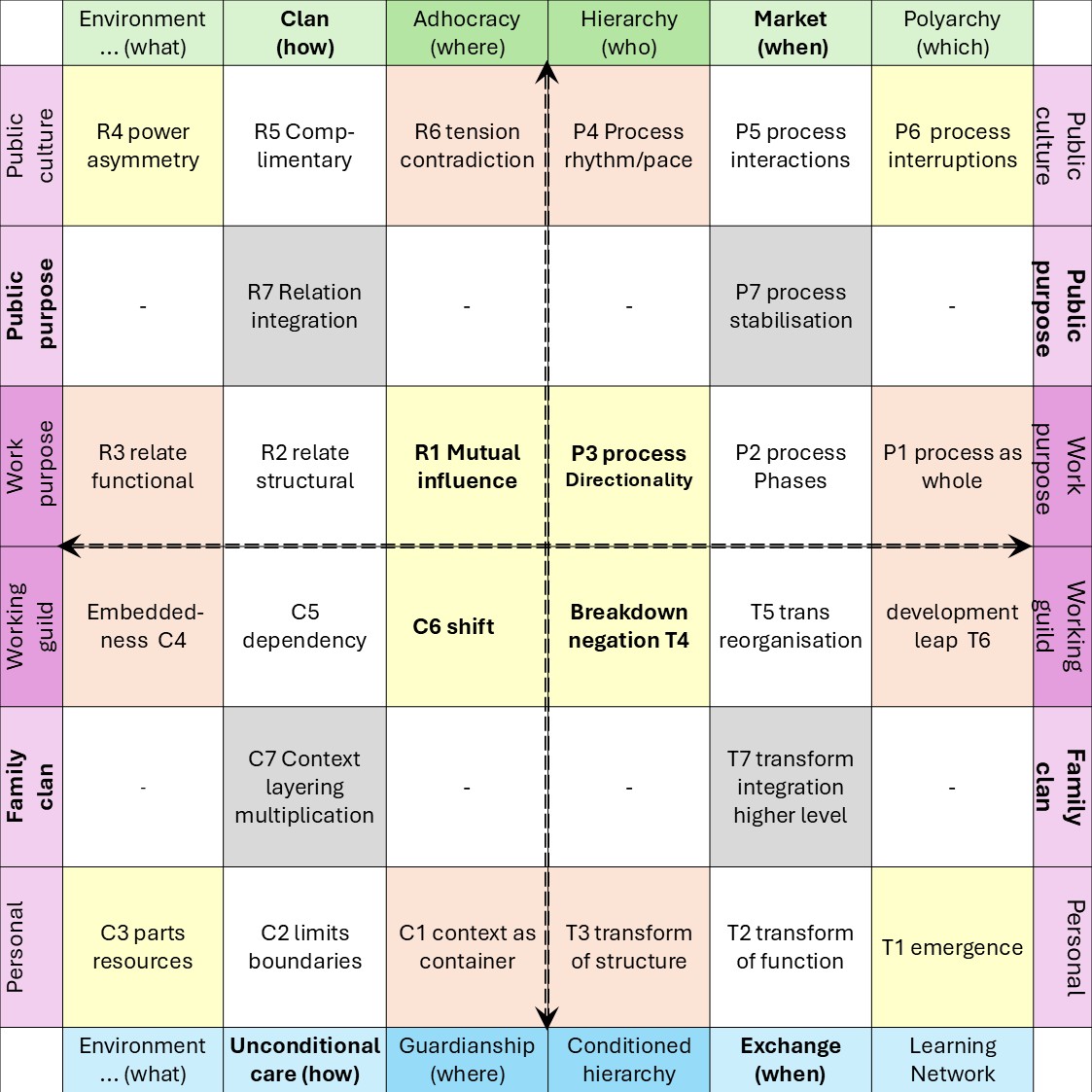

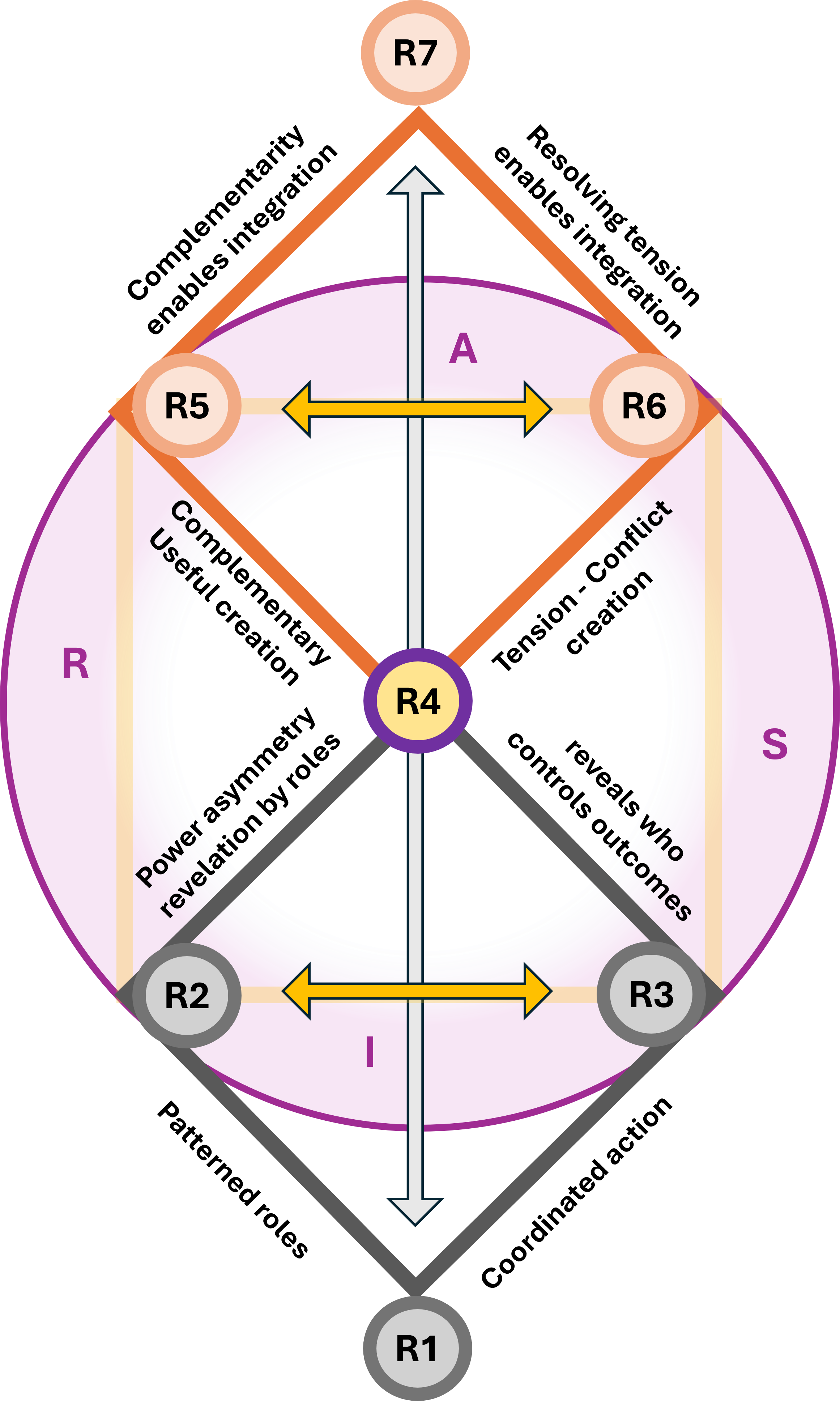

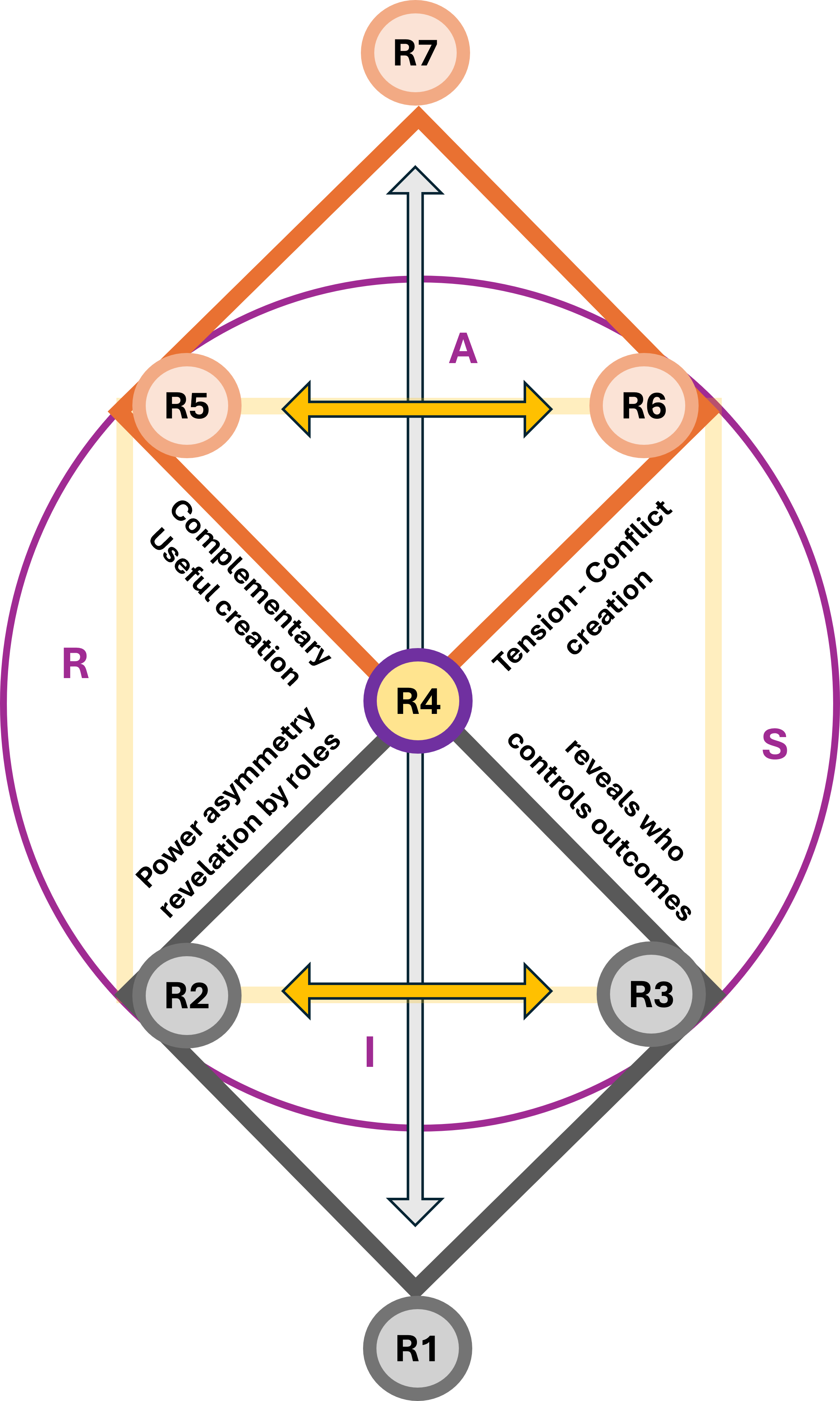

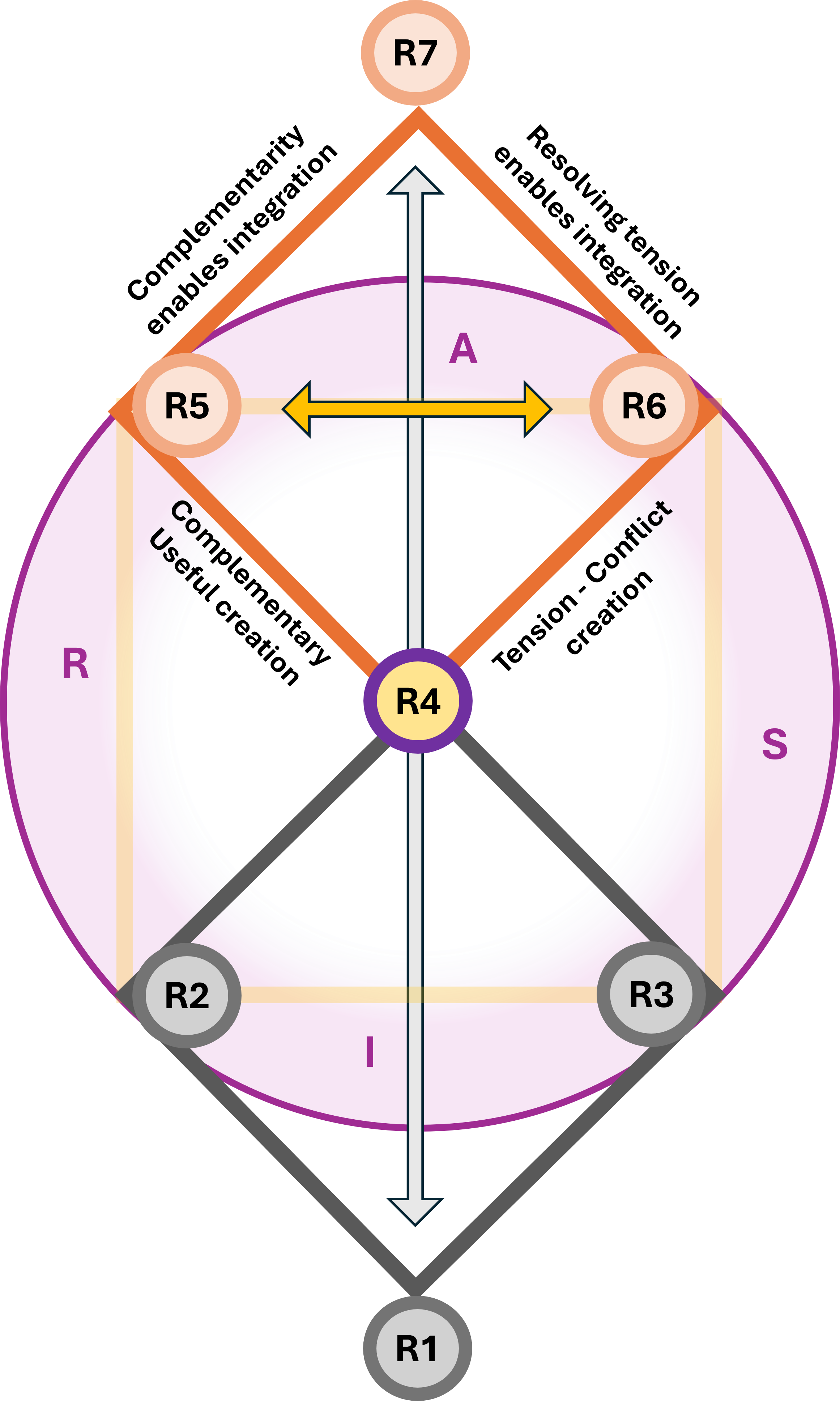

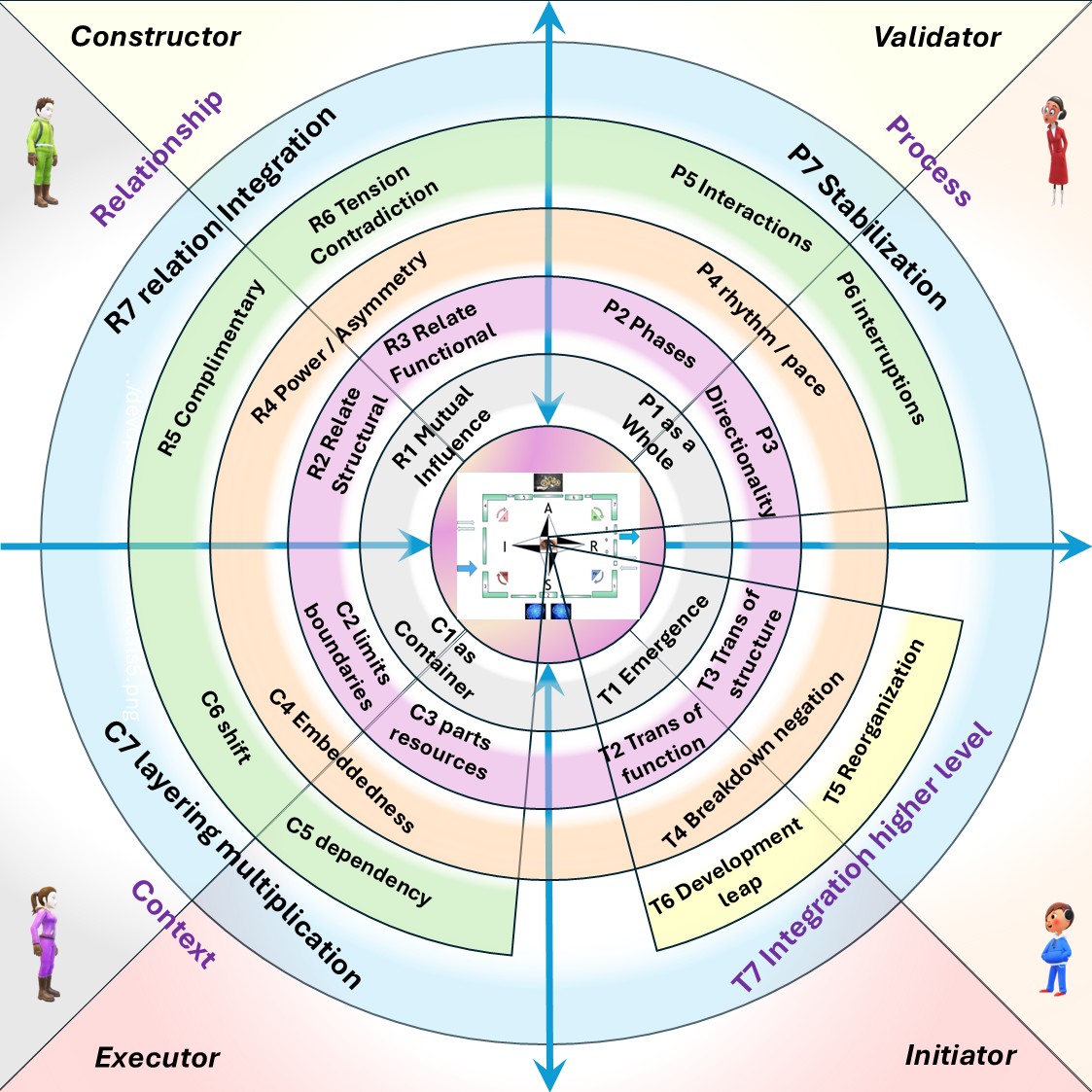

👉🏾 Introducing the use of a full dialectical framework that can be used in evaluating frameworks and situations.

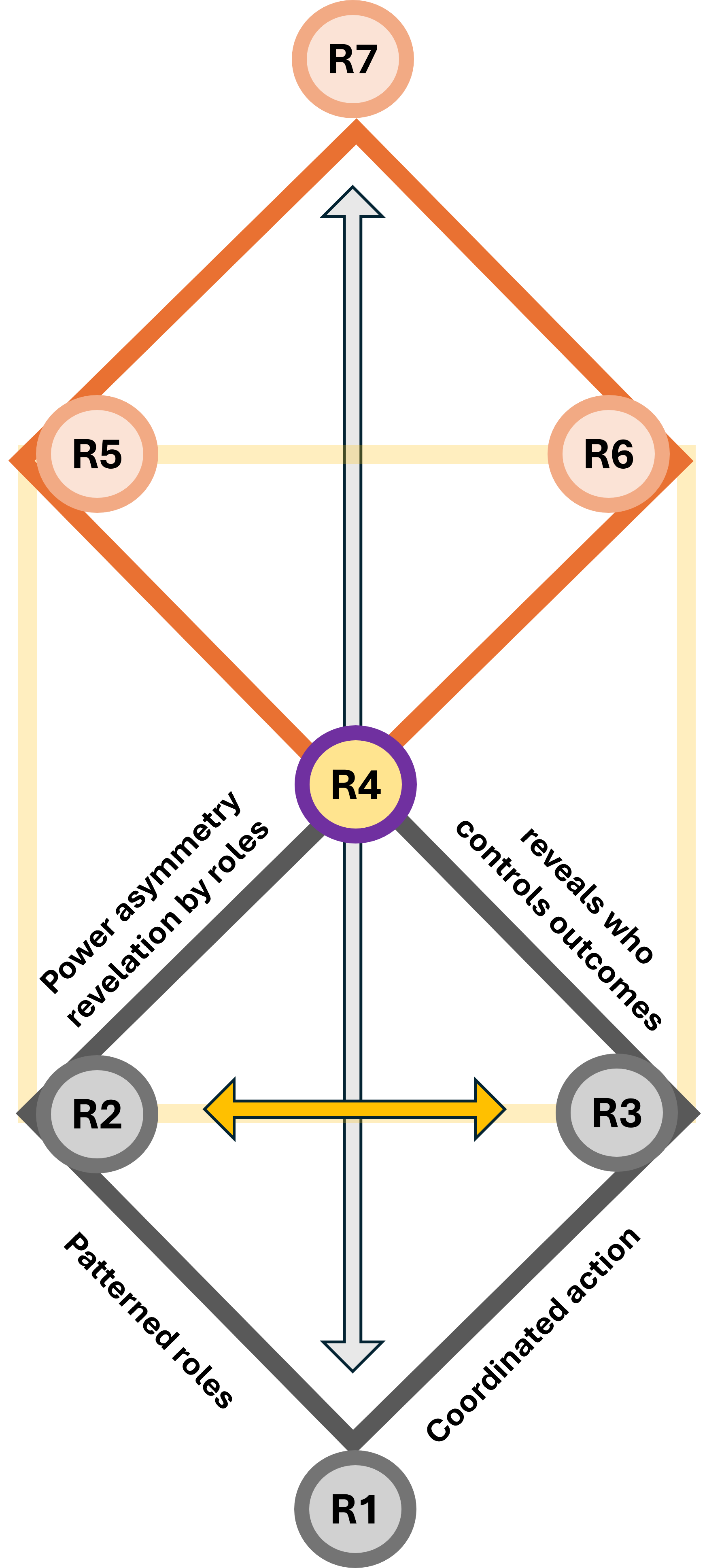

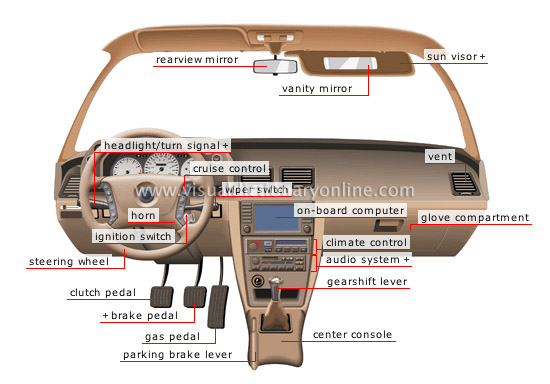

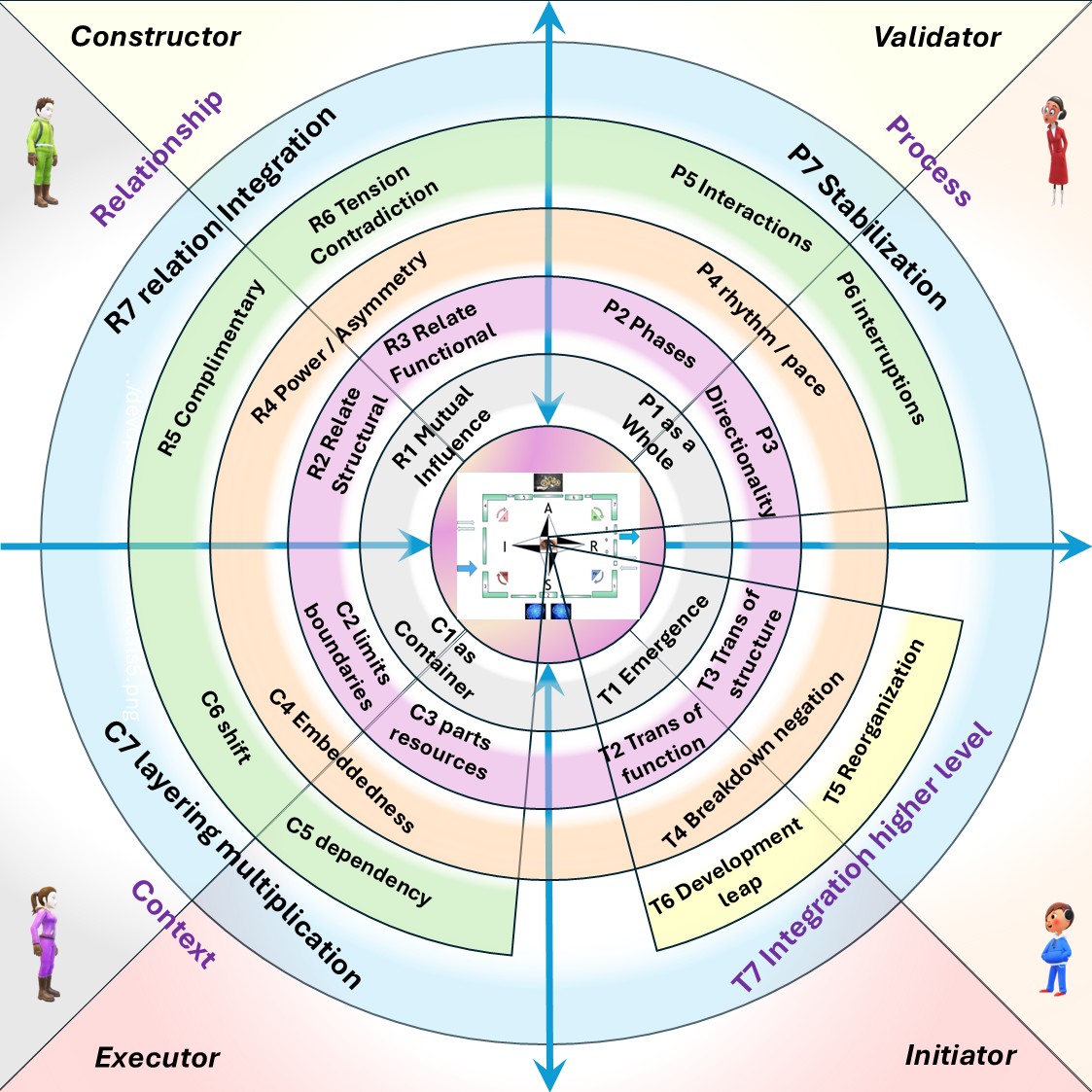

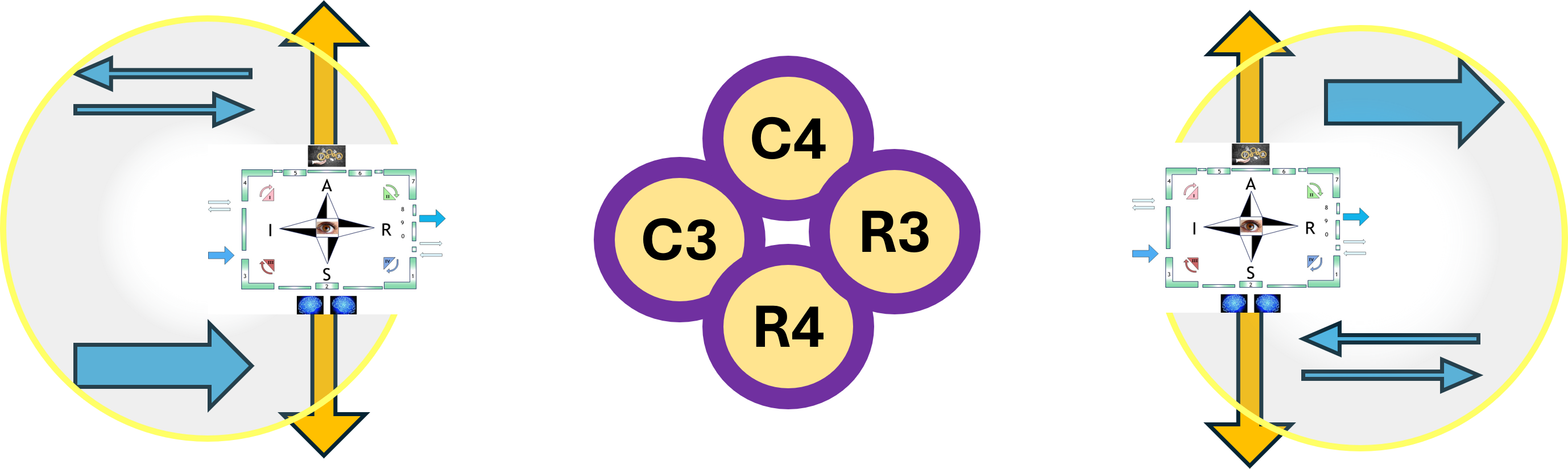

- The DTF, dialectical thought forms, consist of 4 main categories, each with seven sub-categories.

- The 4 main DTF categories: Relationship (R), Process (P), Context (C), Transformation (T).

- Alignment of DTF to others: Zachman, VSM, Cynefin, extended to many more.

- The reasoning behind about 7 categories for becoming dialectically closed.

O. Laske, educated at the Frankfurt School, created DTF and more, using that transitional language for change in time (learning).

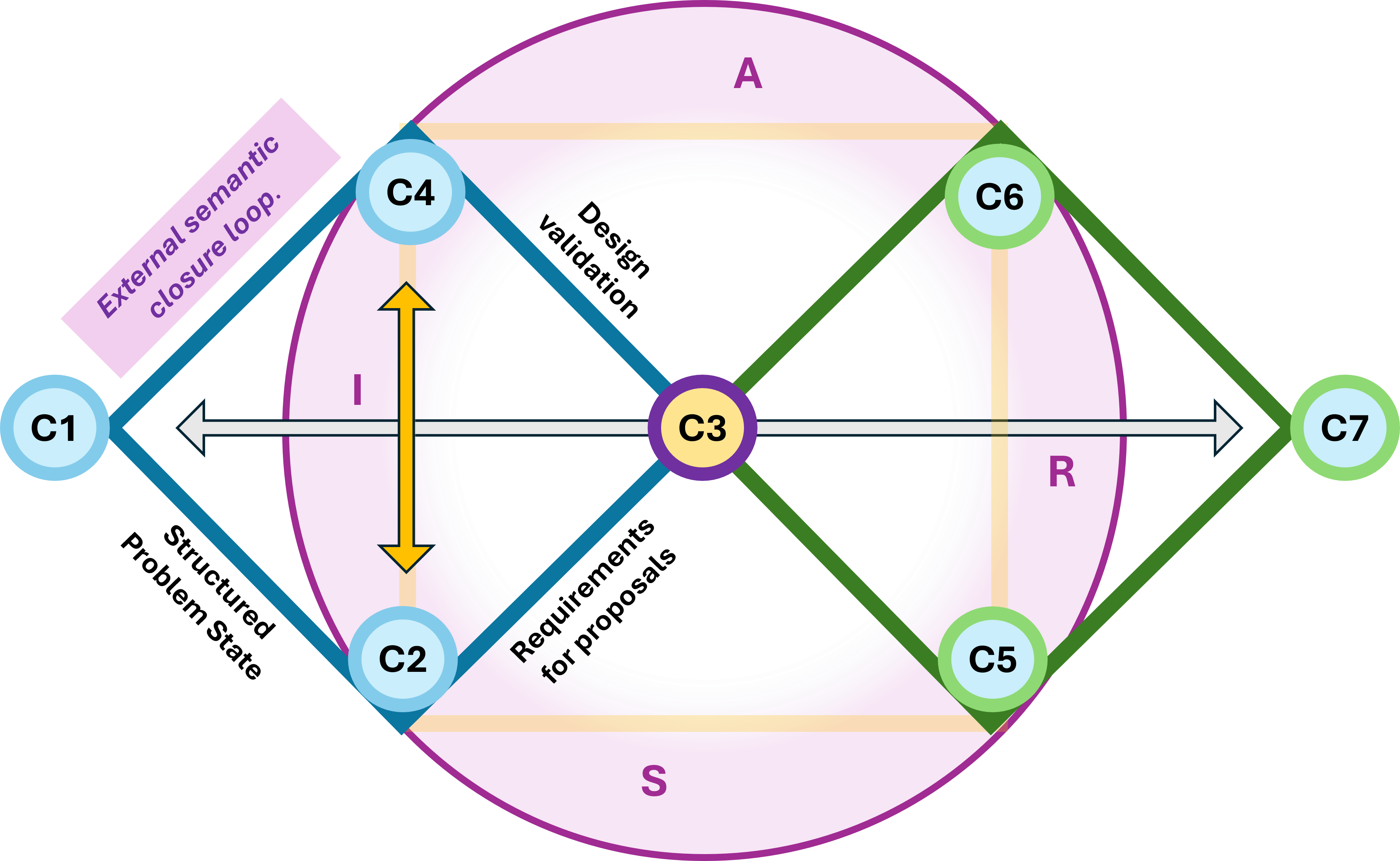

👉🏾 The workshop proposal is designed to solve the problems seen by observation in a social intervention: the Workshop.

- There is a timeless structural conflict between social goals and technical tasks resulting in seen problems.

- The Goal: To move from a "flat" view of the process to a "thick" understanding of why things are stuck.

Once the workshop has surfaced the friction, you need a place to put it.

This is the Problem State proposal.

- It provides the Reference Points (R) for the "Broken" states. If the workshop is the "X-ray," the Problem State is the "Diagnosis."

- The Workshop provides the raw data of human frustration and technical lag, and the Problem State Proposal provides the semantic structure to categorize that data.

The topics that are unique on this page RN-3

It focuses more on complex relationships than the RN-2 topics.

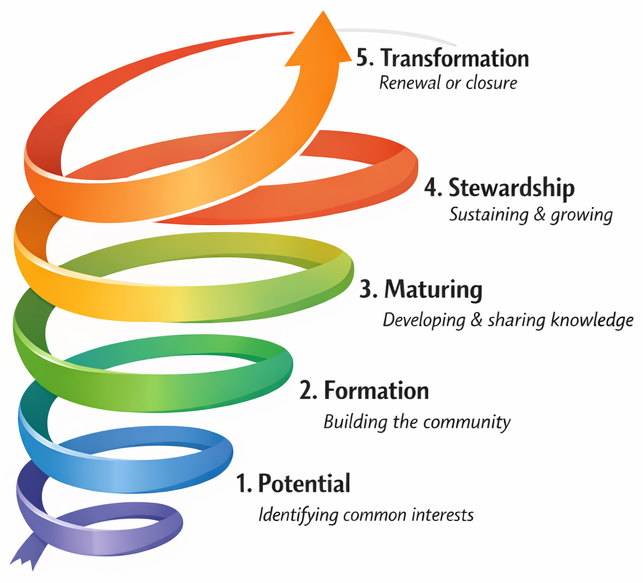

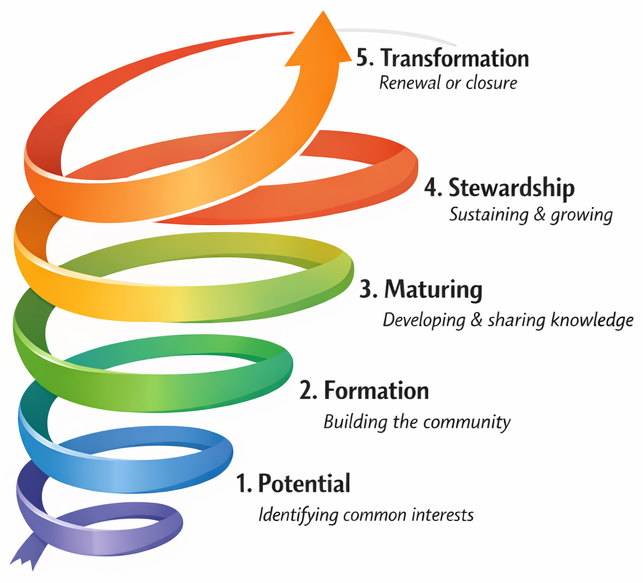

👉🏾 Using a time dimension as transformation in knowledge, learning and growing.

- The learning paths have a dimension of time, which is explicit or hidden.

- Adding time, the knowledge itself is changing: knowledge from the past is not the same as that in the now or the future. When only looking back at the past it collapses; it answers "why".

- When adding the future, uncertainty and unpredictability are added.

The "why" changes into a "which", and a "worth" for "when" is needed.

Knowledge evolves by options, choices and events.

The result will be two knowledge lines supporting for changes in time.

One for what was known when starting to work on it and one that is the becoming one in the future.

Is that idea new or something old done before?

- The original diamond of H. Leavitt did have four topics.

Structure was lost, but that one could be seen as decisions causing change in time.

- Talcott Parsons did use AGIL: Adaptation, Goal Attainment, Integration and Latency.

Latency can be seen as change in time.

- O. Laske, educated at the Frankfurt School, does use that transitional language for change in time (learning).

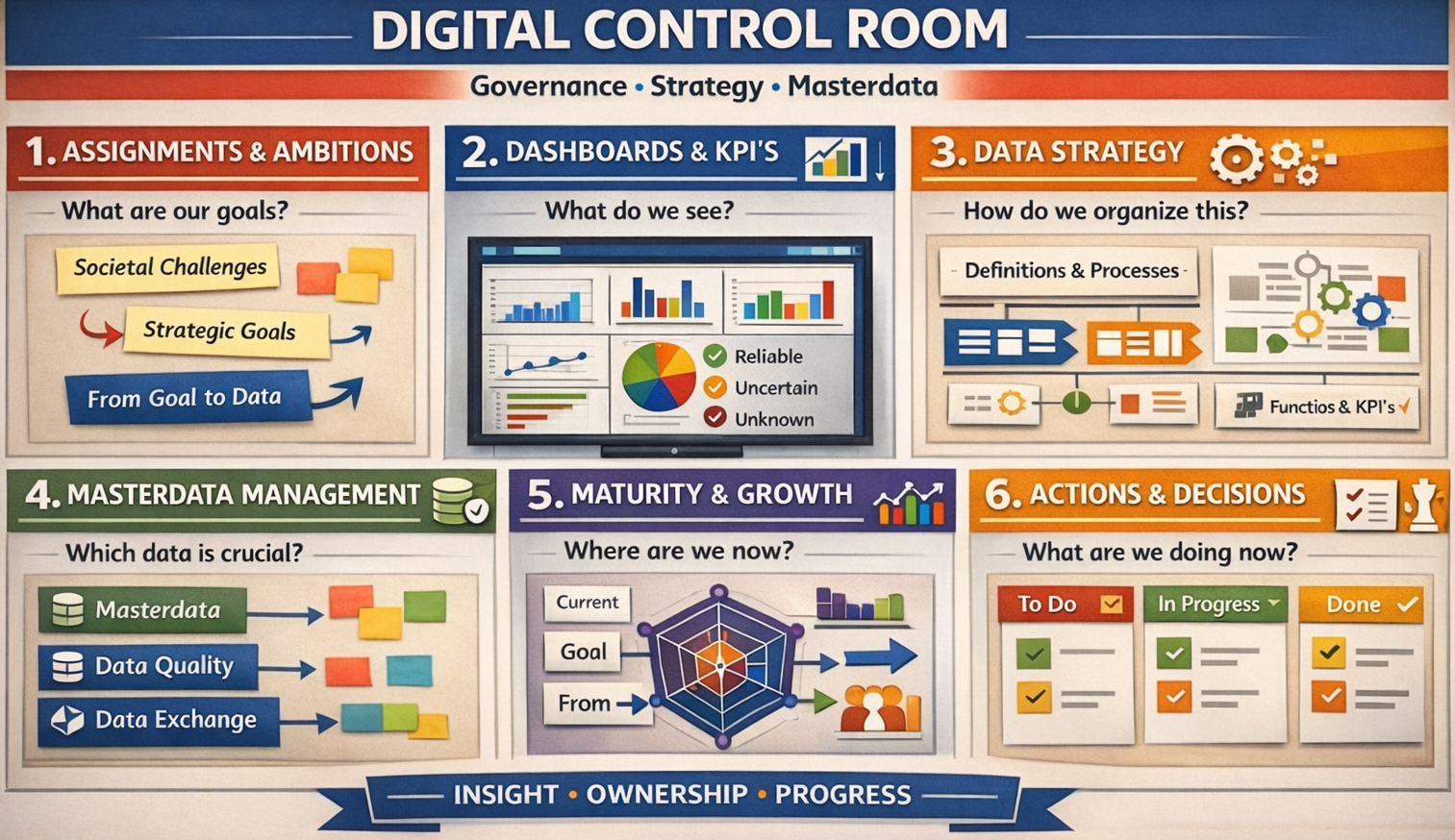

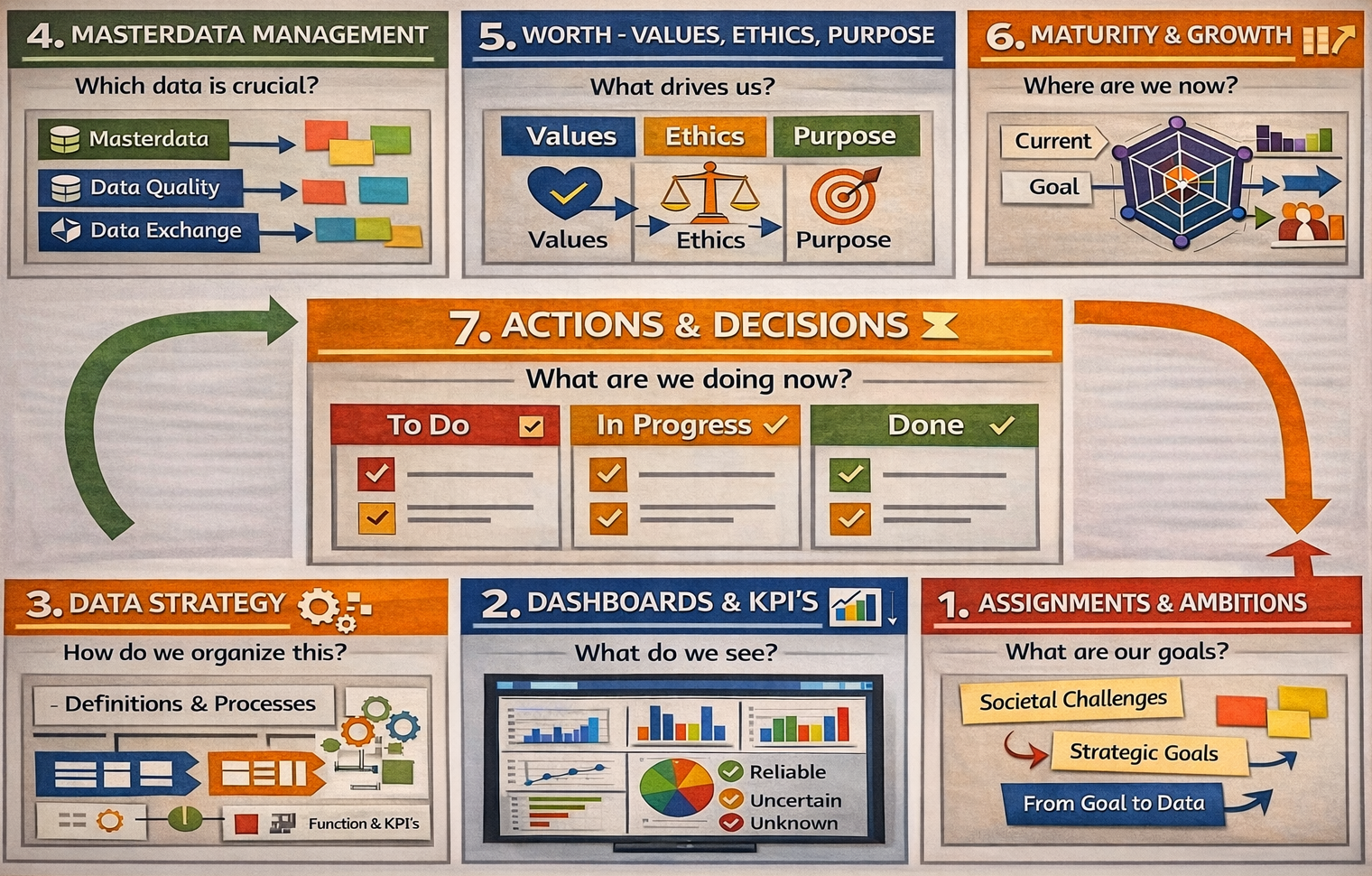

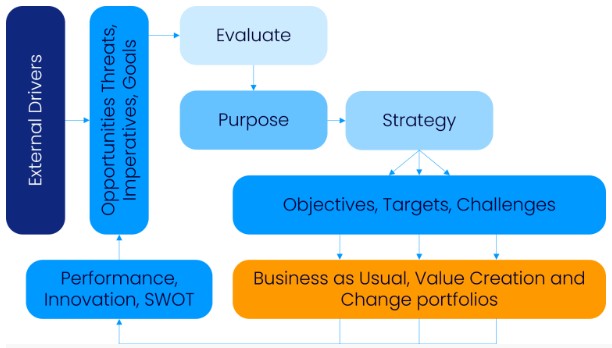

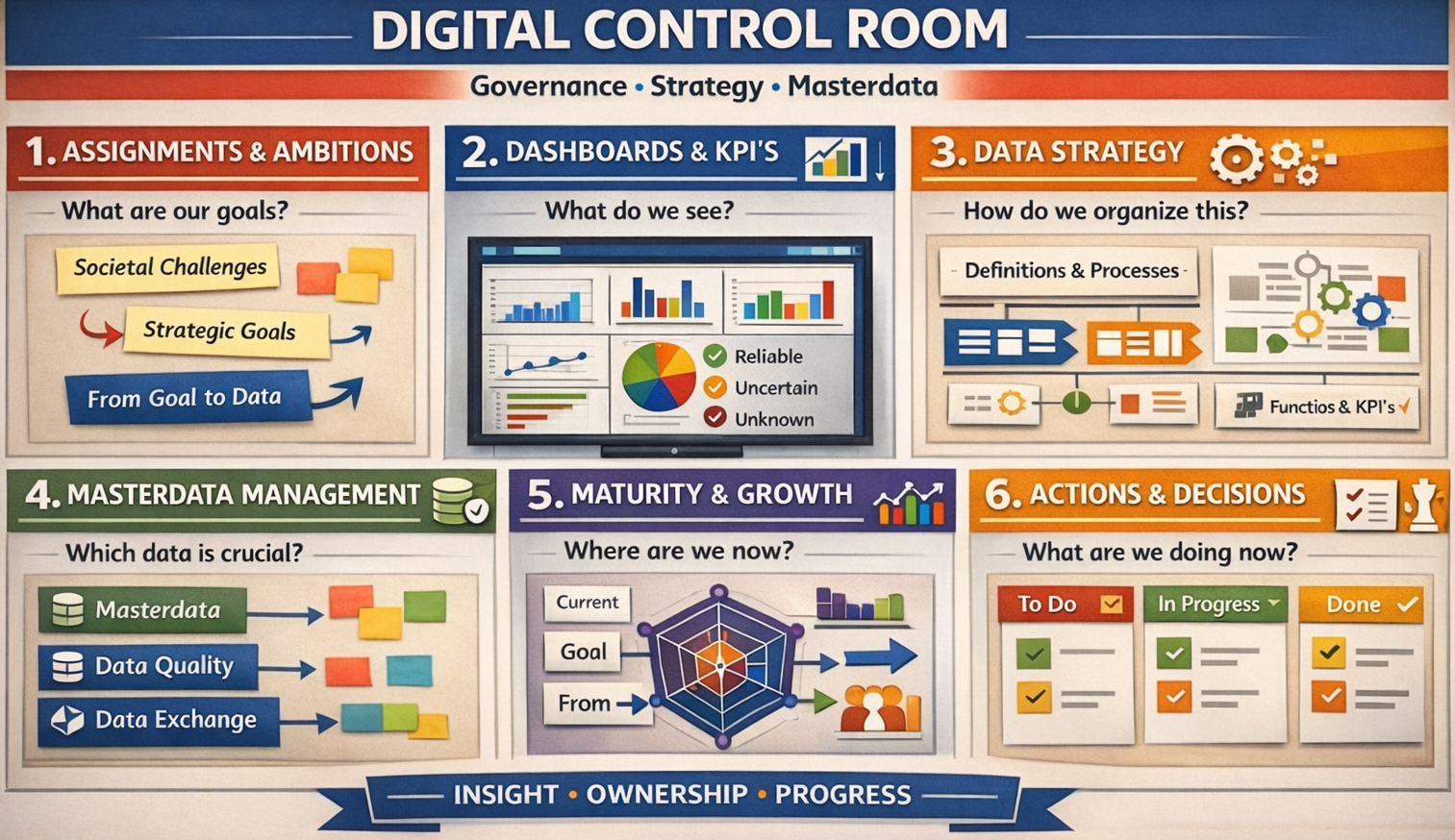

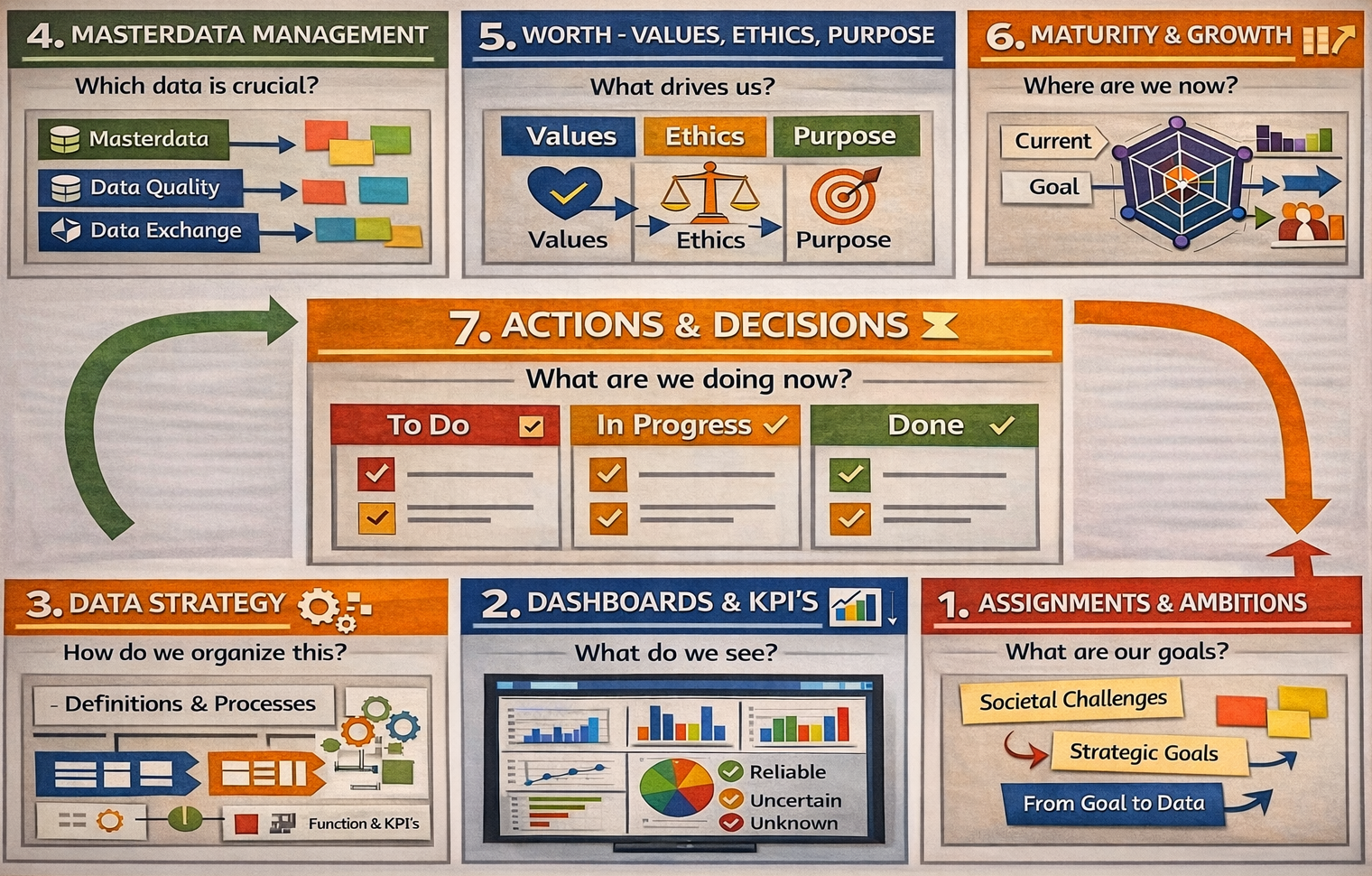

RN-1.2 The quest in understanding the path going to somewhere

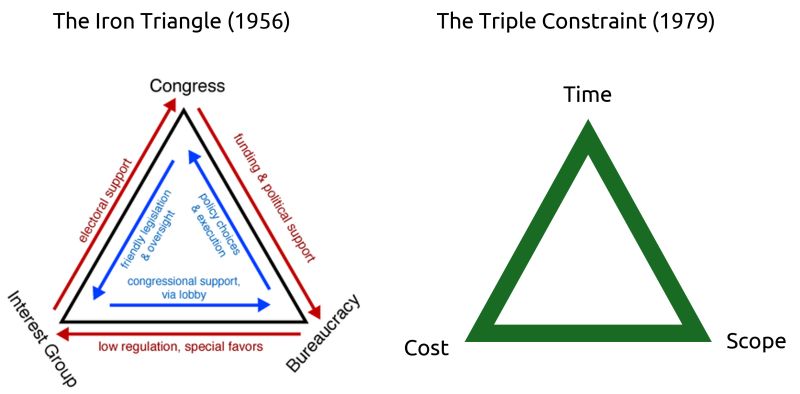

The classification for management, executive, information was based on technical approaches for:

- Creating complex dashboards with a lot of technology details in the assumption they would result in better decisions

- The experience of what is known at operational execution being ignored, assuming the dashboards are the truth.

- The assumption that top-down C&C (command & control) is the ultimate and only valid target operating model.

🚧 Breaking the assumption that complex dashboards are the truth.

⟲ RN-1.2.1 Understanding systems by Concepts - Ontology - taxonomies

Understanding Concepts - Ontology - Taxonomies

The anatomy of an ontology (W.H.Inmon J.Talisman 2026)

People can say or write anything they want. There simply is no rhythm to the creation and collection of text.

Text can come in many forms, voice, print, spreadsheets, email and so forth.

In a word, reading text and extracting meaningful data from text was and is a daunting task.

There is a technology/discipline that greatly abets the challenges of extracting meaningful data from text. That technology/discipline is called an ontology.

⏳

What in the world is an ontology?

Note that the word text is used for any form of communication.

- An ontology, characterized in simplified form:

- carefully vetted vocabulary designed to unravel and classify a body of raw text.

- containing what can be called a series or collections of taxonomies.

⌛

What exactly is a taxonomy?

- A taxonomy is a vocabulary of related words designed to classify something:

- a tangible object, a car, an airplane,

- an intangible object, a concept, a discipline,

- a football team, a method of teaching swimming and so forth

The only requirement is that classifications are sensible in the intended context.

- A taxonomy has boundaries to the ontology it resides in.

- An ontology contains only taxonomies that relate to the ontology focus.

- The ontology focus has an influence on the contents of the taxonomies it hosts.

- A taxonomy has multiple levels of classification:

- It has one unifying category to draw the elements together.

- It may have multiple levels of categories contained inside the taxonomy.

- The taxonomy can show relationships between classifications and words:

- Each classified word has the same kind of relationship to its classification as all of the other words in that classification.

- Taxonomies inside an ontology may or may not have relationships to each other.
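The structure described above (an ontology hosting taxonomies, each with one unifying category and nested levels) can be sketched as a small data structure. The class names `Category` and `Ontology`, the focus "transport" and the example words are hypothetical and only serve to illustrate the idea; they are not taken from the cited work.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    """One level in a taxonomy: a name, its words, and nested sub-categories."""
    name: str
    terms: set = field(default_factory=set)
    children: list = field(default_factory=list)

    def classify(self, word, path=()):
        """Return the category path that classifies the word, or None."""
        path = path + (self.name,)
        if word in self.terms:
            return path
        for child in self.children:
            found = child.classify(word, path)
            if found:
                return found
        return None


@dataclass
class Ontology:
    """A focus plus the collection of taxonomies that relate to that focus."""
    focus: str
    taxonomies: list = field(default_factory=list)

    def classify(self, word):
        """Collect the classification paths from every hosted taxonomy."""
        hits = []
        for taxonomy in self.taxonomies:
            path = taxonomy.classify(word)
            if path:
                hits.append(path)
        return hits


# Hypothetical taxonomy: "vehicle" is the unifying category.
vehicles = Category("vehicle", children=[
    Category("car", terms={"sedan", "hatchback"}),
    Category("airplane", terms={"jet", "glider"}),
])
ontology = Ontology(focus="transport", taxonomies=[vehicles])

jet_paths = ontology.classify("jet")
boat_paths = ontology.classify("boat")   # not in the vocabulary: no hits
```

A word outside the vetted vocabulary returns no classification, which reflects that the ontology only unravels the text it was designed for.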

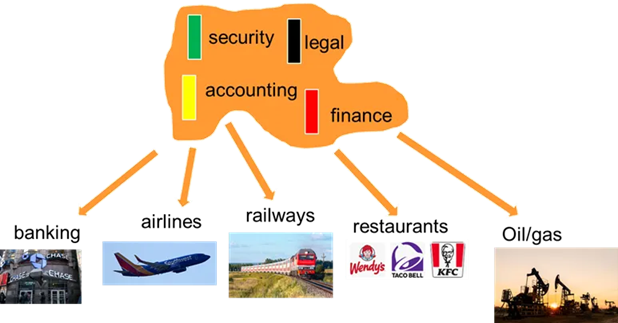

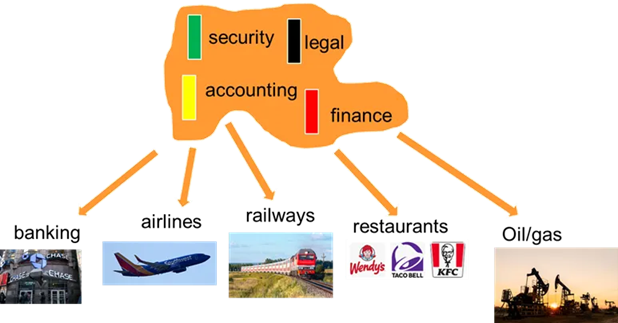

- A taxonomy can be generic, based on a generic discipline or on settled branches.

- A generic taxonomy is a taxonomy that can be used in many places.

- From generic disciplines they can exist independently. Examples:

- accounting, finance, advisories, joint cooperations

- security, safety, procurement, legal

- For settled branches there are common reusable taxonomies. Examples:

- banking (credit card, savings and loans), insurance, airlines,

- railways, oil/gas, electricity, energy,

- restaurants, supermarkets, shops

- Defining and maintaining the ontology and taxonomies.

The biggest challenge in building and using a taxonomy lies in the first iteration of the building of the taxonomy.

- Ontologies and taxonomies will change over time as the enterprise or organisation changes.

- The "good" is that most enterprises, organisations only change marginally over time.

Some taxonomies are generic across multiple ontologies, these are generic disciplines.

👉🏾 Note security safety is assumed to be a generic discipline.

⚠️🚧 Security safety should be a generic discipline using information technology but that is a failing situation.

Using the word conceptual or ontology taxonomy business model

Using different words, such as conceptual model, ontology and taxonomy, can be confusing.

Conceptual -ontology (LI: R.Ross 2025).

In 1993, Gruber originally defined the notion of an ontology as an "explicit specification of a conceptualization". In 1997, Borst defined an ontology as a "formal specification of a shared conceptualization".

⏳

Asking for an analysis of ontology versus concepts gave the following.

In the methodology of Ronald Ross, an ontology (primarily expressed as a concept model) is a strategic framework designed to ensure shared understanding and business clarity across an organization.

- Core Concepts & Functioning : Concept Model as Ontology: Ross defines an ontology as a "shared conceptualization".

For him, the core component of a Business Knowledge Blueprint is a concept model, which identifies the essential "things" (nouns) and their relationships (verbs) that matter to a specific community.

- Business Rules Integration: Ross is widely known as the "Father of Business Rules".

In his framework, an ontology provides the structural foundation (vocabulary) upon which business rules (behavioral and definitional) are built.

You cannot have clear rules without precisely defined concepts.

- Eliminating Semantic Silos: Organizations often suffer from functional silos where different departments use the same terms to mean different things.

Ross's strategy replaces these "semantic silos" with a "Knowledge Commons", a unified business vocabulary that serves as a common ground for all stakeholders.

- Application-Centric vs. Knowledge-Centric: A key part of his development strategy is moving away from application-centric development (where data is designed for a single system) toward a knowledge-centric approach.

This ensures that data is "potent," reusable, and manageable across different domains.

⌛

The work behind it, and achieving acceptance for its usage, is the development strategy.

- Define Terms Precisely: Use the Business Knowledge Blueprint to establish unambiguous definitions based on business logic, not IT requirements.

- Map Relationships: Structure concepts according to their inherent business relations to create a Concept Model.

- Bridge the Gap: Use these blueprints as a "front end" for technical system design, ensuring that IT implementations (databases, AI, etc.) speak the "language of the business".

The hard work for an ontology, taxonomies and business model

Continuation of analysing "The anatomy of an ontology (W.H.Inmon J.Talisman 2026)".

Just defining what it is about is not enough; it should be usable in practice.

- The work behind the words for an ontology :

- represents decisions, thousands of small, careful decisions about how concepts relate to one another, where boundaries should be drawn, and what matters enough to include.

- is a human activity, not a mechanical one. The ontology builder must understand the words of a domain AND the conceptual architecture underneath those words, the way practitioners in that domain actually think about their work.

- The work behind Scope and granularity:

- The most effective ontologies are purpose-built. They serve a specific need within a specific context.

The ontology builder must resist the temptation to capture everything and instead focus on capturing what matters for the task at hand.

- Determining the scope or coverage of a taxonomy and ontology are both decisions that must be analyzed and decided upon by humans, as ultimately, these decisions construct the profile of the organization, workflows and the things human workers care about and need, to be successful.

- If the need is to route documents to the correct department, broad categories may suffice.

If you need to identify patterns in outcomes, fine-grained distinctions become essential.

- The work behind relationships beyond hierarchy:

- Associative relationships allow the ontology to represent the rich web of connections that characterize any complex domain.

These are not taxonomy relationships.

- An ontology is never truly finished, language evolves, domains change, new concepts emerge while old ones fade from use.

- The ontology must have a steward, someone responsible for keeping it aligned with the reality it represents.

- The ontologist is not a technician cataloging words.

The ontology builder is making choices about what matters: choices that will ripple through every analysis that depends on the ontology upstream, downstream and in between.

🤔 To be short: it is a never-ending story of activity for continuous improvement.

⟲ RN-1.2.2 Information security taxonomy and ontology relationship

The state of the taxonomy for information security

When assuming security would be part of ontologies by a discipline having a generic taxonomy, there should be references for those.

However, what is found and seen are practices without any relationship to ontologies.

⏳

It is a signal of a fundamental gap causing a lot of issues.

Information security (infosec) is the practice of protecting information by mitigating information risks. It is part of information risk management.

There are many specialist roles - tasks - in Information Security including:

- securing networks and allied infrastructure,

- securing applications and databases,

- security testing,

- business continuity planning,

- electronic record discovery, and digital forensics.

- information systems auditing,

To standardize this discipline, academics and professionals collaborate to offer guidance, policies, and industry standards on passwords, antivirus software, firewalls, encryption software, legal liability, security awareness and training, and so forth.

This standardization may be further driven by a wide variety of laws and regulations that affect how data is accessed, processed, stored, transferred, and destroyed.

⌛ That is a siloed technological approach, not really a systemic structural one; worse, it just reacts after the fact.

Information Security Attributes: or qualities, i.e.,

- Confidentiality, Integrity and Availability (CIA).

Information Systems are composed of three main portions,

- hardware, software and communications

with the purpose to help identify and apply information security industry standards, as mechanisms of protection and prevention, at three levels or layers:

- physical, personal and organizational.

Essentially, procedures or policies are implemented to tell administrators, users and operators how to use products to ensure information security within the organizations.

The quest for aligning security to the generic ontology.

Given the gap, what would need to change to close the gap in secure information processing?

Building an ontology and taxonomy requires seeing the relevant layers.

The question is what the relevant layers could be; a proposal using 6 levels:

| Abstraction | Classification level | System integration level |
| --- | --- | --- |
| context | ontology-taxonomy | System as Whole |
| concept | organisational | Procedures |
| logical | personal | People, processes |
| physical | physical (segmentation) | hardware, software, communication |
| component | applications (business) | Identity & access - CIA, BIA |
| instance | tools, middleware | Encryption, defending software |
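To make the proposed 6 levels usable as a lookup, the table can be sketched as an ordered data structure. The row contents come from the table above; the names `Layer`, `layer_for` and `layers_below` are hypothetical helpers introduced only for this illustration.

```python
from typing import NamedTuple


class Layer(NamedTuple):
    abstraction: str
    classification: str
    integration: str


# Ordered from most abstract to most concrete, as in the table.
LAYERS = (
    Layer("context", "ontology-taxonomy", "System as Whole"),
    Layer("concept", "organisational", "Procedures"),
    Layer("logical", "personal", "People, processes"),
    Layer("physical", "physical (segmentation)", "hardware, software, communication"),
    Layer("component", "applications (business)", "Identity & access - CIA, BIA"),
    Layer("instance", "tools, middleware", "Encryption, defending software"),
)


def layer_for(abstraction):
    """Look up one layer by its abstraction name."""
    for layer in LAYERS:
        if layer.abstraction == abstraction:
            return layer
    raise KeyError(abstraction)


def layers_below(abstraction):
    """Return the more concrete layers under the given abstraction."""
    index = LAYERS.index(layer_for(abstraction))
    return LAYERS[index + 1:]


physical = layer_for("physical")
below_component = [layer.abstraction for layer in layers_below("component")]
```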

An important difference for procedures and processes.

👉🏾 procedures are activities to overcome issues.

👉🏾 processes are standards that hopefully prevent issues.

- No single point of failure.

Distribute dependencies (cloud, identity, backup).

- Data is owned, the application is replaceable.

Separate data from the software layer.

- Data must always be exportable.

Open formats. Complete. Instantly available.

- Recover outside of your primary provider.

Backups and recovery are configured independently.

Business continuity planning (BCM) is a related part of the safety taxonomy.

An organization's resistance to failure is "the ability ... to withstand changes in its environment and still function".

Often called resilience, it is a capability that enables organizations to either endure environmental changes without having to permanently adapt, or the organization is forced to adapt a new way of working that better suits the new environmental conditions.

Several triads of components.

Business Continuity Planning Practice

Loss of assets can prevent an organisation from functioning. Risk analysis determines what level of continuity, within what time and at what cost, is required and what kind of loss is acceptable.

The datacentre was relocated once telecommunications capacity increased. A hot standby with the same information on real-time duplicated storage became possible.

Loss of network connections.

Loss of control over critical information.

⚠ The cost argument with this new option resulted in ignoring resilience against other types of disasters and ignoring archiving compliance requirements.

⚠ With a distributed approach of datacentres the loss of a single datacentre is not a valid scenario anymore. With services spread over locations, the isolated DR test of one location failing does not have the value it had before.

Eliminating single points of failure in a backup (restore) strategy.

Only the proof of a successful recovery is a valid checkpoint.

3-2-1 backup rules: the 3-2-1 backup strategy is made up of three rules:

- Three copies of data: this includes the original data and at least two backups.

- Two different storage types: both copies of the backed-up data should be kept on two separate storage types to minimize the chance of failure. Storage types could include an internal hard drive, external hard drive, removable storage drive or cloud backup environment.

- One copy offsite: at least one data copy should be stored in an offsite or remote location to ensure that natural or geographical disasters cannot affect all data copies.
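The three rules can be checked mechanically against an inventory of copies. The following is a minimal sketch under stated assumptions: the `Copy` record, its field names and the `check_3_2_1` helper are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of a data set; the original counts as a copy (hypothetical record)."""
    name: str
    storage_type: str        # e.g. "internal-disk", "external-disk", "cloud"
    offsite: bool
    is_original: bool = False


def check_3_2_1(copies):
    """Return one boolean per rule of the 3-2-1 backup strategy."""
    backups = [c for c in copies if not c.is_original]
    return {
        # Rule 3: the original plus at least two backups.
        "three_copies": len(copies) >= 3,
        # Rule 2: the backups sit on at least two separate storage types.
        "two_storage_types": len({c.storage_type for c in backups}) >= 2,
        # Rule 1: at least one copy is kept offsite.
        "one_offsite": any(c.offsite for c in copies),
    }


inventory = [
    Copy("production", "internal-disk", offsite=False, is_original=True),
    Copy("nightly", "external-disk", offsite=False),
    Copy("weekly", "cloud", offsite=True),
]
result = check_3_2_1(inventory)
```

Passing this check only says the copies exist in the right places. As stated above, only a successful recovery proves that the strategy works.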

Loss of information, compromised software tools, compromised database storage: this is the new scenario now that everything has become accessible over communication networks.

Simply losing control to hackers, being held to ransom, or having information leaked externally is far more likely and more common than the earlier disaster scenarios.

Not everything can be prevented. Some events are too difficult or costly to prevent. A risk-based evaluation determines how to achieve resilience.

⚠ Loss of data integrity - business.

⚠ Loss of confidentiality - information.

⚠ Robustness failing - single points of failure.

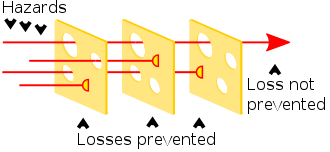

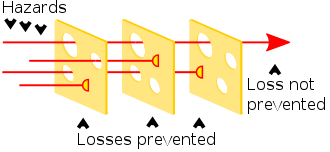

The Swiss cheese model of accident causation is a model used in risk analysis and risk management, including aviation safety, engineering, healthcare, emergency service organizations, and as the principle behind layered security, as used in computer security and defense in depth.

Therefore, in theory, lapses and weaknesses in one defense do not allow a risk to materialize, since other defenses also exist, to prevent a single point of failure.

Although the Swiss cheese model is respected and considered to be a useful method of relating concepts, it has been subject to criticism that it is used too broadly, and without enough other models or support.
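The layered idea can be made concrete with a small calculation. This is a rough sketch, assuming that each defensive layer fails independently of the others, which is a strong simplification and part of why the model is criticised when used on its own. The function name and the example probabilities are hypothetical.

```python
import math


def breach_probability(layer_failure_probs):
    """Probability that a threat passes every layer, assuming independent layers."""
    for p in layer_failure_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    # With no layers at all the product is 1.0: nothing stops the threat.
    return math.prod(layer_failure_probs)


# Three imperfect layers, each with its own chance of letting a threat through.
combined = breach_probability([0.1, 0.2, 0.5])
```

When layers share a common weakness, for example the same credentials or the same supplier, the holes line up more often than this product suggests.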

💣 BCM is risk based: it has visible costs for the needed implementations but no visible advantages or profits. There are several layers:

Loss of physical office & datacentre.

In the early days of computing everything was located close to the office with all its users, because the technical communication lines did not allow long distances.

Batch processing was used, taking a day or longer to see results on hard-copy prints. Terminal usage was limited and needed copper-wire connections.

The disaster recovery plan was based on relocating the office with all users and the data centre in case of a total loss (disaster).

For business applications there was a dedicated backup for each of them, aside from the needed infrastructure software including the tools (applications).

⚠ The period to recover could easily span several weeks; there was no great dependency yet on computer technology. Payments, for example, did not have any such dependency in the 1970s.

⟲ RN-1.2.3 A practical taxonomy approach to multiple disciplines

Hardening is indispensable but always tailored

Asking for a hardening checklist for a tool and generalising the results:

- Identities & licensing: accountability for the process owner.

- Use dedicated Integration Users (service accounts).

Moving away from standard user licenses could be cheaper when specialized licenses exist.

Safety: Restrict tools to API-only access by default, preventing use of a UI.

- One Integration, One User to ensure auditability and limiting the "blast radius" if one system is compromised.

Safety: Never reuse a single "generic" integration account for multiple systems.

The challenge is a trade-off between the number of service accounts and the level of segmentation achieved.

- Access Control & Network Security: accountability for the process owner.

- Safety: Restrict login source ranges: set specific source ranges for the integration user.

This ensures the account can only be accessed from your known middleware or server.

When doing this for natural users you are limiting access to known locations.

- Safety: Enforce MFA for API Logins: Ensure the integration user is challenged for MFA during the initial handshake or login flow.

MFA is becoming standard for natural personal users requiring an additional manual action.

The challenge: for technical (non-personal) accounts a manual MFA action is impossible, but validation by a trusted second source is possible.

- Safety: Limit "API Enabled" Permission: Only grant the "API Enabled" permission to users (personal & non-personal) who strictly require it for their function.

The challenge is having well-defined roles, aligned to functions, that support limited scopes.

- Authorization (Least Privilege): accountability for the process owner.

- Safety: Permission Set-Led Security Model: Assign the Minimum Access, e.g. API Only profile and layer on specific permissions using Permission Sets.

- Safety: Never use the "System Administrator" profile for integrations.

The challenge: using it works quickly and at low cost when installing & configuring a tool.

The real cost is in the events when it is abused, but these costs are missing from the financial accounting.

- Object & Field Level Security (FLS): Explicitly grant Read or Edit only to the objects and fields the integration must touch. Deny access to sensitive fields like SSNs or birthdates unless absolutely necessary.

- Audit "Modify All Data": Avoid this permission at all costs.

If an integration needs to manage many records, use Sharing Rules or specific View All/Modify All permissions on a per-object basis instead.

- Connected Tools Governance: accountability for the process owner.

- Safety: Block "Uninstalled" tools: restrict all uninstalled connected tools unless they are explicitly allow-listed by an admin.

- Safety: Scope Limitation: Limit scopes to the bare minimum (e.g., use API access instead of full access).

The prevention of code injection is an example of why to do this.

A challenge: when writing code is part of the job, preventing the writing of code is not what should be done.

- Safety: Shorten Refresh Token Lifespans: Set refresh tokens to expire if they aren't used within a specific window to prevent dormant keys from being weaponized later.

Refresh tokens are used to minimize the impact of overloading with MFA requests. Overloading with MFA requests is an attack vector.

- Proactive limits in execution: accountability for the process owner.

- Safety: Use Transaction Security Policies (TSP) as shields that create real-time blocks.

The goal is to limit what can be done to what is normally expected to be done.

For example, if an integration user attempts to export more than 1000 records in a single query, automatically kill the session and alert the security team.

- Run a Security Health Check regularly: Aim for a score of 90% or higher to ensure your integration settings haven't drifted from what was intended.
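The export limit from the example above can be sketched as a small policy function. This is a generic illustration, not the API of any specific platform: `QueryEvent`, `evaluate` and the action names are hypothetical, and only the threshold of 1000 records comes from the text.

```python
from dataclasses import dataclass

MAX_EXPORT_ROWS = 1000  # threshold taken from the example in the text


@dataclass(frozen=True)
class QueryEvent:
    """A single query as seen by a (hypothetical) policy engine."""
    user: str
    is_integration_user: bool
    rows_requested: int


def evaluate(event, limit=MAX_EXPORT_ROWS):
    """Return the actions to take for this event."""
    if event.is_integration_user and event.rows_requested > limit:
        # Outside what is normally expected: block in real time and escalate.
        return ["kill_session", "alert_security_team"]
    return ["allow"]


actions = evaluate(QueryEvent("svc-erp", True, 5000))
```

The design choice is to describe normal behaviour per account type and block everything beyond it, instead of listing every possible abuse.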

Standard understandable naming conventions meta

⟲ RN-1.2.4 Building on what is known of systems for new improvements

Understanding systems, the floor practices

Why Do Manufacturing Systems Fail? (LI: K.Kohls 2026)

Because they run exactly as designed, any improvements fail for the same reason.

Most manufacturing systems don't fail because people are careless, they fail because they were designed using averages: balanced lines, one-piece flow everywhere, high OEE (Overall Equipment Effectiveness) at every station.

⏳

On paper, these designs look flawless, on the floor they create:

- growing queues, longer lead times

- chronic firefighting and "mystery" bottlenecks that seem to move every week

To understand why, we don't need another framework or philosophy; we need a dice game.

A simple simulation, used for decades, where each station rolls dice to determine output.

When variability is introduced (as it always is in real life), something uncomfortable becomes obvious very quickly: systems designed to be

- balanced, fully utilized, locally efficient

become unstable by design.
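The dice game described above can be sketched in a few lines. This is a minimal sketch, not the simulation used in the cited article series: the number of stations, the number of rounds, the six-sided die, and the rule that a station can only work on what was already waiting at the start of the round are all assumptions chosen for illustration.

```python
import random


def play_dice_game(stations=5, rounds=20, seed=1):
    """Simulate a balanced serial line; every station rolls 1..6 per round.

    Returns (finished, buffers), where buffers[i] is the work in process
    waiting between station i and station i + 1 after the last round.
    """
    rng = random.Random(seed)
    buffers = [0] * (stations - 1)
    finished = 0
    for _ in range(rounds):
        rolls = [rng.randint(1, 6) for _ in range(stations)]
        # Work from the last station backwards, so that units moved in this
        # round only become available to the next station in the next round.
        for i in reversed(range(stations)):
            # The first station is never starved of raw material.
            available = rolls[i] if i == 0 else buffers[i - 1]
            moved = min(rolls[i], available)
            if i > 0:
                buffers[i - 1] -= moved
            if i < stations - 1:
                buffers[i] += moved
            else:
                finished += moved
    return finished, buffers


finished, buffers = play_dice_game()
```

Every station has the same average capacity of 3.5 units per round, so the line is balanced on paper. Comparing `finished` with `3.5 * rounds` over several seeds is a quick way to see what variability does to output, and `buffers` shows where work piles up.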

For what happens on the floor, the question of which dimension a question belongs to is answered by engineering/administration.

These are nicely ordered.

There is a fit for what (the problem state), how, where, who, when and which.

Where ToC belongs is a duality between the how and the who, for perspectives at different levels of abstraction.

⌛

In the following article series, I'll use that dice game and basic simulation logic to show:

- why balanced lines collapse under variability

- how buffers reveal the true bottleneck

- why OEE often damages throughput

- how lead time is mostly an inventory decision

- why capable systems design bottlenecks instead of discovering them

- and why manufacturing, uniquely, resists simulation despite its success everywhere else

This isn't a critique of Lean, TOC, or any method.

👉🏾

It's a critique of intuition-driven design in a variable world.

Every claim in this series can be demonstrated, every conclusion can be validated, no belief required.

If your system feels like it's constantly fighting itself, there's a good chance it's doing exactly what it was designed to do.

- TIP: Data Collection at the Bottleneck (LI: K.Kohls 2026)

If you want sustainable throughput improvement, you need to see the bottleneck clearly.

In real time. With real data. At the point where seconds matter.

RN-1.3 The location setting for the path going to somewhere

The classification for management, executive, information was based on technical approaches for:

- Creating complex dashboards with a lot of technology details, in the assumption they would result in better decisions

- The experience of what is known at operational execution being ignored, assuming the dashboards are the truth.

- The assumption that top-down command & control (C&C) is the ultimate, only valid target operating model.

🚧 Breaking the assumption of the top down C&C.

⟲ RN-1.3.1 Searching for what is unknown for new improvements

Dashboard paralysis, two error types

Ackoff distinguished between two types of errors:

- Errors of commission: doing something that should not have been done

- Errors of omission: failing to do something that should have been done

What's striking is not the definition, it's how organisations react to them.

Errors of commission are visible.

- They show up in reports, audits, incident logs, and post-mortems.

- They are easier to point at, easier to blame, and therefore easier to punish.

Errors of omission are different.

- They leave no invoice, no variance, no accounting entry.

- Nothing happens and that's exactly the problem.

Ackoff argued that omission errors are often more critical than commission errors:

- not investing when capacity is constrained

- not addressing an obvious systemic risk

- not stopping a failing policy early

- not acting when weak signals were already there

But because traditional accounting only records what happened, not what could have happened, these errors remain invisible.

And invisibility shapes culture.

Over time, organisations learn an implicit lesson:

Doing nothing is safer than doing something.

Initiative becomes risky.

Caution becomes rational.

Inaction becomes the default, not because people don't care, but because the system quietly rewards it.

The irony?

Many of the biggest failures in organisations are not caused by bold mistakes, but by missed opportunities and delayed decisions that were never tracked, reviewed, or learned from.

Ackoff's insight is uncomfortable because it shifts the question:

Not only "What went wrong?", but also "What didn't we do and why?"

Management information systems

Gemba walks: the most honest mirror of your share (LI: Alper Ozel, January 2026)

We spend hours discussing performance in meeting rooms, but the real story is written on the shopfloor.

If we're honest, most of the problems we "discover" in reviews were visible days ago at the Gemba where value is actually created.

That's why Gemba walks are not an 'Operational Ritual'; they are mirrors of 'Leadership Behaviour'.

Done right, they transform:

- The way we see problems

- The way teams see leadership

- The speed at which we turn issues into improvements

When we walk the Gemba, we should try to look beyond "Is everything OK?".

It's also about how we lead. That's why we should also use a parallel set of leadership lenses to guide the conversation.

| Lens beyond "is it OK?" | 👐 | Conversation guidance |

| What is the process? | | How can we improve the process? |

| What is normal vs abnormal? | | How can we eliminate the abnormal? |

| What is working well? | | How can we move good to great? |

| What is not working well? | | Why is standard not being followed? |

| What is broken? | | How can we prevent broken things? |

| What is not understood? | | Why is it not understood? |

| What is creating waste? | | Why is it creating waste? |

| What is creating strain? | | How can we prevent strain? |

| What is creating unevenness? | | How can we smooth unevenness? |

| What is not visible enough? | | How can we make it visible? |

A good Gemba walk has at least six rules:

- Go with curiosity, not a checklist

- Listen more than you speak

- Ask "What makes your job difficult today?" and really wait for the answer

- Instead of asking "why are we off-target?", ask "what stops us from hitting it?"

- Always leave a trace of action

- Connect what you see to your leadership

Nothing destroys Gemba faster than leaders who walk, nod, take photos and change nothing.

Convert at least one observation into a clear action, owner, and date and follow up visibly.

Gemba Walk isn't about walking around with a clipboard.

It's about building a culture where problems are seen, spoken about, and solved together: At the place where they happen.

What is lean really about, 7 stages

How can we support lean systems? (M.Balle Nicolas Chartier 2025 planet lean)

Basic skills are in and of themselves not so obvious to pinpoint.

From our work on the shop floor, this is what we look for in our people:

- Technical skills: Knowing the job and understanding how their tools interact with their materials to produce customer satisfaction (or dissatisfaction) and at what cost - also understanding that getting the job done means improving safety, quality, timing and cost of the work.

- Seeing and listening skills: Being able to look at a situation and hear what people say about it and build a picture of the problem in their minds, beyond what they originally thought. This sounds obvious, but it turns out that seeing and listening skills are quite rare and can always be worked on (particularly in terms of seeing safety, quality, lead-time or cost issues, as well as recognizing enthusiasm or distress in people).

- Problem-solving skills: Being able to recognize and pinpoint a problem, then draw a functional analysis (how things are supposed to work) in order to spot where things are not working, knowing who to talk to about it and how to start looking for countermeasures is also a basic skill, although when you look at it it's not that basic and needs constant learning and honing.

- Teamwork skills: Some people are easy to get along with, others less so. Keeping a team task-oriented while being aware and open to members' individual moods and personal difficulties is a skill that involves both emotional empathy (being attuned to others) and cognitive empathy (seeing what they're trying to achieve) and how to switch from one to the other - as well as knowing when to follow, when to organize, and when to lead.

- Communication skills: Getting one's point across by expressing ideas clearly and concisely, as well as attentive listening to staff's concerns and suggestions, conveying to them the importance of their job and making sure to share changes that will affect them. Simple conflict resolution skills, such as making sure people feel heard and checking facts before jumping to conclusions, are critical as well.

- Looking ahead skills: This again sounds simple enough, but it is yet another basic skill people need to develop in order both to understand how things work and evaluate possible countermeasures according to impact. The ability to anticipate problems - both on tasks and on people's reactions - is an essential part of problem awareness and managerial potential.

- Leadership skills: Discovering opportunities, taking initiative and negotiating the support needed to get things moving is another basic-yet-difficult skill required to function within a lean framework and use its tools. Without this skill, tools can turn into formal activities with very little value.

Of course, one can think of many more "basic" skills than this set of seven. Yet, without minimal proficiency in these skills, problem-solving and kaizen initiatives can easily turn out to be misguided or fraught with interpersonal friction. Once the basic skills are in place, however, we can then turn to building the tool with the person - explaining how the tool works to do what - and have them practice, until we can use the tool on real-life, complex problems.

⟲ RN-1.3.2 Info

The Philosophy and Practicality of Lean Jidoka

Lean has a long history with a lot of misunderstandings.

There is, however, a duality in the fundamentals: you cannot have one without the other.

Only looking at a detailed aspect like JIT is missing what really brings value. Jidoka vs JIT

Diving deep into the Toyota philosophy, you could see this as JIT telling you to let the material flow, and jidoka telling you when to stop the flow.

This is a bit like the Chinese philosophical concept of Yin and Yang, where seemingly opposite or contrary forces may actually be complementary.

The same applies here. JIT encourages flow, and Jidoka encourages stops, which seems contrary. However, both help to produce more and better parts at a lower cost.

Unfortunately, JIT gets much, much more attention as it is the glamorous and positive side, whereas jidoka is often seen as all about problems and stops and other negative aspects.

Yet, both are necessary for a good production system.

💣 Ignoring the holistic view of the higher goal can make things worse not better.

There are several approaches in lean:

- Focus on the jobs needed for the work to be done

- Focus on the objects in flow lines that are the result of work

- Focus on the objects that are done only once, like a change of flow lines

The project shop is commonly associated with lean thinking not being applicable.

Yet the project shop, moving the unmovable, can be seen getting done with a lean approach.

Are there situations where new technology implements a lean way of working?

It is using a great invention of process improvement over and over again.

That is: the dock. Building in the water is not possible. Building it ashore raises the question of how to get it into the water safely.

🔰 Reinvention of patterns.

Moving something that is unmovable.

Changing something that has always been done that way.

Minimizing the time for road adjustment when placing a tunnel: building it took several months, but placing it, once it could be moved, was done in just 3 days.

See time-lapse. 👓 Placing the tunnel was a success; a pity the intended road isn't done after three years.

The project approach of moving the unmovable has been copied many times with the intended usage afterwards.

rail bridge deck cover

The approach is repeatable.

💡 Reinvention of patterns. Moving something that is unmovable.

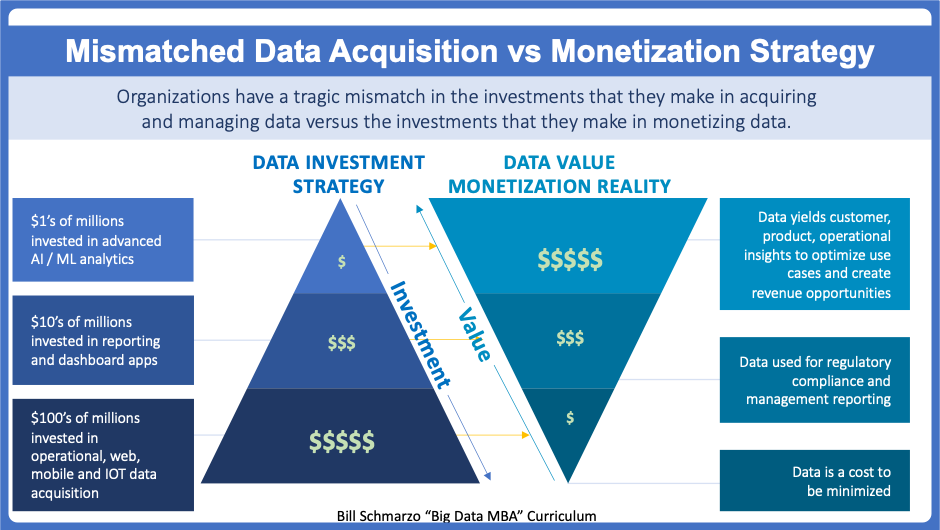

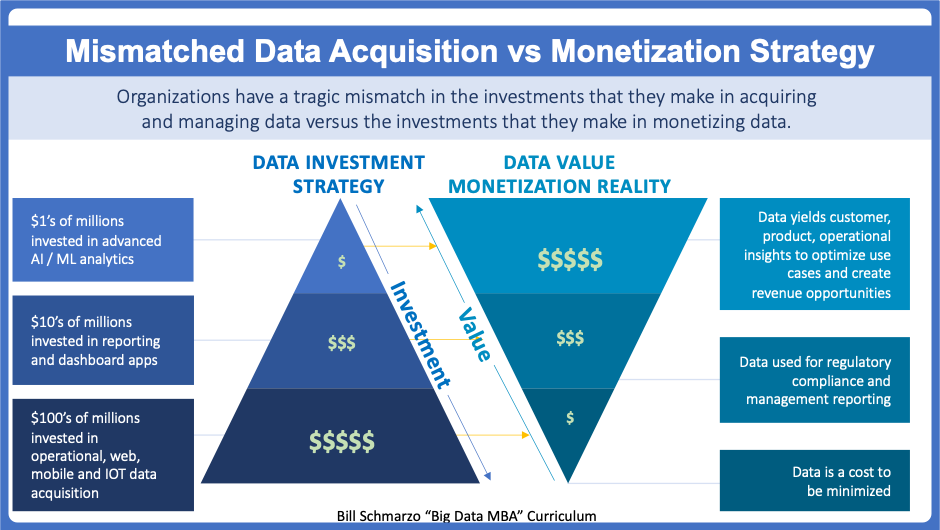

The Tragic mismatch in data strategy

A review on the topic of buzz and investments: "Organizations do not need a Big Data strategy; they need a business strategy that incorporates Big Data"

Data Strategy:

Tragic Mismatch in Data Acquisition versus Monetization Strategies. (LI: Bill Schmarzo 2020.)

The Internet and Globalization have mitigated the economic, operational and cultural impediments traditionally associated with time and distance.

We are an intertwined global economy, and now we realize (the hard way) that when someone sneezes in some part of the world, everyone everywhere gets sick.

We are constantly getting punched in the mouth, and while we may not be sure from whence that punch might come next (pandemic, economic crisis, financial meltdown, climate change, catastrophic storms), trust me when I say that in a continuously transforming and evolving world, there are more punches coming our way.

My next two blogs are going to discuss: How does one develop and adapt data and AI strategies in a world of continuous change and transformation?

It's not that strategy is dead (though at times Strategy does look like an episode of the "Walking Dead"); it's that strategy - like every other part of the organization and the world - needs to operate in an environment of continuous change and transformation.

Organizations spend 100's of millions of dollars in acquiring data as they deploy operational systems such as ERP, CRM, SCM, SFA, BFA, eCommerce, social media, mobile and now IoT.

Then they spend even more outrageous sums of money to maintain all of the data whose most immediate benefit is regulatory, compliance and management reporting.

No wonder CIOs have an almost singular mandate to reduce those data management costs (hello, cloud).

Data is a cost to be minimized when the only "value" one gets from that data is regulatory, compliance and management risk reduction.

Companies are better at collecting data, about their customers, about their products, about competitors, than analyzing that data and designing strategy around it.

Too many organizations are making Big Data, and now IOT, an IT project.

Instead, think of the mastery of big data and IOT as a strategic business capability that enables organizations to exploit the power of data with advanced analytics to uncover new sources of customer, product and operational value that can power the organization's business and operational models.

To exploit the unique economic value of data, organizations need a Business Strategy that uses advanced analytics to interrogate/torture the data to uncover detailed customer, product, service and operational insights that can be used to optimize key operational processes, mitigate compliance and cyber-security risks, uncover new revenue opportunities and create a more compelling, more differentiated customer experience.

But exactly how does one accomplish this?

- By focusing on becoming value-driven, not data-driven.

⟲ RN-1.3.3 Info

Using BI analytics

Using BI analytics in the security operations centre (SOC).

This technical environment of BI usage is relatively new. It demands very good runtime performance with well-defined, isolated and secured data. There are some caveats:

⚠ Monitoring events, ids, may not be mixed with changing access rights.

⚠ Limited insight at security design. Insight on granted rights is done.

It is called security information and event management (SIEM), a subsection within the field of computer security, where software products and services combine security information management (SIM) and security event management (SEM). They provide real-time analysis of security alerts generated by applications and network hardware.

Vendors sell SIEM as software, as appliances, or as managed services; these products are also used to log security data and generate reports for compliance purposes.

Using BI analytics for capacity and system performance.

This technical environment of BI usage is relatively old; it aims at optimizing the technical system so it performs better. It involves defining containers for processes and implementing a security design.

⚠ Monitoring systems for performance is bypassed when the cost is felt to be too high.

⚠ Defining and implementing a usable agile security design is hard work.

⚠ Getting the security model and monitoring for security purposes is a new challenge.

It is part of ITSM (IT service management).

Capacity management's primary goal is to ensure that information technology resources are right-sized to meet current and future business requirements in a cost-effective manner. One common interpretation of capacity management is described in the ITIL framework.

ITIL version 3 views capacity management as comprising three sub-processes: business capacity management, service capacity management, and component capacity management.

In the fields of information technology (IT) and systems management, IT operations analytics (ITOA) is an approach or method to retrieve, analyze, and report data for IT operations. ITOA may apply big data analytics to large datasets to produce business insights.

Loss of confidentiality: compromised information.

Getting hacked after being compromised by whale phishing is getting a lot of attention.

A whaling attack, also known as whaling phishing or a whaling phishing attack, is a specific type of phishing attack that targets high-profile employees, such as the CEO or CFO, in order to steal sensitive information from a company.

In many whaling phishing attacks, the attacker's goal is to manipulate the victim into authorizing high-value wire transfers to the attacker.

Government Organisation Integrity.

Different responsible parties have their own opinion how conflicts about logging information should get solved.

🤔 Once information is deleted permanently, there is no way to recover when that decision turns out to be wrong.

🤔 The expectation that it would be cheaper and of better quality is a promise without warrants.

🤔 With no alignment between the silos, there is a question about the version of the truth.

⟲ RN-1.3.4 Info

The challenge in adults and continuous learning

Individual learning (Walter Smith book review 1987)

The concept of learning style and its subsequent utilization in learning programs has grown out of the realization that traditional group instruction methods are not adequate for modern education systems. With new technologies rapidly creating a labor market where there is virtually no unskilled labor, the traditional group instruction approach to learning, with its process of eliminating slower students, has been deemed totally inadequate (Knaak, 1983).

The Paradox, duality- dichotomy:

Adults need to be able to cope with and respond to diversity, contradictions, dilemmas, and paradoxes.

These are listed by Brundage and MacKeracher (1980) as the dynamic equilibrium between

- stability and change,

- exposure to threat of failure and loss of self-esteem,

- the threat of becoming overqualified for their current work or

- throwing their personal relationships out of balance,

- and the conflict between the need to be needed and need to learn independency.

While some stress is normal and necessary to stimulate challenge in the learning environment, it may also create anger and frustration.

Anger was alleviated in this project by explaining to the students that it was a normal part of the learning process and by helping each of them deal with it in their own way.

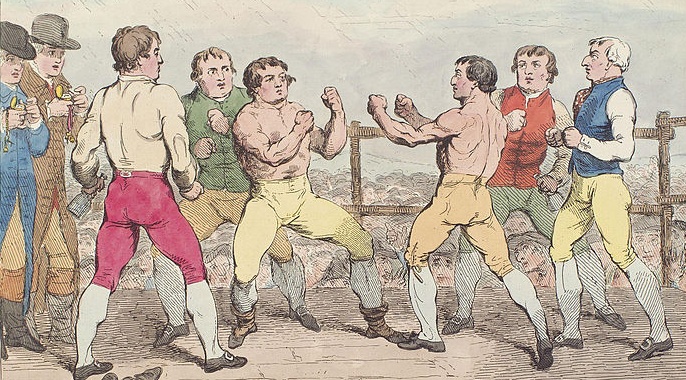

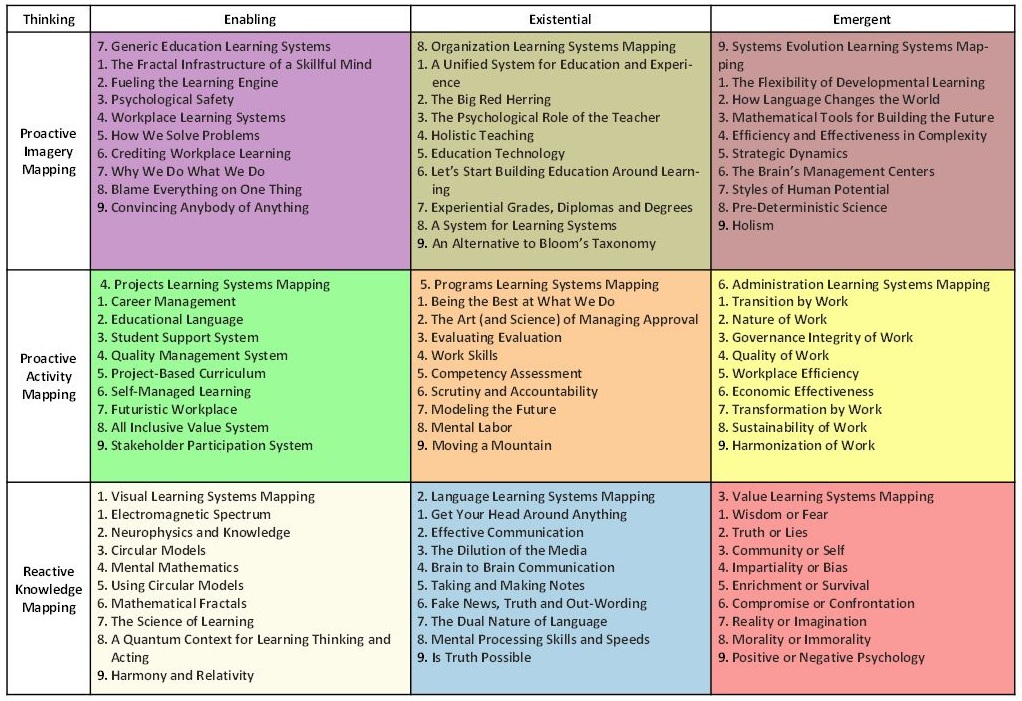

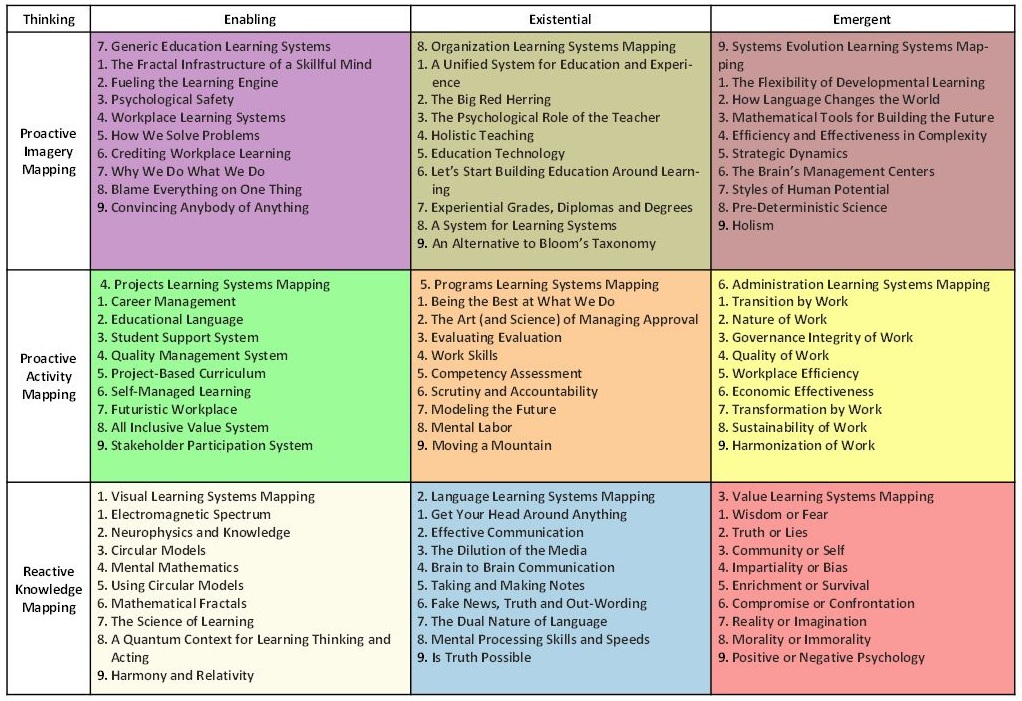

Affective Learning systems mapping (LI: walter Smit 2026)

Administrative learning systems set the stage for dynamic management.

Everyone was on the same page, and the page could be adapted to management needs.

In short, learning systems are people systems. They lay a foundation for continuous problem solving that is interconnected throughout the school or business.

Decisions can be made at different levels so that the entire system flexes with the smallest change.

The interesting part of this taxonomy is a nine-cell plane in which each of the cells mentions 9 items.

| Thinking | Enabling | Existential | Emergent |

| Proactive imagery | Generic Education learning | Organization Learning Systems | Systems Evaluation Learning systems |

| Proactive activity | Projects learning Systems | Programs Learning Systems | Administration Learning systems |

| Reactive knowledge | Visual Learning Systems | Language Learning Systems | Value learning systems |

RN-1.4 Harsh conditions inclusiveness for going to somewhere

The classification for management, executive, information was based on technical approaches for:

- Creating complex dashboards with a lot of technology details, in the assumption they would result in better decisions

- The experience of what is known at operational execution being ignored, assuming the dashboards are the truth.

- The assumption that top-down command & control (C&C) is the ultimate, only valid target operating model.

🚧 Breaking with the far too complex dashboards.

⟲ RN-1.4.1 Redefining the how in getting decision knowledge for changes

The threat of failure and loss of an eco-system - IIT OIT ICT

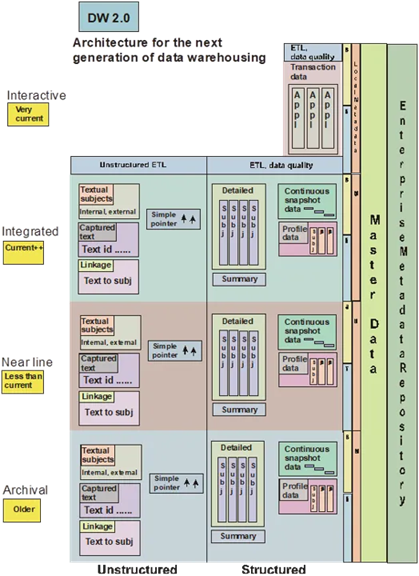

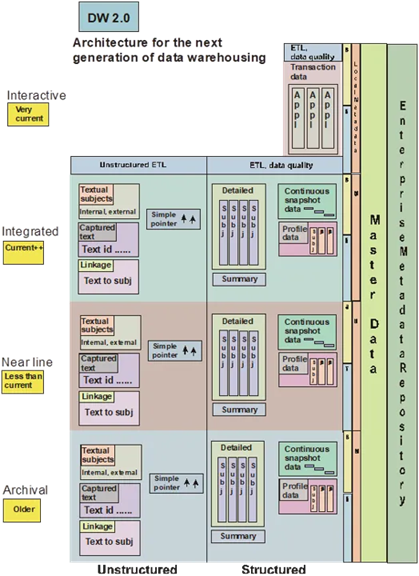

For a long time the "data-driven" dogma has been equated with building what is labelled a data warehouse (DWH) or data lake.

Nobody dares to challenge this: there is a huge profitable market and a feeling of safety in best practices.

The similarity to a physical warehouse should open up questions.

- Is knowledge found in a storage location full of some materials?

- Does looking around in a storage location reveal what could change or what is needed?

❌

💰

The answer is a simple and understandable no: no one would make those kinds of claims in the physical world.

Those with better approaches would very quickly replace anyone who did.

➡ For knowledge you are going to a library that has access to scientific sources.

Details in what a warehouse, storage location could be:

👉🏾 Self-service sounds very friendly, it is a euphemism for no service.

Collecting your data, processing your data, yourself.

Get it from a location where someone stored a lot that you can possibly use.

👉🏾 The limitation: Very likely not what you are needing .... unless

👉🏾 it is about getting measurement tooling helping in understanding.

💡 Really doing measurements is new information for IT

IIT.

It can become very messy when there is no agreement on what should get measured.

Different accountabilities have their own opinion.

❓ How should conflicts get solved?

The easy way is outsourcing issues to external parties.

This brings new viewpoints and perspectives, and most likely increasing tensions.

🤔 The expectation that outsourcing is cheaper and of better quality is a promise without warrants.

🤔 With no alignment between the silos, there is a growing problem that easily breaks the system as a whole.

❌ These are the classic ICT battles for technology (no progress).

Have it prepared and transported for you so it can be processed for you.

The advantage is a well-controlled environment that is also capable of handling more sensitive material (confidential secrets).

💡 Seeing operational flow is information for IT

OIT.

Breaking the classic DataWareHousing: EDWH, Data Lake - IIT OIT ICT

Process

Data lakes are where context goes to die. (LI: Adam Walls 2026)

Data model harmonisation projects that never finish.

Master data management that assumes there's one canonical truth.

ETL pipelines are built on the assumption that you know what questions you'll ask before you ask them.

- You take data from a system that understands it. You strip it of its local meaning.

- You transform it into some canonical schema. You dump it in a lake.

- Then you spend months building pipelines to make it useful again.

- By the time you've done all that, reality has moved on. The schema is already out of date. The data is already stale.

⏳ Breaking what has always been done.

The real problem is deeper than technology.

When you centralise data you're making an assumption that variation is error, different business units having different definitions is a problem to be solved.

It's not, it's information.

Different parts of your organisation define things differently for good reasons.

- Regional variations reflect local markets.

- Operational differences reflect how work actually gets done.

- When you force everything into one schema you don't eliminate that variation.

- You just push it underground where you can't see it.

So what's the alternative?

⌛ Refactoring into an experience plane.

Stop trying to unify, start federating, send the question to the systems that own the data.

Let each system answer in its own terms.

Translate the responses into a shared vocabulary and then read what the differences between those answers actually mean.

The delta between what your CMDB says and what your network traffic shows isn't an error, it's your attack surface.

The delta between what your documentation claims and what your audit logs reveal isn't a governance failure, it's your compliance reality, the gap is the information.
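The federating idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the two source functions, the host names and the naming conventions are all hypothetical. Each system answers in its own terms, the answers are translated into a shared vocabulary, and the differences are kept as information instead of being reconciled away.

```python
from typing import Callable, Dict, Set

# Hypothetical system adapters: each owning system answers the same
# question ("which hosts exist?") in its own local terms.
def cmdb_hosts() -> Set[str]:
    # Assumed convention: the CMDB records fully qualified upper-case names.
    return {"WEB01.CORP.EXAMPLE", "DB01.CORP.EXAMPLE", "APP01.CORP.EXAMPLE"}

def network_hosts() -> Set[str]:
    # Assumed convention: traffic observation yields short lower-case names.
    return {"web01", "db01", "legacy07"}

def to_shared_vocabulary(name: str) -> str:
    """Translate a local host name into the shared form: short and lower case."""
    return name.split(".")[0].lower()

def federate(sources: Dict[str, Callable[[], Set[str]]]) -> Dict[str, Set[str]]:
    """Send the question to every owning system and translate each answer."""
    return {
        system: {to_shared_vocabulary(item) for item in ask()}
        for system, ask in sources.items()
    }

def read_deltas(answers: Dict[str, Set[str]], documented: str, observed: str) -> Dict[str, Set[str]]:
    """Keep the differences between two answers as information."""
    return {
        "observed_not_documented": answers[observed] - answers[documented],
        "documented_not_observed": answers[documented] - answers[observed],
    }

answers = federate({"cmdb": cmdb_hosts, "network": network_hosts})
deltas = read_deltas(answers, documented="cmdb", observed="network")
print(deltas)
```

In this invented example the host seen on the network but absent from the CMDB is the kind of gap the text calls the attack surface; nothing was copied into a central store to find it.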

Pathologies by wrong expectations for data driven - dashboards

Many organizations believe they're data-driven (dashboards, KPIs, and reporting), but too often, real conversations in the boardroom are neglected.

It's undeniable that complexity is increasing both within and outside organizations.

Technology is developing faster than people can keep up. And yet, we still overinvest in systems while the organization's most important asset isn't on the balance sheet: its people.

Data-driven work doesn't start with dashboards, but with behavior and with leaders who dare to say: we use data to learn, not to settle accounts, and to empower our people.

Combining and adjusting the text from a high level goal for what is data-driven and what the relationships are (LI: Feb. 2026 R.van den Wijngaard) and from what is usually done but doesn't help for improvements (LI: Jan. 2026 R.Borkes).

Many organizations invest heavily in data management: data governance, data platforms, data quality, definitions, and tools.

And yet, the same problems keep recurring: delays, frustration, unreliable management information, low adoption.

The reason: data management doesn't fix process failures; at most, it masks them.

⚒️

The question for what is going on in the flow.

- Errors and Defects: "Data - reports that get it right first time"

Many information products fail to meet customer needs, not because the data is bad, but because the process is failing.

- Process errors, interpretation errors, and operational errors

- reports, dashboards, analyses that are technically correct but functionally deficient.

Data management can standardize data, it can't fix a wrong question.

- Re-editing and Correction "Export the report - results to a spreadsheet"

When information is no longer usable, it is re-edited, corrected, or "touched up," often multiple times.

This is hidden waste:

- Extra analysis time, Extra fine-tuning, Extra validation steps

- In effect you fix bad products rather than prevent them.

Data management can help in reporting on processes, it can't fix wrong processes.

- Control "The convincing single truth to others by a report"

There is a direct relationship between:

- Low process reliability, High control pressure

- More controls: reviews, checks, reconciliations, and shadow administration.

Data management facilitates control, but it is not a substitute for robust process design.

- Waiting "waiting for: the right data."

Decision-making and implementation are waiting not because data is lacking, but because:

- Definitions are ambiguous, ownership is unclear, processes are not synchronized

- Waiting time for decisions is pure waste, and often administratively invisible.

EIS systems can help in decision making, but are no substitute for resilient decision making.

⚙️

The question of what it takes to achieve defined states.

- Inventory The more inventory, the lower the agility.

In service and administrative processes, inventory isn't a physical pile, but rather:

- data still in progress, Backlogs, open files, unfinished analyses.

- Getting better insight into what are inventory items.

Data management provides insight, but it doesn't automatically optimize it.

- Moving data Blindly following "best practices" and external consultancy advisories

Storing data, information from operational systems into:

- data lakes, data warehouses, integration layers

This seems rational, but it is fragile, time-consuming, and expensive; every transfer introduces:

- delay, interpretation risk, maintenance burden

- The assumption: more centralized data ⇄ less complexity.

Data duplication followed by manipulations is not a replacement for sensors.

- Overprocessing Not because the organization needs it, but because it can.

Spending more time on data than strictly necessary:

- extra analyses, extra dashboards, extra levels of detail

- Data management sometimes encourages precision where simplicity suffices.

- "Underutilizing Data"

Perhaps the most costly mistake: Data with high intrinsic value that:

- is not linked, is not interpreted, is not used in decision-making

- Not due to a lack of technology, but due to a lack of context, ownership, and direction.

The inconvenient truth is that Data Management optimizes what already exists, it doesn't transform anything.

True value only arises when data management becomes subordinate to:

- process design, management questions

- explicit management information, clear responsibilities

The question shouldn't be "Is our data management system right?", but:

⚖️

"What waste are we perpetuating with perfect data management?"

When "waiting for: the right data." and "Underutilizing Data" are seen as the same, forming a connection point, then the first three (1,2,3) describe negatives in what could be "power" and the last three (5,6,7) are negatives for "speed".
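The inventory point above ("the more inventory, the lower the agility") can be made tangible with Little's law, a standard queueing result that is not part of the cited texts: average lead time equals average work in progress divided by average throughput. The sketch below uses invented figures, and the function name is only illustrative.

```python
def average_lead_time(work_in_progress: float, throughput_per_day: float) -> float:
    """Little's law: average lead time = average WIP / average throughput."""
    if throughput_per_day <= 0:
        raise ValueError("throughput must be positive")
    return work_in_progress / throughput_per_day

# Hypothetical figures: backlogs, open files and unfinished analyses
# all count as inventory in an administrative process.
for open_items in (20, 60, 120):
    days = average_lead_time(open_items, throughput_per_day=4)
    print(f"{open_items} open items at 4 per day: {days:.0f} days average lead time")
```

With throughput unchanged, every extra open item lengthens the average wait for all items, which is one way to read the loss of agility.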

⟲ RN-1.4.2 Searching for the how in getting knowledge for improvements

Connecting ICT - administration to agile lean, information flow.

The flow lines approach fits work done in mass of the same kind (OIT); the work of changing flow lines is more similar to one-off projects.

🎭 When a project shop is better in place, why not copy this approach for ICT?

In this perspective the administrative work of moving the papers around is Operational IT (OIT), supported by Administrative IT (AIT) and managed using Informational IT (IIT).

Flow lines (👓)

are often the best and most organized approach to establish a value stream.

The "easiest" one is an unstructured approach.

The processes are still arranged in sequence; however, there is no fixed signal when to start processing at those.

During the process, errors are to be corrected within the flow.

💡 The reinvention of common flow patterns using the information flow (OIT), similar to a physical assembly line.

Aside from the standard operational activities there are administrative processes associated with them.

The difference between OIT and AIT is difficult to see because the technology used is identical, but reviewing the goal of the activity should make the difference clear.

⚠️ Information processes are different to physical ones that use physical objects.

- Information is easily duplicated. Creating copies gives some feeling of independence from others.

That feeling has a cost: losing awareness of what the value chain is.

- Technology and ICT buzz hide the real issues and ignore problem signals in the value chain.

- Information object components are often not complete in the needed OIT value assembly chain.

Without the physical and administrative verification (AIT) it can easily slide into chaotic, confusing working practices.

Failures and mistakes are blamed on the machine ("computer says no") where the value process chain is the real problem, but that goes unnoticed (IIT).

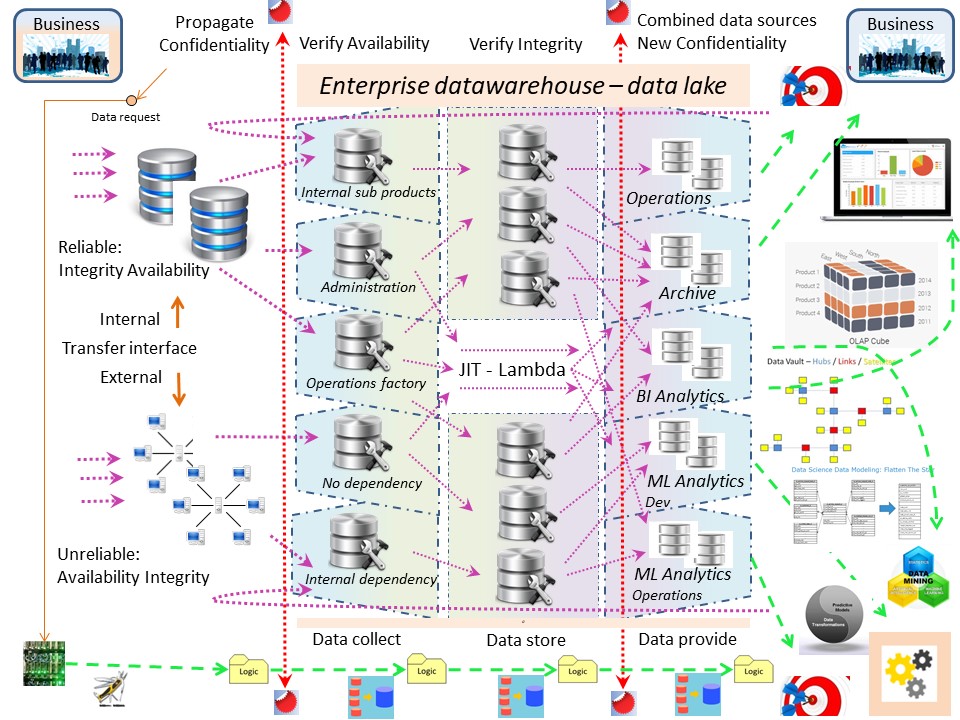

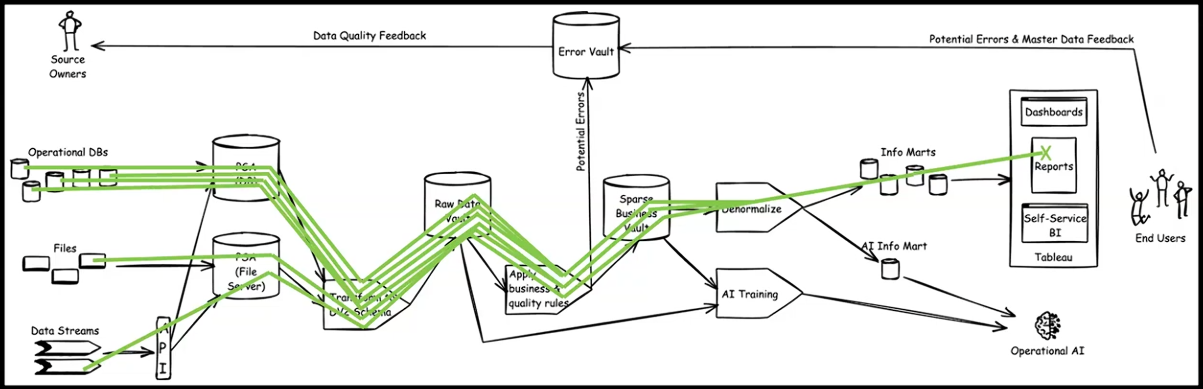

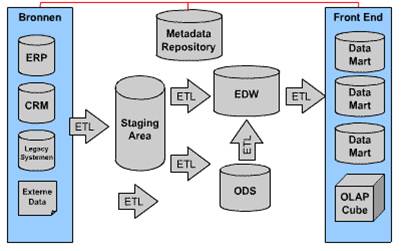

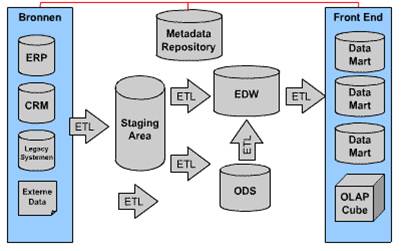

DataWareHousing, Information flow based - EDWH 4.0.

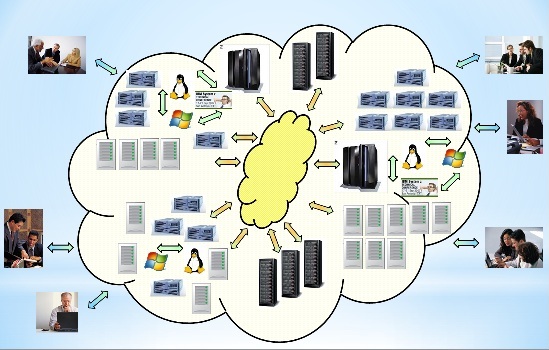

Repositioning the Datawarehouse as part of an operational flow makes more sense than the sole purpose of informing some management.

This shift has a big impact because it forces seeing all information as a whole and not as isolated components or silos.

A compliancy gap getting a solution:

💡

There are two important phase shifts to be made for a very different approach in building up this enterprise information data warehouse.

- 👉🏾 All consumers and providers valid for: Archive, Operations, ML operations

- 👉🏾 Every information container must have a clear ownership.

- All information having a shared understanding in the area of its usage.

A generic data model for relations between all information elements - information containers.

Safety accountability is an inseparable property of the system.
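The ownership rule for information containers can be sketched as a small data structure with a check. This is an illustration only: the class, its field names and the example containers are assumptions, not a model taken from the page.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class InformationContainer:
    """Hypothetical record for one information container."""
    name: str
    owner: str        # accountable party; an empty value means ownership is unclear
    usage_area: str   # area in which the meaning of the content is shared
    consumers: List[str] = field(default_factory=list)  # e.g. archive, operations, ml_operations

def ownership_gaps(containers: List[InformationContainer]) -> List[str]:
    """Return the names of containers that have no clear owner."""
    return [c.name for c in containers if not c.owner.strip()]

# Invented examples covering the three kinds of consumers named in the text.
containers = [
    InformationContainer("customer_orders", owner="sales_operations",
                         usage_area="order handling",
                         consumers=["operations", "archive"]),
    InformationContainer("scoring_features", owner="",
                         usage_area="risk",
                         consumers=["ml_operations"]),
]
print(ownership_gaps(containers))
```

Making the owner a mandatory attribute of every container keeps accountability attached to the information itself rather than to a separate register.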

In a figure:

🔏 Getting a phase shift into integration and safety is hard.

⟲ RN-1.4.3 The interaction of four forces to improvements by knowledge

First contact in abstractions to enterprise engineering

Understanding and Modelling Business Processes with DEMO (J.Dietz 1999)

DEMO (Dynamic Essential Modelling of Organizations) is a methodology for modelling, (re)designing and (re)engineering organizations.

A way of thinking about organization and technology that has originated from a deep dissatisfaction with current ways of thinking about information systems and business processes.

These current ways of thinking fail to explain coherently and precisely how organization and ICT (Information and Communication Technology) are interrelated.

Its theoretical basis draws on three scientific sources of inspiration:

- Habermas' Communicative Action Theory, Stamper's Semiotic Ladder and Bunge's Ontology.

The core notion is the OER-transaction, which is a recurrent pattern of communication and action.

- The per-forma of a piece of information is the effect on the relationship between the communicating subjects, caused by communicating the thought.

It is determined by both the illocution and the proposition of the communicative action, and is further dependent on the current norms and values in the shared culture of the communicating subjects.

- Every piece of information has a forma, meaning that it has some perceivable structure carried in some physical substance.

This structure must be recognizable as being an expression in some 'language', which is the case if the forma con-forms to the syntactical rules of that language.

- The in-forma is the meaning of the forma, the reference to some Universe of Discourse, as defined by the semantics of the language.

The aspect in-forma also includes the pragmatic rules of the language, like the choice of the right or best forma to express some in-forma in specific circumstances.

The distinction between the three aspects per-forma, in-forma, and forma, gives rise to the distinction of three corresponding levels at which information in an organization has to be managed: the essential level, the informational level, and the documental level respectively.

💡 Solving gaps between silos in the organisation supports the value stream.

Having information aligned by the involved parties avoids different versions of the truth.

It is easier to consolidate that kind of information at a centrally managed (BI analytics) tactical - strategical level.

The change to achieve this is one of cultural attitudes. That is a top down strategical influence.

Combining information silos & layers.

Indeed there are gaps. The question should be: is there a mismatch, or have the wrong questions been asked?

In the value stream flow there are gaps between:

- Operational processes, in the chain of the product transformation - delivery.

- Delivering strategical management information, assuming the silos in the transformation chains - delivery are cooperating.

- Extracting, creating management information within the silos between their internal layers.

When these issues are the real questions, the real problems to solve:

- Solve the alignment between operational processes and the value stream of the product. Both parties need to agree on a single version of the truth.

- Solve the alignment in extracting, creating management information within the silos between their internal layers. There are two lines of separation in context.

- Use the management information within the silos as consolidated information in delivering strategical management information.

The resistance for change in information processing

Changing a background in promoting agile to the narrowed setting of reporting analytics as the data management paradigm.

A presentation for skills for business analysts (Scott Ambler feb 2026)

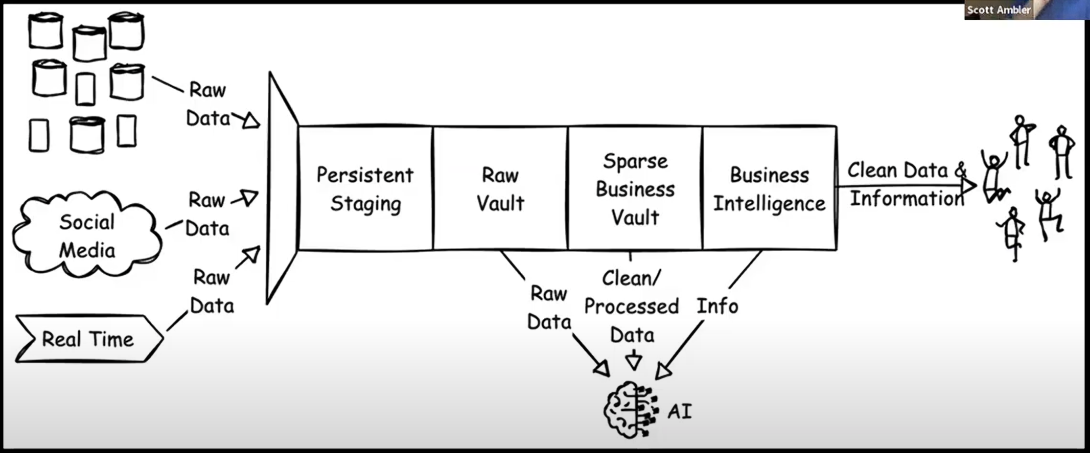

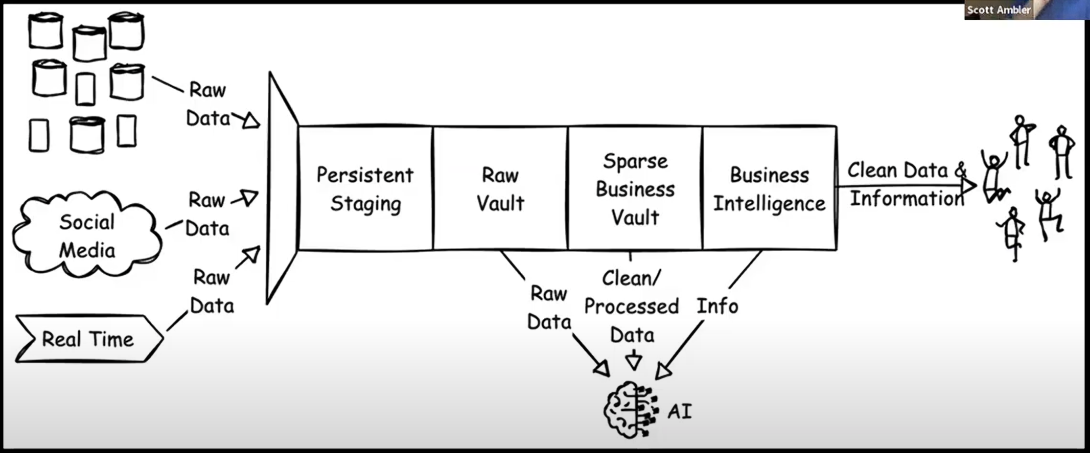

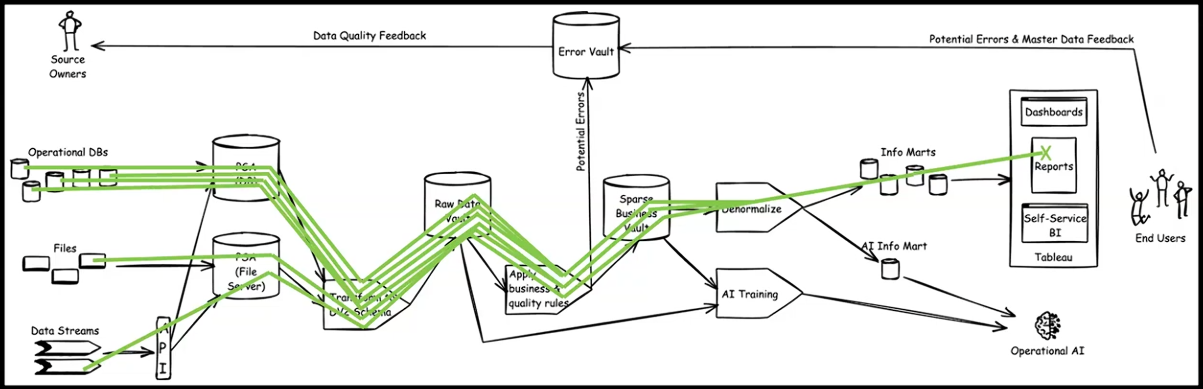

Classic IIT only Collecting and processing for management (BI).

See figure right side.

EIS/MIS systems:

➡ Goal informing managers to decisions (IIT)

➡ Shadow usage solving broken value stream processes (OIT, AIT)

Chaotic complexity No sensors, but data duplications that are treated as if they were sensors.

See figure right side.

Data profiling:

➡ Acting on operational data

➡ Seeing file interactions

➡ Understanding data streams

It is ambiguous to see references to Agile, e.g. "The Agile data Warehouse design" based on answering Who, What, When, Where, How many, Why, How, and the use of Obeya and scrum, while in practice everything is still done as it has always been done.

Still the long chaotic chain of extracts, data lineage, on duplicated data and no usage of sensors for metrics.

🔏 Getting into a real fundamental change is hard.

⟲ RN-1.4.4 Alignment by three domain types to four time perspectives

Change data - Transformations

the modern data stack (Xebia blog feb 2026)

Before the rise of the "Modern Data Stack," the landscape was dominated by Relational Databases, primarily designed to provide key figures and trends.