JST JCL Mainframe

Job Submit Tool, test automation - JCL structuring

Making the work of ICT colleagues easier.

Looking at and analyzing what colleagues are doing, so that improvements and corrections can be planned (PDCA).

Progress

- 2019 week:12

- Started trying a conversion from the old site.

- 2019 week:16

- Adjusting to the finished frame.

Contents

| Reference | Topic | Squad |
|---|---|---|
| Intro | Making the work of ICT colleagues easier | 01.01 |
| Environment | JST Introduction environment | 02.01 |
| AutoJob | Structure of Automated JOB Processing | 04.01 |
| | ISTQB JST-JCL relationship | 04.02 |
| StandardJob | Standard JCL JOB procedures | 07.01 |
| DTA Support | DTA support procedures (Develop, Test, Acceptance) | 09.01 |
| Evaluate | Evaluating | 11.00 |
| | Missing for deploying JST as a service (JST-AAS) | 11.01 |
| | Words of thanks to my former colleagues | 11.02 |

JST Introduction environment

What is JCL?

JCL (Job Control Language) is the scripting language used on a classic IBM mainframe to run the processes of the business.

JCL can also be used to run a lot of other logic, as JCL is just a language.

Before you can do anything to optimize how JCL runs the business processes, you must structure the way JCL is coded.

JCL has a huge advantage: it moves the physical data references out of the program source code and into the JCL.

By doing so, you define which data is used at the moment the job runs: a just-in-time (JIT) definition.

Any business software program can then be reused across the DTAP setting without being modified.

Avoiding unnecessary modifications to business software (production, acceptance) is a quality and compliance requirement.
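To make the just-in-time binding concrete, here is a minimal Python sketch that renders one JCL step for a chosen DTAP stage. The program (the hypothetical `PAYCALC`) only knows its DD names; the generator decides which dataset each DD name points to. The stage prefixes and the `APPL` qualifier are assumptions, not a real site's naming convention.

```python
# Hypothetical high-level qualifiers per DTAP stage; real ones are site-specific.
PREFIXES = {"D": "DEV", "T": "TST", "A": "ACC", "P": "PRD"}


def exec_step(step: str, program: str, dd_names: list[str], stage: str) -> list[str]:
    """Render one JCL step whose input DD statements are bound to a DTAP stage."""
    prefix = PREFIXES[stage]
    lines = [f"//{step:<8} EXEC PGM={program}"]
    for dd in dd_names:
        # The program only refers to the DD name; the dataset is chosen here.
        lines.append(f"//{dd:<8} DD DSN={prefix}.APPL.{dd},DISP=SHR")
    return lines


for stage in ("T", "P"):
    print("\n".join(exec_step("STEP01", "PAYCALC", ["INFILE"], stage)))
```

The EXEC line is identical in test and production; only the DSN changes, which is why the program itself never has to be touched.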

JCL script Structuring

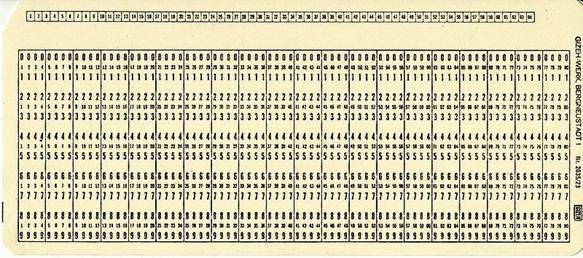

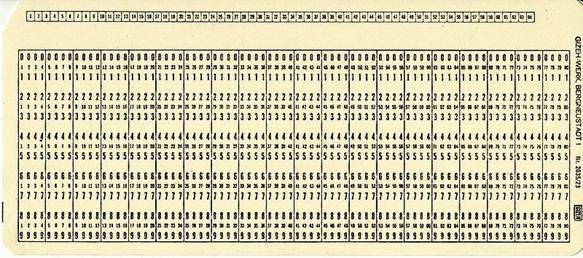

In the old days everything was on Hollerith cards.

After migrating away from the cards and eliminating those physical artifacts, not much changed.

A modern approach was implemented only in some rare cases.

Putting a stack of Hollerith cards into a mechanical reader to run something is hard manual work, limited by the weight of the paper and by the way paper cards have to be stored.

The first step was converting those cards into digital datasets on DASD.

The next step is to forget the limitations of those old physical cards:

- the sequence numbers in columns 73-80 are no longer needed, as the risk of physically dropping the deck is gone

- there is no need to build a complete card deck, as you can easily get JCL modules from multiple digital datasets
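Both points can be sketched in Python, with card images as strings and libraries as dictionaries of modules (all names are hypothetical): the sequence field in columns 73-80 is dropped, and each module is taken from the first library that contains it, similar to how a concatenation of libraries is searched.

```python
def strip_sequence(card: str) -> str:
    """Drop the sequence numbers in columns 73-80 of an 80-column card image."""
    return card[:72].rstrip()


def assemble(modules: list[str], libraries: list[dict[str, list[str]]]) -> list[str]:
    """Build a job from named modules, searching the libraries in order,
    the way a concatenation of partitioned datasets is searched."""
    deck: list[str] = []
    for name in modules:
        for library in libraries:
            if name in library:
                deck.extend(strip_sequence(card) for card in library[name])
                break
        else:  # no library contained the module
            raise KeyError(f"module {name} not found in any library")
    return deck


site_lib = {"HEADER": ["//PAYRUN   JOB CLASS=A".ljust(72) + "00000010"]}
appl_lib = {"BODY": ["//STEP01   EXEC PGM=PAYCALC"]}
print("\n".join(assemble(["HEADER", "BODY"], [site_lib, appl_lib])))
```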

JST Introduction environment (continued)

Environment details

This rather simple question does not hide a single problem but several: job submitting, the development life cycle, and the availability of technical tools.

The IBM mainframe approach is no longer the leading ICT solution it was in the 1970s. Better and cheaper options have been introduced since those early years.

As an example of analysing and solving an ICT problem, it is still a nice case.

Here it concerns how code is structured during development, and the organizational impact of that.

Life cycle JCL transition

The change is a structured approach to generating and using JCL.

I designed that in coöperation with others.

The old jobs are split up into basic building blocks, which are reused in every job for every type of usage.

⚙ When you can generate JCL, you can also generate many different variants of it, with the goal of segregated parallel testing.

Starting the next job when one has finished becomes as easy as a manual start.

💰 The time saving is obvious: something is done once, instead of the same thing being done over and over again by different people.

💣 It is difficult to get this accepted by the ICT staff. Remember: you are (I am) automating the daily work of one or more persons (ICT staff).

Goals of Automated JOB Processing:

- Automated testing: regression testing as well as new logic and fixes for found issues

- Preparing the data needed for tests

- Preparing jobs / scripts in a low-code approach

- Automated running of all the jobs / scripts

- Saving, archiving and comparing the results
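For the comparing goal, a regression check can be as simple as diffing the archived baseline output against the new run. A sketch with Python's standard `difflib`, assuming results are available as text lines; treating lines that start with `RUN DATE` as volatile is an illustrative assumption.

```python
import difflib


def compare_results(baseline: list[str], current: list[str],
                    ignore_prefixes: tuple[str, ...] = ()) -> list[str]:
    """Return a unified diff of two result listings; an empty list means the
    new run reproduced the archived baseline."""
    def relevant(line: str) -> bool:
        # Volatile lines such as run dates would always differ, so skip them.
        return not line.startswith(ignore_prefixes)

    old = [line for line in baseline if relevant(line)]
    new = [line for line in current if relevant(line)]
    return list(difflib.unified_diff(old, new, "baseline", "current", lineterm=""))


baseline = ["RUN DATE 2019-03-18", "TOTAL 1000.00"]
current = ["RUN DATE 2019-04-15", "TOTAL 1000.05"]
print("\n".join(compare_results(baseline, current, ignore_prefixes=("RUN DATE",))))
```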

Structure Automated JOB processing

Basic design

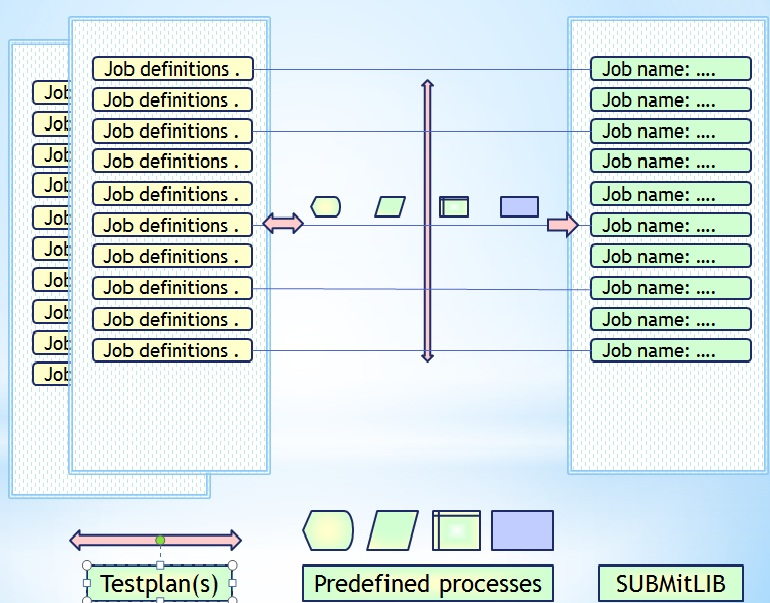

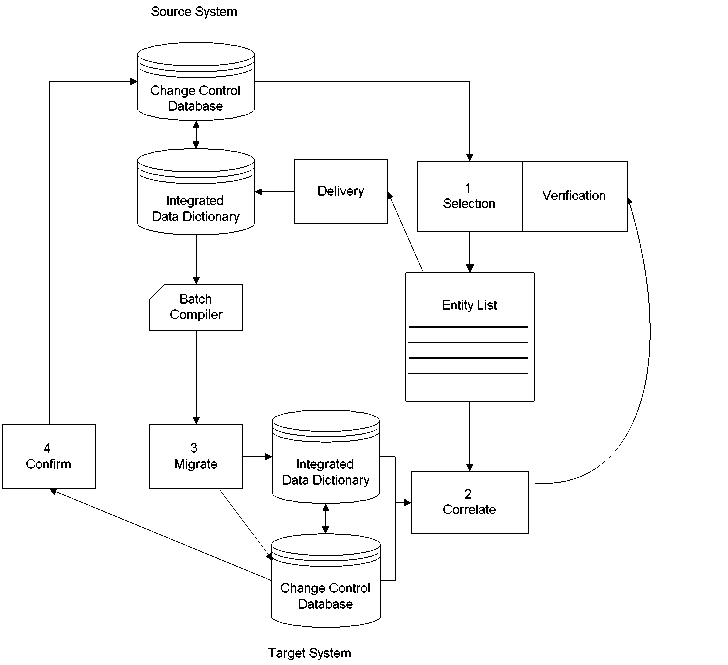

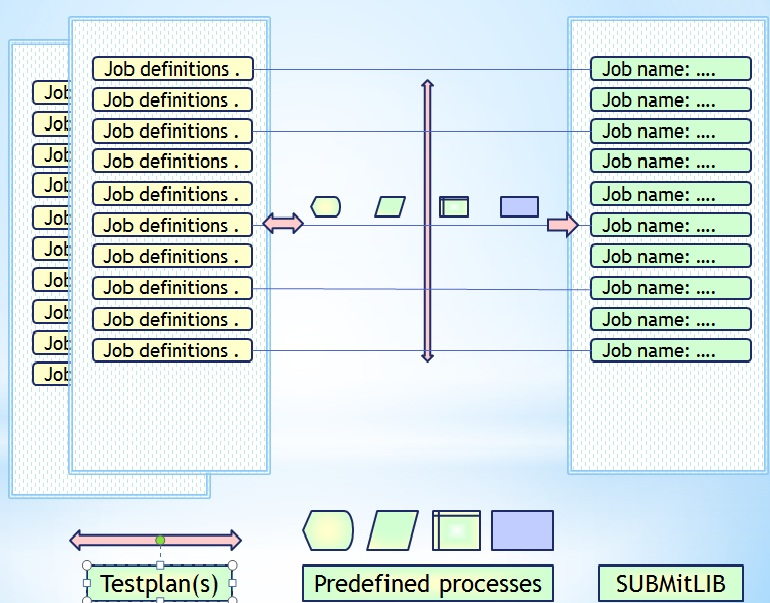

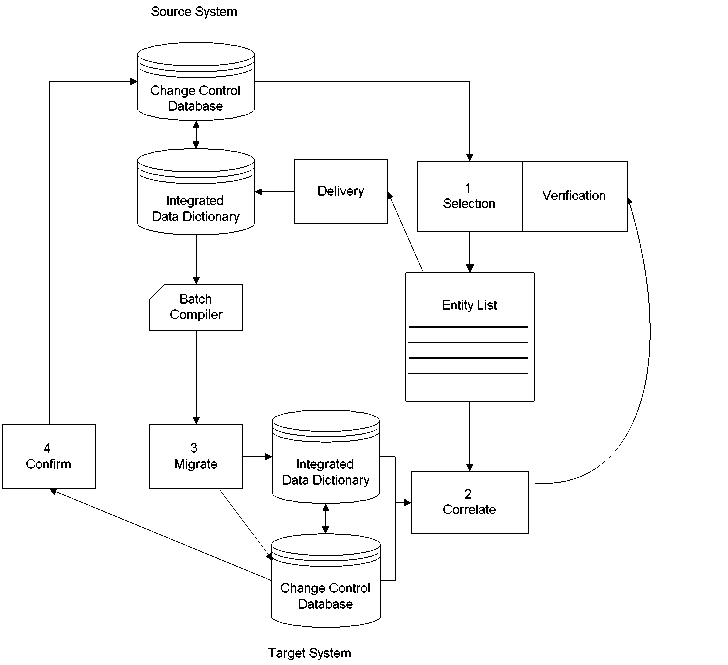

This looks a little complicated; the basics are:

- When all jobs/scripts have been generated, trigger the entire flow.

- Jobs are built into <SUBMiTLIB> by selecting choices out of <predefined processes>.

- All choices made are archived and can be restored, so everything can be rebuilt completely.
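The archive-and-rebuild idea can be sketched in Python: if a job is generated only from the recorded choices, serializing those choices is enough to rebuild it exactly. The process catalogue, program names and JOB parameters below are hypothetical placeholders, and JSON merely stands in for whatever archive format was actually used.

```python
import json

# Hypothetical catalogue of predefined processes (step skeletons).
PREDEFINED = {
    "EXTRACT": "//EXTRACT  EXEC PGM=DBEXTR",
    "SORT": "//SORT     EXEC PGM=SORT",
    "REPORT": "//REPORT   EXEC PGM=RPTGEN",
}


def build_job(choices: dict) -> str:
    """Build the job text (as it would go to SUBMiTLIB) purely from the choices."""
    header = f"//{choices['job']:<8} JOB CLASS=A,MSGCLASS=X"
    steps = [PREDEFINED[process] for process in choices["processes"]]
    return "\n".join([header, *steps])


def archive(choices: dict) -> str:
    """Serialize the choices; this text is what gets archived."""
    return json.dumps(choices, sort_keys=True)


def rebuild(archived: str) -> str:
    """Restore the choices and regenerate the identical job."""
    return build_job(json.loads(archived))


choices = {"job": "PAYRUN01", "processes": ["EXTRACT", "SORT", "REPORT"]}
print(build_job(choices))
```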

Modelling the JST process

The process model has several dimensions:

- job names

- job bodies, step procedure definitions, dataset io definitions

- environments: code, data, IO definitions

- simulated run date

The red arrows in the figure indicate the optional selections for:

- jobs - all the definitions needed to run them with predefined settings and values.

- test plans - the collection of jobs that together make up a test run as a unit.

In star-schema ER terms, the fact is the "job".
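A minimal Python sketch of those dimensions as dataclasses, with the job as the fact and a test plan as a collection of jobs. The class names, field names and sample values are hypothetical; they only mirror the dimensions listed above (job name, body, environment, simulated run date).

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Environment:
    """Dimension: where code, data and IO definitions come from."""
    stage: str        # D, T, A or P
    code_lib: str     # library holding the business programs
    data_prefix: str  # first qualifiers of the dataset names


@dataclass(frozen=True)
class JobFact:
    """Fact: one job, pointing at its dimensions."""
    job_name: str
    body: str                 # job body / step procedure definition
    environment: Environment
    run_date: date            # simulated business date


@dataclass
class TestPlan:
    """A collection of jobs that make up one test run."""
    name: str
    jobs: list[JobFact]

    def run_dates(self) -> set[date]:
        return {job.run_date for job in self.jobs}


test_env = Environment("T", "TST.LOADLIB", "TST.APPL")
plan = TestPlan("YEAREND", [
    JobFact("PAYRUN01", "PAYBODY", test_env, date(1999, 12, 31)),
    JobFact("PAYRUN02", "PAYBODY", test_env, date(2000, 1, 3)),
])
print(sorted(plan.run_dates()))
```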

Structure Automated JOB processing (continued)

Optimizing for mass processing

The ROI only turns positive when the tool is used intensively.

Imagine:

- A dedicated test department: one FTE running everything manually, five FTE handling requests and validations.

- Merging two companies (1998), the millennium change (2000), the euro conversion (2002) and some other major changes.

- Implementing a version/release tool (Endevor) and then rolling it out to the developers (25).

- Aligning releases with the end of the SDLC, also covering production scheduling.

This is what happened: the tool was used intensively from the 1990s until after 2015.

ISTQB JST-JCL relationship

All work on JST - JCL was done in accordance with the documentation found at ISTQB.

However, it was done years before the ISTQB organization existed.

Only the mainframe approach is documented here; it follows the design-concept chapters.

The approach can be applied with any tool in any environment.

Remarks - #smta

Used environment & Experience

The main issue was structuring JCL so it could be automated and reused.

The tool itself was built with TSO/ISPF using REXX.

All work related to JCL was automated with those commonly available tools.

Optimizing - Performance & Tuning

The TSO/ISPF REXX code is kept in a limited number of sources.

REXX is interpreted and has no optimized run-time environment. One large monolithic program avoids the DASD I/O that loading many separately stored sources would cause.

The code itself is still modular and object oriented, with a main program and many subroutines.

The reason is that DASD caching was not available, while the memory limit went up from 16 MB to 2 GB, leaving room for one large program.

The compile step from development to live can be seen as a concatenation of all those sources.
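A minimal Python sketch of that "compile" step, assuming the sources are available as lists of lines keyed by member name (the members `JSTMAIN` and `GREET` are hypothetical). It keeps the modular sources for development and produces one monolithic exec for live use; REXX internal subroutines are labels, so they only need to follow the main program's `exit`.

```python
def build_exec(main: str, members: dict[str, list[str]], order: list[str]) -> list[str]:
    """Concatenate the main program and its subroutine members into one
    monolithic REXX exec, so the interpreter reads a single source."""
    lines = list(members[main])  # the main member carries the /* REXX */ comment
    for name in order:
        if name == main:
            continue
        lines.append(f"/* ---- member {name} ---- */")
        lines.extend(members[name])
    return lines


members = {
    "JSTMAIN": ["/* REXX */", "call greet 'JST'", "exit 0"],
    "GREET": ["greet: procedure", "  parse arg who", "  say 'hello' who", "  return"],
}
print("\n".join(build_exec("JSTMAIN", members, ["JSTMAIN", "GREET"])))
```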

Used concepts & practical knowledge

Looking back, this was a nice project because it has so many connections to a diverse set of activities; it ties all those topics together.

Details can be found at:

👓 Software Life Cycle (business)

👓 Security

👓 Data modelling

👓 EIS Business Intelligence & Analytics

👓 Tools Life Cycle (infrastructure)

👓 Low code data driven work as design

The connection to security may not be that obvious.

Simulating production with test accounts that are used just like in operational production is good practice.

Setting up the overall security and configuration so that you can run a complete DTAP on a single box (iron), with everything containerized, is good practice.

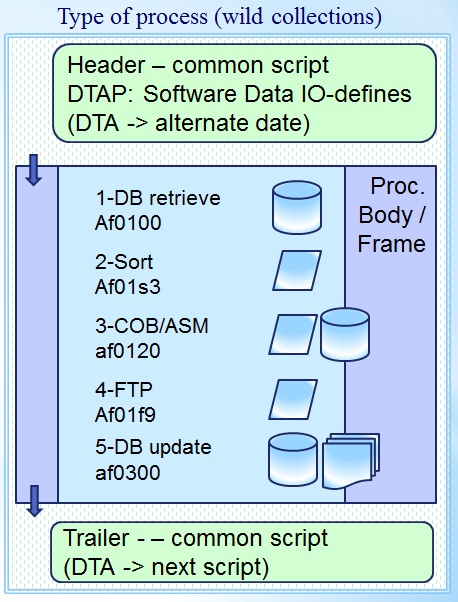

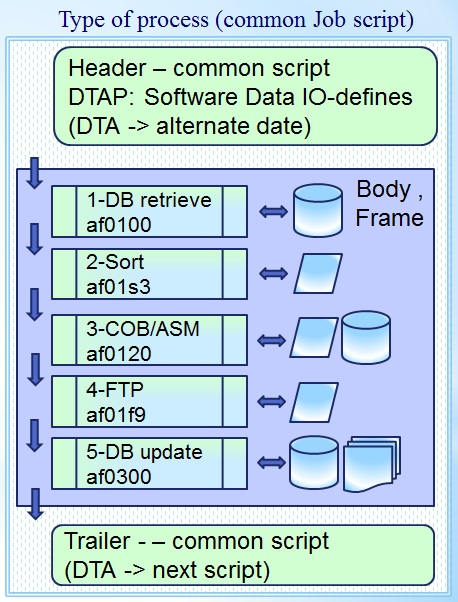

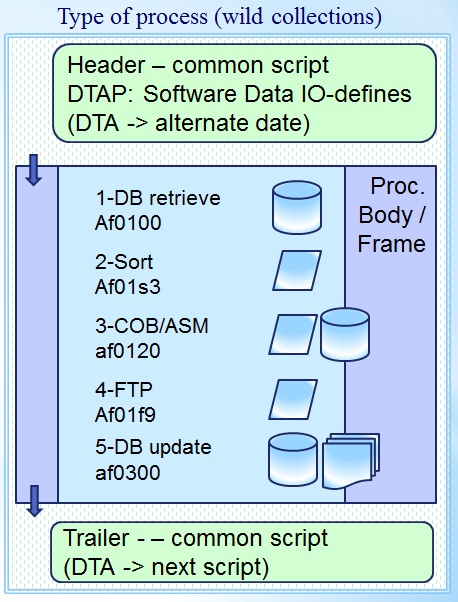

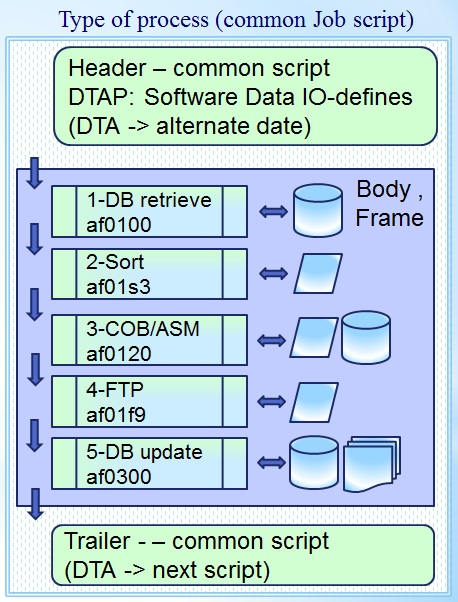

Standard JCL JOB procedures

Header

Defining all globals and job environment settings.

Bodies - Frames

Business logic software application programs.

Data Definitions

Input, output and report/log definitions for every step.

Tail

Defining the actions and jobs that follow this one.

Head:

- JOBLIB statement: where to look for the business software logic.

- JCLLIB statement: where to look for the data definitions and procedure bodies.

- Prefix setting: defines the first levels (qualifiers) of the data definitions. DTAP-dependent.

- Output destinations (external), set up fail-safe for security. DTAP-dependent.

- A dedicated alternate date for the business software logic.
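A hedged Python sketch of a generated head for two stages. The library names, job class, message classes and destinations are hypothetical, and the symbols `OUTDEST` and `RUNDATE` only illustrate where the external destination and the alternate date could be set; how the steps pick them up (for example through `DEST=` or a `PARM`) is site-specific. JOBLIB is placed directly after the JOB statement, and JCLLIB and SET come before the first EXEC.

```python
# Hypothetical per-stage settings; library names and classes are site-specific.
STAGES = {
    "T": {"prefix": "TST", "msgclass": "X", "dest": "LOCAL"},
    "P": {"prefix": "PRD", "msgclass": "A", "dest": "PRINTER1"},
}


def job_head(job: str, stage: str, run_date: str) -> list[str]:
    """Render the job head: JOB, JOBLIB, JCLLIB and the global symbols."""
    s = STAGES[stage]
    p = s["prefix"]
    return [
        f"//{job:<8} JOB CLASS=A,MSGCLASS={s['msgclass']}",
        f"//JOBLIB   DD DSN={p}.LOADLIB,DISP=SHR",          # business programs
        f"//         JCLLIB ORDER=({p}.PROCLIB,{p}.DDLIB)",  # bodies, DD members
        f"//         SET PREFIX={p}",                        # first qualifier(s)
        f"//         SET OUTDEST={s['dest']}",               # external output
        f"//         SET RUNDATE={run_date}",                # alternate date
    ]


print("\n".join(job_head("PAYRUN01", "T", "19991231")))
```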

IO

You need some way to define the datasets, output, print, mail, databases and transfers to be used.

These definitions must not be hard-coded in the business logic code. Why not?

- Managing them in a release process would become impossible.

- Develop and acceptance testing with the same artifacts would become impossible.

Tail:

- For non-production (business data) usage: trigger the next process and the next actions.
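For the tail, one common mainframe technique for triggering the next job is to copy its JCL to the internal reader with IEBGENER. A sketch in Python under that assumption; the library `TST.SUBMIT` and the member `PAYRUN02` are hypothetical, and in production the step is left out so that scheduling stays with the scheduler.

```python
def job_tail(stage: str, next_member: str, submit_lib: str) -> list[str]:
    """Outside production, append a step that submits the next job through
    the internal reader; in production the scheduler decides what runs next."""
    if stage == "P":
        return []
    return [
        # COND=(4,LT) bypasses this step if any earlier step ended with RC > 4.
        "//SUBMIT   EXEC PGM=IEBGENER,COND=(4,LT)",
        "//SYSPRINT DD SYSOUT=*",
        "//SYSIN    DD DUMMY",
        f"//SYSUT1   DD DSN={submit_lib}({next_member}),DISP=SHR",
        "//SYSUT2   DD SYSOUT=(A,INTRDR)",
    ]


print("\n".join(job_tail("T", "PAYRUN02", "TST.SUBMIT")))
```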

Standard JCL JOB procedures (continued)

Bodies - Frames (unstructured):

- The number of steps can vary between one (1) and many (*).

- Every frame can be tailor-made to fulfil a dedicated function.

This works and stays manageable as long as there are not that many jobs to run.

The next improvement is structuring this: define steps that follow the business logic, using standard building patterns.

Supported by the functional and technical owners of the infrastructure middleware components.

Common body / frame

Used by business functional owners.

Common proc bodies

Technical steps you can reuse very often.

These are a limited number of JCL procedure steps, such as:

- run: database retrieve program

- run: program name 📚, using IO definitions 📚

- sort: using code 📚, using IO definitions 📚

- sas: using code 📚, using IO definitions 📚

- file transfer: ftp-name 📚, using IO definitions 📚

- run: database update program

Other procedures needed are:

- backup/restore of the test environment

- dedicated DBMS versions

- saving the results of processing

- comparing & analysing results

- generating / defining the structured steps

- etc.
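The common proc bodies can be sketched as small Python template functions that render a step from a program or code member plus its IO definitions. This assumes a `PREFIX` symbol is set in the job head, so `&PREFIX..` resolves to the stage-dependent qualifiers; program names, members and dataset suffixes are hypothetical, and `DISP=OLD` on output assumes the datasets already exist.

```python
def run_step(step: str, program: str, io: dict[str, tuple[str, str]]) -> list[str]:
    """Common 'run: program' body; io maps DD name -> (dataset suffix, DISP)."""
    lines = [f"//{step:<8} EXEC PGM={program}"]
    for dd, (suffix, disp) in io.items():
        # &PREFIX..suffix resolves to <prefix>.<suffix> at run time.
        lines.append(f"//{dd:<8} DD DSN=&PREFIX..{suffix},DISP={disp}")
    return lines


def sort_step(step: str, code_member: str, sortin: str, sortout: str) -> list[str]:
    """Common 'sort: using code' body; control statements come from a member."""
    return [
        f"//{step:<8} EXEC PGM=SORT",
        "//SYSOUT   DD SYSOUT=*",
        f"//SORTIN   DD DSN=&PREFIX..{sortin},DISP=SHR",
        f"//SORTOUT  DD DSN=&PREFIX..{sortout},DISP=OLD",
        f"//SYSIN    DD DSN=&PREFIX..SORTCODE({code_member}),DISP=SHR",
    ]


steps = run_step("STEP01", "DBEXTR", {"OUTFILE": ("EXTRACT.OUT", "OLD")})
steps += sort_step("STEP02", "BYKEY", "EXTRACT.OUT", "SORTED.OUT")
print("\n".join(steps))
```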

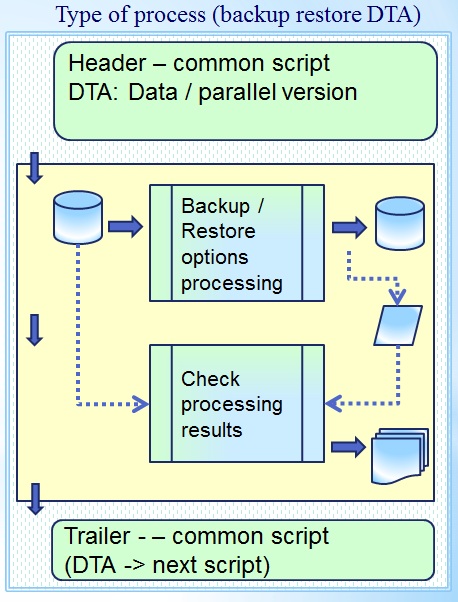

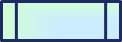

DTA support procedures

Acceptance testing comes in more variations, as can be seen in the SDLC reference.

Activities can originate in project planning, for example the education of people.

Within operations, business continuity requirements can also get a solution.

Within the A stage, the business data can differ depending on its usage goal:

- PSP Acceptance: of the business software

- PSL Education: acceptance test of people learning the software

- PSR Pre-production run: limited run: ... using data definitions: ...

- PST Technical test: new infrastructure components

- PSQ Acceptance of data quality (not of the logic that created the data)

Backup Restore script D, T, A,

Backup and restore are essential for the D, T and A (develop, test, acceptance) environments. The results in the test environments are important business assets.

They provide evidence of quality for audits.

Infra-Process

Used by Testers.

To allow many testers to work in parallel, segregation in naming and environment must be possible. It must also be possible to verify the content of backups.

Supported by the functional and technical owners of the infrastructure components.
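Segregation by naming also makes backups easy to scope and to verify. A minimal Python sketch, assuming datasets can be read as bytes keyed by name and that each tester owns a hypothetical prefix such as `TST.TESTA`: a SHA-256 digest per dataset is recorded at backup time and compared after a restore. It illustrates only the verification idea; the real backups relied on the standard backup utilities.

```python
import hashlib


def select_for_tester(datasets: dict[str, bytes], prefix: str) -> dict[str, bytes]:
    """Segregation by naming: a tester's backup covers only datasets under their prefix."""
    return {name: data for name, data in datasets.items() if name.startswith(prefix + ".")}


def manifest(datasets: dict[str, bytes]) -> dict[str, str]:
    """Record a SHA-256 digest per dataset at backup time."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in datasets.items()}


def verify_restore(expected: dict[str, str], restored: dict[str, bytes]) -> list[str]:
    """Return the names of datasets that are missing or differ after a restore."""
    actual = manifest(restored)
    return sorted(name for name in expected if actual.get(name) != expected[name])


system = {"TST.TESTA.IN": b"input", "TST.TESTA.OUT": b"result", "TST.TESTB.IN": b"other"}
backup = select_for_tester(system, "TST.TESTA")
saved = manifest(backup)
print(verify_restore(saved, backup))  # nothing missing or changed
```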

Backup & Restore, Performance experiences

The backup and restore of a complete test plan used the standard backup & restore utilities.

These are optimized for that work: in a first stage they collect and compress everything.

In the next stage they write the result, optimized for a tape device doing sequential IO.

When we were forced not to use those tools, we found a big performance penalty, because lock queuing on shared DASD is much slower.

That is not what is usually told, as DASD is promoted as being faster.

DTA support procedures (continued)

Interactive Transactional Systems

Besides batch processing, interactive (transactional) systems are also part of the business information system.

Mainframe workloads: Batch and online transaction processing

Most workloads fall into one of two categories: Batch processing or online transaction processing, which includes Web-based applications.

Integrated Data Dictionary (IDD IDMS Cullinet)

CA IDMS Reference - Software Management

Promotion Management is the process wherein entities are moved from environment to environment within the software development life cycle.

When these environments consist of multiple dictionaries, application development typically involves staged promotions of entities from one dictionary to another, such as Test to Quality Assurance to Production.

This movement can be in any direction, from a variety of sources.

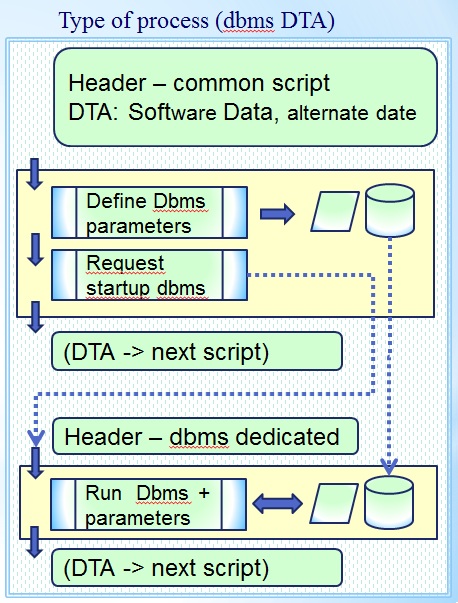

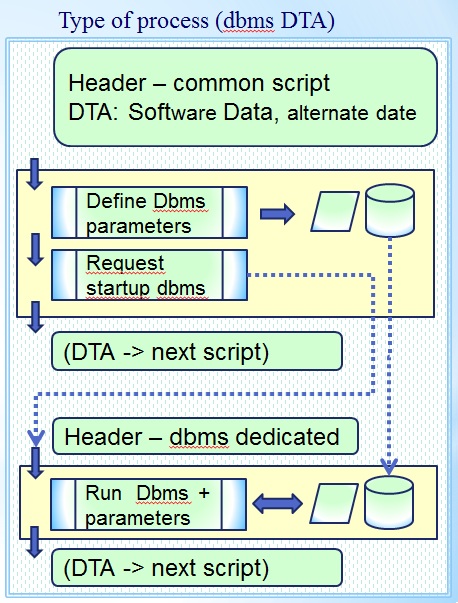

Dedicated DBMS script D, T, A,

This feature is essential for D, T and A test environments when transactional systems are involved.

The difference from the backup/restore support is that this implementation can be much more challenging, when the complexity of the DBMS startup support requires it.

Infra-Process transactional.

Used by Testers.

Supported by the functional and technical owners of the infrastructure components.

Evaluating

Missing for deploying JST as a service (JST-AAS)

⚠ Naming Conventions

- A well-defined set of names, associated with their types and meaning (metadata), makes a technical realization easy.

- As those naming conventions aren't generic, porting the JST solution to another environment is almost impossible.
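A well-defined naming convention is what makes names machine-readable. A Python sketch that validates a dataset name against the z/OS qualifier rules (1 to 8 characters per qualifier, first character alphabetic or national, at most 44 characters in total) and then maps it to metadata under a hypothetical `<stage>.<application>.<type>.<name>` convention. The convention and the stage codes are assumptions, and that is exactly the part that does not port between sites.

```python
import re

# z/OS qualifier rule: 1-8 characters, first alphabetic or national (@ # $),
# the rest alphanumeric, national or hyphen.
QUALIFIER = re.compile(r"[A-Z@#$][A-Z0-9@#$-]{0,7}")
# Hypothetical site convention: <stage>.<application>.<type>.<name>
STAGE_CODES = {"DEV": "D", "TST": "T", "ACC": "A", "PRD": "P"}


def parse_dsn(dsn: str) -> dict[str, str]:
    """Turn a dataset name into metadata, or raise if it breaks the convention."""
    if len(dsn) > 44:
        raise ValueError(f"{dsn} is longer than 44 characters")
    parts = dsn.split(".")
    if not all(QUALIFIER.fullmatch(part) for part in parts):
        raise ValueError(f"{dsn} contains an invalid qualifier")
    if len(parts) != 4 or parts[0] not in STAGE_CODES:
        raise ValueError(f"{dsn} does not follow the naming convention")
    stage, application, kind, name = parts
    return {"stage": STAGE_CODES[stage], "application": application, "type": kind, "name": name}


print(parse_dsn("TST.PAY.DATA.INPUT"))
```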

⚠ Life Cycle Management

- Every environment has its own interpretation of life cycle management for infrastructure, tools and business applications.

- Any testing tool would have to accommodate all those differences across Develop, Test, Accept and Production. Porting any solution is almost mission impossible.

⚠ Security (business: data / code)

- As business data and business logic are the real goal of all this, a lot of security-related issues come in.

Some of those questions are related to the data: pseudonymized, anonymized, minimized, but still consistent with the production environment.

- Other questions are business-related: whether the logic itself is so sensitive that access to it must be constrained.

Because of those non-standard security approaches, porting a solution to another environment is almost impossible.
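For the data side, one technique (swapped in here as an illustration, not necessarily what was used) is keyed pseudonymization plus minimization. The Python sketch keeps only the needed fields and replaces an identifier with an HMAC-SHA-256-derived number of the same width, so the same customer number maps to the same pseudonym in every file. The key and the record are hypothetical; note that this is pseudonymization, not anonymization, as anyone holding the key can re-link the values.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, width: int = 10) -> str:
    """Keyed, deterministic pseudonym with the same shape as a numeric field:
    equal inputs give equal outputs, so joins between files still work."""
    digest = hmac.new(key, value.encode(), hashlib.sha256).digest()
    return str(int.from_bytes(digest, "big") % 10**width).zfill(width)


def minimize(record: dict[str, str], needed: set[str]) -> dict[str, str]:
    """Keep only the fields a test actually needs."""
    return {field: value for field, value in record.items() if field in needed}


KEY = b"test-only-key"  # hypothetical; a real key belongs in a protected store
customer = {"number": "0123456789", "name": "J. Jansen", "balance": "100.00"}
test_row = minimize(customer, {"number", "balance"})
test_row["number"] = pseudonymize(test_row["number"], KEY)
print(test_row)
```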

Interactive Transactional Systems - Integrated Data Dictionary

These experiences were essential in building up my thinking. The IDD concept is hot in data governance; the new word is "metadata".

The same concepts and approaches can be used with all those new tools.

With multiple IDDs needed for the required DTAP segregation, the following were used:

- (no stage) system no 11: used as the master defining the other systems.

- D system no 66: Develop & module tests.

- T system no 88: integration Testing, education.

- A system no 77: Acceptance testing.

- P system no 01: real Production usage.
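The staged promotion described in the CA IDMS reference can be sketched in Python using the system numbers listed above. The sketch assumes promotion moves one stage at a time along D, T, A, P, in either direction; the function name is hypothetical, and it says nothing about how IDMS itself performs the move.

```python
# IDD system numbers as listed above; "master" defines the other systems.
IDD_SYSTEMS = {"master": 11, "D": 66, "T": 88, "A": 77, "P": 1}
STAGED_PATH = ["D", "T", "A", "P"]


def promotion_moves(source: str, target: str) -> list[tuple[int, int]]:
    """List the dictionary-to-dictionary moves for a staged promotion.
    Movement may go in either direction, one stage at a time."""
    i, j = STAGED_PATH.index(source), STAGED_PATH.index(target)
    step = 1 if j >= i else -1
    stages = [STAGED_PATH[k] for k in range(i, j + step, step)]
    return [(IDD_SYSTEMS[a], IDD_SYSTEMS[b]) for a, b in zip(stages, stages[1:])]


print(promotion_moves("D", "P"))
```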

Evaluating (continued)

Words of thanks to my former colleagues

We had a lot of fun in stressful times working this out on the mainframe in the nineties, while realising how unique it was and how difficult it would be to port it somewhere else.

A few names:

- 🙏 J.Pak co-designer of the common job approach.

- 🙏 D.Roekel inventing more proc-bodies faster than me.

- 🙏 J.Hofman, P.Grobbee working it out smoothly for the testers

Many more people deserve to be mentioned; sorry if I didn't. (notes: 2008)

Not always unhappy on what is going on

Sometimes you get the feeling there is still some way to go and you have not reached the real destination yet.

© 2012,2019 J.A.Karman
